The Unstructured Data Breakthrough: From Noise to Gold

Introduction

Imagine walking into the Library of Alexandria, but instead of organized scrolls and manuscripts, you find 80% of the knowledge scattered as random notes, sketches, whispered conversations, and fragments of ideas. That’s essentially what most enterprise data looks like today—a vast treasure trove where the majority of valuable insights are trapped in unstructured formats that traditional systems can’t understand.

If 2024 was about recognizing this hidden potential, 2025 is the year we finally unlock it. We’re witnessing a fundamental transformation in how organizations process the emails, documents, images, videos, sensor readings, and social media posts that make up the majority of their data universe. What was once considered “data exhaust”—the byproduct of digital operations—is becoming the primary source of competitive advantage.

The numbers tell a compelling story: the global datasphere will reach over 180 zettabytes by 2025, with real-time unstructured data volume surging from 25 to 51 zettabytes in just two years. But more importantly, we now have the technological breakthroughs to turn this data deluge from an overwhelming challenge into unprecedented opportunity.

The Great Data Awakening

Understanding the 80/20 Reality

For decades, data engineering has been optimized for the 20% of enterprise data that fits neatly into rows and columns. Customer databases, financial transactions, inventory systems—these structured datasets were the foundation of business intelligence and analytics. They were predictable, queryable, and manageable using established relational database technologies.

But this focus on structured data created a massive blind spot. The other 80% of enterprise data—emails discussing strategy, customer service call recordings, product images, social media mentions, IoT sensor streams, and PDF documents—remained largely untapped. Organizations knew this data existed, but lacked the tools to extract meaningful insights from it at scale.

The Traditional Approach:

- Extract: Copy unstructured data to specialized systems

- Transform: Convert to structured formats (often losing nuance)

- Load: Store in data warehouses optimized for structured analysis

- Analyze: Apply traditional BI tools to simplified representations

This process was expensive, time-consuming, and often destroyed the very context that made unstructured data valuable in the first place.
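To make the loss of context concrete, here is a minimal Python sketch of a lossy Transform step. The review text, the keyword list, and the `flatten_review` helper are all hypothetical and exist only for illustration.

```python
# Hypothetical illustration of a lossy "Transform" step: free text is
# reduced to a single structured column and everything else is discarded.

NEGATIVE_WORDS = {"broken", "late", "refund", "disappointed"}  # assumed keyword list


def flatten_review(text: str) -> dict:
    """Reduce a free-text review to one row with a coarse sentiment flag."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return {"is_negative": bool(words & NEGATIVE_WORDS)}


review = (
    "The blender arrived late, but support shipped a replacement "
    "overnight and I would buy from them again."
)

row = flatten_review(review)
print(row)  # the warehouse row keeps only the flag, not the recovery story
```

The row marks the review as negative because of the word "late", while the part that matters to the business (the customer was won back) never reaches the warehouse.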

The Awakening Moment

The breakthrough came from the convergence of three technological revolutions:

- Advanced AI Models: Large language models and computer vision systems that can understand context, sentiment, and meaning in unstructured data

- Scalable Storage Architectures: Open table formats like Apache Iceberg and Delta Lake that enable efficient querying of massive datasets where they are stored, without first copying them into a separate warehouse

- Edge Processing Capabilities: Distributed computing frameworks that bring intelligence to where data is generated

This convergence created a new possibility: analyzing unstructured data in place, at scale, without losing the rich context that makes it valuable.

The Technology Stack Revolution

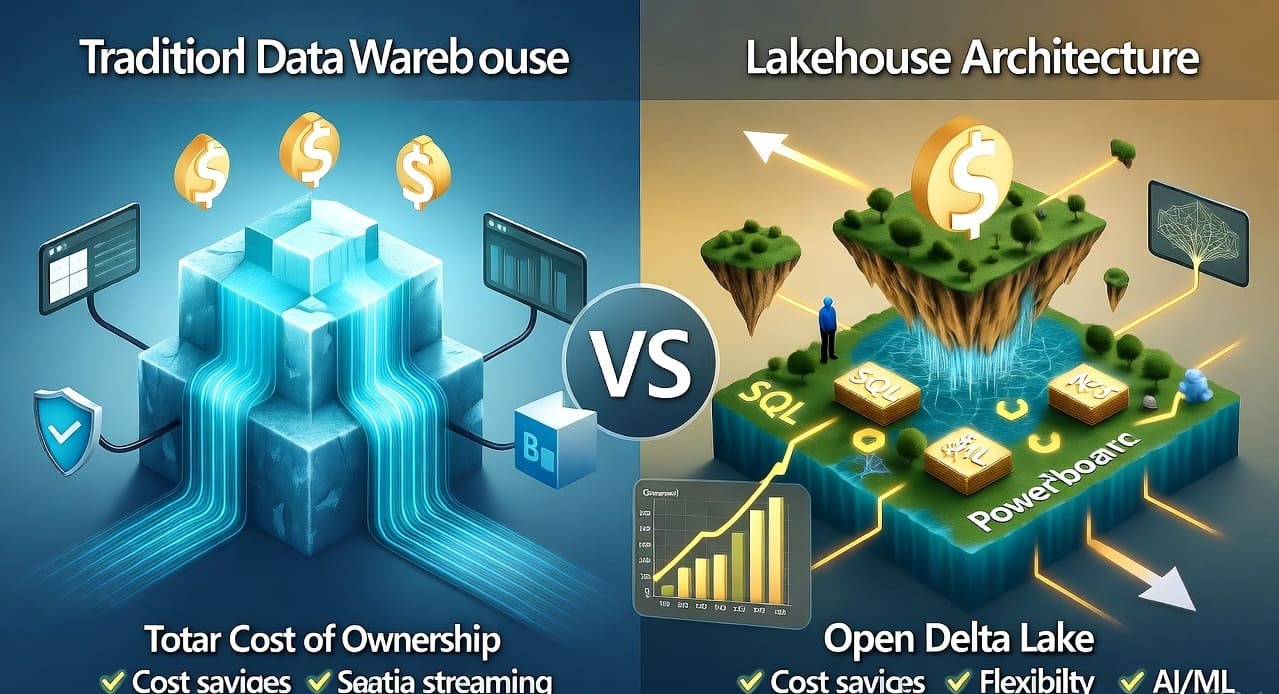

LakeDB: The New Data Architecture

Traditional data architectures forced organizations to choose between the flexibility of data lakes and the performance of data warehouses. LakeDB architectures eliminate this trade-off by combining:

Data Lake Flexibility:

- Store any type of data in its native format

- Accommodate rapid schema evolution

- Handle both structured and unstructured data seamlessly

Data Warehouse Performance:

- Enable fast, complex queries across massive datasets

- Support concurrent user access

- Provide reliable transactional consistency

Operational Database Capabilities:

- Handle real-time data ingestion and updates

- Support both analytical and transactional workloads

- Enable immediate consistency for time-sensitive operations

Real-World Example: A global retail company uses LakeDB architecture to analyze:

- Structured data: Sales transactions, inventory levels, customer demographics

- Semi-structured data: Website clickstreams, mobile app interactions, API logs

- Unstructured data: Customer reviews, social media mentions, product images, store security footage

All of this data coexists in the same system, enabling queries like “Show me correlation between negative sentiment in social media, specific product images, and subsequent return rates by geographic region.” This type of cross-format analysis was impossible with traditional architectures.
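As a rough sketch of what such a cross-format query computes, the Python below lines up review sentiment with return rates by region. The records, field names, and helper functions are hypothetical, and the sentiment labels are assumed to come from an upstream model. A real system would express this as a query over tables rather than in-memory lists.

```python
from collections import defaultdict

# Hypothetical records; sentiment labels are assumed to come from a model.
reviews = [
    {"region": "EMEA", "sentiment": "negative"},
    {"region": "EMEA", "sentiment": "negative"},
    {"region": "EMEA", "sentiment": "positive"},
    {"region": "APAC", "sentiment": "positive"},
    {"region": "APAC", "sentiment": "negative"},
    {"region": "APAC", "sentiment": "positive"},
    {"region": "APAC", "sentiment": "positive"},
]
sales = [
    {"region": "EMEA", "units_sold": 200, "units_returned": 30},
    {"region": "APAC", "units_sold": 400, "units_returned": 20},
]


def negative_share_by_region(rows):
    """Fraction of reviews per region that are labeled negative."""
    totals, negatives = defaultdict(int), defaultdict(int)
    for r in rows:
        totals[r["region"]] += 1
        if r["sentiment"] == "negative":
            negatives[r["region"]] += 1
    return {region: negatives[region] / totals[region] for region in totals}


def return_rate_by_region(rows):
    """Returned units divided by sold units, per region."""
    return {r["region"]: r["units_returned"] / r["units_sold"] for r in rows}


neg = negative_share_by_region(reviews)
ret = return_rate_by_region(sales)

# Join the two views on region: (negative share, return rate).
report = {
    region: (round(neg[region], 2), round(ret[region], 2))
    for region in sorted(neg)
}
```

With only two regions this shows the join, not a statistically meaningful correlation. The point is that the unstructured signal and the structured metric end up keyed on the same dimension.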

Apache Iceberg and Delta Lake: The Format Evolution

These open table formats represent a fundamental shift in how we store and query large datasets:

Apache Iceberg Advantages:

- Schema Evolution: Add, rename, or reorder columns without rewriting data

- Time Travel: Query data as it existed at any earlier snapshot that is still retained

- Partition Evolution: Change partitioning schemes without data migration

- Metadata Management: Efficient handling of table metadata for massive datasets
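Time travel works because each commit records a new immutable snapshot listing the data files visible at that point. The toy model below sketches that idea in plain Python. `ToyTable` and `Snapshot` are hypothetical names, and this is not the Iceberg API or its actual metadata layout.

```python
from dataclasses import dataclass, field


@dataclass
class Snapshot:
    snapshot_id: int
    files: tuple  # data-file names visible in this snapshot (immutable)


@dataclass
class ToyTable:
    """Toy snapshot log; not the Iceberg API or its metadata layout."""
    snapshots: list = field(default_factory=list)

    def append(self, new_files):
        """Commit new files by adding a snapshot; older snapshots are untouched."""
        current = self.snapshots[-1].files if self.snapshots else ()
        snap = Snapshot(len(self.snapshots) + 1, current + tuple(new_files))
        self.snapshots.append(snap)
        return snap.snapshot_id

    def files_as_of(self, snapshot_id):
        """Return the files a reader would scan at the given snapshot."""
        for snap in self.snapshots:
            if snap.snapshot_id == snapshot_id:
                return snap.files
        raise KeyError(f"snapshot {snapshot_id} not retained")


table = ToyTable()
first = table.append(["reviews-0001.parquet"])
second = table.append(["reviews-0002.parquet"])

old_view = table.files_as_of(first)    # only the first file
new_view = table.files_as_of(second)   # both files
```

Because a snapshot only references files and never rewrites them, reading an older version costs no more than reading the current one, as long as that snapshot has not been expired.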

Delta Lake Benefits:

- ACID Transactions: Reliable writes and consistent reads for analytical workloads

- Unified Batch and Streaming: Handle both real-time and historical data seamlessly

- Data Quality Enforcement: Schema validation and constraint checking

- Audit History: Complete log of all changes to datasets

The Performance Impact: Organizations report 10-100x performance improvements when migrating from traditional formats to these advanced table formats, particularly for queries that span both structured and unstructured data.

Domain-Specific AI: The Intelligence Layer

Beyond General-Purpose Models

While general-purpose AI models like GPT-4 can handle many tasks, the real breakthrough in unstructured data processing comes from domain-specific models trained on specialized datasets.

Healthcare AI Models:

- Trained on medical literature, clinical notes, and diagnostic images

- Understand medical terminology, drug interactions, and clinical contexts

- Can extract insights from patient records, research papers, and diagnostic reports

- Enable personalized treatment recommendations based on comprehensive patient data

Financial Services Models:

- Trained on regulatory documents, market reports, and financial statements

- Understand complex financial relationships and risk factors

- Can analyze earnings calls, regulatory filings, and market sentiment

- Enable real-time risk assessment and compliance monitoring

Legal AI Models:

- Trained on case law, contracts, and legal documents

- Understand legal precedents and regulatory requirements

- Can analyze contracts, legal briefs, and compliance documents

- Enable automated contract review and legal research

Manufacturing Models:

- Trained on technical specifications, maintenance logs, and sensor data

- Understand equipment behavior and failure patterns

- Can analyze maintenance reports, sensor readings, and quality control data

- Enable predictive maintenance and quality optimization

The Accuracy Revolution

Domain-specific models deliver dramatically improved accuracy compared to general-purpose alternatives:

- Medical Diagnosis: 95% accuracy in interpreting radiology images vs. 78% for general models

- Financial Risk Assessment: 40% reduction in false positives for fraud detection

- Legal Document Analysis: 90% accuracy in contract clause identification vs. 65% for general models

- Manufacturing Quality Control: 85% accuracy in defect detection vs. 60% for general models
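A reduction in false positives is easier to interpret as a change in alert precision. The short calculation below works through one case. The alert counts are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of raised alerts that were real fraud."""
    return true_positives / (true_positives + false_positives)


# Hypothetical monthly alert counts, not figures from any study.
true_positives = 100
false_positives_before = 500
false_positives_after = round(false_positives_before * (1 - 0.40))  # 40% fewer

before = precision(true_positives, false_positives_before)
after = precision(true_positives, false_positives_after)

print(f"precision before: {before:.1%}, after: {after:.1%}")
```

Under these assumed counts, analysts review 400 alerts instead of 600 to find the same 100 real cases, which is where the operational saving comes from.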

Real-World Transformation Stories

Case Study 1: Global Healthcare Network

Challenge: A multinational healthcare organization had decades of patient records, research notes, diagnostic images, and clinical trial data stored in various formats across different systems. Critical insights were trapped in unstructured clinical notes and research documents.

Traditional Approach Problems:

- Manual review of clinical notes took weeks per patient

- Research insights were siloed in individual documents

- Patterns across patient populations were invisible

- Regulatory compliance required manual document review

Unstructured Data Solution:

- Deployed healthcare-specific language models to analyze clinical notes

- Implemented computer vision systems for diagnostic image analysis

- Used LakeDB architecture to query across all data types simultaneously

- Enabled real-time pattern detection across patient populations

Results:

- 90% reduction in time to identify relevant patient cohorts for clinical trials

- $50M annual savings from improved diagnostic accuracy and reduced redundant testing

- 300% faster research publication cycle through automated literature analysis

- Zero compliance violations through automated regulatory document monitoring

Case Study 2: Investment Management Firm

Challenge: A major investment firm needed to analyze market sentiment, regulatory changes, earnings calls, social media discussions, and economic reports to inform investment decisions. 99% of this data was unstructured.

Traditional Limitations:

- Analyst teams could only review a fraction of available information

- Market sentiment analysis was subjective and inconsistent

- Regulatory changes were identified weeks after publication

- Investment decisions relied on incomplete information

Breakthrough Implementation:

- Financial domain models analyze earnings calls, SEC filings, and market reports

- Sentiment analysis across social media, news, and analyst reports

- Real-time regulatory change detection and impact assessment

- Multi-modal analysis combining text, audio, and numerical data

Impact:

- 15% improvement in portfolio performance through better-informed decisions

- Real-time alerts for regulatory changes affecting portfolio companies

- 80% reduction in research time through automated analysis

- $200M additional AUM attracted through demonstrated performance improvements

Case Study 3: Manufacturing Giant

Challenge: A global manufacturer generates terabytes of unstructured data daily from sensor readings, maintenance logs, quality control reports, and supply chain communications. Equipment failures cost millions in downtime.

Previous State:

- Maintenance that was either reactive or tied to fixed schedule intervals

- Quality issues discovered after production

- Supply chain disruptions identified after they occur

- Knowledge trapped in individual technician reports

Transformation Approach:

- Manufacturing-specific AI models analyze sensor patterns and maintenance logs

- Computer vision systems inspect product quality in real-time

- Natural language processing extracts insights from technician reports

- Predictive models identify potential failures before they occur
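As a much simpler stand-in for the predictive models described above, the sketch below flags sensor readings that deviate sharply from a trailing window using a rolling z-score. The readings, window size, and threshold are hypothetical. A production system would use models trained on historical failure data.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_alerts(readings, window=5, threshold=3.0):
    """Return (index, value) pairs for readings far from the trailing-window mean."""
    history = deque(maxlen=window)
    alerts = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip flat windows to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                alerts.append((i, value))
        history.append(value)
    return alerts


# Hypothetical vibration readings (mm/s) with a single spike.
vibration = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 1.9, 6.5, 2.0, 2.1]
alerts = rolling_zscore_alerts(vibration)
```

Note that the spike then inflates the window's standard deviation for the next few readings, which is one reason real deployments prefer more robust statistics or learned models.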

Outcomes:

- 60% reduction in unplanned downtime through predictive maintenance

- 95% improvement in quality control through automated inspection

- $100M annual savings from optimized maintenance scheduling

- 30% faster problem resolution through automated knowledge extraction

The Technical Architecture Deep Dive

Modern Unstructured Data Pipeline

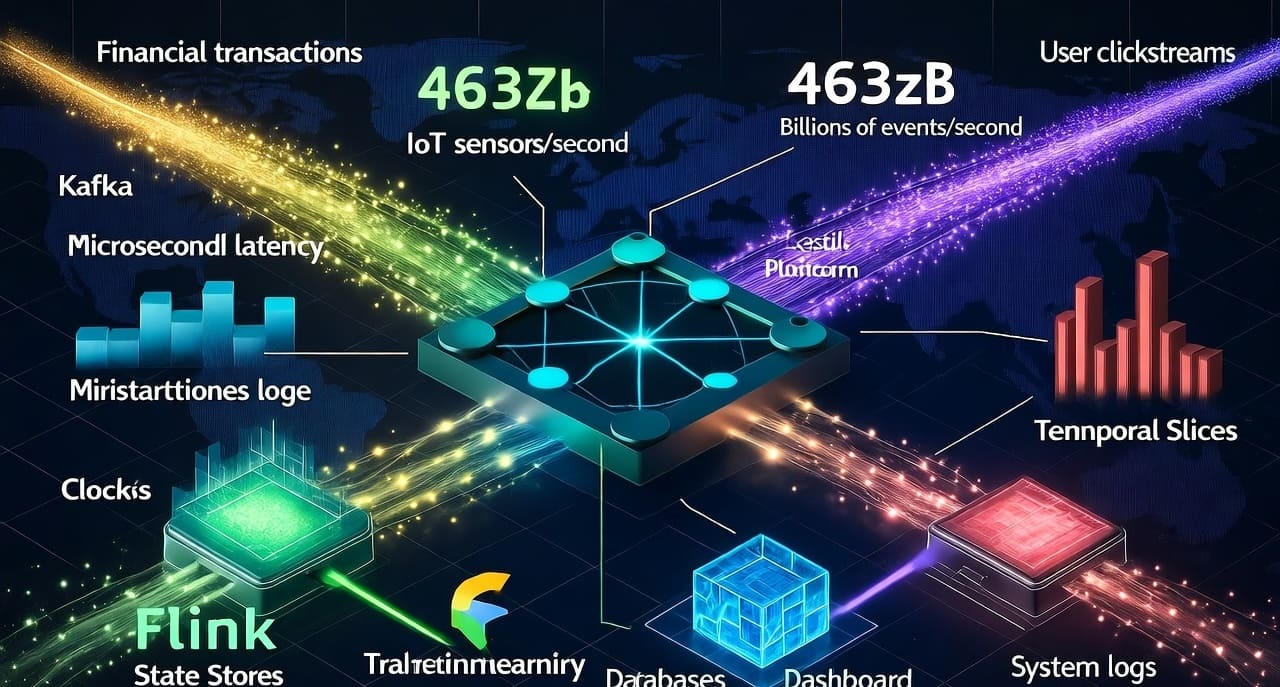

1. Ingestion Layer

- Real-time Streaming: Apache Kafka and Pulsar handle high-volume data streams

- Batch Processing: Apache Spark and Flink process large historical datasets

- Edge Collection: IoT gateways and edge devices collect data at the source

- API Integration: RESTful and GraphQL APIs connect to external data sources

2. Storage Layer

- Object Storage: AWS S3, Azure Blob, Google Cloud Storage for massive scale

- Table Formats: Apache Iceberg and Delta Lake for queryable datasets

- Search Indexes: Elasticsearch and Solr for full-text search capabilities

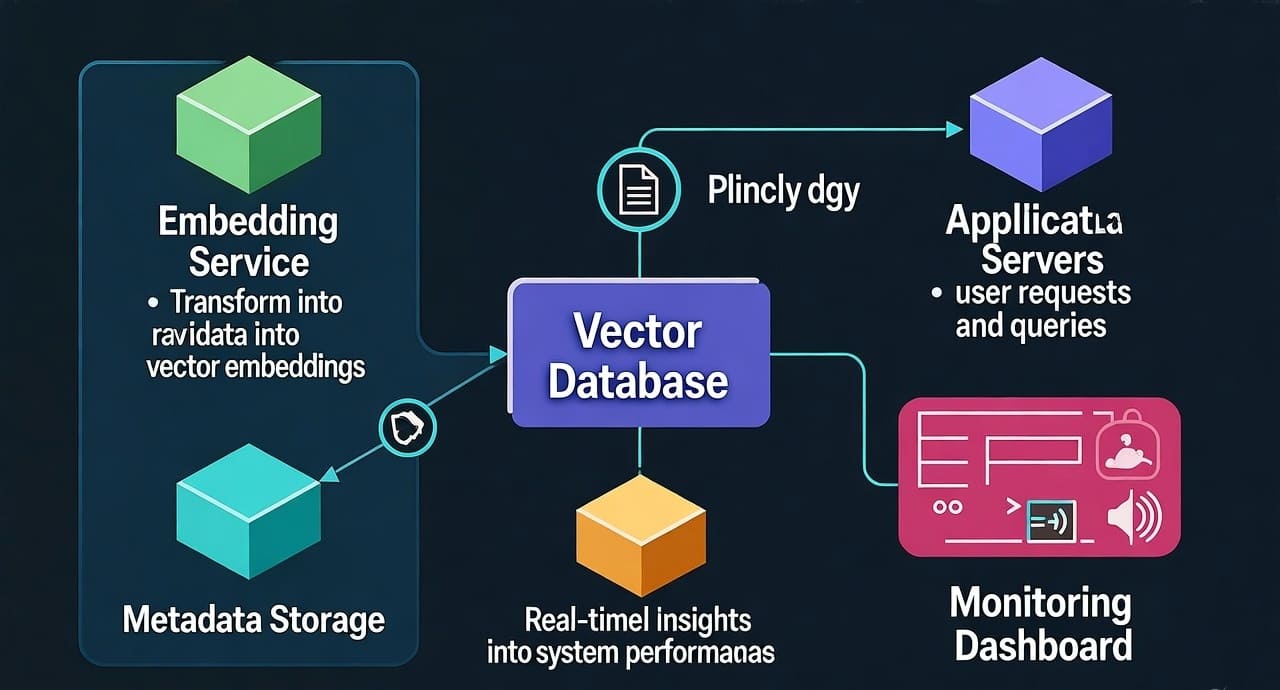

- Vector Databases: Pinecone and Weaviate for AI-generated embeddings

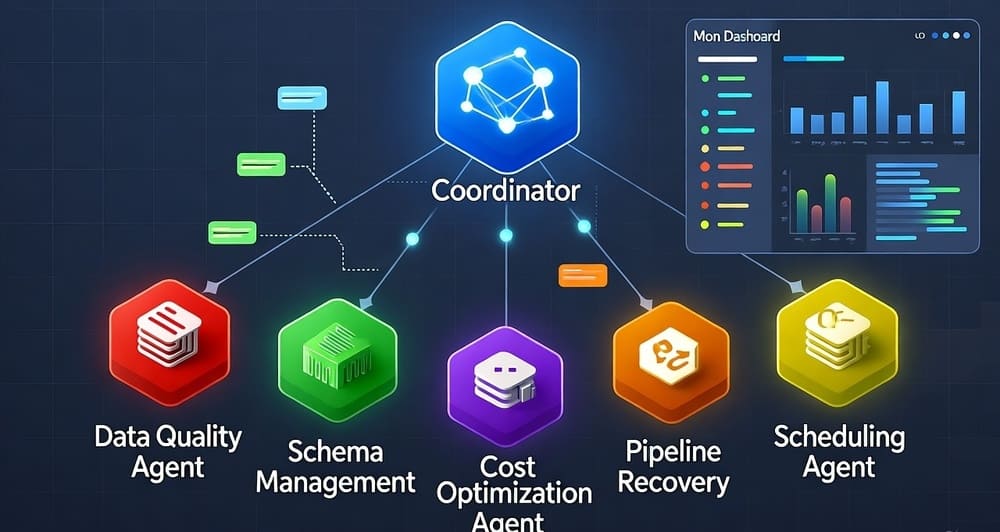
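The core operation behind a vector database is nearest-neighbor search over embeddings. The sketch below does this by brute force with cosine similarity. The document IDs and three-dimensional vectors are hypothetical. Real embeddings have hundreds of dimensions, and production systems typically use approximate indexes rather than scanning every vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k document IDs whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)[:k]]


# Hypothetical embeddings for three support tickets.
index = {
    "ticket-refund": [0.9, 0.1, 0.0],
    "ticket-shipping": [0.1, 0.9, 0.1],
    "ticket-chargeback": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # assumed embedding of a payment-related question

results = top_k(query, index)
```

The two payment-related tickets rank first even though they share no keywords with each other, which is the behavior full-text search indexes cannot provide on their own.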

3. Processing Layer

- AI/ML Frameworks: PyTorch, TensorFlow, and Hugging Face Transformers for model training and inference

- Query Engines: Apache Spark, Presto, and Trino for distributed queries

- Workflow Orchestration: Apache Airflow and Prefect for pipeline management

- Feature Stores: Feast and Tecton for ML feature management

4. Intelligence Layer

- Domain-Specific Models: Specialized AI models for different industries

- Multi-Modal Processing: Combined text, image, audio, and sensor analysis

- Real-Time Inference: Low-latency model serving for immediate insights

- Continuous Learning: Models that improve based on new data patterns

5. Application Layer

- Analytics Dashboards: Interactive visualizations of unstructured insights

- Alert Systems: Real-time notifications based on pattern detection

- API Services: Programmatic access to processed insights

- Decision Support: AI-powered recommendations for business users
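The five layers can be read as a chain of functions, each consuming the previous layer's output. The sketch below is a deliberately small illustration of that flow. Every function name, the in-memory `storage` dictionary, and the keyword-based `infer` step are hypothetical stand-ins for the real components listed above.

```python
def ingest(raw_items):
    """Ingestion layer: wrap raw payloads with an id."""
    return [{"id": i, "raw": text} for i, text in enumerate(raw_items)]


def store(records, storage):
    """Storage layer: persist raw payloads unchanged, keyed by id."""
    for r in records:
        storage[r["id"]] = r["raw"]
    return records


def process(records):
    """Processing layer: derive simple features (here, lowercase tokens)."""
    return [{**r, "tokens": r["raw"].lower().split()} for r in records]


def infer(records, urgent_terms=frozenset({"outage", "leak"})):
    """Intelligence layer: keyword stand-in for a domain-specific model."""
    return [{**r, "urgent": bool(urgent_terms & set(r["tokens"]))} for r in records]


def alerts(records):
    """Application layer: expose only what downstream users act on."""
    return [r["id"] for r in records if r["urgent"]]


storage = {}
raw = ["Coolant leak reported on line 4", "Routine inspection completed"]
urgent_ids = alerts(infer(process(store(ingest(raw), storage))))
```

Keeping the raw payload in storage while features and labels are derived downstream is what allows a better model to be applied later without re-collecting the data.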

Performance and Scale Considerations

Query Performance Optimization:

- Partition Pruning: Eliminate irrelevant data sections before processing

- Predicate Pushdown: Apply filters at the storage layer

- Columnar Storage: Optimize for analytical query patterns

- Caching Strategies: Intelligent caching of frequently accessed data
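Partition pruning and predicate pushdown both rely on metadata that lets the engine skip data without reading it. A minimal sketch, assuming each data file records the minimum and maximum event date it contains (the file paths and the `prune` helper are hypothetical):

```python
from datetime import date

# Hypothetical file-level statistics kept in table metadata.
files = [
    {"path": "events/2025-01.parquet", "min": date(2025, 1, 1), "max": date(2025, 1, 31)},
    {"path": "events/2025-02.parquet", "min": date(2025, 2, 1), "max": date(2025, 2, 28)},
    {"path": "events/2025-03.parquet", "min": date(2025, 3, 1), "max": date(2025, 3, 31)},
]


def prune(files, start, end):
    """Keep only files whose [min, max] range overlaps the query range."""
    return [f["path"] for f in files if f["max"] >= start and f["min"] <= end]


# Query: events between 10 February and 5 March 2025.
to_scan = prune(files, date(2025, 2, 10), date(2025, 3, 5))
```

The January file is excluded using metadata alone, so its bytes are never fetched from object storage. The remaining filter is then applied inside the two files that survive.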

Scalability Patterns:

- Horizontal Scaling: Distribute processing across multiple nodes

- Auto-scaling: Dynamic resource allocation based on workload

- Resource Isolation: Separate compute resources for different workload types

- Cost Optimization: Efficient resource utilization to minimize costs

The Business Value Revolution

From Cost to Revenue Center

Unstructured data processing is transforming from a technical challenge into a business advantage. Organizations that master these capabilities are seeing direct impact on their bottom line:

Revenue Generation:

- New Product Features: AI-powered features that differentiate products

- Data Monetization: Selling insights derived from unstructured data analysis

- Customer Intelligence: Personalization that drives higher conversion rates

- Market Advantage: Faster decision-making based on comprehensive information

Cost Reduction:

- Automated Processes: AI handling tasks that previously required human analysis

- Risk Mitigation: Early detection of problems before they become costly

- Operational Efficiency: Optimized operations based on comprehensive data analysis

- Compliance Automation: Reduced compliance costs through automated monitoring

Competitive Differentiation:

- Market Intelligence: Understanding customer sentiment and market trends

- Innovation Acceleration: Faster product development based on customer feedback

- Supply Chain Optimization: End-to-end visibility and optimization

- Customer Experience: Personalized experiences based on comprehensive customer understanding

ROI Metrics That Matter

Quantitative Measures:

- Time to Insight: How quickly organizations can extract actionable intelligence

- Data Utilization Rate: Percentage of available data actually used for decision-making

- Automation Rate: Percentage of previously manual processes now automated

- Accuracy Improvement: Enhancement in prediction and detection accuracy
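Most of these measures reduce to simple ratios or time differences once the inputs are defined. The sketch below shows one possible way to compute three of them. The figures and the `rate_pct` helper are hypothetical, and each organization will need its own definition of what counts as "used" or "automated".

```python
from datetime import datetime


def rate_pct(part, whole):
    """Express part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Hypothetical inputs for illustration only.
data_utilization_rate = rate_pct(part=12, whole=80)  # TB used in decisions / TB available
automation_rate = rate_pct(part=18, whole=45)        # automated / previously manual processes

# Time to insight: from data arrival to the first actionable report.
time_to_insight = datetime(2025, 3, 3, 14, 0) - datetime(2025, 3, 3, 9, 30)
```

Tracking the same definitions before and after a pilot is what turns these numbers into evidence of improvement.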

Business Impact Measures:

- Revenue per Customer: Increased through better personalization and recommendations

- Customer Satisfaction: Improved through better understanding of customer needs

- Operational Efficiency: Reduced costs and improved productivity

- Risk Reduction: Fewer surprises and better preparation for challenges

Overcoming Implementation Challenges

Technical Challenges and Solutions

Data Quality and Consistency:

- Challenge: Unstructured data often contains errors, duplicates, and inconsistencies

- Solution: Implement automated data quality checks and cleaning pipelines

- Best Practice: Use ML models to detect and correct common data quality issues
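One of the simplest automated quality checks is removing duplicates that differ only in case or whitespace. The sketch below does this by hashing a normalized form of each document. The sample documents and helper names are hypothetical, and near-duplicates with different wording would need a fuzzier technique than this.

```python
import hashlib
import re


def fingerprint(text):
    """Hash a normalized form of the text: collapsed whitespace, lowercase."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def deduplicate(documents):
    """Keep the first occurrence of each distinct fingerprint, in order."""
    seen = set()
    unique = []
    for doc in documents:
        key = fingerprint(doc)
        if key not in seen:
            seen.add(key)
            unique.append(doc)
    return unique


docs = ["Invoice 1042 overdue.", "invoice 1042   OVERDUE.", "Invoice 1043 paid."]
unique_docs = deduplicate(docs)
```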

Processing Complexity:

- Challenge: Unstructured data processing requires specialized skills and tools

- Solution: Adopt platforms that abstract complexity and provide easy-to-use interfaces

- Best Practice: Start with pre-built models and gradually customize as expertise grows

Integration Difficulties:

- Challenge: Connecting unstructured data insights with existing business systems

- Solution: Use API-first architectures and standard data formats

- Best Practice: Design integration points from the beginning of the project

Organizational Challenges and Solutions

Skills Gap:

- Challenge: Limited expertise in AI/ML and unstructured data processing

- Solution: Combination of training existing staff and hiring specialized talent

- Best Practice: Partner with technology vendors who provide managed services

Change Management:

- Challenge: Resistance to new ways of working and decision-making

- Solution: Start with pilot projects that demonstrate clear value

- Best Practice: Include business users in the design and implementation process

Budget Constraints:

- Challenge: Significant upfront investment in new technology and skills

- Solution: Phased implementation that demonstrates ROI at each stage

- Best Practice: Focus on high-impact use cases that justify initial investment

Future Trends and Predictions

2025-2027 Evolution

Democratization of AI:

- Pre-built models for common unstructured data use cases

- No-code/low-code platforms for business users

- Automated model selection and optimization

- Self-service analytics for unstructured data

Real-Time Everything:

- Streaming analysis of unstructured data

- Immediate insights from live data feeds

- Real-time personalization and recommendations

- Instant anomaly detection and alerting

Multi-Modal Intelligence:

- Combined analysis of text, images, audio, and sensor data

- Cross-modal pattern recognition and insights

- Unified understanding across different data types

- Holistic business intelligence from all data sources

Edge Processing:

- AI models running on edge devices

- Local processing of sensitive unstructured data

- Reduced latency for time-critical applications

- Distributed intelligence across the organization

The Long-Term Vision

By 2030, we can expect:

- Seamless Integration: Unstructured and structured data analysis become indistinguishable

- Autonomous Insights: AI systems that automatically discover and report important patterns

- Predictive Intelligence: Systems that anticipate business needs and opportunities

- Natural Interfaces: Business users interact with data using natural language

Getting Started: A Practical Roadmap

Phase 1: Assessment and Planning (Months 1-2)

Data Inventory:

- Catalog all unstructured data sources in your organization

- Estimate volume, velocity, and variety of each source

- Identify high-value use cases with clear business impact

- Assess current infrastructure and skill capabilities

Use Case Prioritization:

- Focus on use cases with high business value and manageable complexity

- Consider data quality and availability for initial projects

- Evaluate potential ROI and implementation timeline

- Select 2-3 pilot projects for initial implementation
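One lightweight way to rank candidates is a value-to-complexity ratio. The sketch below assumes stakeholders have scored each use case from 1 to 5 on both axes. The candidate names, scores, and the ratio itself are hypothetical choices, not a prescribed method.

```python
# Hypothetical candidates scored 1-5 by stakeholders.
candidates = [
    {"name": "contract clause extraction", "value": 4, "complexity": 2},
    {"name": "video analytics for stores", "value": 5, "complexity": 5},
    {"name": "support ticket triage", "value": 4, "complexity": 1},
    {"name": "archive OCR", "value": 2, "complexity": 3},
]


def priority(candidate):
    """Higher business value and lower complexity both raise the score."""
    return candidate["value"] / candidate["complexity"]


ranked = sorted(candidates, key=priority, reverse=True)
pilots = [c["name"] for c in ranked[:3]]
```

A ratio like this favors quick wins, which suits a pilot phase. Later phases may weight strategic value more heavily.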

Phase 2: Infrastructure and Tools (Months 3-4)

Technology Stack Selection:

- Choose cloud platforms that support modern data lake architectures

- Implement Apache Iceberg or Delta Lake for advanced table formats

- Select AI/ML platforms that support domain-specific models

- Establish monitoring and governance frameworks

Proof of Concept:

- Implement pilot projects with limited scope and clear success metrics

- Test technical capabilities and business value

- Validate technology choices and architectural decisions

- Build internal expertise and confidence

Phase 3: Production Implementation (Months 5-8)

Scaling Up:

- Expand successful pilot projects to production scale

- Implement robust data quality and monitoring systems

- Establish automated pipelines for continuous data processing

- Train business users on new capabilities and interfaces

Integration:

- Connect unstructured data insights with existing business systems

- Establish APIs and data feeds for downstream applications

- Implement security and compliance controls

- Monitor performance and optimize for scale

Phase 4: Optimization and Expansion (Months 9-12)

Continuous Improvement:

- Optimize models and pipelines based on usage patterns

- Expand to additional use cases and data sources

- Implement advanced features like real-time processing

- Measure and report business value and ROI

Organizational Development:

- Build internal expertise and centers of excellence

- Establish governance and best practices

- Plan for long-term technology evolution

- Prepare for next-generation capabilities

Key Takeaways

The Paradigm Shift is Real: We’re moving from a world where 80% of enterprise data was unusable to one where it becomes the primary source of competitive advantage. This isn’t just a technology upgrade; it’s a fundamental transformation in how organizations understand and operate their businesses.

Technology Convergence Enables Breakthrough: The combination of advanced AI models, scalable storage architectures, and distributed processing capabilities has reached a tipping point. What was impossible five years ago is now practical and cost-effective.

Domain Expertise Drives Value: Generic AI models provide a starting point, but domain-specific intelligence delivers the accuracy and insights that create real business value. Organizations that invest in specialized capabilities will see the greatest returns.

Implementation Requires Strategy: Success requires more than just adopting new technology. Organizations need clear strategies, phased implementation plans, and commitment to developing new capabilities and ways of working.

The Time is Now: Organizations that wait for these technologies to mature further risk being left behind. The competitive advantages are available today for organizations willing to invest in unstructured data capabilities.

Start with Value, Scale with Success: Begin with high-impact use cases that demonstrate clear business value. Use early successes to build momentum, expertise, and investment for broader transformation.

The unstructured data breakthrough represents one of the most significant opportunities in modern business. Organizations that embrace this transformation will unlock insights, efficiencies, and innovations that were previously impossible. The technology is ready, the business case is clear, and the competitive advantage awaits those bold enough to seize it.

The question isn’t whether unstructured data will transform your industry—it’s whether you’ll lead that transformation or be disrupted by it.
