Snowflake for Machine Learning: Unlocking the Power of Scalable Data Platforms

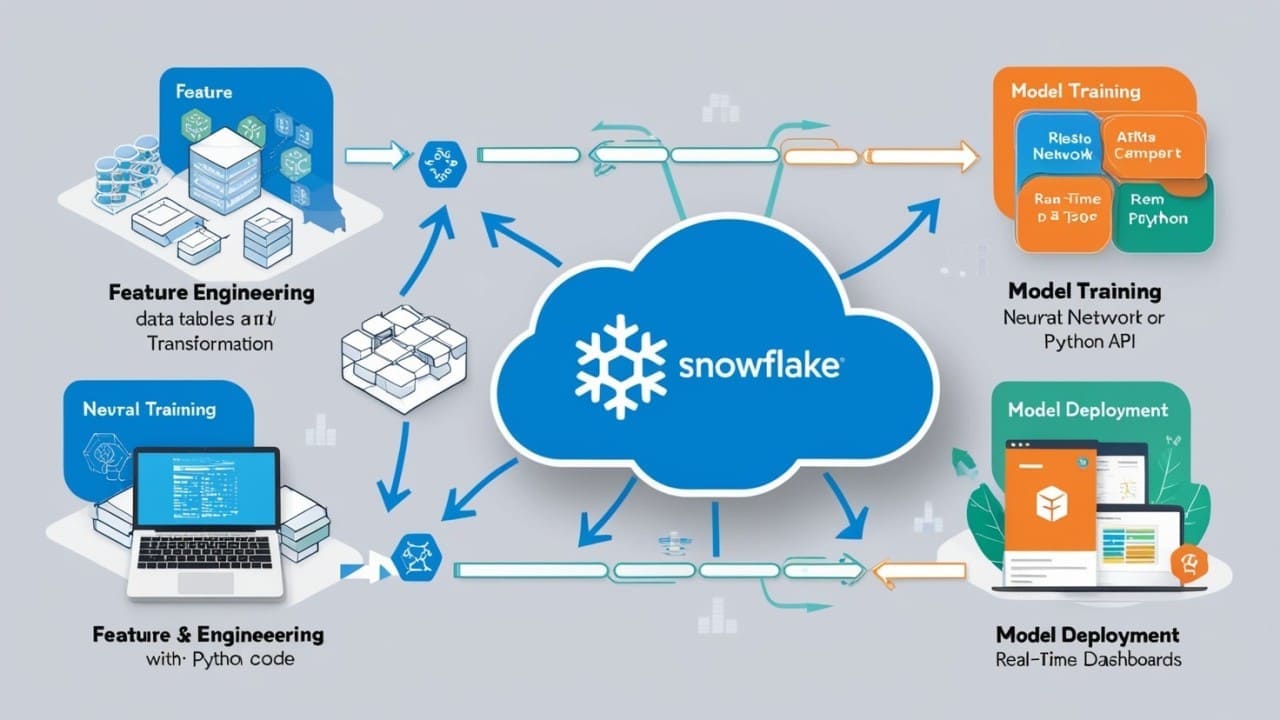

As machine learning continues to redefine industries, the need for scalable, efficient, and integrated data solutions has never been greater. Enter Snowflake, the cloud-based data platform that not only excels in data warehousing but also empowers machine learning (ML) workflows. From feature engineering to model deployment, Snowflake offers a suite of tools and integrations that make it a valuable asset for ML practitioners.

In this article, we’ll explore how to use Snowflake for machine learning tasks, including feature engineering, model training, and model deployment.

1. Why Snowflake for Machine Learning?

Snowflake’s unique architecture and features make it an excellent choice for ML workflows:

- Scalability: Snowflake’s cloud-native architecture separates storage from compute. Virtual warehouses can be resized or added to handle large datasets, and ML jobs don't have to compete with other workloads for the same compute.

- Data Sharing and Collaboration: With Snowflake’s secure data sharing, teams can share live, governed data across departments or organizations without copying it.

- Integration-Friendly: Snowflake works with popular ML languages and tools such as Python, Spark, TensorFlow, and Jupyter notebooks.

- SQL-Based Processing: Data engineers and analysts can use familiar SQL queries for feature engineering and data preprocessing, reducing the learning curve.

2. Feature Engineering in Snowflake

Feature engineering is a critical step in any ML pipeline, and Snowflake provides robust capabilities for this task:

Key Capabilities:

- SQL for Feature Transformation: Use SQL queries to normalize, aggregate, and create derived features directly in Snowflake. For example:

```sql
SELECT
    user_id,
    AVG(purchase_amount) AS avg_purchase,
    MAX(purchase_date) AS last_purchase_date
FROM
    transactions
GROUP BY
    user_id;
```

- Window Functions: Perform time-based calculations or ranking operations using Snowflake’s advanced SQL window functions.
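To make the window-function bullet concrete, here is a minimal sketch that computes a purchase rank, a running spend total, and the gap since the previous purchase. It uses Python's built-in sqlite3 module as a local stand-in for Snowflake (SQLite 3.25 or later supports window functions), so the table, its rows, and the julianday() date arithmetic are assumptions for illustration. In Snowflake you would more likely compute the gap with DATEDIFF('day', ...).

```python
import sqlite3

# In-memory SQLite database as a local stand-in for a Snowflake table (hypothetical rows)
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (user_id INTEGER, purchase_date TEXT, purchase_amount REAL)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [
        (1, "2024-01-01", 20.0),
        (1, "2024-01-11", 35.0),
        (1, "2024-01-12", 10.0),
        (2, "2024-01-05", 50.0),
    ],
)

# Per-user windows ordered by purchase date:
#   ROW_NUMBER -> position of each purchase in the user's history (ranking feature)
#   SUM        -> running total of spend up to and including the current row
#   LAG        -> previous purchase date, used to compute days between purchases
query = """
SELECT
    user_id,
    purchase_date,
    ROW_NUMBER() OVER (PARTITION BY user_id ORDER BY purchase_date) AS purchase_seq,
    SUM(purchase_amount) OVER (
        PARTITION BY user_id ORDER BY purchase_date
        ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW
    ) AS running_spend,
    julianday(purchase_date)
      - julianday(LAG(purchase_date) OVER (PARTITION BY user_id ORDER BY purchase_date))
      AS days_since_prev
FROM transactions
ORDER BY user_id, purchase_date
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

Each user's first purchase has no predecessor, so its days_since_prev is NULL (None in Python), which a model pipeline would need to impute or flag.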

Examples:

- Churn Prediction Features: Calculate customer engagement metrics like average session time and last login date.

- Fraud Detection Features: Create transaction aggregates such as total spend by category within the past 30 days.
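The fraud-detection feature can be sketched the same way. The query below totals spend and counts transactions per user and category over a 30-day window ending at a fixed reference date. As before, sqlite3 stands in for Snowflake and the data is hypothetical. In Snowflake the date filter would typically read txn_date >= DATEADD('day', -30, CURRENT_DATE()).

```python
import sqlite3

# Local stand-in for a Snowflake transactions table (hypothetical rows)
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (user_id INTEGER, category TEXT, txn_date TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?, ?)",
    [
        (1, "electronics", "2024-03-01", 300.0),
        (1, "electronics", "2024-03-20", 450.0),
        (1, "grocery", "2024-03-25", 40.0),
        (1, "grocery", "2024-01-15", 999.0),  # outside the 30-day window: excluded
        (2, "travel", "2024-03-28", 1200.0),
    ],
)

# A fixed reference date keeps the result reproducible; ISO date strings
# compare correctly as text, so the range filter works on TEXT columns.
as_of = "2024-03-31"
query = """
SELECT user_id, category, SUM(amount) AS spend_30d, COUNT(*) AS txn_count_30d
FROM transactions
WHERE txn_date >= date(?, '-30 days') AND txn_date <= ?
GROUP BY user_id, category
ORDER BY user_id, category
"""
features = conn.execute(query, (as_of, as_of)).fetchall()
print(features)
```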

Data Pipelines for Feature Engineering:

Snowflake works with tools like dbt (data build tool) to automate and orchestrate feature engineering pipelines. Defining features as version-controlled SQL models makes them reproducible and easier to scale across complex ML workflows.
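dbt infers build order from the ref() calls between models, so upstream feature tables are rebuilt before the models that depend on them. The sketch below reproduces only that ordering idea with Python's standard-library graphlib (Python 3.9+). It is not dbt's API, and the model names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical models mapped to the upstream models each one ref()s
models = {
    "stg_transactions": set(),
    "stg_sessions": set(),
    "user_purchase_features": {"stg_transactions"},
    "user_engagement_features": {"stg_sessions"},
    "churn_training_set": {"user_purchase_features", "user_engagement_features"},
}

# static_order() yields every model after all of its upstream dependencies
build_order = list(TopologicalSorter(models).static_order())
for model in build_order:
    print("build", model)
```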

3. Model Training with Snowflake

While Snowflake is not primarily a model training platform, it pairs well with ML frameworks by serving as a scalable data backend.

Connecting to ML Frameworks:

- Use Snowpark, Snowflake’s developer framework, to prepare training data in Python, Java, or Scala while the processing runs inside Snowflake.

- Export data to ML frameworks like TensorFlow or PyTorch via Python libraries such as pandas or snowflake-connector-python.
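The snowflake-connector-python route follows Python's DB-API 2.0 (PEP 249), so a small helper written against that interface can load feature rows for training. The sketch below is tested against sqlite3, which implements the same interface. The helper name fetch_as_dicts and the user_features table are hypothetical. Two differences to expect with Snowflake: the connector's default placeholder style is %s rather than ?, and unquoted column names come back uppercase.

```python
import sqlite3


def fetch_as_dicts(conn, query, params=()):
    """Run a query on any PEP 249 (DB-API 2.0) connection and return rows as dicts."""
    cur = conn.cursor()
    try:
        cur.execute(query, params)
        # cursor.description holds one sequence per column; item 0 is the column name
        columns = [col[0] for col in cur.description]
        return [dict(zip(columns, row)) for row in cur.fetchall()]
    finally:
        cur.close()


# Local stand-in for a Snowflake feature table (hypothetical rows)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE user_features (user_id INTEGER, avg_purchase REAL)")
conn.executemany(
    "INSERT INTO user_features VALUES (?, ?)", [(1, 21.5), (2, 50.0), (3, 12.0)]
)
rows = fetch_as_dicts(
    conn,
    "SELECT user_id, avg_purchase FROM user_features WHERE avg_purchase > ? ORDER BY user_id",
    (20,),
)
print(rows)
```

If you want a pandas DataFrame directly, the Snowflake connector's cursor also offers fetch_pandas_all(), which requires the connector's pandas extra.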

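Example Workflow:

- Extract Data from Snowflake: Query the engineered features and load them into Python, for example with snowflake-connector-python or Snowpark.

- Train Your Model: Fit the model on the extracted data with your framework of choice, such as TensorFlow or PyTorch.

- Store Model Outputs Back in Snowflake: Use Snowflake’s support for semi-structured data (like JSON) to store model outputs or metadata.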
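The train-and-store steps of this workflow can be sketched in plain Python. To stay self-contained, the sketch replaces a real framework with a one-feature ordinary least squares fit, uses hypothetical training data, and writes the model metadata as a JSON string into an in-memory SQLite table. In Snowflake the metadata column would typically be a VARIANT, populated by wrapping the string in PARSE_JSON inside an INSERT ... SELECT statement.

```python
import json
import sqlite3


def fit_simple_ols(xs, ys):
    """Ordinary least squares for y = slope * x + intercept with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical training data: avg_purchase -> next-month spend
xs = [10.0, 20.0, 30.0, 40.0]
ys = [25.0, 45.0, 65.0, 85.0]
slope, intercept = fit_simple_ols(xs, ys)
prediction = slope * 50.0 + intercept  # score a new user with avg_purchase = 50

# Model metadata as JSON, the kind of payload a VARIANT column would hold
metadata = {
    "model": "simple_ols",
    "features": ["avg_purchase"],
    "slope": slope,
    "intercept": intercept,
    "n_train": len(xs),
}

# SQLite stand-in: stores the JSON as TEXT instead of VARIANT
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE model_runs (run_id TEXT, metadata TEXT)")
conn.execute("INSERT INTO model_runs VALUES (?, ?)", ("run-001", json.dumps(metadata)))
stored = json.loads(
    conn.execute("SELECT metadata FROM model_runs WHERE run_id = ?", ("run-001",)).fetchone()[0]
)
print(stored, prediction)
```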

4. Model Deployment with Snowflake

Snowflake’s architecture also supports deploying models close to the data:

Using UDFs (User-Defined Functions):

Deploy models as Python UDFs within Snowflake to run inference directly in the database, without exporting the data being scored. For example:

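```python
import os
import sys

import joblib

# Load once per process from the directory where Snowflake places the UDF's staged IMPORTS
model = joblib.load(os.path.join(sys._xoptions["snowflake_import_directory"], "model.joblib"))

def predict_purchase(user_features):
    return float(model.predict([user_features])[0])  # scalar to match a FLOAT return type
```

Register this function as a Snowflake Python UDF (for example with CREATE FUNCTION ... LANGUAGE PYTHON, setting HANDLER = 'predict_purchase', listing the staged model.joblib under IMPORTS and the libraries it needs, such as scikit-learn and joblib, under PACKAGES) so it can be called directly from SQL: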

```sql
SELECT
    predict_purchase(user_features)
FROM
    user_data;
```

Examples:

- Real-Time Recommendations: Use a deployed model to suggest products to users based on their browsing history.

- Fraud Detection Alerts: Trigger immediate notifications for transactions flagged as high risk by the model.
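Because a Python UDF handler such as predict_purchase is called once per row, the expensive step (loading the model) should happen once per process, not on every call. The sketch below shows that pattern with functools.lru_cache. ThresholdModel and load_model are hypothetical stand-ins for a trained model and joblib.load, so the code runs without Snowflake or a model file.

```python
from functools import lru_cache

LOAD_CALLS = 0  # counts how often the hypothetical loader actually runs


class ThresholdModel:
    """Hypothetical stand-in for a trained model with a scikit-learn-style predict()."""

    def predict(self, rows):
        return [1 if sum(features) > 100 else 0 for features in rows]


@lru_cache(maxsize=1)
def load_model():
    # In a real UDF this would be joblib.load(...) on the staged model file
    global LOAD_CALLS
    LOAD_CALLS += 1
    return ThresholdModel()


def predict_purchase(user_features):
    # Invoked once per row; load_model() is cached, so the model loads only once
    return load_model().predict([user_features])[0]


results = [predict_purchase(f) for f in ([50, 70], [10, 20], [90, 15])]
print(results, LOAD_CALLS)
```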

Integration with External Tools:

- Streamlit: Build lightweight, interactive dashboards for model inference using Snowflake as the backend.

- Airflow: Automate the deployment pipeline, ensuring models are retrained and redeployed regularly.

5. Best Practices for ML Workflows in Snowflake

To get the most out of Snowflake for ML, consider the following:

- Use Materialized Views: Speed up frequently run feature queries by precomputing and storing their results (materialized views require Enterprise Edition and can query only a single table).

- Leverage Snowflake’s Zero-Copy Cloning: Create test environments for ML experimentation without duplicating data.

- Monitor Costs: Track warehouse credit consumption and storage, and right-size or auto-suspend warehouses, to keep ML workflows cost-effective.

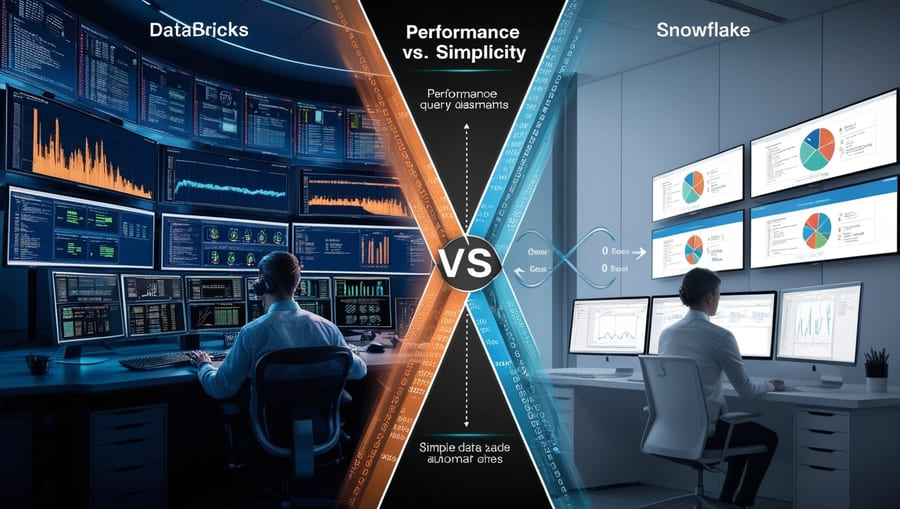

- Combine with Lakehouse Architectures: Use Snowflake in a hybrid approach alongside tools like Databricks for specialized training tasks.
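For cost monitoring, a back-of-the-envelope estimate helps before scheduling recurring feature or training jobs. Snowflake bills a running warehouse per second with a 60-second minimum each time it starts, and standard warehouses double their credits per hour with each size step. The sketch below encodes those rules for a few sizes. The job durations are hypothetical, and it ignores cloud services, storage, and other warehouse types, so check current pricing for your account.

```python
# Credits per hour for standard warehouses by size (verify against current pricing)
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def estimate_credits(size, runtime_seconds):
    """Estimate credits for one warehouse run: per-second billing, 60-second minimum."""
    billed_seconds = max(runtime_seconds, 60)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


# Hypothetical nightly jobs: a 45 s feature refresh on XS, a 20 min training extract on M
total = estimate_credits("XS", 45) + estimate_credits("M", 20 * 60)
print(f"{total:.2f} credits per night")
```

Short, frequent jobs on a warehouse that suspends between runs pay the 60-second minimum each time, which is why batching small queries can be cheaper than running them one by one.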

Conclusion

Snowflake’s features make it a strong foundation for machine learning workflows. From efficient feature engineering to integration with training frameworks and in-database model deployment, Snowflake simplifies the end-to-end ML pipeline.

As the demand for machine learning grows, platforms like Snowflake help data teams build, train, and deploy models with confidence and efficiency.

How are you using Snowflake for your machine learning projects? Share your tips and experiences in the comments below!
