QuestDB vs. General-Purpose Databases for Metrics: Benchmark Methodology & TCO Trade-offs

Meta description (159 chars):

How to fairly compare QuestDB to PostgreSQL/MySQL for metrics. A conservative benchmark plan, architecture trade-offs, and TCO framing for mid-level data engineers.

Introduction — why this matters

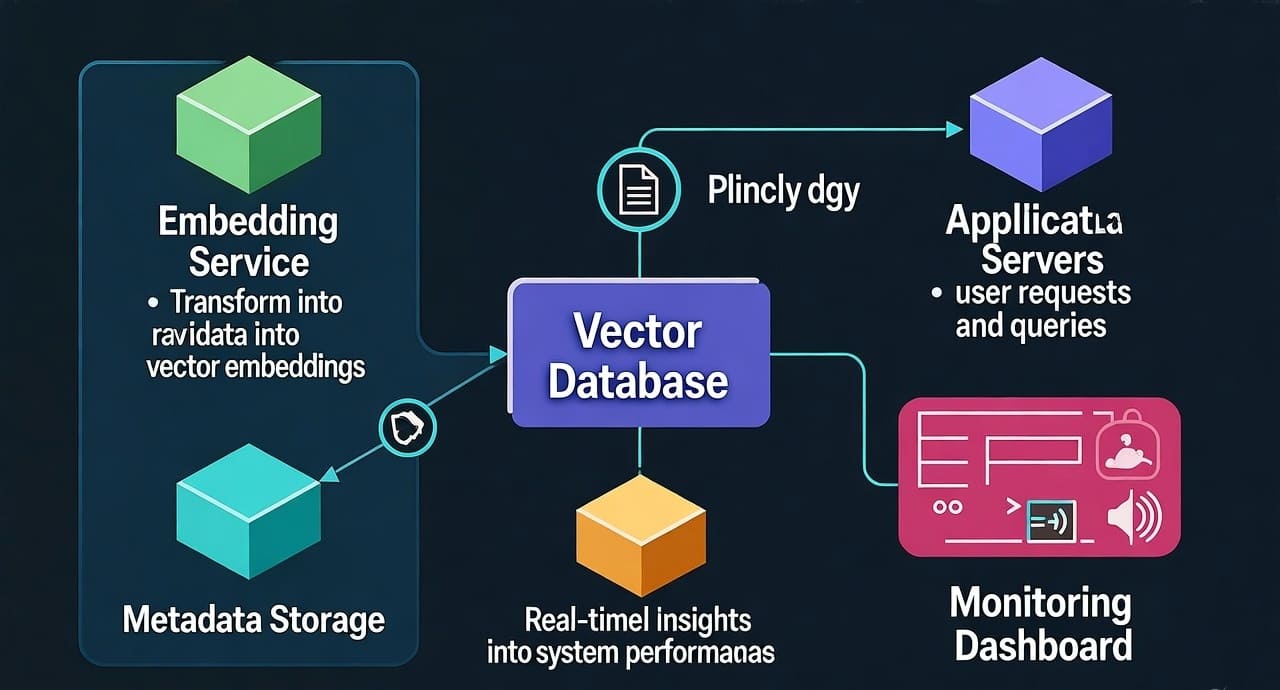

Your product team wants real-time metrics: ingest millions of points, query “last 15 minutes” instantly, and retain weeks or months at reasonable cost. You can hack this into a general-purpose database (PostgreSQL/MySQL) or pick a purpose-built time-series engine like QuestDB. The right choice depends less on hype and more on methodical benchmarking and a clear TCO lens.

This article gives you a vendor-neutral way to test, plus pragmatic guidance on where each approach shines (and where it hurts).

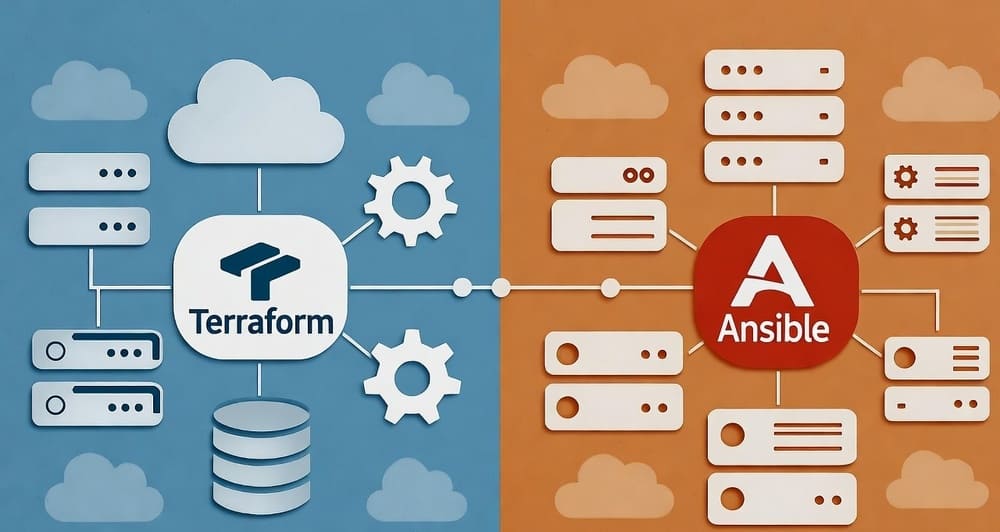

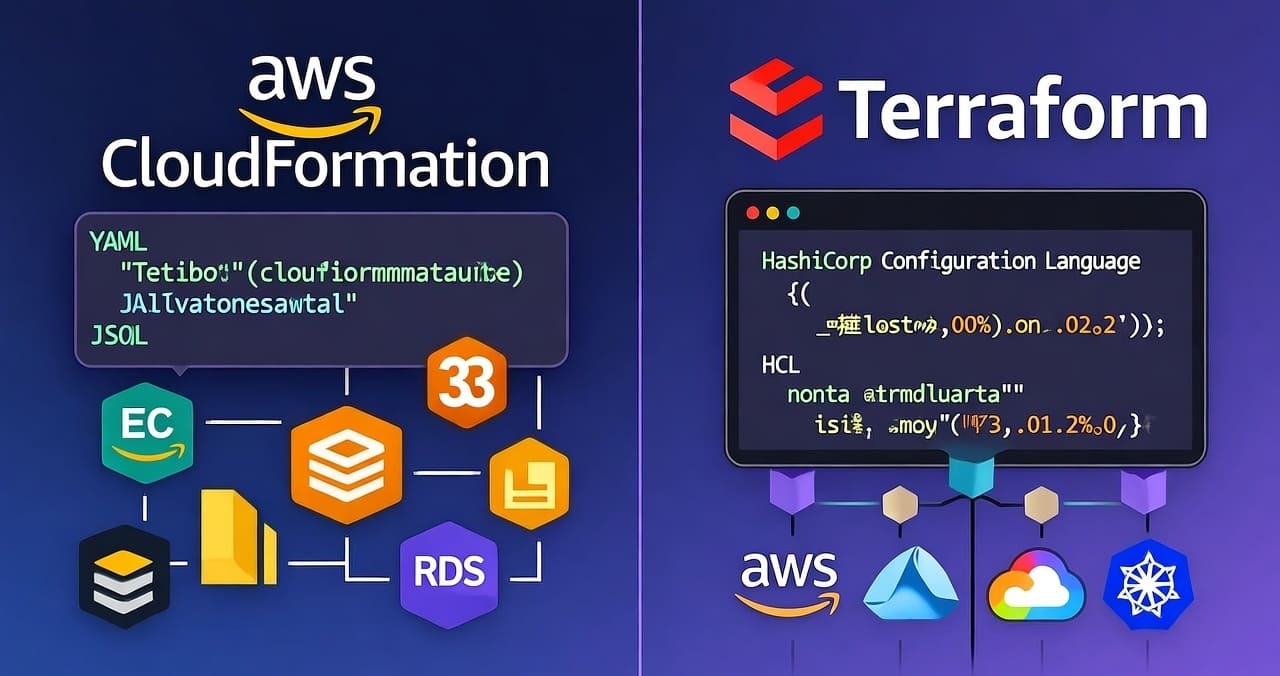

Architectures in 5 minutes (what you’re actually comparing)

QuestDB (time-series specialized)

- Columnar storage, time partitions, vectorized execution for fast scans and aggregations over time windows. (QuestDB)

- SQL with time-series extensions like

SAMPLE BY(downsampling) andASOF JOIN(point-in-time joins). (QuestDB) - Ingestion: high-throughput line protocol (ILP) over TCP/UDP; official clients (e.g., Python) available. (QuestDB)

- Connectivity: PostgreSQL wire protocol for standard drivers/tools. (QuestDB)

- Write → read paths: row-oriented WAL write path that converts to columnar; multi-tier storage model. (QuestDB)

General-purpose row stores (PostgreSQL/MySQL)

- Optimized for OLTP; row-oriented pages with B-tree indexes by default. Time-range scans rely on partitioning and specialized indexes for scale.

- PostgreSQL offers BRIN indexes that summarize block ranges (useful for append-only, time-ordered tables). (PostgreSQL)

- MySQL relies on InnoDB partitioning (by RANGE/LIST) for large time-series tables. (MySQL)

What this means for metrics

- QuestDB reduces the knobs you tune for time-series: time partitioning is a first-class concept; downsampling and point-in-time joins are built-in.

- PostgreSQL/MySQL can absolutely serve metrics at moderate scale, but you’ll engineer around row storage: partition DDL, index choices (BRIN vs B-tree), and maintenance behavior (e.g., vacuum, index bloat) as cardinality or retention grows.

A fair, conservative benchmark methodology

Goal: produce decision-grade evidence without vendor bias. No cherry-picked numbers. Publish your scripts and configs.

1) Workload model (metrics reality check)

Model counters/gauges (e.g., service latency, CPU, error rate) from 5–50k metric series:

- Schema shape:

ts TIMESTAMP,metric TEXT/SYMBOL,labels JSON/tags,value DOUBLE. - Ingestion: mostly append-only, small batches (100–10k rows), occasional backfill/out-of-order.

- Queries:

- Q1: Last 15 minutes, group by 10-sec buckets (

avg(value)) for top 50 series. - Q2: Last 1 hour, top-N by rate.

- Q3: Point-in-time join (metric + dimension table) for the most recent value per series.

- Q4: Day-over-day comparison windows.

- Q1: Last 15 minutes, group by 10-sec buckets (

2) Test variants (so results generalize)

- Hot path: data fits in cache; Warm: partial cache; Cold: storage-bound.

- Concurrency: 16, 64, 256 concurrent queries; 1–4 concurrent ingesters.

- Retention windows: 7, 30, 90 days.

- Cardinality: 100k, 1M series (if feasible).

3) Implementation details (be explicit)

- QuestDB

- Table: designated timestamp + time partitioning (day). (QuestDB)

- Ingest via ILP (TCP). Use the official client; enable out-of-order tolerance only if your data actually needs it. (QuestDB)

- Query via PG wire from your chosen client/BI. (QuestDB)

- Avoid mixing multiple write protocols to the same table in the same moment (stick to ILP for writes). (GitHub)

- PostgreSQL

- Table: range partitioned by time (daily).

- Index: BRIN on timestamp; add B-tree only if necessary. (PostgreSQL)

- MySQL

- Table: InnoDB partitioning by time (RANGE). Keep partition count sane (hundreds, not thousands). (MySQL)

4) Environment & fairness rules

- Run on the same instance class and disk type across systems.

- Disable “secret weapons” you wouldn’t use in production (e.g., exotic session flags).

- Warm cache before latency tests; report p50/p95/p99 latency and throughput.

- Record CPU%, disk IOPS, cache hit rates, and storage size on disk.

- Publish DDL, configs, and dataset generator.

5) Metrics to report (no hero numbers)

- Ingest: rows/sec sustained; backfill penalty (%).

- Query: p50/p95 latency for Q1–Q4 at each concurrency level.

- Operational: compaction/maintenance overhead, schema change cost.

- Efficiency: disk bytes/row after 7/30/90 days; RAM working-set.

- Stability: error rates under mixed read/write.

Example: minimal, comparable schemas & queries

QuestDB — table + ingest + downsample

-- 1) Metrics table (designated timestamp, day partitions)

CREATE TABLE metrics (

ts TIMESTAMP,

metric SYMBOL, -- low-cardinality string stored efficiently

labels SYMBOL, -- optional tag key (or multiple SYMBOL columns)

value DOUBLE

) TIMESTAMP(ts) PARTITION BY DAY; -- time partitions

# 2) Ingest via ILP (Python)

from questdb.ingress import Sender, TimestampNanos

with Sender(host="localhost", port=9009) as sender: # ILP over TCP

sender.row(

"metrics",

symbols={"metric": "latency_ms", "labels": "service=payments,env=prod"},

columns={"value": 125.4},

at=TimestampNanos.now()

)

sender.flush()

(ILP is the high-throughput ingest path; use official clients.) (QuestDB Client Library)

-- 3) Downsample last 15 minutes by 10s buckets

SELECT

metric,

SAMPLE BY 10s FILL(NULL) -- upsample/fill if needed

FROM metrics

WHERE ts > now() - 15m;

(SAMPLE BY provides native time bucketing.) (QuestDB)

PostgreSQL — partitions + BRIN + equivalent query

-- 1) Parent table

CREATE TABLE metrics (

ts timestamptz NOT NULL,

metric text NOT NULL,

labels jsonb,

value double precision

) PARTITION BY RANGE (ts);

-- 2) Daily partitions (generate for each day)

CREATE TABLE metrics_2025_11_20 PARTITION OF metrics

FOR VALUES FROM ('2025-11-20') TO ('2025-11-21');

-- 3) BRIN index on the parent (covers partitions)

CREATE INDEX ON metrics USING BRIN (ts);

(BRIN summarizes timestamp ranges; great for append-only.) (PostgreSQL)

-- 4) Downsample last 15 minutes by 10s buckets

WITH t AS (

SELECT date_trunc('second', ts) AS s, metric, value

FROM metrics

WHERE ts > now() - interval '15 minutes'

)

SELECT metric,

time_bucket('10 seconds', s) AS bucket, -- extension or manual binning

avg(value)

FROM t

GROUP BY metric, bucket

ORDER BY bucket;

MySQL — partitions

CREATE TABLE metrics (

ts TIMESTAMP NOT NULL,

metric VARCHAR(128) NOT NULL,

labels JSON,

value DOUBLE,

KEY (ts) -- optional; choose carefully

)

PARTITION BY RANGE (TO_DAYS(ts)) (

PARTITION p20251120 VALUES LESS THAN (TO_DAYS('2025-11-21')),

PARTITION p20251121 VALUES LESS THAN (TO_DAYS('2025-11-22'))

-- rotate daily

);

(InnoDB partitioning is the lever for very large time-series tables.) (MySQL)

TCO framing (what actually costs money)

TCO = infra (compute, storage, I/O) + ops (maintenance, incidents) + developer time (schema/index gymnastics) + risk (scale ceilings, availability).

QuestDB

- Infra: columnar scans + time partition pruning → efficient CPU/IO for aggregates; storage density benefits from column files. (QuestDB)

- Ops: retention = drop old partitions; fewer secondary indexes to babysit; single engine focused on time-series. (QuestDB)

- Dev time: SQL with built-ins (

SAMPLE BY,ASOF JOIN) reduces custom ETL for downsampling and PIT joins. (QuestDB) - Risks: new team habits (ILP ingestion), and be mindful about mixing write paths (stick to ILP for heavy ingest). (GitHub)

PostgreSQL/MySQL

- Infra: row layout + B-tree(s) can cost IO on wide scans; BRIN mitigates for PostgreSQL, but you still manage partitions and index strategy. (PostgreSQL)

- Ops: partition churn, vacuum/maintenance (PostgreSQL), schema evolution planning, index rebuilds.

- Dev time: implement downsampling/materialization layers yourself; more DDL/ops glue.

- Upside: ecosystems/tooling are ubiquitous; teams already know them.

Rule of thumb:

- If your SLO is sub-second analytics across hot windows with high concurrency, a time-series engine like QuestDB typically lowers both the latency and the ops overhead needed to sustain it.

- If your workload is modest or mostly OLTP with a few dashboards, optimizing PostgreSQL/MySQL (partitions + BRIN/keys) is perfectly reasonable and keeps stack complexity low.

Best practices & common pitfalls

- Pick honest partitions. Day partitions are a good default; go hourly only if you’ve proven the need. (Both worlds.) (QuestDB)

- Choose one write path. For QuestDB, standardize on ILP for ingestion; avoid concurrent heavy inserts via multiple protocols to the same table. (QuestDB)

- Downsample close to storage. Use

SAMPLE BY/materialized views in QuestDB; in general-purpose DBs, pre-aggregate via jobs to protect dashboards. (QuestDB) - Index minimally. PostgreSQL: start with BRIN; add selective B-trees only when justified. (PostgreSQL)

- Retention by dropping partitions. Avoid DELETE storms. (All systems with partitioning.) (QuestDB)

Summary & recommendation

- QuestDB is engineered for time-series: columnar, time-partitioned, time-aware SQL, ILP ingestion, and a write→read path optimized for metrics. This usually translates to simpler schemas, predictable retention, and fewer knobs for hot-window analytics. (QuestDB)

- General-purpose databases can deliver excellent results for small/medium metrics, especially with partitioning and (PostgreSQL) BRIN, but you’ll own more plumbing and maintenance. (PostgreSQL)

Call to action: implement the benchmark above with your real queries. Publish p50/p95, ingest stability, and disk/CPU profiles. Decide with data—not anecdotes.

Internal link ideas (for your site)

- “Designing Time-Series Partitions (Day vs Hour) with Real Traffic Patterns”

- “Downsampling Strategies: Database-Native vs ETL Jobs”

- “PostgreSQL BRIN in Practice for Append-Only Tables”

- “Ingestion Paths 101: ILP vs JDBC vs REST for Metrics”

- “Retention at Scale: Why Dropping Partitions Beats Deletes”

Image prompt (for DALL·E / Midjourney)

“A clean, isometric architecture diagram comparing QuestDB and a generic row-store: ILP ingestion → time-partitioned columnar engine vs JDBC inserts → partitioned row store with BRIN. Minimalist, high-contrast, blue/gray palette.”

Tags

#QuestDB #TimeSeries #PostgreSQL #MySQL #Metrics #Benchmarking #DataEngineering #TCO #BRIN #ILP

Leave a Reply