Snakemake: Workflow Management for Reproducible Scientific Computing

Introduction

Scientific research has a reproducibility problem. You run an analysis today, get results, publish a paper. Six months later, you can’t recreate your own findings. Dependencies changed. Data moved. You forgot which parameters you used.

Snakemake was built to solve this. It’s a workflow management system designed for computational research, particularly in bioinformatics and data science. The goal is simple: make your analyses reproducible, scalable, and portable.

Unlike general-purpose workflow tools, Snakemake understands scientific computing. It knows about conda environments, container images, and high-performance computing clusters. It tracks which files depend on which. It only reruns what needs rerunning when something changes.

If you’re analyzing genomics data, running computational experiments, or doing any data-intensive research that needs to be reproducible years later, Snakemake deserves your attention.

What is Snakemake?

Snakemake is a Python-based workflow management system. You define rules that describe how to create output files from input files. Snakemake figures out which rules to run and in what order.

The syntax is Python-like but declarative. You specify what you want, not how to compute it. Snakemake handles dependency resolution, parallelization, and execution.

Created by Johannes Köster in 2012, Snakemake has become the standard in computational biology. Thousands of published papers use it. The tool is actively maintained and continues to evolve.

The name comes from GNU Make, the classic build system. Snakemake borrows concepts from Make but adds features crucial for scientific workflows: Python integration, cluster execution, conda environments, and container support.

Core Concepts

Understanding Snakemake starts with a few key ideas.

Rules are the building blocks. Each rule defines how to create output files from input files. A rule has inputs, outputs, and a command or script to run.

Wildcards make rules general. Instead of writing separate rules for each sample, you use wildcards. Snakemake matches patterns and fills in values automatically.

The DAG (Directed Acyclic Graph) represents dependencies. Snakemake builds the DAG from your rules and target files. It determines what needs to run and in what order.

Targets are the files you want to create. You tell Snakemake what outputs you need. It works backward through the DAG to figure out what inputs are required.

Environments isolate software dependencies. Each rule can specify a conda environment or container. Snakemake creates these automatically.

A Simple Example

Here’s what a basic Snakefile looks like:

rule all:

input:

"results/sample1.bam",

"results/sample2.bam"

rule align_reads:

input:

fastq="data/{sample}.fastq",

reference="reference/genome.fa"

output:

"results/{sample}.bam"

shell:

"bwa mem {input.reference} {input.fastq} | samtools view -b > {output}"

The all rule specifies target files. The align_reads rule defines how to create BAM files from FASTQ files. The {sample} wildcard matches both sample1 and sample2.

When you run snakemake, it executes both alignments. If sample1.bam already exists and is up to date, only sample2.bam gets created.

Why Snakemake Exists

General workflow tools like Airflow or Prefect work fine for many tasks. But scientific computing has specific needs.

Reproducibility is critical. Results must be recreatable months or years later. Software versions matter. Parameters need documentation. Snakemake tracks all of this.

File-based workflows are common. Scientific pipelines transform files: FASTQ to BAM to VCF. Output from one step becomes input to the next. Snakemake’s file-centric model matches this naturally.

Environments change. Software updates break things. Conda environments and containers lock down dependencies. Each step can use different software versions safely.

Compute resources vary. Run locally during development. Scale to HPC clusters for production. Move to the cloud when needed. Snakemake workflows run anywhere without changes.

Partial reruns save time. Data analysis involves iteration. Change one parameter, rerun affected steps. Snakemake only recomputes what changed.

Common Use Cases

Genomics Pipelines

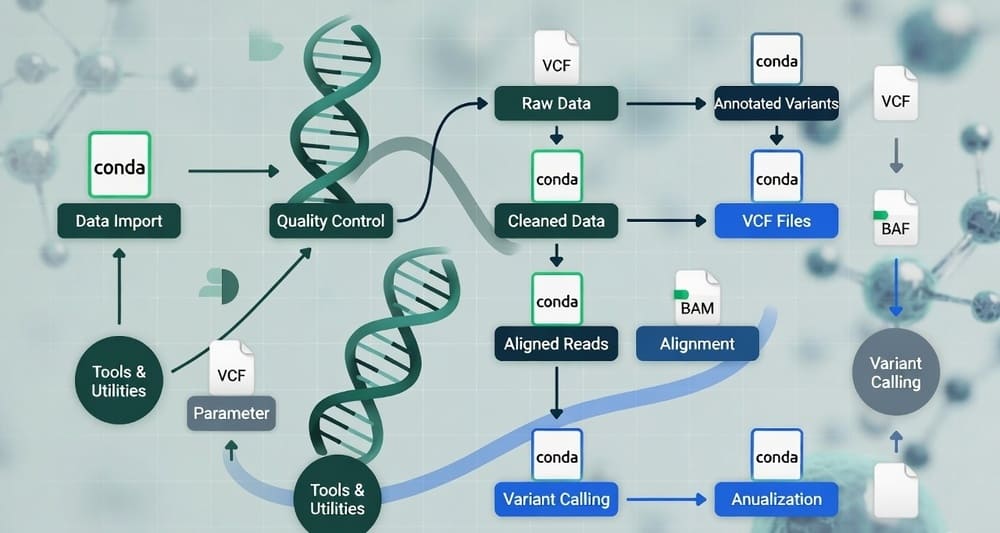

This is Snakemake’s home turf. Genome assembly, variant calling, RNA-seq analysis, ChIP-seq processing. These workflows have well-defined steps and tool chains.

A typical variant calling pipeline: align reads, mark duplicates, call variants, filter calls, annotate variants. Each step transforms files. Dependencies are clear. Snakemake handles the orchestration.

Many published genomics pipelines are Snakemake workflows. The nf-core project (originally for Nextflow) has Snakemake equivalents. Reference implementations exist for standard analyses.

Proteomics and Metabolomics

Mass spectrometry data analysis follows similar patterns. Raw data files get processed through multiple tools. Each step generates intermediate files leading to final results.

Snakemake workflows handle database searches, peptide identification, protein quantification, and statistical analysis. The reproducibility features matter when publishing results.

Computational Experiments

Machine learning research benefits from Snakemake. Train models with different hyperparameters. Compare architectures. Generate figures and tables for papers.

Each experiment configuration becomes a target. Snakemake runs experiments in parallel. Results are tracked and reproducible.

Data Science Workflows

Beyond biology, data scientists use Snakemake for analysis pipelines. Load data, clean it, transform it, model it, visualize results. Each step is a rule.

The file-based approach works well for notebooks, datasets, models, and reports. Changes to analysis code trigger reruns of affected outputs.

Rule Syntax and Features

Rules can be simple or complex. Here are key features.

Multiple inputs and outputs:

rule merge_files:

input:

"data/file1.txt",

"data/file2.txt",

"data/file3.txt"

output:

"results/merged.txt"

shell:

"cat {input} > {output}"

Named inputs for clarity:

rule variant_calling:

input:

bam="alignments/{sample}.bam",

ref="reference/genome.fa",

regions="regions.bed"

output:

"variants/{sample}.vcf"

shell:

"gatk HaplotypeCaller -R {input.ref} -I {input.bam} -L {input.regions} -O {output}"

Python code in rules:

rule process_data:

input:

"data/{sample}.csv"

output:

"results/{sample}.json"

run:

import pandas as pd

df = pd.read_csv(input[0])

result = df.groupby('category').mean().to_dict()

with open(output[0], 'w') as f:

json.dump(result, f)

External scripts:

rule analyze:

input:

"data/{sample}.txt"

output:

"results/{sample}.png"

script:

"scripts/analyze.py"

The script receives snakemake.input, snakemake.output, and other metadata automatically.

Resource specifications:

rule align_reads:

input:

"data/{sample}.fastq"

output:

"results/{sample}.bam"

threads: 8

resources:

mem_mb=16000,

runtime=120

shell:

"bwa mem -t {threads} ref.fa {input} | samtools view -b > {output}"

Snakemake uses this to schedule jobs efficiently on clusters.

Wildcards and Pattern Matching

Wildcards make rules reusable. Instead of hardcoding sample names, use patterns.

rule process_sample:

input:

"data/{sample}.fastq"

output:

"results/{sample}.bam"

shell:

"process {input} > {output}"

This single rule handles any sample. Snakemake matches the pattern and fills in wildcard values.

Multiple wildcards work:

rule process:

input:

"data/{dataset}/{sample}.txt"

output:

"results/{dataset}/{sample}.csv"

shell:

"convert {input} {output}"

Constraining wildcards prevents ambiguity:

rule process:

input:

"data/{sample}.txt"

output:

"results/{sample,[A-Za-z0-9]+}.csv"

shell:

"process {input} {output}"

The regex constrains what {sample} can match.

Dependency Resolution

Snakemake builds a dependency graph automatically. You specify targets. It works backward to determine required inputs.

Say you want results/final_report.html. Snakemake checks the rules. The report rule needs results/analysis.csv. That rule needs results/clean_data.csv. That needs data/raw_data.csv.

Snakemake creates the DAG: raw_data.csv → clean_data.csv → analysis.csv → final_report.html. It runs rules in order, handling dependencies.

If clean_data.csv already exists and is newer than raw_data.csv, Snakemake skips that step. Only outdated outputs get recomputed.

This is powerful for iterative analysis. Change analysis parameters. Only the analysis and report rerun. Data cleaning stays cached.

Software Environment Management

Scientific software is messy. Tools have conflicting dependencies. Versions matter. Reproducibility requires locked environments.

Snakemake integrates with conda:

rule align:

input:

"data/{sample}.fastq"

output:

"results/{sample}.bam"

conda:

"envs/alignment.yaml"

shell:

"bwa mem ref.fa {input} | samtools view -b > {output}"

The conda environment file:

channels:

- bioconda

- conda-forge

dependencies:

- bwa=0.7.17

- samtools=1.15

Snakemake creates this environment automatically. Each rule can use different environments. No conflicts.

Container support works similarly:

rule align:

input:

"data/{sample}.fastq"

output:

"results/{sample}.bam"

container:

"docker://biocontainers/bwa:0.7.17"

shell:

"bwa mem ref.fa {input} | samtools view -b > {output}"

Snakemake runs the command in the container. You get full environment isolation.

Both conda and containers ensure workflows run identically across machines and over time.

Cluster and Cloud Execution

Snakemake workflows scale from laptops to supercomputers without changes.

Local execution is the default. Run snakemake and jobs execute on your machine.

Cluster execution submits jobs to schedulers. Snakemake supports Slurm, SGE, LSF, and others.

snakemake --cluster "sbatch -p compute -t {resources.runtime}" --jobs 100

This submits up to 100 jobs to Slurm simultaneously. Snakemake manages dependencies. Jobs only run when their inputs are ready.

Cloud execution works with Kubernetes, Google Cloud, AWS Batch, and other platforms.

snakemake --kubernetes --jobs 50

Jobs run as Kubernetes pods. Snakemake handles submission and monitoring.

Executor plugins make this flexible. Write custom executors for your infrastructure.

The workflow stays the same. Change execution profiles for different environments.

Configuration and Parameterization

Hardcoded values make workflows brittle. Configuration separates logic from parameters.

configfile: "config.yaml"

rule process:

input:

"data/{sample}.txt"

output:

"results/{sample}.csv"

params:

threshold=config["threshold"],

method=config["method"]

shell:

"process --threshold {params.threshold} --method {params.method} {input} {output}"

The config file:

threshold: 0.05

method: "robust"

samples:

- sample1

- sample2

- sample3

Change parameters without touching workflow code. Run the same workflow with different configurations.

Command line overrides work:

snakemake --config threshold=0.01

This flexibility supports experimentation and production use with the same codebase.

Reporting and Documentation

Scientific workflows need documentation. What ran? Which versions? What parameters?

Snakemake generates reports automatically:

snakemake --report report.html

The report includes:

- Workflow graph visualization

- Runtime statistics

- Software versions

- Configuration values

- Input and output files

- Log files from each rule

You can annotate rules with descriptions:

rule analyze:

input:

"data/{sample}.txt"

output:

"results/{sample}.png"

conda:

"envs/analysis.yaml"

log:

"logs/analyze_{sample}.log"

benchmark:

"benchmarks/analyze_{sample}.txt"

shell:

"analyze {input} {output} 2> {log}"

Logs capture stdout and stderr. Benchmarks measure runtime and memory. These appear in reports.

Reports are self-contained HTML files. Include them in publications or share with collaborators. Everything needed to understand the workflow is there.

Best Practices

Here’s what works well in production scientific workflows.

Start simple. Write basic rules first. Test on small datasets. Add complexity gradually.

Use one rule per tool. Don’t chain commands in shell scripts. Make dependencies explicit with separate rules.

Leverage conda environments. Pin software versions. Each rule can have its own environment.

Log everything. Capture stdout and stderr. Debugging is easier with logs.

Benchmark resource usage. Measure time and memory. Optimize bottlenecks.

Version control workflows. Snakefiles are code. Store them in Git. Track changes over time.

Test workflows locally. Debug on small datasets before scaling to clusters.

Use checkpoints for dynamic workflows. When you don’t know outputs in advance, checkpoints handle it.

Document rules. Add comments explaining what each rule does and why.

Separate data from code. Don’t hardcode file paths. Use config files.

Archive workflows with results. When publishing, include the exact Snakefile used. Others can reproduce your work.

Advanced Features

Checkpoints

Sometimes you don’t know outputs until runtime. Checkpoints handle this.

checkpoint split_data:

input:

"data/large_file.txt"

output:

directory("splits/")

shell:

"split_script {input} {output}"

def aggregate_input(wildcards):

checkpoint_output = checkpoints.split_data.get(**wildcards).output[0]

parts = glob_wildcards(os.path.join(checkpoint_output, "part_{i}.txt")).i

return expand("processed/part_{i}.csv", i=parts)

rule aggregate:

input:

aggregate_input

output:

"results/final.csv"

shell:

"combine {input} > {output}"

The checkpoint runs first. Its outputs determine what comes next. Snakemake reruns the DAG after checkpoints to handle dynamic dependencies.

Modules

Large workflows get unwieldy. Modules split them into manageable pieces.

module preprocessing:

snakefile: "modules/preprocessing.smk"

config: config

use rule * from preprocessing as preprocessing_*

module analysis:

snakefile: "modules/analysis.smk"

config: config

use rule * from analysis as analysis_*

Each module is a separate Snakefile. The main workflow imports them. This keeps code organized.

Remote Files

Data often lives on remote servers or cloud storage. Snakemake handles downloads transparently.

from snakemake.remote.HTTP import RemoteProvider as HTTPRemoteProvider

HTTP = HTTPRemoteProvider()

rule download:

input:

HTTP.remote("example.com/data/sample.fastq.gz")

output:

"data/sample.fastq.gz"

shell:

"cp {input} {output}"

S3, GCS, FTP, and other protocols work similarly.

Wrappers

Common tools have reusable wrappers. These are maintained rule templates.

rule bwa_mem:

input:

reads=["data/{sample}_1.fastq", "data/{sample}_2.fastq"],

idx="reference/genome.fa"

output:

"results/{sample}.bam"

params:

extra=r"-R '@RG\tID:{sample}\tSM:{sample}'",

sorting="samtools",

sort_order="coordinate"

threads: 8

wrapper:

"0.80.0/bio/bwa/mem"

The wrapper handles the command line details. You just specify inputs and outputs.

The wrapper repository contains hundreds of bioinformatics tools. Use them instead of writing shell commands from scratch.

Comparison with Alternatives

Snakemake vs Nextflow

Nextflow is Snakemake’s main competitor in bioinformatics.

Both solve similar problems. Both support conda, containers, and clusters. The main differences are syntax and ecosystem.

Nextflow uses a Groovy-based DSL. Snakemake uses Python syntax. Which you prefer is partly personal taste.

Nextflow has channels and dataflow programming. Snakemake uses file-based dependencies. Nextflow is more flexible for complex dataflows. Snakemake is simpler for file transformations.

The nf-core project provides curated Nextflow pipelines. This is a huge advantage for standard analyses. Snakemake workflows exist but are less centralized.

Nextflow has stronger commercial backing (Seqera Labs). Snakemake is more community-driven.

Both are excellent. Choose based on your ecosystem and preferences.

Snakemake vs CWL

Common Workflow Language (CWL) is a standard for describing workflows.

CWL is more verbose than Snakemake. It uses YAML or JSON. The goal is portability across workflow engines.

Snakemake is a workflow engine. CWL is a specification. You can run CWL workflows on multiple engines (cwltool, Toil, Arvados).

CWL is better for maximum portability. Snakemake is better for rapid development and iteration.

Many researchers find Snakemake more practical. CWL’s verbosity slows development.

Snakemake vs WDL

Workflow Description Language (WDL) is another workflow specification, popular in genomics.

WDL came from the Broad Institute. It’s designed for genomics pipelines, particularly on cloud platforms.

WDL has strong typing and is more declarative than Snakemake. Snakemake is more imperative with Python code.

Cromwell executes WDL workflows. Terra and other platforms support it natively.

WDL is better for cloud-native genomics on platforms that support it. Snakemake is better for flexible local and cluster execution.

Snakemake vs Make

GNU Make inspired Snakemake. Both use rule-based dependency resolution.

Make is simpler and universal. But it lacks features crucial for scientific computing: no conda support, no cluster execution, limited pattern matching.

Snakemake adds Python integration, better wildcards, software management, and distributed execution.

For simple build tasks, Make is fine. For scientific workflows, Snakemake is better.

Challenges and Limitations

Snakemake isn’t perfect. Several issues come up.

Learning curve exists. Understanding wildcards, the DAG, and rule resolution takes time. Python knowledge helps but isn’t always sufficient.

Debugging can be hard. When the DAG doesn’t build as expected, figuring out why takes effort. Error messages aren’t always clear.

Large workflows get complex. Hundreds of rules become unwieldy. Modularization helps but adds abstraction.

File-based model has limits. Not everything fits file transformations. Streaming data or real-time processing doesn’t match Snakemake’s paradigm.

Performance can degrade. Very large workflows with thousands of jobs may stress Snakemake. Filesystem metadata checks take time.

Cluster integration requires tuning. Default profiles don’t always work. You need to understand both Snakemake and your cluster scheduler.

Documentation could be better. While improving, finding answers to advanced questions sometimes requires reading source code or asking the community.

The Snakemake Ecosystem

Several tools and resources complement Snakemake.

The Snakemake Wrappers Repository provides reusable rule templates for common tools. Use these instead of writing commands manually.

Snakemake Profiles contain configuration for different execution environments. Community-contributed profiles exist for major HPC systems and cloud platforms.

Snakedeploy helps deploy workflows. It creates project structure and manages profiles.

Snakemake Catalog is a registry of published workflows. Find existing pipelines for common analyses.

The Snakemake Google Group and Slack provide community support. Active users answer questions and share best practices.

Snakemake Paper and Documentation are authoritative resources. The original paper explains design decisions. The documentation covers features comprehensively.

Getting Started

Installation is straightforward with conda:

conda install -c conda-forge -c bioconda snakemake

Or use mamba (faster):

mamba install -c conda-forge -c bioconda snakemake

Create a simple Snakefile:

rule all:

input:

"output.txt"

rule create_output:

output:

"output.txt"

shell:

"echo 'Hello, Snakemake!' > {output}"

Run it:

snakemake --cores 1

Snakemake creates output.txt.

From here, add more rules, use wildcards, incorporate conda environments. Start simple and build complexity as needed.

The official tutorial walks through creating a variant calling pipeline. It covers core concepts with a real example.

Real-World Adoption

Snakemake is widely used in research.

The Human Cell Atlas uses Snakemake for data processing pipelines.

EMBL and other major research institutions have standardized on Snakemake for bioinformatics.

Published papers by the thousands cite Snakemake. Journals encourage depositing workflows for reproducibility.

Pharma and biotech companies use Snakemake for genomics analysis and drug discovery pipelines.

COVID-19 research leveraged Snakemake workflows for rapid variant analysis and surveillance.

The tool has proven itself at scale. Workflows processing petabytes of genomics data run on Snakemake.

The Future Direction

Snakemake continues to evolve. Several developments are happening.

Better cloud integration is a priority. Making cloud execution as easy as local execution.

Improved workflow sharing through registries and standardized packaging.

Performance optimizations for very large workflows with thousands of samples.

Enhanced reporting with richer visualization and documentation features.

Tighter integration with workflow standards like GA4GH for interoperability.

The project maintainers are responsive. Feature requests get implemented. The community contributes code and wrappers.

Funding from Chan Zuckerberg Initiative and other sources supports continued development.

Key Takeaways

Snakemake is the leading workflow management system for computational biology and data-intensive research.

It solves reproducibility through conda environments, containers, and workflow tracking. Results from years ago can be recreated exactly.

The file-based dependency model matches scientific computing workflows naturally. Transform data through stages, tracking intermediate files automatically.

Wildcards and pattern matching make rules reusable. Write once, apply to hundreds of samples.

Scaling from local execution to HPC clusters to cloud platforms requires no workflow changes. The same Snakefile runs anywhere.

Common use cases include genomics, proteomics, computational experiments, and data science. Any file-based transformation pipeline fits.

Challenges include the learning curve, debugging complexity, and file-based model limitations.

The ecosystem provides wrappers, profiles, and community support. You’re not starting from scratch.

Compared to alternatives, Snakemake offers the best balance of power and usability for Python-oriented researchers.

If you’re doing computational research that needs to be reproducible, Snakemake is worth learning. The investment pays off in reliable, maintainable workflows.

Tags: Snakemake, workflow management, bioinformatics, computational biology, reproducible research, scientific computing, genomics pipelines, data science workflows, Python workflows, conda environments, HPC workflows, workflow orchestration, research automation, computational pipelines, data analysis