Prefect: Modern Workflow Orchestration Built for Data Engineers

Introduction

Airflow dominated workflow orchestration for years. But it had problems. DAGs were hard to test. Development cycles were slow. Dynamic workflows required workarounds. Error handling felt like an afterthought.

Prefect was created to fix these issues. The founders worked with Airflow extensively and decided to build something better. They launched in 2018 with a simple goal: make workflow orchestration feel like writing normal Python code.

Prefect 2.0 changed everything in 2022. The architecture got simpler. The API got cleaner. Deployment became easier. Teams that struggled with Airflow found Prefect refreshing.

This guide explains what Prefect actually is, how it works, and when you should use it. You’ll understand the architecture, see real patterns, and learn where it fits in modern data infrastructure.

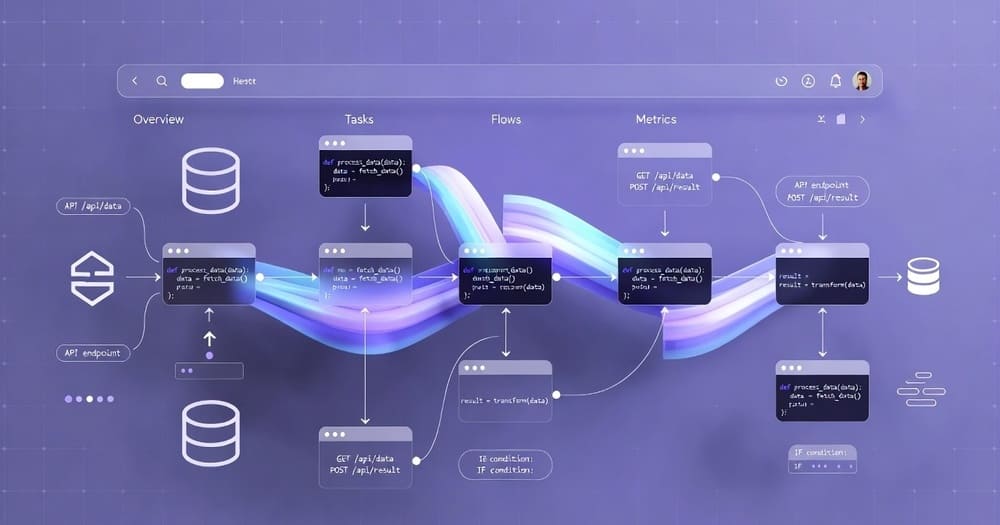

What is Prefect?

Prefect is a workflow orchestration framework for Python. You write normal Python functions. Add decorators. Prefect handles scheduling, retries, monitoring, and observability.

The core philosophy is different from traditional orchestrators. Prefect treats workflows as code that happens to be orchestrated, not as special configuration files. Your workflow logic looks like regular Python. No DAG objects. No special operators. Just functions and decorators.

The platform has two main components. Prefect is the open-source framework you use to build workflows. Prefect Cloud is the managed service that handles coordination, scheduling, and monitoring. You can also self-host the coordination layer with Prefect Server.

Unlike Airflow, where the scheduler and executor are tightly coupled, Prefect uses a hybrid execution model. The control plane coordinates workflows. The execution happens wherever you want: your laptop, Kubernetes, AWS ECS, or serverless functions.

Core Concepts

Understanding Prefect means grasping a few key ideas.

Flows are the top-level workflow definition. A flow is just a Python function decorated with @flow. Inside a flow, you call other functions and define the workflow logic.

Tasks are individual units of work. They’re Python functions decorated with @task. Tasks are optional but recommended. They give you retry logic, caching, and better observability.

Deployments connect your flow code to scheduling and infrastructure. A deployment says where the code lives, how to run it, and when to trigger it.

Work pools define where flows execute. Different pools can point to different infrastructure: Kubernetes clusters, ECS clusters, or process-based execution.

Blocks store configuration and credentials. Database connections, cloud credentials, and webhook URLs all live in blocks. They’re reusable across flows.

The execution model ties these pieces together. When you schedule a flow, Prefect creates a flow run. The control plane tracks it. An agent or worker pulls the run and executes it. Results stream back to the control plane for monitoring.

A Simple Example

Here’s what a basic Prefect flow looks like:

from prefect import flow, task
import httpx

@task(retries=3, retry_delay_seconds=5)
def fetch_data(url: str):
    response = httpx.get(url)
    response.raise_for_status()
    return response.json()

@task
def transform_data(data: dict):
    # Your transformation logic goes here; this placeholder passes the data through
    processed_data = data
    return processed_data

@task
def load_data(data: dict):
    # Your loading logic
    print(f"Loaded {len(data)} records")

@flow(name="etl-pipeline")
def etl_flow(api_url: str):
    raw_data = fetch_data(api_url)
    transformed = transform_data(raw_data)
    load_data(transformed)
    return transformed

if __name__ == "__main__":
    etl_flow("https://api.example.com/data")

This is a complete workflow. Save it as my_flow.py and run it directly with python my_flow.py. No deployment is needed for development. Retries are configured per task, and the whole thing is just Python.

When Prefect Makes Sense

Prefect fits specific scenarios better than others.

You’re building data pipelines in Python. This is the sweet spot. If your team writes Python for data work, Prefect feels natural. No context switching to YAML or special DSLs.

You want fast development cycles. Prefect lets you run flows locally without any infrastructure. Test workflows on your laptop. Deploy when ready. This beats deploying to Airflow and debugging through the web UI.

Your workflows are dynamic. Need to generate tasks at runtime based on data? Easy with Prefect. Airflow’s DAG structure makes this painful. Prefect flows are just Python, so dynamic logic works naturally.

You value developer experience. Prefect invested heavily in making workflows feel like normal code. The API is clean. Error messages are helpful. Documentation is good.

You want flexible execution. Run flows anywhere. Local development on your machine. Production on Kubernetes. Quick jobs on serverless. The same flow code works everywhere.

Common Use Cases

ETL and ELT Pipelines

Prefect handles data pipelines well. Extract from APIs, databases, or files. Transform using pandas, polars, or custom logic. Load into warehouses or data lakes.

A typical pattern: scheduled flows that run hourly or daily. Tasks handle extraction with retries. Transformation tasks process data in chunks. Loading tasks use connection pooling.
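The chunked transformation step can be sketched in plain Python. This is only an illustration of the pattern, not Prefect code: the helper names (chunked, transform_chunk) and the amount_cents field are hypothetical, and in a real flow each chunk would typically be handed to a task.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")

def chunked(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    chunk: List[T] = []
    for item in items:
        chunk.append(item)
        if len(chunk) == size:
            yield chunk
            chunk = []
    if chunk:  # emit the final, possibly shorter, chunk
        yield chunk

def transform_chunk(rows: List[dict]) -> List[dict]:
    # Hypothetical transformation: add a derived field to every row
    return [{**row, "amount_cents": round(row["amount"] * 100)} for row in rows]

rows = [{"id": i, "amount": i * 1.5} for i in range(5)]
transformed = [out for chunk in chunked(rows, size=2) for out in transform_chunk(chunk)]
```

Keeping chunks small bounds memory use and means a failed chunk can be retried without redoing the whole dataset.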

The caching system helps avoid redundant work. If extraction fails but you’ve cached intermediate results, retries skip the successful parts.

Data Science Workflows

Data scientists like Prefect because it doesn’t force them to learn DevOps concepts. They write Python. Prefect handles orchestration.

Model training workflows are common. One task loads training data. Another does feature engineering. The main task trains the model. A final task evaluates and logs metrics.

Hyperparameter sweeps work well. Generate tasks dynamically for each parameter combination. Run them in parallel. Collect results.
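As a rough sketch of the sweep pattern, the standard library alone can show the shape: build every parameter combination, submit one unit of work per combination, then collect results. The scoring function and grid values are made up for illustration, and ThreadPoolExecutor stands in for what would be task submissions inside a Prefect flow.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

def train_and_score(learning_rate: float, depth: int) -> float:
    # Hypothetical stand-in for real training; returns a made-up score
    return round(1.0 - abs(learning_rate - 0.1) - abs(depth - 4) * 0.05, 3)

grid = {"learning_rate": [0.01, 0.1, 0.5], "depth": [2, 4]}
combos = list(product(grid["learning_rate"], grid["depth"]))

with ThreadPoolExecutor(max_workers=4) as pool:
    # One submission per combination, analogous to task.submit() in a flow
    futures = {combo: pool.submit(train_and_score, *combo) for combo in combos}
    # Resolve every future and keep the score next to its parameters
    scores = {combo: fut.result() for combo, fut in futures.items()}

best = max(scores, key=scores.get)
```

Because the combinations are generated at runtime, the number of submitted units follows the grid size without any change to the workflow definition.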

API Integrations

Many workflows involve calling external APIs. Prefect makes this cleaner than alternatives.

Tasks with retry logic handle transient failures. Rate limiting works through custom task runners or simple sleeps. Authentication credentials live in blocks and stay out of code.
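The "simple sleeps" approach to rate limiting might look like the sketch below. It is a minimal, single-process illustration, not a Prefect feature: the MinIntervalLimiter class and call_api function are hypothetical names, and the request itself is faked.

```python
import time

class MinIntervalLimiter:
    """Allow at most one call every `min_interval` seconds by sleeping."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last_call = None  # monotonic timestamp of the previous call

    def wait(self) -> float:
        """Sleep if the previous call was too recent; return the time slept."""
        now = time.monotonic()
        slept = 0.0
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                time.sleep(remaining)
                slept = remaining
        self._last_call = time.monotonic()
        return slept

limiter = MinIntervalLimiter(min_interval=0.05)  # roughly 20 calls per second

def call_api(item_id: int) -> dict:
    limiter.wait()
    # Hypothetical request; a real task would call the external API here
    return {"id": item_id, "ok": True}

start = time.monotonic()
responses = [call_api(i) for i in range(3)]
elapsed = time.monotonic() - start
```

This limiter is neither thread-safe nor shared across processes, so it only suits tasks that call the API sequentially from one place.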

Webhook-triggered flows respond to events. GitHub webhooks, Stripe events, or internal system notifications can trigger workflows immediately.

Scheduled Maintenance Tasks

Regular maintenance work fits Prefect. Database cleanup, cache warming, report generation, backup verification.

These workflows often run on schedules but need visibility when they fail. Prefect’s notifications and monitoring make sure you know when maintenance fails.

Architecture Deep Dive

Prefect’s architecture is simpler than it seems.

The Prefect API is the central coordination point. It stores workflow metadata, deployment configurations, flow run history, and logs. This can be Prefect Cloud or a self-hosted Prefect Server.

Agents and Workers are the execution layer. They poll the API for scheduled flow runs, pull the code, and execute it. Workers are the newer model (introduced during the Prefect 2.x series, after the 2.0 release) and more flexible than agents.

Your flow code lives wherever you want. In containers, Lambda functions, or directly on worker machines. Prefect doesn’t care where the code is, as long as workers can access it.

Blocks store configuration separately from code. Database connections, cloud credentials, Slack webhooks. Define them once, reference them in flows.

When you deploy a flow, you create a deployment. The deployment references your flow code, sets a schedule if needed, and specifies which work pool should execute it.

When it’s time to run, the API creates a flow run. A worker from the appropriate work pool picks it up, retrieves the code, executes it, and streams logs and results back.

This hybrid model is powerful. The control plane is centralized and managed. Execution is distributed and flexible.
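To make the split between control plane and execution concrete, here is a toy simulation in plain Python. It does not use or reproduce Prefect's API: ControlPlane, Worker, and FlowRun are hypothetical stand-ins, and the state names are simplified.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List, Optional

@dataclass
class FlowRun:
    run_id: int
    flow_name: str
    state: str = "SCHEDULED"
    logs: List[str] = field(default_factory=list)

class ControlPlane:
    """Toy stand-in for the API: stores run metadata, never executes code."""

    def __init__(self) -> None:
        self.runs: Dict[int, FlowRun] = {}
        self.queue: Deque[int] = deque()

    def schedule(self, flow_name: str) -> FlowRun:
        run = FlowRun(run_id=len(self.runs) + 1, flow_name=flow_name)
        self.runs[run.run_id] = run
        self.queue.append(run.run_id)
        return run

    def next_run(self) -> Optional[FlowRun]:
        return self.runs[self.queue.popleft()] if self.queue else None

class Worker:
    """Toy worker: polls for runs and executes code it holds locally."""

    def __init__(self, api: ControlPlane, flows: Dict[str, Callable[[], object]]) -> None:
        self.api = api
        self.flows = flows

    def poll_once(self) -> bool:
        run = self.api.next_run()
        if run is None:
            return False  # nothing scheduled
        run.state = "RUNNING"
        try:
            result = self.flows[run.flow_name]()
            run.logs.append(f"result={result!r}")
            run.state = "COMPLETED"
        except Exception as exc:
            run.logs.append(f"error={exc!r}")
            run.state = "FAILED"
        return True

def broken_flow():
    raise RuntimeError("boom")

api = ControlPlane()
worker = Worker(api, {"nightly-etl": lambda: 42, "broken": broken_flow})
first = api.schedule("nightly-etl")
second = api.schedule("broken")
while worker.poll_once():
    pass
```

The control plane only ever sees names, states, and logs; the callables live with the worker, which mirrors why flow code and data can stay inside your own infrastructure.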

Task Features

Tasks are where Prefect shines. They’re optional but provide significant value.

Retries are built in. Specify how many times to retry and how long to wait between attempts.

import requests
from prefect import task

@task(retries=3, retry_delay_seconds=10)
def unreliable_api_call():
    # This will retry up to 3 times, waiting 10 seconds between attempts
    response = requests.get("https://flaky-api.com/data")
    return response.json()
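The semantics of retries and retry_delay_seconds can be illustrated with a small plain-Python loop: one initial attempt, then up to the configured number of retries with a pause between them. This is a sketch of the idea under that assumption, not Prefect's implementation; run_with_retries and flaky are hypothetical names.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def run_with_retries(fn: Callable[[], T], retries: int, retry_delay_seconds: float) -> T:
    """Call fn once, then retry up to `retries` more times on any exception."""
    attempt = 0
    while True:
        try:
            return fn()
        except Exception:
            if attempt >= retries:
                raise  # retries exhausted: surface the last error
            attempt += 1
            time.sleep(retry_delay_seconds)

calls = {"count": 0}

def flaky() -> str:
    # Hypothetical operation that fails twice, then succeeds
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"

outcome = run_with_retries(flaky, retries=3, retry_delay_seconds=0.01)
```

With retries=3 the function can run up to four times in total, which is worth remembering when the wrapped operation is not idempotent.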

Caching avoids redundant computation. Tasks can cache based on inputs, preventing recomputation when results are already known.

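from datetime import timedelta

from prefect import task
from prefect.tasks import task_input_hash

@task(cache_key_fn=task_input_hash, cache_expiration=timedelta(hours=1))
def expensive_computation(x: int):
    # This result is cached for 1 hour based on input
    # heavy_calculation is a placeholder for your own function
    return heavy_calculation(x)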
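Input-based caching with an expiration can be pictured as follows. This is a simplified plain-Python sketch, not how Prefect stores results: the input_hash and cached_call helpers are hypothetical, the cache lives in process memory, and Prefect's own key function may take more than the inputs into account.

```python
import hashlib
import json
import time
from typing import Any, Callable, Dict, Tuple

_cache: Dict[str, Tuple[float, Any]] = {}  # key -> (expiry timestamp, value)

def input_hash(fn_name: str, kwargs: Dict[str, Any]) -> str:
    """Stable key derived from the function name and its JSON-serializable inputs."""
    payload = json.dumps({"fn": fn_name, "kwargs": kwargs}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def cached_call(fn: Callable[..., Any], ttl_seconds: float, **kwargs: Any) -> Any:
    key = input_hash(fn.__name__, kwargs)
    hit = _cache.get(key)
    now = time.monotonic()
    if hit is not None and hit[0] > now:
        return hit[1]  # fresh cached value: skip the computation
    value = fn(**kwargs)
    _cache[key] = (now + ttl_seconds, value)
    return value

executions = []

def expensive_square(x: int) -> int:
    executions.append(x)  # record each real execution
    return x * x

a = cached_call(expensive_square, ttl_seconds=3600, x=4)
b = cached_call(expensive_square, ttl_seconds=3600, x=4)  # served from cache
c = cached_call(expensive_square, ttl_seconds=3600, x=5)  # new input, recomputed
```

The second call with x=4 never reaches the function body, which is the behavior that lets a rerun skip steps whose inputs have not changed.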

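Concurrency limits prevent overwhelming downstream systems. In Prefect 2 they are applied through task tags: tag the task, then set a limit for that tag with prefect concurrency-limit create.

Task run names help with observability when you’re running many similar tasks:

from prefect import task

@task(task_run_name="process-{item}")
def process_item(item):
    # Your processing logic
    pass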

Dynamic Workflows

Prefect handles dynamic workflows elegantly. No special tricks needed.

Loop over data and create tasks:

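from prefect import flow, task

@task
def process_file(file: str):
    # Placeholder: replace with real per-file processing
    return file

@flow
def process_files(file_list: list[str]):
    results = []
    for file in file_list:
        # .submit() hands the task to the task runner and returns a future
        result = process_file.submit(file)
        results.append(result)
    return [r.result() for r in results]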

Each file gets its own task run. Submitted tasks run concurrently on the flow’s task runner. The .submit() method returns a future, and calling .result() on it blocks until the value is ready.

Conditional logic works normally:

from prefect import flow

@flow
def conditional_flow(data: list):
    # big_data_processor and small_data_processor are tasks defined elsewhere
    if len(data) > 1000:
        result = big_data_processor(data)
    else:
        result = small_data_processor(data)
    return result

No special operators needed. Just write Python.

Deployment Patterns

Prefect offers several deployment approaches.

Process-based is the simplest. A worker process runs on a machine and executes flows directly. Good for development and simple production scenarios.

Docker-based puts each flow run in a container. Better isolation. Easier dependency management. The worker pulls an image and runs it.

Kubernetes gives you full K8s benefits. Each flow run becomes a Job. Scale workers horizontally. Use node selectors for resource requirements.

Serverless functions work too. AWS Lambda, Google Cloud Functions, or Azure Functions can execute flows. Good for infrequent workflows or cost optimization.

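Creating a deployment is straightforward. The example below uses the Prefect 2 Deployment class; later releases favor flow.deploy() and the prefect deploy CLI.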

from prefect import flow
from prefect.deployments import Deployment
from prefect.server.schemas.schedules import CronSchedule

@flow
def my_flow():
    # Flow logic
    pass

deployment = Deployment.build_from_flow(
    flow=my_flow,
    name="production-deployment",
    schedule=CronSchedule(cron="0 0 * * *"),
    work_pool_name="kubernetes-pool"
)
deployment.apply()

Or use the CLI:

prefect deployment build my_flow.py:my_flow -n production -p kubernetes-pool
prefect deployment apply my_flow-deployment.yaml

Observability and Monitoring

Prefect makes workflow monitoring straightforward.

The UI shows all flow runs, their status, and execution time. Filter by flow name, status, or tags. Click into a run to see logs, task-level details, and runtime graphs.

Logs stream in real time. Watch your flow execute. Search through historical logs. Logs are structured and searchable.

Notifications alert you when things go wrong. Slack, email, PagerDuty, or custom webhooks. Set up alerts for failures, long-running flows, or custom conditions.

Metrics and observability integrate with existing tools. Prefect exposes events that you can send to Datadog, Prometheus, or other monitoring systems.

Flow run history persists indefinitely in Prefect Cloud. See every execution, even from months ago. Track trends. Identify patterns.

The UI is well-designed. It doesn’t overwhelm you with information but provides what you need when debugging.

Error Handling and Recovery

Prefect gives you multiple layers of error handling.

Task-level retries handle transient failures. A task fails, waits, tries again. Configure retry behavior per task.

Flow-level logic can catch exceptions and respond:

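from prefect import flow, get_run_logger

@flow
def resilient_flow():
    logger = get_run_logger()
    try:
        # risky_task and fallback_task are tasks defined elsewhere
        result = risky_task()
    except Exception as e:
        # Log the error
        logger.error(f"Task failed: {e}")
        # Run fallback logic
        result = fallback_task()
    return result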

State change hooks (called state handlers in Prefect 1) let you hook into state transitions. Run cleanup code when a flow fails. Send notifications when tasks succeed.

Pausing flows for manual intervention is built in. A flow can call pause_flow_run, wait for human approval, then continue.

Crash detection helps with infrastructure failures. If a worker dies, Prefect detects it and can reschedule the run.

Scaling and Performance

Prefect scales in several dimensions.

Horizontal scaling of workers is simple. Run more worker processes or pods. Each pulls from the work pool and executes flows. Prefect coordinates everything.

Concurrent task execution needs no extra setup. Tasks submitted with .submit() run concurrently on the flow’s task runner. How many run at once depends on the task runner and your infrastructure.

Resource optimization comes from flexible execution. Small flows run on small instances. Large flows get bigger resources. Serverless options save money on infrequent workflows.

Database performance matters at scale. Prefect Cloud handles this for you. Self-hosted deployments need proper PostgreSQL tuning for thousands of daily flow runs.

Real-world examples: companies run hundreds of thousands of tasks daily on Prefect. The architecture handles it. The key is configuring workers and infrastructure appropriately.

Integration Ecosystem

Prefect integrates with the tools you already use.

Data warehouses like Snowflake, BigQuery, and Redshift have blocks and utilities. Run queries, load data, manage tables.

Cloud platforms are well-supported. AWS S3, Azure Blob Storage, GCP Cloud Storage. Secrets management integrates with AWS Secrets Manager, Azure Key Vault, and GCP Secret Manager.

Databases work through SQLAlchemy or custom blocks. Postgres, MySQL, SQL Server, MongoDB.

Message queues like Kafka, RabbitMQ, and cloud-native options integrate for event-driven patterns.

Machine learning tools including MLflow, Weights & Biases, and various model registries.

Notifications to Slack, Microsoft Teams, PagerDuty, email, and custom webhooks.

The integration library keeps growing. Community contributions add support for new tools regularly.

Prefect Cloud vs Self-Hosted

You can use Prefect Cloud or run Prefect Server yourself.

Prefect Cloud is the managed option. No infrastructure to maintain. Automatic scaling. Built-in authentication and RBAC. SOC 2 compliant. Enhanced features like automations and service accounts.

The free tier is generous. Enough for small teams or personal projects. Paid tiers add features and support.

Prefect Server is the self-hosted option. You run the API server and database. Full control. No external dependencies. Good for air-gapped environments or specific compliance needs.

Self-hosting means maintaining PostgreSQL and the Prefect server. Not hard, but operational overhead exists.

For most teams, Prefect Cloud makes sense. The time saved on operations exceeds the cost.

Comparison with Alternatives

Prefect vs Airflow

This is the most common comparison.

Development experience: Prefect wins. Workflows are normal Python. Local testing is trivial. Airflow requires running the full stack.

Maturity and ecosystem: Airflow has more operators and integrations. It’s been around longer. More examples and blog posts exist.

Dynamic workflows: Prefect handles these naturally. Airflow requires workarounds or newer features like dynamic task mapping (added in Airflow 2.3).

Operations: Prefect is simpler. Airflow needs careful tuning. Celery, database, scheduler, web server all need configuration.

Community: Airflow has a larger community. More Stack Overflow answers. Prefect’s community is active but smaller.

Use Airflow if you need its specific integrations or your team already knows it. Use Prefect for new projects or if developer experience matters.

Prefect vs Dagster

Dagster and Prefect target similar users but with different philosophies.

Mental model: Dagster uses software-defined assets. You think about data, not tasks. Prefect uses flows and tasks, closer to traditional programming.

Type safety: Dagster emphasizes types and validation. Prefect is more permissive.

Testing: Both have good testing stories. Dagster’s type system catches more errors before runtime.

Deployment: Prefect’s deployment model is more flexible. Dagster ties code to a code location concept.

UI and observability: Both are good. Dagster focuses on asset lineage. Prefect focuses on flow execution.

Choose based on team preference. If you like thinking in assets and types, try Dagster. If you prefer lightweight and flexible, try Prefect.

Prefect vs Temporal

Temporal is fundamentally different. It’s a workflow engine for reliable distributed systems.

Use case: Temporal targets microservices and business workflows. Prefect targets data pipelines and analytics.

Durability: Temporal guarantees workflow completion even across infrastructure failures. Prefect focuses on retry logic and monitoring.

Language support: Temporal supports multiple languages. Prefect is Python-first.

Complexity: Temporal has a steeper learning curve. Prefect is approachable.

Use Temporal for critical business workflows that can’t fail. Use Prefect for data engineering and analytics.

Challenges and Limitations

Prefect isn’t perfect. Some pain points emerge.

Python-only limits polyglot teams. If you need to orchestrate non-Python work, you’re calling scripts or containers. Airflow’s operator model handles this more naturally.

Execution model changes between versions caused migration pain. Prefect 1.0 to 2.0 was a major breaking change. Teams had to rewrite workflows.

Smaller ecosystem than Airflow means fewer pre-built integrations. You’ll write more custom code.

Learning curve exists despite claims of simplicity. The hybrid execution model takes time to understand. Agents, workers, work pools, and deployments have subtle differences.

Limited built-in features compared to enterprise Airflow distributions. Data lineage, SLA monitoring, and advanced alerting require custom work or Prefect Cloud features.

Documentation gaps exist for edge cases. The core docs are good. Specific scenarios sometimes lack examples.

Best Practices

What works well in production:

Structure flows clearly. Keep flows small and focused. Compose larger workflows from smaller flows. This improves testability and reusability.

Use tasks strategically. Not everything needs to be a task. Tasks add overhead. Use them for retries, caching, or observability. Simple operations can stay as regular functions.

Test locally first. Run flows on your laptop before deploying. Catch errors early. Use small datasets for testing.

Manage configuration properly. Use blocks for credentials and connections. Keep secrets out of code. Use environment-specific configurations.

Set up proper logging. Inside flows and tasks, log through get_run_logger() so Prefect captures the messages. Output from print is captured only when log_prints=True is set. Add context to log messages.

Monitor what matters. Set up alerts for critical flows. Don’t alert on everything. Focus on business-critical workflows.

Version your deployments. Track changes to flows. Use version tags. Make deployments reproducible.

Handle failures gracefully. Design for failure. Add retries where appropriate. Clean up resources in finally blocks.

Use work pools effectively. Separate development and production. Use different pools for different resource requirements.

Keep dependencies clean. Pin versions in requirements files. Use virtual environments or containers for isolation.
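The advice on handling failures gracefully, in particular cleaning up in finally blocks, can be sketched with the standard library. The export_report function and its simulated upload failure are hypothetical; in practice this body would sit inside a task or flow.

```python
import os
import tempfile

def export_report(rows: list, workdir: str, fail: bool = False) -> int:
    """Write rows to a scratch file, 'upload' it, and always remove the file."""
    path = os.path.join(workdir, "report.csv")
    try:
        with open(path, "w") as handle:
            for row in rows:
                handle.write(",".join(str(value) for value in row) + "\n")
        if fail:
            # Hypothetical failure during the upload step
            raise RuntimeError("upload failed")
        return len(rows)
    finally:
        # Runs on success and on failure, so no scratch files pile up
        if os.path.exists(path):
            os.remove(path)

failed = False
with tempfile.TemporaryDirectory() as workdir:
    written = export_report([(1, "a"), (2, "b")], workdir)
    try:
        export_report([(3, "c")], workdir, fail=True)
    except RuntimeError:
        failed = True
    leftovers = os.listdir(workdir)
```

Because the exception still propagates after cleanup, an orchestrator wrapping this function would see the failure and could apply its retry policy.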

Getting Started

Setting up Prefect is quick.

Install Prefect:

pip install prefect

Start the API (for local development):

prefect server start

Or connect to Prefect Cloud:

prefect cloud login

Create your first flow:

from prefect import flow, task

@task
def say_hello(name: str):
    print(f"Hello, {name}!")

@flow
def hello_flow():
    say_hello("World")

if __name__ == "__main__":
    hello_flow()

Run it:

python hello.py

Check the UI at http://localhost:4200 (for local server) or Prefect Cloud.

Create a deployment:

prefect deployment build hello.py:hello_flow -n my-deployment
prefect deployment apply hello_flow-deployment.yaml

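Start an agent (in Prefect 2, deployments built with prefect deployment build are picked up by agents, and default-agent-pool is an agent pool):

prefect agent start --pool default-agent-pool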

From here, explore scheduling, parameters, and integrations.

Real-World Adoption

Many companies trust Prefect in production.

Vanta uses Prefect for security data pipelines processing millions of events daily.

Twilio runs workflow orchestration for internal analytics and reporting.

DoorDash leverages Prefect for various data engineering workflows.

The New York Times uses it for content processing and data pipelines.

Prefect has paying customers across industries. Startups to enterprises. The commercial business validates the technology.

The Road Ahead

Prefect continues evolving rapidly.

Enhanced observability is a focus area. Better metrics, tracing, and integration with observability platforms.

More integrations are constantly added. Cloud services, databases, and third-party tools.

Performance improvements for very large workflows. Optimizing the API and database layer.

Enterprise features like better RBAC, audit logs, and compliance certifications.

AI integration for workflow creation and debugging using LLMs.

The company is well-funded and growing. Active development continues. New releases ship regularly.

Key Takeaways

Prefect offers a modern approach to workflow orchestration. It prioritizes developer experience without sacrificing production capabilities.

The Python-first design makes it feel natural. No special DSLs. No complex configuration files. Just Python functions and decorators.

Dynamic workflows are first-class. Generate tasks at runtime. Conditional logic works normally. This beats Airflow’s DAG model for complex scenarios.

The hybrid execution model separates control from execution. Centralized coordination with distributed execution. This flexibility supports many deployment patterns.

Observability is built in. The UI is clean. Logs stream in real time. Notifications keep you informed.

Challenges exist. The ecosystem is smaller than Airflow. It’s Python-only. Version migrations can be painful.

For new data engineering projects in Python, Prefect deserves strong consideration. The productivity gains are real. Teams ship workflows faster.

If you’re struggling with Airflow or building workflows from scratch, try Prefect. The investment in learning pays off quickly.
