GitHub Actions: CI/CD Service Integrated with GitHub

Automation has become indispensable for delivering high-quality code efficiently. GitHub Actions, generally available since November 2019, is a CI/CD (Continuous Integration/Continuous Delivery) service built directly into GitHub. Because workflows are defined and run inside the repository itself, teams can build, test, and deploy software without leaving the platform where their code lives.

The Power of Native Integration

What sets GitHub Actions apart from other CI/CD solutions is its native integration with the GitHub platform. Rather than requiring developers to juggle multiple services and accounts, GitHub Actions lives where the code does. This deep integration creates a seamless experience with several distinctive advantages:

- Contextual awareness: Actions have native access to repository information, including branches, issues, pull requests, and releases

- Simplified permissions management: Leverages existing GitHub authentication and authorization

- Reduced context switching: Developers stay within the GitHub ecosystem for the entire development lifecycle

- Event-driven workflow execution: Easily trigger workflows based on repository events

Understanding GitHub Actions Architecture

At its core, GitHub Actions follows a simple but powerful architecture:

1. Workflows

Workflows are automated procedures defined in YAML files stored in the .github/workflows directory of your repository. Each workflow contains a set of jobs that execute in response to specific events:

    name: Data Processing Pipeline

    on:
      push:
        branches: [ main ]
      schedule:
        - cron: '0 0 * * *' # Run daily at midnight

    jobs:
      process:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'
          - name: Install dependencies
            run: |
              python -m pip install --upgrade pip
              pip install -r requirements.txt
          - name: Process data
            run: python scripts/process_data.py

This structure allows for remarkable flexibility, from simple validation tasks to complex multi-stage pipelines.

2. Events

Events trigger workflow runs. They can originate in the repository (pushes, pull requests, issue comments), from outside it (a repository_dispatch API call), or from a cron schedule. Because a workflow runs only when one of its events fires, runner time is not spent on unrelated activity.

Common events include:

- Code-related: push, pull_request, workflow_dispatch

- Issue and PR interactions: issues, issue_comment, pull_request_review

- Repository changes: create, delete, fork, release

- Scheduled tasks: schedule with cron syntax

- Manual triggers: workflow_dispatch, repository_dispatch
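Scripts started by a workflow step can find out which event triggered the run. The sketch below reads the GITHUB_EVENT_NAME and GITHUB_EVENT_PATH environment variables that GitHub Actions sets on the runner; the function names and the summary wording are illustrative assumptions, not part of any GitHub library.

```python
import json
import os
from typing import Any, Mapping


def load_event(env: Mapping[str, str]) -> tuple[str, dict[str, Any]]:
    """Return (event name, payload) from the variables GitHub Actions sets."""
    name = env.get("GITHUB_EVENT_NAME", "unknown")
    path = env.get("GITHUB_EVENT_PATH", "")
    payload: dict[str, Any] = {}
    if path and os.path.exists(path):
        # The runner stores the webhook payload as a JSON file at this path.
        with open(path, encoding="utf-8") as fh:
            payload = json.load(fh)
    return name, payload


def describe_event(name: str, payload: Mapping[str, Any]) -> str:
    """Build a short, human-readable summary (the wording is illustrative)."""
    if name == "pull_request":
        return f"pull request #{payload.get('number', '?')}"
    if name == "push":
        return f"push to {payload.get('ref', 'unknown ref')}"
    if name == "schedule":
        return "scheduled run"
    return name


if __name__ == "__main__":
    # Outside a runner neither variable is set, so this prints "unknown".
    print(describe_event(*load_event(os.environ)))
```

A data pipeline script might use this to run a full refresh on scheduled runs and a lighter check on pull requests.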

3. Jobs and Steps

Jobs are the execution units within a workflow, each running on a specified environment (a runner). Each job contains steps: individual tasks that either run shell commands or use actions. Jobs run in parallel by default; the needs keyword makes one job wait for another to succeed:

    jobs:
      test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Run tests
            run: npm test

      deploy:
        needs: test
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Deploy to production
            run: ./deploy.sh
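To see how needs shapes execution order, it can help to treat the jobs as a dependency graph. The following is a minimal sketch, not GitHub's scheduler: it uses Python's standard graphlib module to group jobs into "waves" that could run in parallel, and the job names in the example are hypothetical.

```python
from graphlib import TopologicalSorter


def execution_waves(needs: dict[str, list[str]]) -> list[list[str]]:
    """Group jobs into waves; every job in a wave has all its needs satisfied.

    `needs` maps each job name to the jobs it depends on, mirroring the
    `needs:` key in a workflow file.
    """
    sorter = TopologicalSorter(needs)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    # Hypothetical workflow: lint and test run in parallel, deploy waits for both.
    print(execution_waves({"lint": [], "test": [], "deploy": ["lint", "test"]}))
```

Jobs without a needs entry land in the first wave, which matches the default of running jobs in parallel.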

4. Actions

Actions are reusable units of code that perform common tasks. They can be:

- Published in the GitHub Marketplace

- Created in your own repositories

- Referenced from public repositories

This ecosystem of reusable components significantly reduces the effort required to implement complex workflows.

5. Runners

Runners are the execution environments where your workflows run. Two kinds are available:

- GitHub-hosted runners: Ready-to-use VMs with common software preinstalled

- Self-hosted runners: Your own machines registered with GitHub, ideal for specialized hardware needs or security requirements

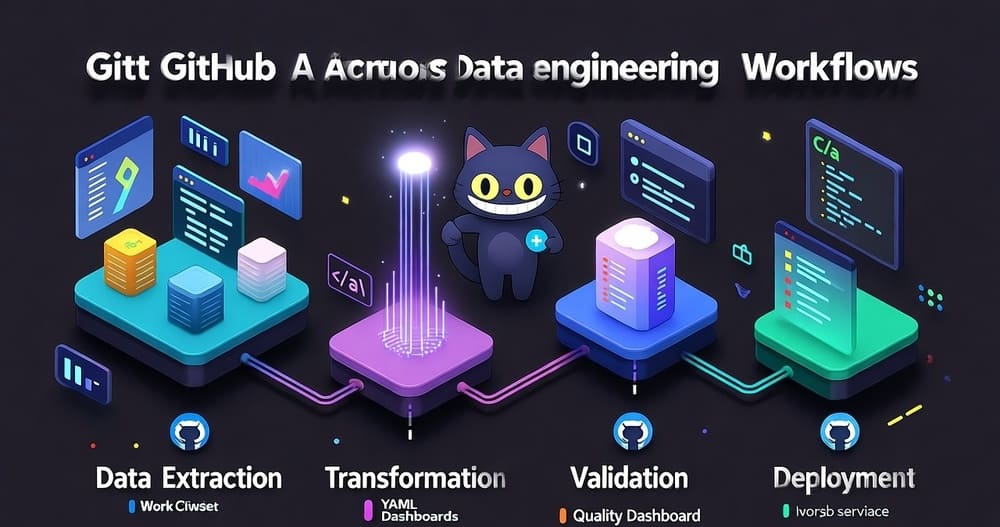

GitHub Actions for Data Engineering Workflows

For data engineers, GitHub Actions offers powerful capabilities to automate data pipelines, quality checks, and deployments:

Data Pipeline Automation

    name: ETL Pipeline

    on:
      schedule:
        - cron: '0 */6 * * *' # Run every 6 hours

    jobs:
      etl_process:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'
          - name: Install dependencies
            run: |
              pip install -r requirements.txt
          - name: Extract data
            run: python scripts/extract.py
          - name: Transform data
            run: python scripts/transform.py
          - name: Load data to warehouse
            run: python scripts/load.py
            env:
              DATABASE_URL: ${{ secrets.DATABASE_URL }}
          - name: Notify on completion
            uses: slackapi/slack-github-action@v1.23.0
            with:
              payload: |
                {"text": "ETL Pipeline completed successfully!"}
            env:
              SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK_URL }}

This workflow automatically runs your ETL process on a schedule, maintaining data freshness without manual intervention.

Data Quality Validation

    name: Data Quality Checks

    on:
      workflow_dispatch: # Manual trigger
      pull_request:
        paths:
          - 'data/**'
          - 'models/**'

    jobs:
      validate:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'
          - name: Install dependencies
            run: pip install great_expectations pandas
          - name: Run data quality checks
            run: |
              great_expectations checkpoint run data_quality_checkpoint
          - name: Upload validation results
            # v3 of the artifact actions no longer runs on github.com
            uses: actions/upload-artifact@v4
            with:
              name: quality-reports
              path: great_expectations/uncommitted/data_docs/

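Because it runs on pull requests that touch data/ or models/, this workflow surfaces quality failures before changes are merged. Marking the check as required in branch protection turns it into a hard gate against data quality regressions.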
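A quality check only blocks a pull request if the command in the step exits with a non-zero status. The sketch below shows that contract in plain Python, without Great Expectations; the function names, the required-column rule, and the sample rows are all hypothetical.

```python
from typing import Iterable, Mapping, Sequence


def find_problems(
    rows: Iterable[Mapping[str, object]], required: Sequence[str]
) -> list[str]:
    """Return one message per missing or empty required value."""
    problems: list[str] = []
    for index, row in enumerate(rows):
        for column in required:
            if row.get(column) in (None, ""):
                problems.append(f"row {index}: missing value for '{column}'")
    return problems


def exit_code(problems: Sequence[str]) -> int:
    """Map validation results to a process exit status (0 = pass, 1 = fail)."""
    return 1 if problems else 0


if __name__ == "__main__":
    sample_rows = [{"id": 1, "amount": 9.5}, {"id": 2, "amount": None}]
    found = find_problems(sample_rows, required=["id", "amount"])
    for message in found:
        print(message)
    # A real script would finish with sys.exit(exit_code(found)) so that
    # the workflow step fails when problems are found.
    print("exit code:", exit_code(found))
```

Keeping the checks in a function that returns messages, separate from the exit status, makes the same logic easy to unit test outside the workflow.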

Machine Learning Model Training and Deployment

    name: ML Model CI/CD

    on:
      push:
        branches: [ main ]
        paths:
          - 'model/**'
          - 'data/training/**'

    jobs:
      train:
        # Custom label; it must match a larger or self-hosted runner
        # that has been configured for your organization
        runs-on: large-runner
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Train model
            run: python model/train.py
          - name: Evaluate model
            run: python model/evaluate.py
          - name: Save model artifacts
            # v3 of the artifact actions no longer runs on github.com
            uses: actions/upload-artifact@v4
            with:
              name: model-artifacts
              path: model/artifacts/

      deploy:
        needs: train
        if: github.ref == 'refs/heads/main'
        runs-on: ubuntu-latest
        environment: production
        steps:
          - uses: actions/checkout@v3
          - name: Download model artifacts
            uses: actions/download-artifact@v4
            with:
              name: model-artifacts
              path: model/artifacts/
          - name: Deploy model to endpoint
            run: python scripts/deploy_model.py
            env:
              AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

This workflow retrains and evaluates the model whenever model code or training data changes on main, then deploys the resulting artifacts. Running every step from a clean checkout keeps the path to production reproducible.

Advanced GitHub Actions Features for Data Projects

1. Matrix Builds for Testing Across Environments

A matrix strategy runs the same job once for every combination of the listed values, in parallel where runners are available:

    jobs:
      test:
        runs-on: ${{ matrix.os }}
        strategy:
          matrix:
            os: [ubuntu-latest, windows-latest]
            python-version: ['3.9', '3.10', '3.11']
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python ${{ matrix.python-version }}
            uses: actions/setup-python@v4
            with:
              python-version: ${{ matrix.python-version }}
          - name: Run tests
            run: pytest

This feature is invaluable for ensuring data processing code works consistently across different environments.
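The number of jobs a matrix produces is the product of the lengths of its lists. As a rough illustration (a sketch of the arithmetic only, ignoring the include and exclude keys), the expansion can be reproduced with itertools.product; the helper name is hypothetical.

```python
from itertools import product


def expand_matrix(matrix: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one dict per combination of matrix values."""
    keys = list(matrix)
    return [
        dict(zip(keys, combination))
        for combination in product(*(matrix[key] for key in keys))
    ]


if __name__ == "__main__":
    jobs = expand_matrix(
        {
            "os": ["ubuntu-latest", "windows-latest"],
            "python-version": ["3.9", "3.10", "3.11"],
        }
    )
    print(len(jobs))  # 2 operating systems x 3 Python versions = 6 jobs
    for job in jobs:
        print(job)
```

Each added dimension multiplies the job count, so it is worth checking the total before adding another axis to a matrix that runs on every push.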

2. Workflow Reuse and Modularization

For complex data projects, you can create reusable workflow components:

    # .github/workflows/reusable-data-validation.yml
    name: Reusable Data Validation

    on:
      workflow_call:
        inputs:
          dataset-path:
            required: true
            type: string

    jobs:
      validate:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Validate dataset
            run: python validate.py ${{ inputs.dataset-path }}

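Then call this workflow from other workflows. A reusable workflow is referenced at the job level, not as a step inside a job:

    jobs:
      process:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Process data
            run: python process.py

      validate:
        needs: process
        # Each job starts on a fresh runner, so data/processed/ must be
        # committed to the repository or passed along as an artifact
        uses: ./.github/workflows/reusable-data-validation.yml
        with:
          dataset-path: 'data/processed/'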

This approach promotes consistency and reduces duplication across data workflows.

3. Environment-Specific Deployments

GitHub Environments provide targeted deployment configurations with optional protection rules:

    jobs:
      deploy_staging:
        runs-on: ubuntu-latest
        environment: staging
        steps:
          - uses: actions/checkout@v3
          - name: Deploy to staging
            run: ./deploy.sh
            env:
              DB_CONNECTION: ${{ secrets.STAGING_DB_CONNECTION }}

      deploy_production:
        needs: deploy_staging
        runs-on: ubuntu-latest
        environment: production
        steps:
          - uses: actions/checkout@v3
          - name: Deploy to production
            run: ./deploy.sh
            env:
              DB_CONNECTION: ${{ secrets.PRODUCTION_DB_CONNECTION }}

This structure ensures careful progression through environments, with appropriate access controls at each stage.

4. Self-Hosted Runners for Data-Intensive Workloads

For data engineering tasks requiring specialized hardware or enhanced security, self-hosted runners offer a powerful solution:

    jobs:
      process_large_dataset:
        runs-on: self-hosted
        steps:
          - uses: actions/checkout@v3
          - name: Process data
            run: python process_big_data.py

These runners can access internal resources securely and provide the computational resources necessary for intensive data processing.

Best Practices for GitHub Actions in Data Engineering

The following practices help keep GitHub Actions workflows for data projects secure, fast, and maintainable:

1. Store Sensitive Information Securely

Use GitHub’s secrets management for sensitive data:

    steps:
      - name: Connect to database
        run: python connect.py
        env:
          DB_PASSWORD: ${{ secrets.DATABASE_PASSWORD }}
          API_KEY: ${{ secrets.API_KEY }}

Never hardcode credentials or sensitive configuration in your workflow files.
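On the script side, secrets passed through env arrive as ordinary environment variables, and a secret that was never configured expands to an empty string. A script can therefore fail fast with a clear message instead of attempting a connection with blank credentials. This is a minimal sketch; the helper and exception names are hypothetical, and the variable names simply mirror the step above.

```python
from typing import Mapping, Sequence


class MissingSecretError(RuntimeError):
    """Raised when a required environment variable is unset or empty."""


def require_env(names: Sequence[str], env: Mapping[str, str]) -> dict[str, str]:
    """Return the requested variables, or raise if any is unset or empty."""
    missing = [name for name in names if not env.get(name)]
    if missing:
        # Report names only; never include secret values in error messages.
        raise MissingSecretError(
            "Missing required environment variables: " + ", ".join(missing)
        )
    return {name: env[name] for name in names}


if __name__ == "__main__":
    # Sample values for illustration; a real script would pass os.environ.
    sample_env = {"DB_PASSWORD": "example-only", "API_KEY": ""}
    try:
        require_env(["DB_PASSWORD", "API_KEY"], sample_env)
    except MissingSecretError as exc:
        print(exc)
```

Passing the environment in as a parameter, rather than reading os.environ inside the function, keeps the check easy to test without touching real credentials.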

2. Implement Thoughtful Caching

For data processing workflows, proper caching improves performance:

    steps:
      - uses: actions/checkout@v3
      - uses: actions/cache@v3
        with:
          path: ~/.cache/pip
          key: ${{ runner.os }}-pip-${{ hashFiles('**/requirements.txt') }}
      - name: Install dependencies
        run: pip install -r requirements.txt
      - uses: actions/cache@v3
        id: data-cache
        with:
          path: .processed_data
          key: processed-data-${{ hashFiles('data/raw/**') }}
      - name: Process data
        # Skip reprocessing when the cache was restored for unchanged raw data
        if: steps.data-cache.outputs.cache-hit != 'true'
        run: python process.py

This approach avoids redundant processing of unchanged data.
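The cache key works because it is derived from file contents: identical inputs give the same key, and any change gives a new one. The sketch below illustrates that idea by hashing each file and then hashing the combined digests. It is an approximation of the concept, not a reimplementation of hashFiles, and is not expected to produce the same values; the function name and sample data are hypothetical.

```python
import hashlib
from typing import Mapping


def content_key(prefix: str, files: Mapping[str, bytes]) -> str:
    """Derive a key from file contents: hash each file, then hash the digests."""
    combined = hashlib.sha256()
    for path in sorted(files):  # sort so the key does not depend on dict order
        combined.update(hashlib.sha256(files[path]).digest())
    return f"{prefix}-{combined.hexdigest()}"


if __name__ == "__main__":
    raw = {"data/raw/a.csv": b"id,amount\n1,9.5\n", "data/raw/b.csv": b"id\n2\n"}
    changed = {**raw, "data/raw/b.csv": b"id\n3\n"}
    print(content_key("processed-data", raw) == content_key("processed-data", raw))
    print(content_key("processed-data", raw) == content_key("processed-data", changed))
```

The first comparison prints True and the second False, which is the property the cache relies on to decide whether stored results are still valid.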

3. Set Appropriate Triggers

Be deliberate about when workflows run to conserve resources:

    on:
      push:
        branches: [ main ]
        paths:
          - 'data/**'
          - 'scripts/**'
          - 'models/**'
      pull_request:
        paths:
          - 'data/**'
          - 'scripts/**'
          - 'models/**'

This configuration ensures the workflow only runs when relevant files change.

4. Implement Comprehensive Monitoring and Notifications

Keep your team informed about data pipeline status:

    steps:
      - name: Run pipeline
        id: pipeline
        run: python pipeline.py
        # Keep going on failure so the notification steps below can run
        continue-on-error: true
      - name: Notify success
        if: steps.pipeline.outcome == 'success'
        uses: slackapi/slack-github-action@v1.23.0
        with:
          channel-id: 'pipeline-alerts'
          slack-message: "Pipeline completed successfully!"
        env:
          SLACK_BOT_TOKEN: ${{ secrets.SLACK_BOT_TOKEN }}
      - name: Notify failure
        if: steps.pipeline.outcome == 'failure'
        uses: slackapi/slack-github-action@v1.23.0
        with:
          channel-id: 'pipeline-alerts'
          slack-message: "⚠️ Pipeline failed! Check the logs: ${{ github.server_url }}/${{ github.repository }}/actions/runs/${{ github.run_id }}"
        env:
          SLACK_BOT_TOKEN: ${{ secrets.SLACK_BOT_TOKEN }}
      - name: Fail the job if the pipeline failed
        # Without this step, continue-on-error would leave the job green
        if: steps.pipeline.outcome == 'failure'
        run: exit 1

This approach ensures timely responses to pipeline issues.
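The link in the failure message can also be built inside a script, since the runner exposes the same values as the GITHUB_SERVER_URL, GITHUB_REPOSITORY, and GITHUB_RUN_ID environment variables. A small sketch follows, with a hypothetical helper name and made-up sample values.

```python
from typing import Mapping


def run_url(env: Mapping[str, str]) -> str:
    """Build the URL of the current workflow run from runner variables."""
    return (
        f"{env['GITHUB_SERVER_URL']}/{env['GITHUB_REPOSITORY']}"
        f"/actions/runs/{env['GITHUB_RUN_ID']}"
    )


if __name__ == "__main__":
    # Sample values for illustration; on a runner, pass os.environ instead.
    sample_env = {
        "GITHUB_SERVER_URL": "https://github.com",
        "GITHUB_REPOSITORY": "octo-org/data-pipeline",
        "GITHUB_RUN_ID": "12345",
    }
    print(run_url(sample_env))
```

This is handy when the pipeline script writes its own log lines or reports and should point readers back to the run that produced them.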

Real-World Example: A Complete Data Engineering Workflow

Let’s explore a comprehensive GitHub Actions workflow for a data engineering project:

    name: End-to-End Data Pipeline

    on:
      schedule:
        - cron: '0 2 * * *' # Run daily at 2 AM
      workflow_dispatch: # Allow manual triggers

    jobs:
      extract:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Extract data from sources
            run: python scripts/extract.py
            env:
              API_KEY: ${{ secrets.API_KEY }}
          - name: Upload raw data
            # v3 of the artifact actions no longer runs on github.com
            uses: actions/upload-artifact@v4
            with:
              name: raw-data
              path: data/raw/
              retention-days: 1

      transform:
        needs: extract
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Download raw data
            uses: actions/download-artifact@v4
            with:
              name: raw-data
              path: data/raw/
          - name: Transform data
            run: python scripts/transform.py
          - name: Upload transformed data
            uses: actions/upload-artifact@v4
            with:
              name: transformed-data
              path: data/transformed/
              retention-days: 1

      validate:
        needs: transform
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Download transformed data
            uses: actions/download-artifact@v4
            with:
              name: transformed-data
              path: data/transformed/
          - name: Validate data quality
            id: validation
            run: python scripts/validate.py
            # Keep going so the report is uploaded even when validation fails
            continue-on-error: true
          - name: Upload validation report
            uses: actions/upload-artifact@v4
            with:
              name: validation-report
              path: reports/validation/
          - name: Check validation result
            if: steps.validation.outcome != 'success'
            run: |
              echo "Data validation failed. Skipping load step."
              exit 1

      load:
        needs: validate
        runs-on: ubuntu-latest
        environment: production
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Download transformed data
            uses: actions/download-artifact@v4
            with:
              name: transformed-data
              path: data/transformed/
          - name: Load data to warehouse
            run: python scripts/load.py
            env:
              WAREHOUSE_CONNECTION: ${{ secrets.WAREHOUSE_CONNECTION }}
          - name: Generate data freshness report
            run: python scripts/generate_freshness_report.py
          - name: Upload data freshness report
            uses: actions/upload-artifact@v4
            with:
              name: data-freshness-report
              path: reports/freshness/

      notify:
        needs: [extract, transform, validate, load]
        # Run even when an earlier job failed or was skipped
        if: always()
        runs-on: ubuntu-latest
        steps:
          - name: Check workflow result
            id: check
            run: |
              if [[ "${{ needs.extract.result }}" == "success" && "${{ needs.transform.result }}" == "success" && "${{ needs.validate.result }}" == "success" && "${{ needs.load.result }}" == "success" ]]; then
                echo "status=success" >> "$GITHUB_OUTPUT"
              else
                echo "status=failure" >> "$GITHUB_OUTPUT"
              fi
          - name: Send notification
            uses: slackapi/slack-github-action@v1.23.0
            with:
              payload: |
                {
                  "text": "Data Pipeline Status: ${{ steps.check.outputs.status == 'success' && 'SUCCESS ✅' || 'FAILURE ❌' }}",
                  "blocks": [
                    {
                      "type": "header",
                      "text": {
                        "type": "plain_text",
                        "text": "Data Pipeline Status: ${{ steps.check.outputs.status == 'success' && 'SUCCESS ✅' || 'FAILURE ❌' }}"
                      }
                    },
                    {
                      "type": "section",
                      "fields": [
                        {
                          "type": "mrkdwn",
                          "text": "*Extract:* ${{ needs.extract.result }}"
                        },
                        {
                          "type": "mrkdwn",
                          "text": "*Transform:* ${{ needs.transform.result }}"
                        },
                        {
                          "type": "mrkdwn",
                          "text": "*Validate:* ${{ needs.validate.result }}"
                        },
                        {
                          "type": "mrkdwn",
                          "text": "*Load:* ${{ needs.load.result }}"
                        }
                      ]
                    },
                    {
                      "type": "section",
                      "text": {
                        "type": "mrkdwn",
                        "text": "View run details: ${{ github.server_url }}/${{ github.repository }}/actions/runs/${{ github.run_id }}"
                      }
                    }
                  ]
                }
            env:
              SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK_URL }}

This workflow ties together a complete ETL pipeline: extraction, transformation, a validation gate that blocks the load step on failure, a load into the production environment, and a Slack notification that reports the result of every job.
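The status check in the notify job is written in shell, but the same logic can live in a Python script if that is easier to test. The sketch below assumes the job results are passed in as a mapping; the function names are hypothetical. It relies on two documented behaviours: a job result is one of success, failure, cancelled, or skipped, and step outputs are set by appending name=value lines to the file named by GITHUB_OUTPUT.

```python
import os
from typing import Mapping


def overall_status(results: Mapping[str, str]) -> str:
    """Return "success" only if every job result is "success"."""
    if all(result == "success" for result in results.values()):
        return "success"
    return "failure"


def format_output(name: str, value: str) -> str:
    """Format a single-line step output as expected in the GITHUB_OUTPUT file."""
    return f"{name}={value}\n"


if __name__ == "__main__":
    # Sample results for illustration: a failed validation skips the load job.
    results = {
        "extract": "success",
        "transform": "success",
        "validate": "failure",
        "load": "skipped",
    }
    line = format_output("status", overall_status(results))
    output_path = os.environ.get("GITHUB_OUTPUT")
    if output_path:
        # On a runner, append so that outputs from earlier commands are kept.
        with open(output_path, "a", encoding="utf-8") as fh:
            fh.write(line)
    else:
        print(line, end="")
```

Treating skipped and cancelled as failures mirrors the shell version, where anything other than four success results reports a failure.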

The Future of GitHub Actions in Data Engineering

Looking ahead, several trends are emerging in how GitHub Actions is evolving to better serve data engineering needs:

- Enhanced compute options: GitHub is expanding the available runner types, including larger memory options crucial for data processing

- Improved artifact handling: Better support for large data artifacts, including compression and selective downloading

- Deeper integration with data tools: Growing ecosystem of actions specific to data engineering tools and platforms

- Advanced visualization and reporting: Enhanced capabilities for presenting data quality reports and pipeline metrics

- Expanded event sources: More sophisticated triggering options, especially for data-related events like dataset updates

Conclusion

GitHub Actions has transformed how data engineering teams approach automation by providing a deeply integrated CI/CD solution within the familiar GitHub environment. Its event-driven architecture, flexible workflow configuration, and rich ecosystem of reusable components make it an ideal platform for automating data pipelines, quality checks, and deployments.

By leveraging GitHub Actions, data engineering teams can achieve:

- Increased reliability through consistent, automated processes

- Enhanced collaboration with workflows directly tied to code changes

- Improved visibility into pipeline status and health

- Greater agility with automated testing and deployment

- Reduced operational overhead by consolidating tools within the GitHub platform

Whether you’re building data transformation pipelines, implementing automated quality checks, or deploying machine learning models, GitHub Actions provides a powerful and flexible foundation for modern data engineering workflows. By following the best practices and patterns outlined in this article, you can leverage GitHub Actions to build robust, efficient, and maintainable data pipelines that scale with your organization’s needs.


Official site: https://github.com/features/actions