Databricks vs. Snowflake

Speed grabs headlines, but in 2025 performance is about more than raw throughput numbers. While vendors battle over benchmark victories and impressive demos, real-world data teams are discovering that performance isn’t just about how fast your queries run. It’s also about how much effort it takes to achieve that speed, how predictable your costs remain as you scale, and whether your architecture actually serves your business needs.

The Databricks vs. Snowflake debate has evolved beyond simple feature comparisons. Both platforms can crunch massive datasets and deliver impressive query performance. But beneath the surface lies a more nuanced reality: these platforms represent fundamentally different philosophies about where complexity should live in your data stack.

Databricks bets that data teams want control—the ability to tune, optimize, and squeeze every ounce of performance from their infrastructure. Snowflake bets that data teams want simplicity—automated optimization that delivers consistent performance without manual intervention.

The choice between them isn’t really about which is “better”—it’s about which philosophy aligns with your team’s capabilities, your workload patterns, and your tolerance for complexity. Let’s explore what drives real value for data teams in 2025.

The Effort Equation: Manual Mastery vs. Automated Intelligence

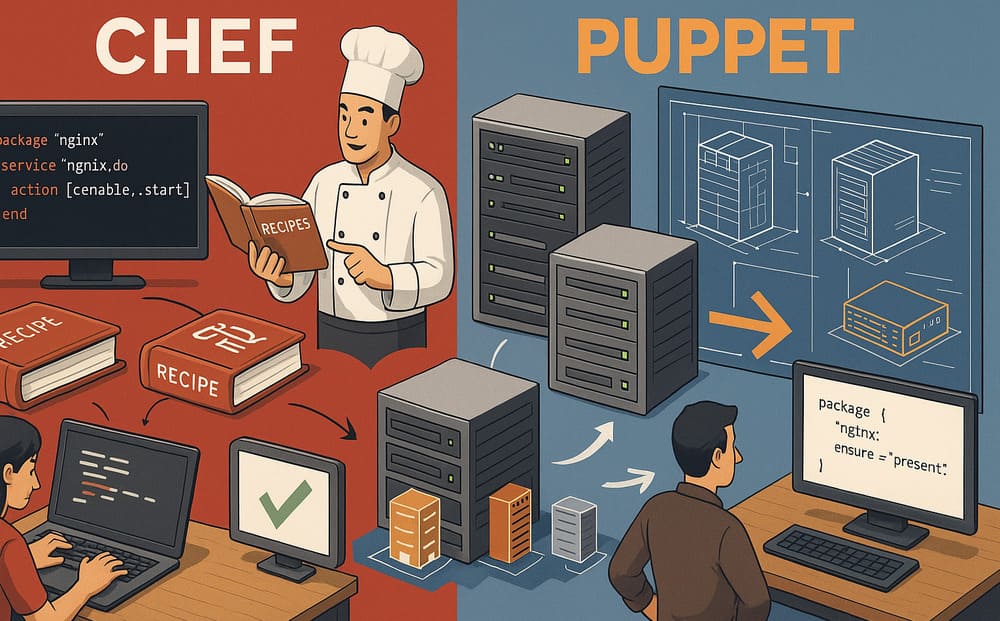

Databricks: The High-Touch Performance Machine

Databricks gives you the keys to a Formula 1 race car. It’s incredibly powerful, but it expects you to know how to drive it. Peak performance requires deep understanding of distributed systems, careful attention to data organization, and ongoing maintenance that can consume significant engineering resources.

The Optimization Burden: Getting optimal performance from Databricks involves a constellation of decisions:

- Partitioning Strategy: Choose the wrong partition columns, and your queries scan unnecessary data. Partition too finely, and small-file problems emerge.

- Z-Ordering: This multi-column data layout technique can dramatically improve query performance by making data skipping more effective, but it’s particularly tricky with smaller datasets (under 1TB), where the benefits don’t always justify the cost of rewriting files.

- Delta Lake Maintenance: Regular VACUUM operations to remove data files the table no longer references, OPTIMIZE commands to compact small files, and careful management of table statistics.

- Spark Configuration: Memory allocation, shuffle partitions, broadcast thresholds—dozens of parameters that affect performance.
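To make Z-ordering less abstract: its core idea is a space-filling curve that keeps rows close together when they are close in several columns at once. The sketch below builds a Morton (Z-order) key by interleaving the bits of two integer column values. It is an illustration of the concept only; `z_order_key` is a hypothetical helper, and Delta Lake’s actual `OPTIMIZE ... ZORDER BY` implementation involves steps this sketch omits.

```python
def z_order_key(x: int, y: int, bits: int = 16) -> int:
    """Interleave the low `bits` bits of x and y into one Morton code.

    Bit i of x lands at position 2*i and bit i of y at position 2*i + 1,
    so rows that are close in both x and y tend to get nearby keys.
    """
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (2 * i)
        key |= ((y >> i) & 1) << (2 * i + 1)
    return key


# Hypothetical rows keyed by two small integer columns (for example an
# encoded customer_id and event_day). Sorting by the Z-order key clusters
# rows that are near each other in BOTH dimensions into the same files.
rows = [(3, 5), (0, 0), (1, 1), (7, 7), (2, 3), (6, 4)]
ordered = sorted(rows, key=lambda r: z_order_key(*r))
print(ordered)  # [(0, 0), (1, 1), (2, 3), (3, 5), (6, 4), (7, 7)]
```

A filter on either column then touches fewer files, because matching rows are concentrated rather than scattered across the table.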

Real-World Complexity: Consider a typical enterprise scenario in which you’re analyzing customer behavior data that grows by 100GB daily. On Databricks, optimal performance requires:

- Initial Setup: Determine optimal partition strategy (by date? by customer segment? by geography?)

- Ongoing Maintenance: Schedule regular OPTIMIZE and VACUUM operations

- Performance Monitoring: Track query patterns to adjust Z-ordering strategies

- Cost Management: Right-size clusters for different workload patterns

- Knowledge Transfer: Ensure team members understand these optimizations

This isn’t necessarily bad—teams that master these techniques can achieve exceptional performance. But it requires dedicated expertise and ongoing attention.
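A back-of-the-envelope sketch shows why the partitioning decision in that scenario matters. It assumes, hypothetically, that the 100GB arrives in 96 fifteen-minute micro-batches, that each batch writes one file into every partition it touches, and that data is spread evenly; the segment and region counts are illustrative, not taken from a real workload.

```python
from dataclasses import dataclass

GB = 1024 ** 3
MB = 1024 ** 2


@dataclass
class Layout:
    name: str
    partitions_per_day: int  # distinct partition values written per day


def avg_file_size_mb(daily_bytes: int, batches_per_day: int, layout: Layout) -> float:
    """Average file size if every batch writes one file per partition.

    Assumes an even spread across partitions; real data is usually skewed,
    which makes the smallest files even smaller.
    """
    files_per_day = batches_per_day * layout.partitions_per_day
    return daily_bytes / files_per_day / MB


DAILY_BYTES = 100 * GB
BATCHES = 96  # hypothetical: one micro-batch every 15 minutes

layouts = [
    Layout("date", 1),
    Layout("date x segment (50)", 50),
    Layout("date x segment (50) x region (20)", 1000),
]

for layout in layouts:
    size = avg_file_size_mb(DAILY_BYTES, BATCHES, layout)
    files = BATCHES * layout.partitions_per_day
    print(f"{layout.name:<36} {files:>7} files/day  ~{size:,.1f} MB avg")
```

Under these assumptions the finest layout writes 96,000 files of roughly 1 MB each per day, which is exactly the small-file problem that scheduled OPTIMIZE runs exist to clean up.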

Snowflake: The Self-Tuning Alternative

Snowflake takes a different approach: hide the complexity behind intelligent automation. Their micro-partitioned, multi-cluster architecture automatically handles many optimizations that require manual intervention in Databricks.

Automatic Optimizations: Snowflake’s performance advantages often come from what you don’t have to do:

- Micro-Partitioning: Automatic partitioning based on ingestion order, with intelligent pruning that eliminates irrelevant data blocks

- Query Optimization: Cost-based optimizer that automatically chooses optimal execution plans

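- Auto-Clustering: Once you define a clustering key, Snowflake’s Automatic Clustering service reorganizes the table in the background as data changes (the service consumes credits)

- Result Caching: Results of repeated identical queries are served from a persisted cache without recomputation, as long as the underlying data hasn’t changed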
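The result-cache decision can be modeled in a few lines. The toy class below is hypothetical and simplified: it reuses a stored result only when the query text matches exactly, the table’s data version is unchanged, and the entry was used within the last 24 hours (Snowflake documents a 24-hour retention that resets on each reuse). The version counter stands in for "the data hasn’t changed", and the model omits Snowflake’s other reuse conditions and its maximum retention cap.

```python
import time
from typing import Callable, Dict, Optional, Tuple

RETENTION_SECONDS = 24 * 60 * 60  # entry expires 24 hours after its last use


class ToyResultCache:
    """Hypothetical result cache keyed on exact query text."""

    def __init__(self, clock: Callable[[], float] = time.time):
        self._clock = clock
        # query text -> (table version at execution, result, last-used timestamp)
        self._entries: Dict[str, Tuple[int, object, float]] = {}

    def lookup(self, query: str, table_version: int) -> Optional[object]:
        entry = self._entries.get(query)
        if entry is None:
            return None
        version, result, last_used = entry
        now = self._clock()
        if version != table_version or now - last_used > RETENTION_SECONDS:
            del self._entries[query]  # stale: data changed or entry expired
            return None
        self._entries[query] = (version, result, now)  # reuse resets the window
        return result

    def store(self, query: str, table_version: int, result: object) -> None:
        self._entries[query] = (table_version, result, self._clock())


clock_now = [0.0]
cache = ToyResultCache(clock=lambda: clock_now[0])
q = "SELECT COUNT(*) FROM events"
cache.store(q, table_version=1, result=42)
print(cache.lookup(q, table_version=1))  # 42: identical query, unchanged data
print(cache.lookup(q, table_version=2))  # None: table changed since caching
```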

The Ease Factor: Using the same customer behavior analysis example, Snowflake’s approach simplifies the workflow:

- Initial Setup: Load data into Snowflake tables (minimal schema design required)

- Ongoing Maintenance: Micro-partitioning is automatic, and tables with a clustering key are reclustered in the background

- Performance Monitoring: Query performance typically stays consistent with little manual tuning

- Cost Management: Auto-suspend and resume features manage compute costs automatically

- Knowledge Transfer: Standard SQL skills are sufficient for most optimizations

The Trade-off: This simplicity comes with less granular control. You can’t fine-tune every aspect of query execution, but for many use cases Snowflake’s automated optimizations match or beat what manual tuning attempts achieve.

Architecture Deep Dive: Smart Engineering vs. Brute Force

Snowflake’s Intelligent Query Engine

Snowflake’s performance edge often comes from architectural decisions that prioritize efficiency over raw power. Their query engine combines several advanced techniques:

Vectorized Execution: Snowflake processes data in columnar format with vectorized operations that can handle multiple values simultaneously. This approach is particularly effective for analytical workloads common in business intelligence.

Cost-Based Optimization: The query optimizer uses table statistics, data distribution, and join patterns to choose an efficient execution plan. A good plan can often compensate for running on fewer compute resources.
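A deliberately tiny illustration of cost-based plan selection: estimate the cost of each candidate join strategy from table statistics and pick the cheapest. The two strategies, the "network bytes moved" cost formulas, and the `choose_join_strategy` name are simplified assumptions; Snowflake’s optimizer considers far more than this and is not modeled here.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

MB = 1024 ** 2
GB = 1024 ** 3


@dataclass
class TableStats:
    name: str
    bytes: int  # estimated table size from statistics


def choose_join_strategy(left: TableStats, right: TableStats,
                         workers: int) -> Tuple[str, Dict[str, int]]:
    """Pick the cheaper of two join strategies by estimated network bytes.

    broadcast: copy the smaller table to every worker
    shuffle:   hash-partition both tables, moving each across the network once
    """
    small = min(left.bytes, right.bytes)
    costs = {
        "broadcast": small * workers,
        "shuffle": left.bytes + right.bytes,
    }
    best = min(costs, key=costs.get)
    return best, costs


orders = TableStats("orders", 500 * GB)
regions = TableStats("regions", 2 * MB)
shipments = TableStats("shipments", 200 * GB)
print(choose_join_strategy(orders, regions, workers=64)[0])    # broadcast
print(choose_join_strategy(orders, shipments, workers=64)[0])  # shuffle
```

The point is that the same query shape gets a different plan depending on statistics, which is work a cost-based optimizer does on your behalf.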

Intelligent Pruning: Each micro-partition stores metadata such as the range of values in each column, allowing the query engine to skip entire micro-partitions that cannot contain matching rows.
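The pruning idea can be sketched in a few lines: keep min/max metadata per micro-partition and skip any partition whose range cannot satisfy the predicate. The `MicroPartition` structure and the sample date ranges are hypothetical, and real micro-partition metadata covers every column and more statistics than min/max.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class MicroPartition:
    partition_id: int
    min_event_date: date
    max_event_date: date


def partitions_to_scan(parts: List[MicroPartition], lo: date, hi: date) -> List[int]:
    """Return IDs of partitions whose [min, max] range overlaps [lo, hi].

    Partitions entirely outside the range are pruned without reading data.
    """
    return [
        p.partition_id
        for p in parts
        if p.max_event_date >= lo and p.min_event_date <= hi
    ]


# Data loaded in date order, so each partition covers a narrow date range.
parts = [
    MicroPartition(1, date(2025, 1, 1), date(2025, 1, 10)),
    MicroPartition(2, date(2025, 1, 11), date(2025, 1, 20)),
    MicroPartition(3, date(2025, 1, 21), date(2025, 1, 31)),
    MicroPartition(4, date(2025, 2, 1), date(2025, 2, 10)),
]

# Equivalent of: WHERE event_date BETWEEN '2025-01-15' AND '2025-01-25'
print(partitions_to_scan(parts, date(2025, 1, 15), date(2025, 1, 25)))  # [2, 3]
```

This also shows why ingestion order matters: data loaded in date order produces tight, non-overlapping ranges, while randomly ordered data gives every partition a range spanning most of the table and defeats pruning.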

Real Performance Example: One benchmark of analytical workloads reported Snowflake’s 64 cores outperforming Databricks’ 224 cores by 20% on a skewed join operation. By that account, the gap wasn’t due to superior hardware; it came from query planning that minimized unnecessary data movement and computation.
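One plausible reason a skewed join can reward smarter planning over more cores: in a hash-partitioned join, every row with the same key lands on the same worker, so the busiest worker sets the pace no matter how many others sit idle. The toy simulation below uses a hypothetical key distribution and simple `key % workers` assignment. It illustrates skew in general and does not reproduce the benchmark mentioned above.

```python
from collections import Counter
from typing import List


def max_partition_rows(keys: List[int], workers: int) -> int:
    """Rows on the busiest worker when rows are assigned by key % workers."""
    loads = Counter(k % workers for k in keys)
    return max(loads.values())


# Hypothetical skewed join-key column: 400,000 rows share key 0 (a "hot" key),
# and the other 600,000 rows spread evenly over keys 1..600,000.
keys = [0] * 400_000 + list(range(1, 600_001))

for workers in (64, 224):
    busiest = max_partition_rows(keys, workers)
    even_split = len(keys) // workers
    print(f"{workers:>3} workers: busiest={busiest:,} rows, even split={even_split:,} rows")
```

Going from 64 to 224 workers barely shrinks the busiest partition (about 409,000 rows versus 403,000), because the hot key cannot be divided by simple hashing. Mitigations such as salting the hot key or splitting skewed partitions attack the cause instead of adding hardware; which of these a given engine applies automatically varies by platform.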

Databricks’ Photon: Power with Complexity

Databricks’ Photon engine represents a significant performance improvement, especially for large-scale data processing. Written in C++ rather than running on the JVM like the rest of Spark, Photon can achieve impressive throughput for well-tuned workloads.

Where Photon Excels

- Large Dataset Processing: Photon’s performance advantages become more pronounced with larger datasets

- Complex Transformations: CPU-intensive operations benefit from Photon’s optimized execution

- Streaming Workloads: Real-time processing scenarios where consistent throughput matters

Where Photon Struggles

- Business Intelligence Workloads: Photon often requires more compute resources than Snowflake for similar BI workloads

- Small to Medium Datasets: The optimization overhead doesn’t always justify the complexity for smaller workloads

- Ad-hoc Analytics: Interactive queries benefit more from intelligent pruning than raw processing power

The CSV Ingestion Revelation

A telling example of architectural differences emerged in one data ingestion test. A simple configuration change in Snowflake (adjusting CSV parsing settings) reduced ingestion time from 67 seconds to 12 seconds, dramatically outpacing Databricks, whose CSV processing ran single-threaded in that test.

This points to a broader pattern: Snowflake’s architecture is optimized for common data engineering tasks out of the box, while Databricks often requires more thoughtful configuration to achieve similar results.
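Why can CSV ingestion end up on a single thread? A file can only be parsed in parallel if it can be cut into independent byte ranges, and gzip-compressed files or records with embedded newlines prevent that. The minimal sketch below shows newline-aligned splitting followed by concurrent parsing. It assumes uncompressed UTF-8 input with no quoted newlines, and `split_on_newlines` and `parallel_parse` are hypothetical helpers, not either platform’s loader.

```python
import csv
import io
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split_on_newlines(data: bytes, chunks: int) -> List[bytes]:
    """Cut `data` into roughly equal pieces, extending each cut to the next newline.

    Assumes no field contains an embedded newline; otherwise a cut could
    land inside a quoted value and corrupt both neighboring chunks.
    """
    size = max(1, len(data) // chunks)
    pieces: List[bytes] = []
    start = 0
    while start < len(data):
        end = start + size
        if end >= len(data):
            end = len(data)
        else:
            nl = data.find(b"\n", end)
            end = len(data) if nl == -1 else nl + 1
        pieces.append(data[start:end])
        start = end
    return pieces


def parse_chunk(chunk: bytes) -> List[List[str]]:
    """Parse one newline-aligned chunk with the standard csv module."""
    return list(csv.reader(io.StringIO(chunk.decode("utf-8"))))


def parallel_parse(data: bytes, workers: int = 4) -> List[List[str]]:
    """Parse chunks concurrently; pool.map keeps results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        chunks = split_on_newlines(data, workers)
        return [row for rows in pool.map(parse_chunk, chunks) for row in rows]


sample = b"id,name\n1,alice\n2,bob\n3,carol\n"
print(parallel_parse(sample, workers=2))
```

Threads keep the example short; in CPython the global interpreter lock limits CPU-bound parsing, so real parallel speedups would need processes or a native parser. The design point is the split itself: once byte ranges are independent, any number of workers can share the file.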

Cost Dynamics: Serverless Simplicity vs. Cluster Complexity

Snowflake’s Transparent Pricing Model

Snowflake’s managed, usage-based compute model creates predictable cost patterns that align with actual usage:

Auto-Suspend Benefits: Warehouses automatically suspend after a configurable idle period, so you pay mostly for active computation plus the idle time before suspension. Even the largest warehouses (6X-Large, billed at 512 credits per hour) drop to zero compute cost while suspended.

Predictable Scaling: Need more performance? Scale up your warehouse size. Need less? Scale down. Each size step doubles the hourly credit rate, so cost per hour is predictable, and for work that parallelizes well the runtime roughly halves, keeping cost per job roughly flat.

Hidden Efficiencies: Snowflake’s shared storage architecture means multiple warehouses can access the same data without duplication, reducing storage costs compared to compute-coupled architectures.
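Auto-suspend does not make idle time free: the warehouse keeps billing until the suspend timer fires, and Snowflake bills warehouses per second with a 60-second minimum each time one resumes. The sketch below models that under simplifying assumptions: suspension happens exactly at the timeout, and `billed_seconds`, `credits`, and the query timings are hypothetical.

```python
from typing import List, Optional, Tuple

MIN_BILLED_SECONDS = 60  # each resume is billed for at least one minute


def billed_seconds(queries: List[Tuple[int, int]], auto_suspend: int) -> int:
    """Billed running time for a warehouse serving `queries`.

    `queries` are (start, end) times in seconds, sorted by start. The
    warehouse resumes on demand and suspends after `auto_suspend` idle
    seconds. Each running session is billed per second, minimum 60 s.
    """
    total = 0
    session_start: Optional[int] = None
    session_end: Optional[int] = None
    for start, end in queries:
        if session_end is not None and start <= session_end:
            session_end = max(session_end, end + auto_suspend)  # still running
        else:
            if session_start is not None and session_end is not None:
                total += max(MIN_BILLED_SECONDS, session_end - session_start)
            session_start, session_end = start, end + auto_suspend
    if session_start is not None and session_end is not None:
        total += max(MIN_BILLED_SECONDS, session_end - session_start)
    return total


def credits(seconds: int, credits_per_hour: float) -> float:
    """Convert billed seconds to credits at a given hourly rate."""
    return seconds / 3600 * credits_per_hour


# Three hypothetical 30-second queries spread over about 1.5 hours.
queries = [(0, 30), (1000, 1030), (5000, 5030)]
for timeout in (60, 600):
    secs = billed_seconds(queries, auto_suspend=timeout)
    print(f"auto_suspend={timeout}s -> {secs} s billed, {credits(secs, 1):.3f} credits on X-Small")
```

In this model, 90 seconds of actual query work is billed as 270 seconds with a 60-second timeout and 1,890 seconds with a 600-second timeout, so the suspend setting is a real cost lever for sporadic workloads.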
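Warehouse credit rates double with each size step (X-Small consumes 1 credit per hour, 6X-Large 512), so cost per hour scales predictably; whether cost per job stays flat depends on how well the job parallelizes. The sketch below makes that explicit with a hypothetical `scaling_efficiency` parameter, where 1.0 means runtime halves perfectly with each doubling of compute.

```python
CREDITS_PER_HOUR = {
    "X-Small": 1, "Small": 2, "Medium": 4, "Large": 8, "X-Large": 16,
    "2X-Large": 32, "3X-Large": 64, "4X-Large": 128, "5X-Large": 256, "6X-Large": 512,
}


def job_credits(size: str, xsmall_runtime_s: float, scaling_efficiency: float) -> float:
    """Credits for one job under a hypothetical scaling model.

    Compute relative to X-Small equals the credit multiplier; runtime is
    assumed to shrink by factor ** scaling_efficiency.
    """
    factor = CREDITS_PER_HOUR[size]
    runtime_s = xsmall_runtime_s / factor ** scaling_efficiency
    return runtime_s / 3600 * CREDITS_PER_HOUR[size]


# A job that takes one hour on X-Small, under two scaling assumptions.
for eff in (1.0, 0.7):
    row = {size: round(job_credits(size, 3600, eff), 2) for size in ("X-Small", "Medium", "X-Large")}
    print(f"efficiency={eff}: {row}")
```

With perfect scaling every size costs 1 credit for this job; with imperfect scaling, larger warehouses finish sooner but cost more per job, which is the trade-off to weigh when sizing up.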

Databricks’ DBU Complexity

Databricks’ compute pricing through Databricks Units (DBUs) creates more complex cost dynamics:

Cluster Sizing Challenges: Achieving consistent SLA performance often requires oversized cluster configurations, leading to higher baseline costs even for variable workloads.

Sprawl Risk: Different workload types (batch processing, streaming, ML training) often require separate, specialized clusters, leading to resource sprawl and cost complexity.

Optimization Tax: The performance optimizations that make Databricks shine (pre-warmed clusters, optimized instance types, reserved capacity) often require upfront investment and careful capacity planning.
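Part of the DBU complexity is that there are two meters. For classic (non-serverless) compute, you pay Databricks for DBUs and, separately, your cloud provider for the underlying VMs. The rough estimator below follows that structure; `ClusterSpec`, `cluster_cost_usd`, and every rate in it are hypothetical placeholders, not published prices.

```python
from dataclasses import dataclass


@dataclass
class ClusterSpec:
    workers: int
    dbu_per_node_hour: float     # depends on instance type (placeholder value below)
    vm_usd_per_node_hour: float  # cloud provider charge, billed separately


def cluster_cost_usd(spec: ClusterSpec, hours: float, usd_per_dbu: float) -> float:
    """Approximate classic-cluster cost: Databricks DBUs plus cloud VMs.

    The node count includes the driver. Real rates depend on cloud,
    region, pricing tier, and compute type.
    """
    nodes = spec.workers + 1  # driver + workers
    dbu_cost = nodes * spec.dbu_per_node_hour * hours * usd_per_dbu
    vm_cost = nodes * spec.vm_usd_per_node_hour * hours
    return dbu_cost + vm_cost


spec = ClusterSpec(workers=8, dbu_per_node_hour=2.0, vm_usd_per_node_hour=0.50)
print(f"4h batch window: ${cluster_cost_usd(spec, 4, usd_per_dbu=0.15):.2f}")
print(f"left up 24h:     ${cluster_cost_usd(spec, 24, usd_per_dbu=0.15):.2f}")
```

With these placeholder rates the same cluster costs six times as much when left running all day, which is why right-sizing and idle management carry so much weight on this model.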

Real-World Cost Scenario: Consider a data team running mixed workloads:

- Daily batch processing (4 hours)

- Interactive analytics (8 hours, sporadic)

- ML model training (2 hours, weekly)

Snowflake Approach:

- Single warehouse that auto-suspends between workloads

- Predictable per-second billing, with a 60-second minimum each time the warehouse resumes

- No idle costs beyond the configured auto-suspend window

Databricks Approach:

- Separate clusters for batch, interactive, and ML workloads

- Potential idle time between workloads unless cluster auto-termination is tuned

- Complex optimization to minimize waste

Workload Fit: Choosing Your Battles

Snowflake’s Sweet Spots

SQL-First Analytics: Snowflake excels when your primary workload is SQL-based analysis. Business analysis, financial reporting, and traditional BI use cases benefit from Snowflake’s optimization for analytical queries.

Compliance and Governance: Features like column-level security, dynamic data masking, and comprehensive audit trails make Snowflake attractive for regulated industries. Financial services teams particularly appreciate Snowflake’s encryption and compliance certifications.

Data Sharing and Collaboration: Snowflake’s data sharing capabilities allow secure data distribution without copying, making it ideal for organizations that need to share data with partners or across business units.

Semi-Structured Data Handling: The VARIANT data type elegantly handles JSON and Avro data, allowing SQL-based analysis of complex nested structures without complex ETL processes.
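To make "SQL-based analysis of nested structures" concrete: in Snowflake you can address a VARIANT field with path syntax (for a hypothetical column named `payload`, something like `payload:customer.id`) and explode arrays with `FLATTEN`. The Python sketch below mimics only the idea, turning a nested JSON document into path/value pairs; `flatten_paths` is a hypothetical helper, not a Snowflake API.

```python
import json
from typing import Any, Dict


def flatten_paths(value: Any, prefix: str = "") -> Dict[str, Any]:
    """Map every leaf in a nested JSON value to a path string.

    Object keys become `.key` segments and array elements become `[index]`.
    """
    out: Dict[str, Any] = {}
    if isinstance(value, dict):
        for key, child in value.items():
            out.update(flatten_paths(child, f"{prefix}.{key}" if prefix else key))
    elif isinstance(value, list):
        for i, child in enumerate(value):
            out.update(flatten_paths(child, f"{prefix}[{i}]"))
    else:
        out[prefix] = value
    return out


event = json.loads(
    '{"customer": {"id": 7, "tier": "gold"}, "items": [{"sku": "A1"}, {"sku": "B2"}]}'
)
for path, leaf in flatten_paths(event).items():
    print(path, "=", leaf)
```

Each printed path corresponds to something you could select or filter on directly, which is what lets analysts query raw JSON without first designing a relational schema for it.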

Databricks’ Advantages

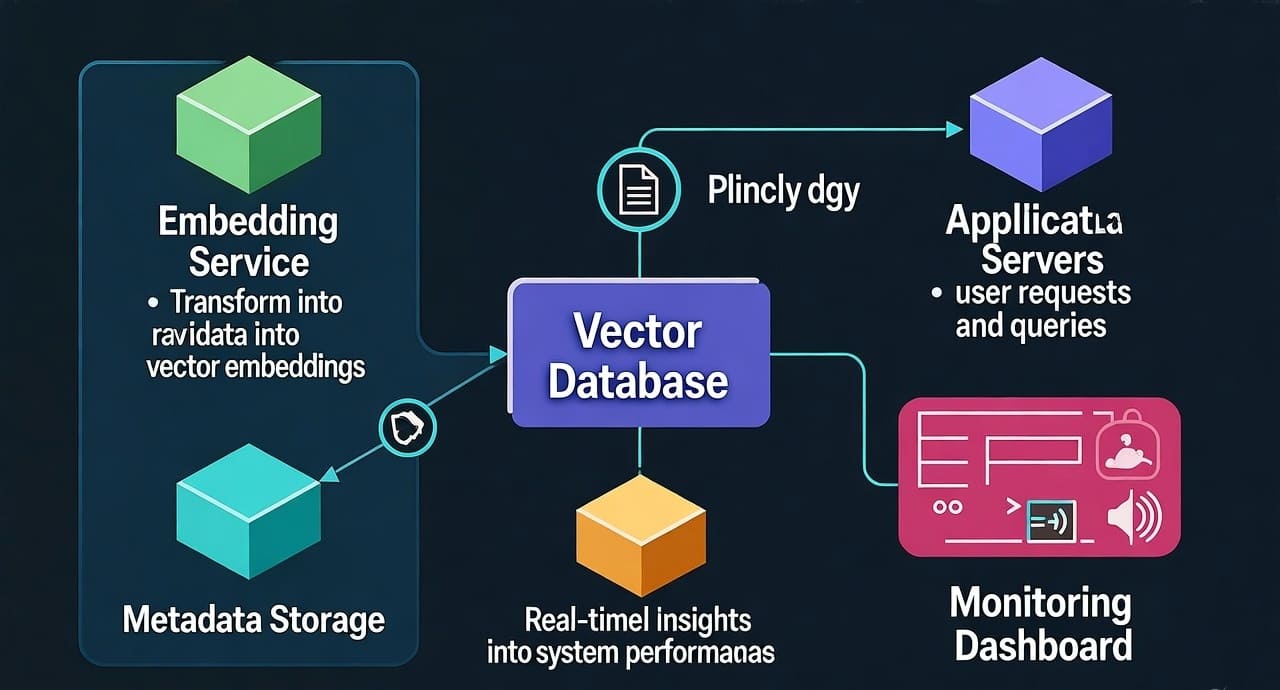

Streaming and Real-Time Processing: Databricks’ foundation on Apache Spark, including Spark Structured Streaming, makes it superior for streaming workloads, real-time data processing, and scenarios requiring low-latency data pipelines.
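Spark Structured Streaming processes streams as a series of small micro-batches by default and supports windowed aggregations over event time. The plain-Python sketch below shows the tumbling-window counting idea applied batch by batch; it is not the Spark API, and the events, the 60-second window, and `update_counts` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

WINDOW_SECONDS = 60


def update_counts(state: Dict[Tuple[int, str], int],
                  batch: Iterable[Tuple[int, str]]) -> None:
    """Fold one micro-batch of (event_time, user) events into running counts.

    Each event goes to the tumbling window starting at
    event_time - (event_time % WINDOW_SECONDS).
    """
    for event_time, user in batch:
        window_start = event_time - event_time % WINDOW_SECONDS
        state[(window_start, user)] += 1


state: Dict[Tuple[int, str], int] = defaultdict(int)
micro_batches: List[List[Tuple[int, str]]] = [
    [(5, "alice"), (42, "bob"), (59, "alice")],
    [(61, "alice"), (75, "bob"), (30, "bob")],  # (30, "bob") arrives late
]
for batch in micro_batches:
    update_counts(state, batch)

print(dict(state))
```

A real engine also has to decide how long to keep old windows open for late events (Spark calls this a watermark) and how to checkpoint state for recovery, which is where much of the engineering effort in streaming goes.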

Machine Learning Lifecycle: MLflow, Unity Catalog, and integrated notebook environments create a comprehensive ML platform that’s difficult to replicate in Snowflake.

Unstructured Data Analysis: Image processing, natural language processing, and other unstructured data workloads benefit from Databricks’ Python and Scala support.

Complex Data Engineering: When you need fine-grained control over data processing logic, custom transformations, or integration with specialized libraries, Databricks provides more flexibility.

Engineering-Heavy Teams: Organizations with strong data engineering capabilities can leverage Databricks’ flexibility to build highly optimized, custom solutions.

The Hidden Costs of Complexity

Databricks: The Engineering Tax

Skill Requirements: Maximizing Databricks performance requires expertise in:

- Apache Spark optimization techniques

- Delta Lake best practices

- Cluster configuration and management

- Python/Scala programming for custom solutions

- Distributed systems troubleshooting

Ongoing Maintenance: Peak performance requires continuous attention:

- Monitoring cluster utilization and right-sizing

- Optimizing data layouts and partitioning strategies

- Managing library dependencies and version compatibility

- Troubleshooting performance regressions

Knowledge Transfer Risk: Databricks implementations often rely on specialized knowledge held by individual team members. When these experts leave, performance can degrade until replacement expertise is developed.

Snowflake: The Simplicity Premium

Lower Skill Barriers: Getting good performance from Snowflake mostly requires standard SQL skills, which are widely available in the job market. This reduces hiring complexity and knowledge transfer risks.

Reduced Operational Overhead: Automatic optimization means less time spent on performance tuning and more time available for actual data analysis and business value creation.

Predictable Performance: Snowflake’s consistent performance characteristics reduce the need for extensive testing and optimization when deploying new workloads.

2025 Performance Reality Check

Beyond Speed: Total Cost of Performance

Real-world performance in 2025 isn’t just about query execution time—it’s about the total effort required to achieve and maintain that performance.

The True Performance Equation:

$$
\text{Real Performance} = \frac{\text{Query Speed} \times \text{Consistency}}{\text{Engineering Effort} + \text{Ongoing Maintenance} + \text{Cost Variability}}
$$

By this measure, Snowflake often delivers superior “real performance” even when raw query times are similar to Databricks’, because the denominator (total effort and complexity) is significantly lower.
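The formula is a way of thinking rather than something you can measure directly, but it can be made concrete by scoring each term on a common scale. The sketch below does that with entirely hypothetical 1 to 10 scores for two unnamed setups. It shows how a smaller denominator can outweigh faster raw speed, not that either platform actually scores this way.

```python
from dataclasses import dataclass


@dataclass
class Scores:
    """Hypothetical 1-10 scores: higher speed and consistency are better,
    higher effort, maintenance, and cost variability are worse."""
    query_speed: float
    consistency: float
    engineering_effort: float
    ongoing_maintenance: float
    cost_variability: float


def real_performance(s: Scores) -> float:
    """(Query Speed x Consistency) / (Effort + Maintenance + Cost Variability)."""
    burden = s.engineering_effort + s.ongoing_maintenance + s.cost_variability
    return (s.query_speed * s.consistency) / burden


heavily_tuned = Scores(query_speed=9, consistency=6, engineering_effort=8,
                       ongoing_maintenance=7, cost_variability=6)
mostly_automated = Scores(query_speed=7, consistency=8, engineering_effort=3,
                          ongoing_maintenance=2, cost_variability=3)

print(round(real_performance(heavily_tuned), 2))     # 2.57
print(round(real_performance(mostly_automated), 2))  # 7.0
```

The faster setup loses here only because its burden terms are assumed to be much larger; plug in your own team’s honest estimates and the ranking can easily flip.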

Smart Scaling vs. Brute Force

The most successful data teams in 2025 focus on intelligent scaling rather than maximum throughput:

Smart Scaling Characteristics:

- Performance that grows predictably with workload

- Costs that align with business value delivered

- Minimal manual intervention required

- Consistent performance across different query patterns

Brute Force Scaling Problems:

- High performance requires constant tuning

- Cost growth outpaces business value

- Performance varies significantly based on optimization quality

- Heavy dependency on specialized expertise

ROI-Focused Performance

Modern data teams increasingly evaluate performance through an ROI lens:

Questions That Matter:

- How much engineering time does optimal performance require?

- What’s the business impact of performance variations?

- How does performance scale with team size and complexity?

- What’s the total cost of ownership including operational overhead?

Decision Framework: Choosing Your Platform

When Snowflake Makes Sense

Ideal Scenarios:

- SQL-heavy analytical workloads

- Business analyst-heavy teams

- Regulatory compliance requirements

- Need for predictable costs and performance

- Limited data engineering resources

- Emphasis on data sharing and collaboration

Team Characteristics:

- Strong SQL skills, limited programming expertise

- Preference for managed services over custom solutions

- Focus on business analysis over infrastructure optimization

- Small to medium data engineering teams

When Databricks Excels

Ideal Scenarios:

- Machine learning and AI workloads

- Streaming and real-time processing

- Complex data transformations

- Unstructured data analysis

- Need for fine-grained performance control

- Custom algorithm implementation

Team Characteristics:

- Strong programming skills (Python, Scala, Java)

- Data engineering expertise and capacity

- Willingness to invest in optimization

- Large, specialized data teams

- Focus on building differentiated data products

The Hybrid Reality

Many organizations don’t choose exclusively—they use both platforms for different use cases:

Common Hybrid Patterns:

- Databricks for data engineering and ML model training

- Snowflake for business intelligence and reporting

- Databricks for streaming data processing

- Snowflake for data sharing and collaboration

This approach maximizes the strengths of each platform while avoiding their weaknesses.

Key Decision Questions

Before choosing between Databricks and Snowflake, honestly assess your situation:

Team Capability Assessment

- Can your team handle Databricks’ tuning requirements, or does Snowflake’s automation save valuable time?

- What’s your current expertise level with distributed systems and Spark optimization?

- How much time can you dedicate to performance tuning vs. business analysis?

Budget and Cost Model

- Can your budget absorb Databricks’ potential for cost sprawl, or do you need Snowflake’s more predictable pricing?

- What’s your tolerance for variable costs based on optimization quality?

- How important is cost predictability for your planning processes?

Workload Alignment

- SQL-focused analytics or Spark-based data engineering—which better fits your primary needs?

- What percentage of your workload is traditional BI vs. advanced analytics?

- Do you need real-time processing capabilities?

Growth and Evolution

- How will your workload patterns change as you scale?

- What’s your timeline for developing advanced data engineering capabilities?

- How important is flexibility for future, unknown requirements?

The 2025 Performance Takeaway

Performance in 2025 is about intelligent scaling and ROI optimization, not just raw speed metrics. The platforms that deliver the best “real performance” are those that align with your team’s capabilities and business objectives.

Snowflake offers a low-maintenance, analyst-friendly solution that delivers consistent performance through intelligent automation. It excels when you want to focus on data analysis rather than infrastructure optimization.

Databricks provides powerful capabilities for AI, streaming, and complex data engineering, but shifts significant optimization responsibility to users. It excels when you have the expertise to leverage its flexibility and the business requirements that justify the additional complexity.

The choice isn’t about which platform is objectively better—it’s about which platform better serves your specific context, team capabilities, and business objectives.

Your Performance Story

The real test of any platform isn’t benchmark results—it’s how it performs in your specific environment with your team and your workloads.

Which platform drives your data wins?

Have you found Snowflake’s automation saves enough engineering time to justify potentially higher per-query costs? Or has Databricks’ flexibility allowed you to build solutions that wouldn’t be possible on Snowflake?

Share your experience:

- What performance surprises have you discovered?

- Where do benchmark promises meet real-world reality?

- How has your platform choice affected your team’s productivity and job satisfaction?

- What would you choose differently if starting over today?

Your real-world insights help the entire data community make better platform decisions. The performance edge that matters most is the one that works for your specific situation—not the one that looks best on paper.
