Data API in Production: Connectionless Patterns for Lambda & Containers

IAM auth, Secrets Manager flows, retries, and hard limits—no fairy tales.

Introduction — why this matters

You ship a bursty Lambda fleet or spiky containers behind an API. Traffic pops from 50 RPS to 3,000 in seconds. Traditional DB client pools melt because thousands of short-lived workers fight for a finite number of database sockets. You don’t want to babysit credentials either. “Connectionless” patterns—HTTP-style database access or on-demand auth tokens—let you scale requests without multiplying connections or hoarding passwords. This is how to do it in production.

Architecture — two practical “connectionless” paths

1) RDS Data API (HTTP to Aurora Serverless/Provisioned)

- Your code calls a regional HTTPS endpoint; AWS fans the query to the DB. No client TCP connection, no warm pool to manage.

- Wins: spiky Lambda traffic, zero drivers, simple auth via IAM.

- Watchouts: per-request latency, payload/size/throughput quotas; treat it like any external API (retry/jitter, idempotency keys). See AWS limits & throttling guidance. (AWS Documentation)

2) IAM Database Authentication + (optionally) RDS Proxy

- Your Lambda/container requests a short-lived auth token (SigV4) instead of storing a password. Token lifetime is 15 minutes. (AWS Documentation)

- With RDS Proxy in front, thousands of workers reuse a controlled pool of DB connections; you cap max backend sockets and tune idle/percent limits. (AWS Documentation)

- Wins: real drivers, full SQL features, central connection control.

- Watchouts: token refresh cycles, misconfigured proxy caps during traffic spikes.

End-to-end request flow (Lambda & ECS/EKS)

Secrets path (preferred for passwords or app keys):

- Code requests secret value (cached in memory) → AWS Secrets Manager.

- Use secret to auth (or to sign a token call).

- Cache aggressively; respect service P50s and regional quotas. (As of 2025, `GetSecretValue` supports very high RPS, but you should still cache.) (Amazon Web Services, Inc.)
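The caching step in the secrets path can be sketched as a small TTL cache. This is a minimal illustration, not a drop-in library: `SecretCache`, the `fetch` callable (which would wrap your Secrets Manager read), and the 300-second default are all assumptions made for the example.

```python
import time
from typing import Callable, Dict, Tuple


class SecretCache:
    """Tiny in-memory TTL cache; create one per Lambda container or process."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch      # hypothetical: wraps your Secrets Manager read
        self._ttl = ttl_seconds  # assumption: 5 minutes, tune to your rotation policy
        self._clock = clock      # injectable so the cache can be tested without sleeping
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, secret_id: str) -> str:
        now = self._clock()
        hit = self._entries.get(secret_id)
        if hit is not None and now < hit[0]:
            return hit[1]                      # still fresh: no network call
        value = self._fetch(secret_id)         # expired or missing: fetch once
        self._entries[secret_id] = (now + self._ttl, value)
        return value

    def invalidate(self, secret_id: str) -> None:
        """Drop an entry, for example after an auth failure that suggests rotation."""
        self._entries.pop(secret_id, None)
```

Create the cache at module scope (outside the handler) so warm invocations reuse it; calling `invalidate` on an auth failure forces the next read to pick up a rotated secret.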

IAM auth path (no passwords):

- Code calls `rds.generate_db_auth_token(...)` to mint a 15-minute token. (AWS Documentation)

- Connect via RDS Proxy (recommended for Lambda) or directly to RDS using the token.

- Re-mint before expiration or on 1045/28P01 auth errors.
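The re-mint rule above can be sketched as a small token cache. Treat it as an illustration under stated assumptions: `TokenCache`, `is_auth_error`, the `mint` callable (which would wrap `generate_db_auth_token`), and the 60-second margin are names and values chosen for this example.

```python
import time
from typing import Callable, Optional

TOKEN_LIFETIME_S = 15 * 60   # IAM DB auth tokens are valid for 15 minutes
REFRESH_MARGIN_S = 60        # assumption: re-mint one minute before expiry

# MySQL error 1045 and PostgreSQL SQLSTATE 28P01 both signal failed authentication.
AUTH_ERROR_CODES = {"1045", "28P01"}


class TokenCache:
    def __init__(self, mint: Callable[[], str],
                 clock: Callable[[], float] = time.time):
        self._mint = mint        # hypothetical: wraps rds.generate_db_auth_token(...)
        self._clock = clock
        self._token: Optional[str] = None
        self._minted_at = 0.0

    def get(self) -> str:
        age = self._clock() - self._minted_at
        if self._token is None or age >= TOKEN_LIFETIME_S - REFRESH_MARGIN_S:
            self._token = self._mint()
            self._minted_at = self._clock()
        return self._token

    def invalidate(self) -> None:
        """Force a re-mint on the next get(), e.g. after an auth error."""
        self._token = None


def is_auth_error(code: object) -> bool:
    """True for the MySQL/PostgreSQL codes that should trigger a re-mint."""
    return str(code) in AUTH_ERROR_CODES
```

On an error where `is_auth_error(code)` is true, call `invalidate()`, fetch a new token with `get()`, and reconnect once before giving up.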

Retry path (everything is an API now):

- Treat Data API, Secrets Manager, and your own API Gateway as external services: exponential backoff with jitter and idempotency safeguards. Lambda’s async invokes add their own retries (two by default for async), plus a long-lived internal queue; know how that stacks with your retries. (AWS Documentation)
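To see how retries stack, a quick worst-case count helps. The helper below is a back-of-the-envelope sketch (the function name is made up for this example); it assumes every attempt fails and that each Lambda attempt runs the full app-level retry loop.

```python
def worst_case_calls(app_attempts: int, lambda_async_retries: int = 2) -> int:
    """Upper bound on downstream calls for one async event if every attempt fails."""
    lambda_attempts = 1 + lambda_async_retries  # first invoke plus async retries
    return lambda_attempts * app_attempts


# Six app-level attempts under the default two async retries:
print(worst_case_calls(6))  # 18
```

Eighteen calls for a single event is the kind of multiplier that turns a brief throttle into a retry storm, which is why the total needs a cap somewhere.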

A crisp comparison

| Concern | Data API | IAM Auth + RDS Proxy |
|---|---|---|
| Connection mgmt | None (HTTPS request) | Centralized pool; you cap backends (AWS Documentation) |
| Credentials | IAM for the caller; DB credentials in a Secrets Manager secret (`secretArn`) | IAM token (15 min) or password in Secrets Manager (AWS Documentation) |
| Cold starts | Minimal client init | Keep driver global, proxy hides DB handshakes |
| Throughput scaling | Constrained by API quotas; add retries/jitter (AWS Documentation) | Constrained by proxy/backends; tune max/idle % (AWS Documentation) |
| Dev ergonomics | No driver, JSON in/out | Full SQL/driver features |
| Gotchas | Payload size, statement timeouts, per-account throttling | Token rotation, idle timeouts, mis-sized pools |

Production snippets (Python)

A) Lambda with RDS Data API (Aurora) — minimal client, safe retries

    import os, random, time

    import boto3
    from botocore.config import Config
    from botocore.exceptions import ClientError

    # Disable SDK retries; the loop below owns backoff.
    rds = boto3.client("rds-data", config=Config(retries={"max_attempts": 0}))

    ARN_DB = os.environ["DB_ARN"]
    ARN_SEC = os.environ["SECRET_ARN"]
    DB_NAME = os.environ["DB_NAME"]

    # Error codes treated as throttling (assumption: adjust to what you observe).
    RETRYABLE = {"ThrottlingException", "TooManyRequestsException"}

    def _sleep_backoff(attempt):
        base = min(0.25 * (2 ** attempt), 5.0)  # exponential step, capped at 5 s
        time.sleep(random.uniform(0, base))     # full jitter

    def exec_sql(sql, params=None, max_tries=6):
        for attempt in range(max_tries):
            try:
                return rds.execute_statement(
                    resourceArn=ARN_DB,
                    secretArn=ARN_SEC,
                    database=DB_NAME,
                    sql=sql,
                    parameters=params or [],
                )
            except ClientError as e:
                # Checking the code works whether or not the SDK models the exception.
                code = e.response["Error"]["Code"]
                if code == "BadRequestException":
                    raise RuntimeError(f"SQL error: {e}") from e  # non-retryable
                if code not in RETRYABLE:
                    raise
                _sleep_backoff(attempt)  # throttled: back off with jitter
        raise TimeoutError("RDS Data API throttled too long")

Why: You own backoff/jitter because the Data API is a regional HTTPS service with its own throttling; don’t rely solely on the default SDK retries. (AWS Documentation)
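The `parameters` argument expects the Data API's typed name/value shape rather than a plain dict. A small converter, sketched below, keeps call sites readable. The function name is hypothetical and only scalar types are handled; arrays and type hints are left out.

```python
from typing import Any, Dict, List


def to_data_api_params(values: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Convert {"name": python_value} into the Data API `parameters` list."""
    params = []
    for name, v in values.items():
        if v is None:
            field = {"isNull": True}
        elif isinstance(v, bool):      # check bool before int: bool subclasses int
            field = {"booleanValue": v}
        elif isinstance(v, int):
            field = {"longValue": v}
        elif isinstance(v, float):
            field = {"doubleValue": v}
        elif isinstance(v, bytes):
            field = {"blobValue": v}
        else:
            field = {"stringValue": str(v)}
        params.append({"name": name, "value": field})
    return params
```

With the `exec_sql` helper from snippet A, a call might look like `exec_sql("SELECT * FROM orders WHERE id = :id", to_data_api_params({"id": 42}))`, where `orders` is a placeholder table.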

B) Containers/Lambda with IAM token + RDS Proxy

    import os, time

    import boto3
    import pymysql

    region = os.environ["AWS_REGION"]
    host = os.environ["DB_ENDPOINT"]  # RDS Proxy endpoint
    user = os.environ["DB_USER"]
    dbname = os.environ["DB_NAME"]
    PORT = 3306

    rds = boto3.client("rds", region_name=region)

    def token():
        return rds.generate_db_auth_token(
            DBHostname=host, Port=PORT, DBUsername=user, Region=region
        )  # valid ~15 minutes

    _conn = None
    _exp = 0

    def get_conn():
        global _conn, _exp
        now = time.time()
        if not _conn or now > _exp - 60:
            if _conn:
                try:
                    _conn.close()  # don't leak the old connection
                except pymysql.Error:
                    pass           # already closed or broken
            _exp = now + 14 * 60   # recycle the connection about every 13 minutes
            # A fresh token is minted for each new connection.
            # ssl={"ssl": {}} turns TLS on without verification; pass ssl_ca=<CA bundle path> to verify.
            _conn = pymysql.connect(host=host, port=PORT, user=user, password=token(),
                                    database=dbname, ssl={"ssl": {}})
        return _conn

Why: Refresh before the 15-minute deadline; keep the connection global (outside handler) to minimize cold-start tax; let RDS Proxy cap backend sockets. (AWS Documentation)

Hard limits & behaviors you must design for

- Secrets Manager:

`GetSecretValue` & `DescribeSecret` have very high RPS ceilings today, but you should still cache in memory (e.g., 5–10 minutes) and apply exponential backoff on throttling errors. (Amazon Web Services, Inc.)

- IAM DB Auth: tokens expire 15 minutes after issue. The token is checked when a connection is opened, so mint a fresh one for every new connection or reconnect rather than reusing one that may have aged out. (AWS Documentation)

- API Gateway/Data API: expect throttling; implement idempotency keys and full-jitter backoff; push heavy writes to queues (SQS/SNS/EventBridge) to smooth bursts. (AWS Documentation)

- Lambda async: AWS will retry failed async invocations twice by default and queue for hours; combine with your app-level retries carefully to avoid retry storms; use DLQs/on-failure destinations. (AWS Documentation)

- RDS Proxy: tune idle timeout and `MaxConnectionsPercent`/`MaxIdleConnectionsPercent`; don’t assume defaults match your DB’s `max_connections`. Monitor pool metrics: “healthy” ≠ “right-sized.” (AWS Documentation)
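To make the RDS Proxy percentages concrete, here is a rough sizing calculation. It is a sketch, not a capacity model: the function name is invented, the numbers are illustrative, and it assumes both settings are percentages of the database's `max_connections` with a single proxy in front of the database.

```python
def proxy_connection_budget(db_max_connections: int,
                            max_connections_percent: int,
                            max_idle_connections_percent: int) -> dict:
    """Translate proxy percentage settings into approximate connection counts."""
    return {
        # ceiling on backend connections the proxy may open
        "max_backend": db_max_connections * max_connections_percent // 100,
        # idle connections the proxy may keep parked in the pool
        "max_idle": db_max_connections * max_idle_connections_percent // 100,
    }


print(proxy_connection_budget(1000, 80, 15))  # {'max_backend': 800, 'max_idle': 150}
```

Whatever is left over (200 connections in this example) is all that remains for admin sessions, migrations, and anything else that bypasses the proxy.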

Best practices (battle-tested)

- Pick the path by workload shape

  - Many tiny, stateless requests? Start with Data API.

  - Chatty sessions / driver features / strict latency? IAM + RDS Proxy.

- Cache secrets and tokens

  - Cache Secrets Manager reads in memory; rotate on TTL or version change.

  - Pre-emptively refresh IAM tokens at T-60s; reconnect on auth errors. (AWS Documentation)

- Make all writes idempotent

  - Use request IDs (e.g., `Idempotency-Key` header, or composite primary keys) so client retries don’t double-insert.

- Retry with full jitter

  - Exponential backoff with jitter; cap total time; surface 429/5xx as 503 to upstreams. (Repost)

- Right-size RDS Proxy

  - Start with 80% `MaxConnectionsPercent`, 15% `MaxIdleConnectionsPercent`, then load test; watch backend saturation during Lambda concurrency spikes. (AWS Documentation)

- Separate hot paths

  - Drain high-variance writes into SQS/EventBridge; let consumers batch to DB. This reduces connection pressure and deadlocks.

- Observability first

  - Emit attempt counters, retry reasons, token age, and pool stats. Alarms: Secrets 429s, Data API 4xx/5xx, Proxy connection errors, Lambda DLQ depth.
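The "make all writes idempotent" practice above can be sketched with a unique key and an insert that ignores duplicates. SQLite stands in for the real database so the example runs anywhere; the table and function names are hypothetical. MySQL's `INSERT IGNORE` and PostgreSQL's `ON CONFLICT DO NOTHING` play the same role.

```python
import sqlite3


def record_payment(conn: sqlite3.Connection, idempotency_key: str, amount_cents: int) -> bool:
    """Insert once per key; return True only when this call created the row."""
    cur = conn.execute(
        "INSERT OR IGNORE INTO payments (idempotency_key, amount_cents) VALUES (?, ?)",
        (idempotency_key, amount_cents),
    )
    conn.commit()
    return cur.rowcount == 1  # 0 means the key already existed (a retry)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE payments (idempotency_key TEXT PRIMARY KEY, amount_cents INTEGER NOT NULL)"
)

first = record_payment(conn, "req-123", 500)  # original request
retry = record_payment(conn, "req-123", 500)  # client or Lambda retry of the same request
```

The retry is a no-op, so stacked retries from Lambda, your own backoff loop, and upstream clients cannot double-insert.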

Common pitfalls (and the fix)

- Reading Secrets Manager on every invoke. Cache it; treat Secrets as config, not data. (AWS Documentation)

- Reconnecting with a stale IAM token. Mint a fresh token for every new connection; on auth error, re-mint and reconnect. (AWS Documentation)

- Assuming Lambda retries “handle it.” They don’t for sync calls; async adds two retries only—you still need idempotency and backoff. (AWS Documentation)

- Oversizing Proxy idle pool. Idle ≠ free; it consumes backend slots. Tune or you’ll starve real traffic. (AWS Documentation)

- Ignoring API Gateway throttling. Model quotas per stage/key and test 429 paths. (AWS Documentation)

Conclusion & takeaways

Connectionless doesn’t mean “no database”—it means you scale calls, not connections. Choose Data API for pure burst elasticity and easy ops; choose IAM + RDS Proxy for richer SQL with bounded sockets. Either way, the production game is the same: cache secrets, refresh tokens early, engineer retries with jitter, enforce idempotency, and right-size your proxy or quotas. Do that, and your Lambda/containers will surge without shattering the database.

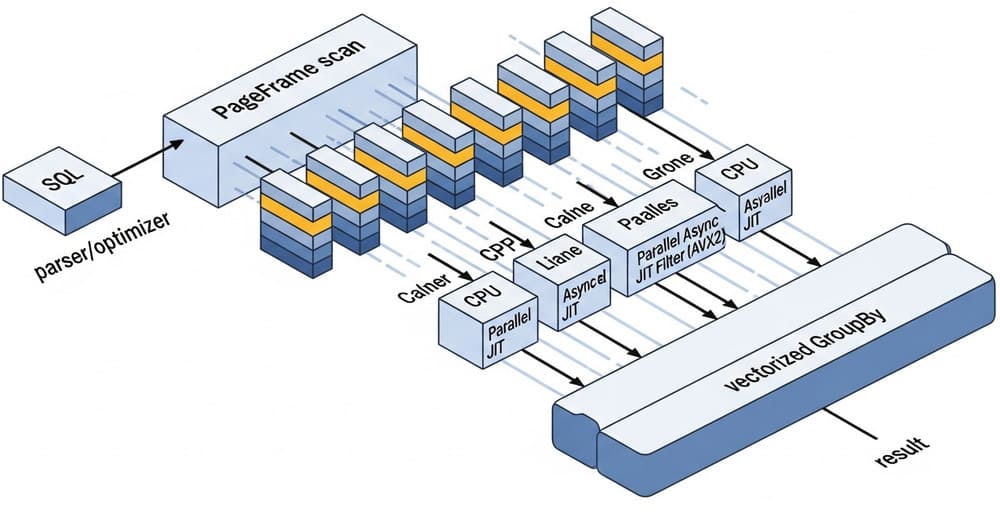


Tags

#Serverless #AWSLambda #RDSProxy #RDSDataAPI #IAM #SecretsManager #PostgreSQL #Aurora #Scalability #Resilience