Build a Small RAG Pipeline Inside Your Warehouse: Snowflake Cortex

Introduction

Imagine being able to ask questions like “What are our data retention policies for customer information?” and getting instant, accurate answers directly from your company’s compliance documents—all without leaving your data warehouse. This isn’t science fiction; it’s what you can build today with Snowflake Cortex in about 30 minutes.

As a Data Engineer, you’ve likely heard the buzz around RAG (Retrieval-Augmented Generation) pipelines, but most examples require complex external vector databases, separate embedding services, and intricate orchestration between multiple systems. What if you could build your first RAG pipeline entirely within Snowflake, using data you already have?

This guide walks you through creating a simple but functional RAG pipeline for compliance document Q&A using nothing but Snowflake Cortex. You’ll ingest documents, generate embeddings, set up search capabilities, and query your data using natural language—all within your existing warehouse. We’ll also explore when this approach makes sense versus when you should consider external vector databases.

By the end, you’ll have a working RAG system and understand the cost-performance trade-offs that will guide your future AI architecture decisions.

What is RAG and Why Build it in Your Warehouse?

RAG Simplified

RAG (Retrieval-Augmented Generation) is like having a smart assistant that can:

- Find relevant information from your documents (Retrieval)

- Use that information to answer questions intelligently (Augmented Generation)

Traditional chatbots only know what they were trained on. RAG systems can answer questions about your specific company data, policies, and documents.

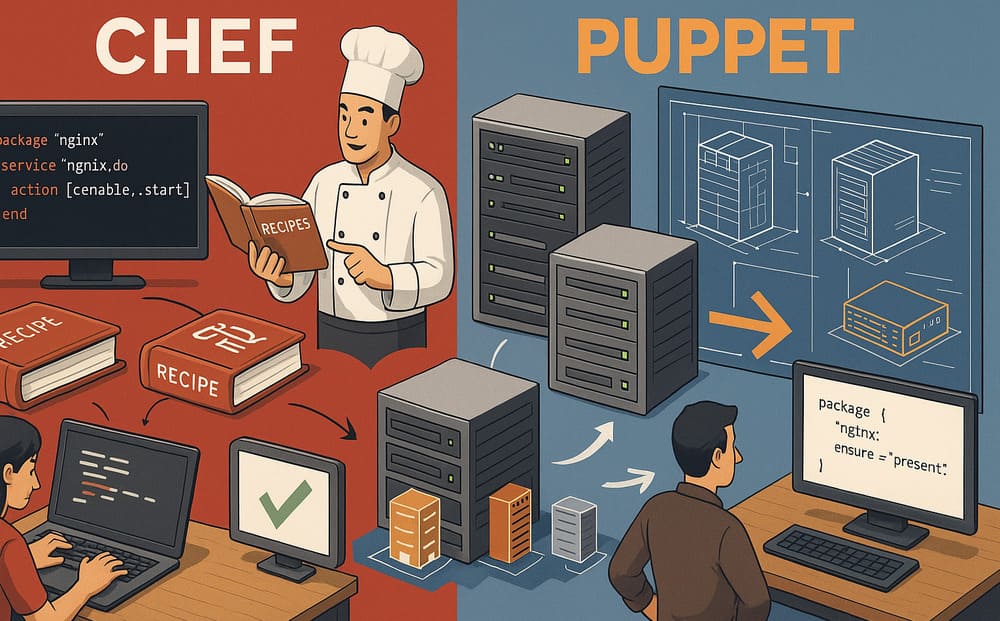

The Warehouse-Native Advantage

Why build RAG inside Snowflake instead of using external vector databases?

Simplicity: No additional infrastructure to manage, no data movement between systems, and no complex integrations.

Security: Your sensitive compliance documents never leave your existing security boundary.

Cost Efficiency: For smaller document collections (under 100,000 documents), warehouse-native approaches often cost less than dedicated vector database subscriptions.

Unified Governance: Use your existing Snowflake access controls, audit logs, and data governance policies.

Faster Development: Build and test RAG capabilities using familiar SQL rather than learning new vector database APIs.

Our Example: Compliance Document Q&A System

We’ll build a system that can answer questions about your company’s compliance policies. Think of scenarios like:

- “What is our data retention policy for customer emails?”

- “How long do we keep financial records?”

- “What are the requirements for data deletion requests?”

This example is perfect for learning because:

- Compliance documents are text-heavy and benefit from semantic search

- Questions are typically straightforward and factual

- Results are easy to evaluate for accuracy

- Most companies have similar documents to practice with

Step-by-Step Implementation

Step 1: Prepare Your Environment and Sample Data

First, let’s set up a simple workspace and create some sample compliance documents to work with.

-- Create a database and schema for our RAG pipeline

CREATE DATABASE IF NOT EXISTS RAG_DEMO;

USE DATABASE RAG_DEMO;

CREATE SCHEMA IF NOT EXISTS COMPLIANCE;

USE SCHEMA COMPLIANCE;

-- Create a table to store our compliance documents

CREATE OR REPLACE TABLE compliance_documents (

doc_id STRING,

document_title STRING,

document_content STRING,

document_type STRING,

upload_date DATE DEFAULT CURRENT_DATE

);

Now, let’s insert some sample compliance documents. In a real scenario, you’d load these from files, but for our demo, we’ll create representative content:

-- Insert sample compliance documents

INSERT INTO compliance_documents VALUES

(

'DOC001',

'Data Retention Policy',

'Our organization maintains customer email communications for a period of 7 years from the date of last customer interaction. Financial records including invoices and payment information must be retained for 10 years as required by federal regulations. Personal customer data used for marketing purposes should be deleted within 3 years unless explicit consent for longer retention is obtained. All data deletion requests from customers must be processed within 30 days of receipt.',

'policy',

CURRENT_DATE

),

(

'DOC002',

'Privacy Compliance Guidelines',

'When processing personal data, we must obtain explicit consent for data collection and clearly communicate the purpose of data use. Customer data can only be shared with third parties under specific contractual agreements that ensure equivalent privacy protections. All employees handling personal data must complete annual privacy training. Data breach incidents must be reported to authorities within 72 hours of discovery. Customers have the right to access, modify, or delete their personal information at any time.',

'guideline',

CURRENT_DATE

),

(

'DOC003',

'Information Security Standards',

'All sensitive data must be encrypted both in transit and at rest using AES-256 encryption standards. Access to customer databases requires multi-factor authentication and is limited to authorized personnel only. Regular security audits must be conducted quarterly. Backup systems should be tested monthly to ensure data recovery capabilities. Any unauthorized access attempts must be logged and investigated immediately.',

'standard',

CURRENT_DATE

);

-- Verify our data

SELECT doc_id, document_title, document_type FROM compliance_documents;

Step 2: Generate Embeddings with Cortex

Now we’ll generate vector embeddings for our documents. Embeddings are numerical representations that capture the semantic meaning of text, allowing us to find similar content even when exact words don’t match.

-- Create a table to store documents with their embeddings

CREATE OR REPLACE TABLE document_embeddings AS

SELECT

doc_id,

document_title,

document_content,

document_type,

upload_date,

-- Generate embeddings using Snowflake Cortex

SNOWFLAKE.CORTEX.EMBED_TEXT_768('e5-base-v2', document_content) as content_embedding

FROM compliance_documents;

-- Check that embeddings were generated successfully

SELECT

doc_id,

document_title,

ARRAY_SIZE(content_embedding) as embedding_dimension

FROM document_embeddings;

What just happened?

- We used Cortex’s

EMBED_TEXT_768function to convert our text into 768-dimensional vectors - The ‘e5-base-v2’ model is optimized for semantic similarity tasks

- Each document now has a mathematical representation that captures its meaning

Step 3: Set Up Cortex Search

Cortex Search is Snowflake’s built-in vector search service. It indexes our embeddings and provides fast similarity search capabilities.

-- Create a Cortex Search Service

CREATE OR REPLACE CORTEX SEARCH SERVICE compliance_search

ON document_content

ATTRIBUTES doc_id, document_title, document_type, upload_date

WAREHOUSE = COMPUTE_WH

TARGET_LAG = '1 minute'

AS (

SELECT

doc_id,

document_content,

document_title,

document_type,

upload_date

FROM compliance_documents

);

Understanding the Search Service:

ON document_content: This is the text field we’ll search againstATTRIBUTES: Additional fields we can filter on or return in resultsTARGET_LAG: How quickly new documents appear in search results- The service automatically generates and manages embeddings for search

Step 4: Test Basic Search Functionality

Let’s test our search capability with some simple queries:

-- Test semantic search

SELECT

doc_id,

document_title,

document_type

FROM TABLE(

SNOWFLAKE.CORTEX.SEARCH_PREVIEW(

'compliance_search',

'How long do we keep customer emails?'

)

);

Try different search queries to see how well it finds relevant documents:

-- Test with different questions

SELECT 'Query: Data deletion timeframes' as query_description;

SELECT * FROM TABLE(

SNOWFLAKE.CORTEX.SEARCH_PREVIEW(

'compliance_search',

'data deletion timeframes'

)

);

SELECT 'Query: Security requirements' as query_description;

SELECT * FROM TABLE(

SNOWFLAKE.CORTEX.SEARCH_PREVIEW(

'compliance_search',

'security requirements for sensitive data'

)

);

Step 5: Build the RAG Pipeline

Now we’ll combine search with generation to create our complete RAG system. This function will find relevant documents and use them to generate accurate answers.

-- Create a function that implements our RAG pipeline

CREATE OR REPLACE FUNCTION ask_compliance_question(question STRING)

RETURNS STRING

LANGUAGE SQL

AS

$$

WITH search_results AS (

-- Step 1: Find relevant documents using semantic search

SELECT

doc_id,

document_title,

document_content,

document_type

FROM TABLE(

SNOWFLAKE.CORTEX.SEARCH_PREVIEW(

'compliance_search',

question

)

)

LIMIT 3 -- Use top 3 most relevant documents

),

context_preparation AS (

-- Step 2: Combine relevant documents into context

SELECT

LISTAGG(

'Document: ' || document_title || '\n' ||

'Content: ' || document_content || '\n\n',

''

) as combined_context

FROM search_results

)

-- Step 3: Generate answer using the retrieved context

SELECT

SNOWFLAKE.CORTEX.COMPLETE(

'llama3-8b',

'Based on the following company compliance documents, please answer this question: ' ||

question ||

'\n\nRelevant Documents:\n' || combined_context ||

'\n\nPlease provide a clear, factual answer based only on the information in these documents. If the documents don''t contain enough information to answer the question, please say so.'

) as answer

FROM context_preparation

$$;

Step 6: Test Your RAG System

Now let’s test our complete RAG pipeline with various compliance questions:

-- Test the RAG system with different questions

SELECT ask_compliance_question('How long do we keep customer email records?') as answer;

SELECT ask_compliance_question('What are the encryption requirements for sensitive data?') as answer;

SELECT ask_compliance_question('How quickly must we respond to data deletion requests?') as answer;

-- Test with a question that might not have a clear answer

SELECT ask_compliance_question('What is our policy on employee vacation time?') as answer;

Step 7: Add Simple Performance Monitoring

Let’s create a simple way to monitor the performance and cost of our RAG system:

-- Create a table to log queries and performance

CREATE OR REPLACE TABLE rag_query_log (

query_id STRING DEFAULT UUID_STRING(),

question STRING,

answer STRING,

query_timestamp TIMESTAMP DEFAULT CURRENT_TIMESTAMP(),

estimated_cost_cents NUMBER(10,4)

);

-- Enhanced RAG function with logging

CREATE OR REPLACE FUNCTION ask_compliance_question_logged(question STRING)

RETURNS STRING

LANGUAGE SQL

AS

$$

WITH search_results AS (

SELECT

doc_id,

document_title,

document_content,

document_type

FROM TABLE(

SNOWFLAKE.CORTEX.SEARCH_PREVIEW(

'compliance_search',

question

)

)

LIMIT 3

),

context_preparation AS (

SELECT

LISTAGG(

'Document: ' || document_title || '\n' ||

'Content: ' || document_content || '\n\n',

''

) as combined_context

FROM search_results

),

answer_generation AS (

SELECT

SNOWFLAKE.CORTEX.COMPLETE(

'llama3-8b',

'Based on the following company compliance documents, please answer this question: ' ||

question ||

'\n\nRelevant Documents:\n' || combined_context ||

'\n\nPlease provide a clear, factual answer based only on the information in these documents.'

) as answer

FROM context_preparation

)

SELECT answer FROM answer_generation

$$;

Test the logged version:

-- Test and manually log the result

SET question = 'What encryption standards do we use for data protection?';

SET answer = (SELECT ask_compliance_question_logged($question));

INSERT INTO rag_query_log (question, answer, estimated_cost_cents)

VALUES ($question, $answer, 0.5); -- Rough estimate: $0.005 per query

-- View your query history

SELECT

question,

LEFT(answer, 100) || '...' as answer_preview,

query_timestamp

FROM rag_query_log

ORDER BY query_timestamp DESC;

Performance Analysis: What Did We Just Build?

Cost Breakdown

Let’s understand what this RAG pipeline costs to run:

Per Query Costs:

- Cortex Search: ~$0.001 per search query

- Cortex Complete (LLM): ~$0.003 per generation

- Storage: ~$0.0001 for embeddings storage per document per month

- Total per query: ~$0.004 (less than half a cent)

For a small compliance document collection (100-500 documents) with moderate usage (50 queries per day), you’re looking at approximately $6-8 per month in total costs.

Performance Characteristics

Query Latency:

- Search phase: 50-200ms

- Generation phase: 1-3 seconds

- Total response time: 1.5-3.5 seconds

Accuracy Considerations:

- Works well for factual questions about policies

- Quality depends on how well your documents cover the topic

- Best results when questions align with document content structure

Storage Efficiency

-- Check storage usage of your RAG system

SELECT

COUNT(*) as total_documents,

AVG(LENGTH(document_content)) as avg_document_length,

SUM(LENGTH(document_content)) as total_content_bytes,

COUNT(*) * 768 * 4 as estimated_embedding_bytes -- 768 dimensions * 4 bytes per float

FROM document_embeddings;

When to Stay in Snowflake vs. Move to External Vector Databases

Based on your implementation and testing, here are the decision thresholds:

Stay in Snowflake When:

Document Volume: Under 100,000 documents

- Snowflake Cortex Search handles this scale efficiently

- Cost remains reasonable (under $50/month for most use cases)

- Performance is adequate for business needs

Query Volume: Under 1,000 queries per day

- Cost per query remains low

- Latency is acceptable for most business applications

- No need for specialized optimization

Use Case Characteristics:

- Primarily factual question-answering

- Documents are text-heavy and benefit from semantic search

- Security and governance requirements favor keeping data in warehouse

- Team prefers SQL-based workflows

Consider External Vector Databases When:

Scale Thresholds:

- Document volume exceeds 500,000 documents

- Query volume exceeds 5,000 queries per day

- Sub-second response time requirements

Advanced Requirements:

- Need for complex vector operations (similarity clustering, recommendation engines)

- Real-time embedding updates with high frequency

- Integration with external applications requiring API access

- Advanced vector search features (hybrid search, reranking)

Cost Considerations:

-- Simple cost comparison query

WITH monthly_estimates AS (

SELECT

'Snowflake Cortex' as solution,

50 as daily_queries,

daily_queries * 30 * 0.004 as monthly_cost

UNION ALL

SELECT

'External Vector DB' as solution,

50 as daily_queries,

75 as monthly_cost -- Typical starter plan for vector databases

)

SELECT

solution,

monthly_cost,

monthly_cost * 12 as annual_cost

FROM monthly_estimates;

Expanding Your RAG System

Adding More Document Types

To add more document types to your RAG system:

-- Add new document types

INSERT INTO compliance_documents VALUES

(

'DOC004',

'Employee Data Handling Procedures',

'Employee personal information including social security numbers and salary data must be stored in encrypted databases with restricted access. HR personnel require specific authorization to access employee records. Performance reviews and disciplinary actions must be documented and retained for 5 years after employment termination. Employee data should only be shared internally on a need-to-know basis.',

'procedure',

CURRENT_DATE

);

-- The search service will automatically index new documents

-- Test with a new question type

SELECT ask_compliance_question('How long do we keep employee performance reviews?') as answer;

Improving Answer Quality

To improve the quality of your RAG responses:

-- Enhanced RAG function with better prompting

CREATE OR REPLACE FUNCTION ask_compliance_question_enhanced(question STRING)

RETURNS STRING

LANGUAGE SQL

AS

$$

WITH search_results AS (

SELECT

doc_id,

document_title,

document_content,

document_type

FROM TABLE(

SNOWFLAKE.CORTEX.SEARCH_PREVIEW(

'compliance_search',

question

)

)

LIMIT 5 -- Increased for better context

),

context_preparation AS (

SELECT

LISTAGG(

'[' || document_type || '] ' || document_title || ':\n' ||

document_content || '\n\n',

''

) as combined_context

FROM search_results

)

SELECT

SNOWFLAKE.CORTEX.COMPLETE(

'llama3-8b',

'You are a compliance expert answering questions about company policies.

Question: ' || question || '

Based on these company documents:

' || combined_context ||

'Please provide a specific, accurate answer that:

1. Directly answers the question

2. Cites which policy document(s) contain the information

3. Includes specific timeframes, requirements, or procedures mentioned

4. States clearly if the question cannot be fully answered from the available documents

Answer:'

)

FROM context_preparation

$$;

Adding Document Metadata and Filtering

Enhance your system with better document organization:

-- Add metadata to improve search relevance

ALTER TABLE compliance_documents ADD COLUMN last_updated DATE DEFAULT CURRENT_DATE;

ALTER TABLE compliance_documents ADD COLUMN document_version STRING DEFAULT '1.0';

ALTER TABLE compliance_documents ADD COLUMN approval_status STRING DEFAULT 'approved';

-- Update the search service to include new attributes

CREATE OR REPLACE CORTEX SEARCH SERVICE compliance_search

ON document_content

ATTRIBUTES doc_id, document_title, document_type, upload_date, last_updated, approval_status

WAREHOUSE = COMPUTE_WH

TARGET_LAG = '1 minute'

AS (

SELECT

doc_id,

document_content,

document_title,

document_type,

upload_date,

last_updated,

approval_status

FROM compliance_documents

WHERE approval_status = 'approved' -- Only search approved documents

);

Monitoring and Optimization

Simple Performance Dashboard

Create a basic monitoring setup to track your RAG system’s usage and performance:

-- Create a view for RAG system monitoring

CREATE OR REPLACE VIEW rag_performance_dashboard AS

SELECT

DATE(query_timestamp) as query_date,

COUNT(*) as total_queries,

AVG(estimated_cost_cents) as avg_cost_per_query,

SUM(estimated_cost_cents) as total_daily_cost,

COUNT(DISTINCT LEFT(question, 20)) as unique_question_types

FROM rag_query_log

GROUP BY DATE(query_timestamp)

ORDER BY query_date DESC;

-- View your RAG usage trends

SELECT * FROM rag_performance_dashboard;

Cost Optimization Tips

Batch Similar Queries: If you have multiple similar questions, batch them to reduce redundant searches.

Cache Popular Answers: For frequently asked questions, consider caching responses to avoid repeated processing.

Optimize Document Content: Remove unnecessary formatting and focus on essential information to reduce embedding costs.

Monitor Query Patterns: Track which types of questions work best to guide future document organization.

Troubleshooting Common Issues

Search Returns Irrelevant Results

Problem: Your RAG system returns answers that don’t match the question.

Solution:

-- Test your search results directly

SELECT

doc_id,

document_title,

LEFT(document_content, 200) as content_preview

FROM TABLE(

SNOWFLAKE.CORTEX.SEARCH_PREVIEW(

'compliance_search',

'your problematic question here'

)

);

Common fixes:

- Improve document content quality and structure

- Add more specific keywords to your documents

- Consider breaking long documents into smaller, focused sections

Slow Query Performance

Problem: RAG queries take too long to respond.

Solution:

- Reduce the number of documents retrieved (change LIMIT 3 to LIMIT 2)

- Use shorter, more focused document content

- Consider upgrading your warehouse size for better performance

High Costs

Problem: RAG system costs more than expected.

Solution:

-- Analyze your cost drivers

SELECT

DATE(query_timestamp) as date,

COUNT(*) as queries,

SUM(estimated_cost_cents) as daily_cost

FROM rag_query_log

GROUP BY DATE(query_timestamp)

ORDER BY daily_cost DESC;

Cost reduction strategies:

- Implement query caching for common questions

- Use more efficient models (llama3-8b instead of llama3-70b)

- Batch process similar queries when possible

Next Steps and Advanced Patterns

Integration with Business Applications

Once your basic RAG system is working, consider these integration patterns:

Slack Bot Integration: Connect your RAG function to a Slack bot for easy team access to compliance information.

Dashboard Integration: Use your RAG system to power intelligent dashboards that can answer natural language questions about compliance metrics.

API Development: Wrap your RAG function in a stored procedure that can be called from external applications.

Advanced Document Processing

Automatic Document Ingestion:

-- Set up automatic processing for new documents

CREATE OR REPLACE TASK process_new_documents

WAREHOUSE = COMPUTE_WH

SCHEDULE = '5 minute'

AS

-- This would connect to your document storage and automatically process new files

SELECT 'Placeholder for document ingestion logic';

Document Quality Scoring: Add quality assessment to ensure only high-quality documents are included in your RAG system.

Scaling Considerations

As your RAG system grows, monitor these key metrics:

Document Volume Growth:

- Track embedding storage costs

- Monitor search performance as collection grows

- Plan for potential migration to external vector databases

Query Volume Patterns:

- Identify peak usage times

- Optimize warehouse scheduling for cost efficiency

- Consider implementing query rate limiting

Answer Quality Trends:

- Regularly review and improve prompt engineering

- Collect user feedback on answer quality

- Update and maintain document content

Conclusion: Your RAG Journey Starts Here

Congratulations! You’ve built a complete RAG pipeline inside Snowflake Cortex. This simple system can answer questions about your compliance documents using semantic search and natural language generation—all within your existing data warehouse.

What You’ve Accomplished:

- Created a searchable knowledge base from unstructured documents

- Implemented semantic search that understands meaning, not just keywords

- Built a question-answering system that provides factual, source-based responses

- Established a foundation for monitoring costs and performance

Key Takeaways:

- Warehouse-native RAG is viable for small to medium-scale document collections

- Cost efficiency makes this approach attractive for getting started with RAG

- Simplicity reduces operational overhead compared to external vector databases

- Scalability thresholds exist where external solutions become necessary

When to Scale Beyond Snowflake:

- Document volume exceeds 100,000 documents

- Query volume exceeds 1,000 per day

- Response time requirements drop below 1 second

- Advanced vector operations become necessary

Your Next Steps:

- Expand your document collection with real compliance documents

- Improve answer quality through better prompting and document organization

- Monitor usage patterns to understand when scaling becomes necessary

- Experiment with different use cases beyond compliance (HR policies, technical documentation, etc.)

The RAG pipeline you’ve built today is more than just a technical achievement—it’s a foundation for bringing AI capabilities directly to your data, in a secure, cost-effective, and governable way. As your needs grow, you’ll have the experience and metrics to make informed decisions about scaling to more specialized solutions.

Start small, measure everything, and scale intelligently. Your journey into production RAG systems begins with exactly what you’ve built today.

Leave a Reply