Vector Databases in Production: What ML Engineers Need to Know in 2025

Why This Article Matters

If you’re building AI applications today, you’re probably dealing with embeddings. And if you’re dealing with embeddings at scale, you need a vector database. But here’s what most tutorials won’t tell you: choosing and implementing a vector database for production is way more complex than the demos suggest.

This guide covers what actually matters when you’re deploying vector search in production environments. We’ll skip the basic “what is a vector” explanations and focus on the decisions that will make or break your system.

The Real Cost of Vector Search

Before we get into the technical details, let’s talk money. Vector databases can get expensive fast, and not just in obvious ways.

Your costs come from three main areas. First, storage. A single 1536-dimensional embedding (standard for OpenAI’s models) takes about 6KB. Got a million documents? That’s 6GB just for vectors, not counting metadata or indexes. Second, compute. Vector similarity search is computationally intensive. The more dimensions and documents you have, the more CPU or GPU power you need. Third, memory. Most vector databases keep hot data in RAM for performance. With high-dimensional vectors, memory requirements explode.

Here’s what this looks like in practice. A startup I worked with indexed 10 million product descriptions. Their monthly bill went from $500 to $8,000 overnight. They hadn’t planned for the memory requirements of keeping frequently-accessed vectors in RAM.

Choosing Your Vector Database: Beyond the Marketing

Every vendor claims to be the fastest and most scalable. Here’s how to cut through the noise and pick what actually works for your use case.

When to Use Pinecone

Pinecone makes sense when you need to get to production fast and don’t want to manage infrastructure. It’s fully managed, scales automatically, and has solid performance out of the box. The tradeoff? You’re locked into their pricing model, which gets steep at scale. Also, you can’t run it on-premise if compliance requires it.

Best for: SaaS products, rapid prototyping, teams without dedicated infrastructure engineers.

When to Use Weaviate

Weaviate shines when you need hybrid search (combining vector and keyword search) or want to run everything in your own infrastructure. It has built-in modules for different embedding models and supports GraphQL queries, which some teams love and others hate.

Best for: E-commerce search, content recommendation systems, teams comfortable with Kubernetes.

When to Use Qdrant

Qdrant stands out for its filtering capabilities. If you need to do vector search with complex metadata filters (like “find similar products but only in the electronics category under $500”), Qdrant handles this better than most alternatives. It’s also written in Rust, so performance is excellent.

Best for: Marketplaces, filtered recommendation systems, high-performance requirements.

When to Use pgvector

Here’s the controversial take: pgvector (PostgreSQL extension) is often the right choice. If you’re already using PostgreSQL and your dataset is under 1 million vectors, pgvector saves you from managing another system. The performance won’t match specialized databases, but the operational simplicity often wins.

Best for: Small to medium datasets, teams already using PostgreSQL, avoiding additional infrastructure.

Indexing Strategies That Actually Work

The default HNSW (Hierarchical Navigable Small World) index works great until it doesn’t. Here’s when to use different strategies.

HNSW: The Default Choice

HNSW gives you the best recall/speed tradeoff for most use cases. Set m (number of connections) between 16-32 and ef_construction around 200 as a starting point. Higher values improve recall but slow down indexing.

# Example configuration for Weaviate

class_obj = {

"class": "Product",

"vectorIndexConfig": {

"distance": "cosine",

"ef": 256, # Higher for better recall during search

"efConstruction": 200,

"maxConnections": 32

}

}

IVF: When Memory Matters

IVF (Inverted File Index) uses less memory than HNSW but requires training. If you’re working with billions of vectors and memory is tight, IVF becomes attractive. The catch? You need representative training data, and updates are more complex.

Flat Index: Don’t Dismiss It

For datasets under 100K vectors, flat (brute force) search often beats fancy algorithms. It’s 100% accurate, simple to implement, and with modern hardware, surprisingly fast at small scales.

Production Patterns That Save Your Weekends

After debugging vector search at 3 AM too many times, these patterns will save you pain.

Pattern 1: Dual-Write for Zero Downtime Migration

Never migrate vector databases in place. Instead, write to both old and new systems during migration, gradually shift read traffic, then remove the old system. This approach has saved me from multiple failed migrations.

def index_document(doc):

# Write to both systems during migration

try:

old_db.index(doc)

except Exception as e:

log.error(f"Old DB indexing failed: {e}")

try:

new_db.index(doc)

except Exception as e:

log.error(f"New DB indexing failed: {e}")

# Depending on your requirements, you might want to fail here

Pattern 2: Embedding Versioning

Embeddings aren’t static. When you change models or preprocessing, you need to reindex everything. Always version your embeddings and support multiple versions during transitions.

def create_embedding(text, version="v2"):

if version == "v1":

return old_model.encode(text)

elif version == "v2":

return new_model.encode(preprocess_v2(text))

# Store version with metadata

metadata = {

"text": original_text,

"embedding_version": "v2",

"created_at": datetime.now()

}

Pattern 3: Chunking Strategy

For long documents, your chunking strategy matters more than your embedding model. Overlapping chunks improve retrieval but increase storage. A 30% overlap with 512-token chunks works well for most text documents.

def chunk_document(text, chunk_size=512, overlap=0.3):

tokens = tokenizer.encode(text)

stride = int(chunk_size * (1 - overlap))

chunks = []

for i in range(0, len(tokens), stride):

chunk_tokens = tokens[i:i + chunk_size]

chunks.append(tokenizer.decode(chunk_tokens))

return chunks

Monitoring: Metrics That Matter

Most teams monitor the wrong things. Here are the metrics that actually predict problems.

Query latency percentiles (not averages). Your p99 latency tells you what your slowest users experience. If p99 is 10x your p50, you have a problem.

Recall degradation over time. As you add vectors, recall often drops. Set up regular evaluation sets and track recall metrics. A 5% drop might mean it’s time to retrain your index.

Memory fragmentation. Vector databases can suffer from memory fragmentation after lots of updates. Monitor RSS memory vs. actual data size. If the ratio grows beyond 2x, you might need to rebuild your index.

Index build time. This tells you how long disaster recovery will take. If rebuilding your index takes 12 hours, you need a different disaster recovery strategy.

Common Pitfalls and How to Avoid Them

Pitfall 1: Not Planning for Updates

Many vector databases handle inserts well but struggle with updates. If your documents change frequently, benchmark update performance early. Some databases require full reindexing for updates.

Pitfall 2: Ignoring Metadata Storage

Your vectors are useless without metadata. But metadata can easily become larger than your vectors. Plan for metadata storage and filtering performance from day one.

Pitfall 3: Over-Optimizing Dimensions

Yes, reducing dimensions from 1536 to 768 cuts storage in half. But the recall drop might kill your use case. Test dimension reduction with your actual data, not benchmarks.

Pitfall 4: Not Testing at Scale

Vector search performance isn’t linear. A system that works great with 100K vectors might fall apart at 10M. Load test with realistic data volumes before committing to an architecture.

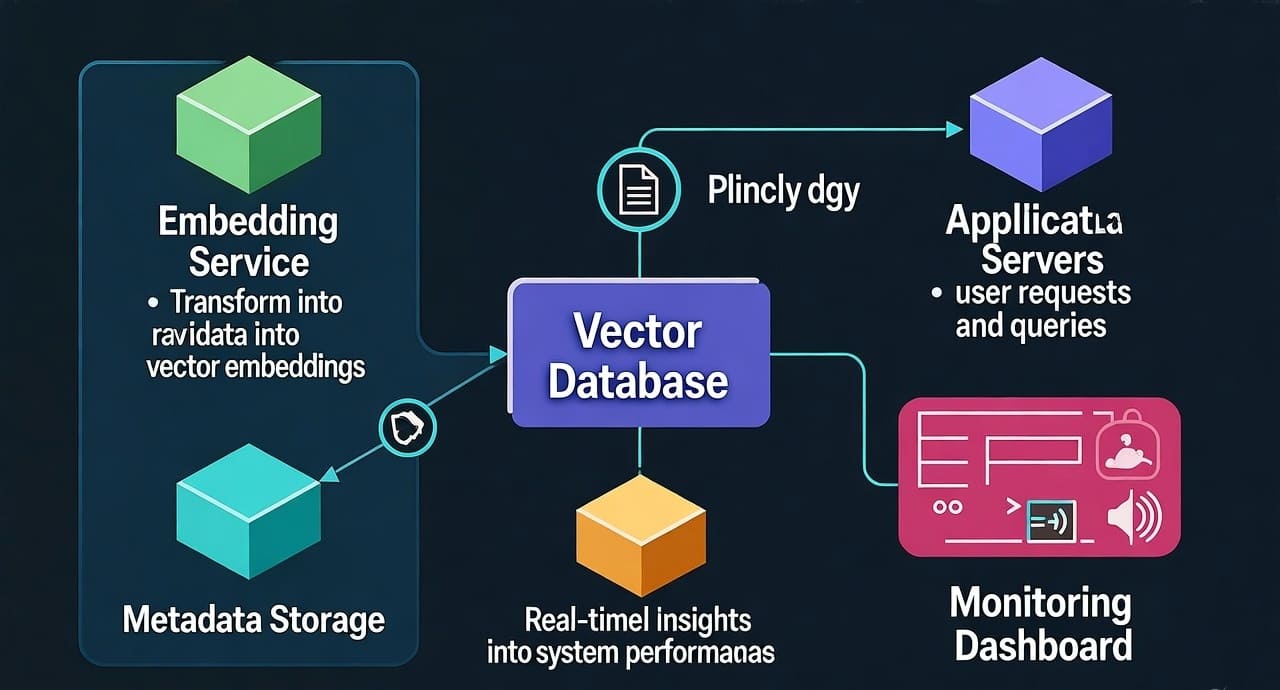

Real-World Architecture: RAG System Example

Here’s a production architecture for a RAG (Retrieval-Augmented Generation) system that’s actually running in production, handling 5M+ queries daily.

The ingestion pipeline starts with documents flowing into a preprocessing queue (we use AWS SQS). Workers pull documents, chunk them with overlap, generate embeddings using a containerized embedding service, and index into our primary vector database (Qdrant) with metadata. We simultaneously store everything in S3 for disaster recovery.

The query path keeps things simple. User queries hit our API gateway, get embedded using the same service (crucial for consistency), search Qdrant with metadata filters, and return top-k results with scores. We cache frequent queries in Redis with 1-hour TTL.

The system scales horizontally. Add more workers for ingestion, scale the embedding service based on CPU usage, and Qdrant handles its own scaling. The bottleneck is usually the embedding service, so we run multiple replicas behind a load balancer.

The Future: What’s Coming in 2025

Binary quantization is becoming mainstream. Reducing vectors to binary representations cuts storage by 32x with surprisingly small recall drops for many use cases.

Learned indexes are replacing HNSW in some scenarios. These ML models learn the structure of your data and can be more efficient than traditional indexes.

Multi-modal search is everywhere. Vectors aren’t just for text anymore. Image, audio, and video search using the same infrastructure is becoming standard.

Hybrid databases are winning. The separation between vector and traditional databases is blurring. Expect your regular database to handle vectors competently soon.

Key Takeaways

- Cost modeling beats performance benchmarks. The fastest solution you can’t afford is worthless.

- Start simple, even if it means pgvector. You can always migrate later when you understand your requirements better.

- Version everything. Your embeddings, your chunks, your preprocessing. You’ll thank yourself during the inevitable migrations.

- Plan for failure. Vector databases are complex systems. Have a plan for when (not if) they fail.

- Measure what matters. User-facing metrics beat synthetic benchmarks every time.

Next Steps

Ready to implement vector search? Start with these actions:

- Calculate your vector storage requirements. Number of documents × embedding dimensions × 4 bytes = minimum storage needed.

- Benchmark with your actual data. Don’t trust vendor benchmarks. Your data distribution is unique.

- Build a proof of concept with pgvector first. It’s often good enough and will teach you what you actually need.

- Set up monitoring from day one. You can’t optimize what you don’t measure.

Leave a Reply