Snowflake and LLMs: The Complete Guide to Enterprise AI with Snowflake Cortex

The convergence of data warehousing and artificial intelligence has reached a pivotal moment with Snowflake’s comprehensive AI platform, Snowflake Cortex. As organizations struggle to bridge the gap between their vast data repositories and the transformative potential of Large Language Models (LLMs), Snowflake has emerged as a pioneering solution that brings enterprise-grade AI directly to where data lives.

Snowflake Cortex gives you instant access to industry-leading large language models (LLMs) trained by researchers at companies like Anthropic, Mistral, Reka, Meta, and Google, including Snowflake Arctic, an open enterprise-grade model developed by Snowflake. This integration represents a fundamental shift from traditional AI development approaches that require complex data movements and separate infrastructure management.

Unlike standalone LLM services that operate in isolation from enterprise data, Snowflake Cortex provides a unified platform where data governance, security, and AI capabilities converge. This approach eliminates the traditional friction points that have prevented many organizations from successfully deploying AI at scale, offering a secure, governed environment where sensitive enterprise data never leaves the Snowflake ecosystem.

Snowflake Arctic: A Purpose-Built Enterprise LLM

At the heart of Snowflake’s LLM strategy lies Snowflake Arctic, a groundbreaking model that challenges conventional assumptions about LLM development costs and accessibility. Arctic was trained on a cluster of 1,000 GPUs over the course of three weeks, which amounted to a $2 million investment, demonstrating that state-of-the-art enterprise LLMs don’t require the hundreds of millions typically associated with large model training.

Architectural Innovation: Arctic's mixture-of-experts (MoE) architecture, combined with its relatively small size and openness, lets companies use it to build and train their own chatbots, co-pilots, and other GenAI apps. The model employs a dense-MoE hybrid transformer architecture that routes each token to two of 128 experts (top-2 gating), significantly more experts than the 8 to 16 used in competing models.
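To make the routing idea concrete, here is a toy sketch of expert selection in a mixture-of-experts layer. It is not Arctic's router: the logits are random, the number of experts picked per token is left as the parameter top_k, and the function names are hypothetical. Only the figure of 128 experts comes from the text above.

```python
import math
import random

NUM_EXPERTS = 128  # expert count quoted in the article


def softmax(scores):
    """Convert raw router scores into probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits, top_k=2):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts.

    The weights are renormalized so that they sum to 1 over the chosen experts.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


# Random logits stand in for a learned router's output for a single token.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
selected = route(logits, top_k=2)
```

Only the selected experts run for that token, which is why the number of active parameters can be far smaller than the total parameter count.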

Enterprise-Focused Design: Enterprises want to use LLMs to build conversational SQL data copilots, code copilots and RAG chatbots. From a metrics perspective, this translates to LLMs that excel at SQL, code, complex instruction following and the ability to produce grounded answers. Arctic was specifically optimized for these enterprise intelligence tasks, demonstrating superior performance in SQL generation, coding assistance, and instruction following compared to other open-source models in its class.

Cost and Efficiency: Arctic has 480 billion parameters, but only 17 billion of them are active at any given time during training or inference, which makes it efficient for production deployments. This efficiency translates to practical benefits: customers will be able to fine-tune Arctic and run inference workloads on a single server equipped with 8 GPUs.
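The parameter figures above lend themselves to a quick back-of-envelope check. The sketch below uses only the numbers quoted in the text (480 billion total, 17 billion active, 8 GPUs). The bytes-per-parameter values are assumptions for illustration, and the estimate covers weights only, ignoring activations, caches, and optimizer state.

```python
TOTAL_PARAMS = 480e9   # total parameters, from the article
ACTIVE_PARAMS = 17e9   # parameters active per token, from the article
NUM_GPUS = 8           # single-server GPU count, from the article

# Share of the model that does work for any one token.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS


def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def per_gpu_weight_gb(bytes_per_param):
    """Weight memory per GPU if the full model is split evenly across NUM_GPUS."""
    return weight_memory_gb(TOTAL_PARAMS, bytes_per_param) / NUM_GPUS


print(f"active fraction: {active_fraction:.1%}")
for label, width in (("16-bit", 2), ("8-bit", 1)):  # assumed storage precisions
    print(f"{label} weights: {per_gpu_weight_gb(width):.0f} GB per GPU")
```

Roughly 3.5% of the parameters are active per token. Holding all the weights takes about 120 GB per GPU at 16-bit precision or 60 GB at 8-bit, so the storage precision matters a great deal for fitting the model on eight devices.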

Open Source Commitment: Arctic is available under the Apache 2.0 license with ungated access to weights and code, allowing organizations to customize and deploy the model according to their specific requirements without licensing restrictions.

Snowflake Cortex: The Complete AI Platform

Snowflake Cortex is more than access to LLMs: it is a comprehensive AI platform designed specifically for enterprise data environments. The platform addresses the fundamental challenges organizations face when trying to implement AI at scale: data governance, security, infrastructure management, and integration complexity.

Serverless AI Infrastructure: Because these LLMs are fully hosted and managed by Snowflake, using them requires no setup. Your data stays within Snowflake, giving you the performance, scalability, and governance you expect. This serverless approach eliminates the need for organizations to manage GPU clusters, model deployment, or scaling infrastructure.

Comprehensive Model Selection: The platform provides access to a diverse set of leading LLMs. Meta Llama 3 8B and 70B are powerful open source models that perform well across a wide range of natural language processing tasks. Reka Core is a state-of-the-art multimodal model that understands images, video, and audio along with text.

Built-in Safety and Governance: Llama Guard, an LLM-based input-output safeguard model, comes natively integrated with Snowflake Arctic, and will soon be available for use with other models in Cortex LLM Functions, ensuring that AI applications maintain appropriate safety standards without additional configuration.

Core Cortex LLM Functions and Capabilities

Snowflake Cortex provides a rich set of SQL and Python functions that enable developers to integrate AI capabilities directly into their data workflows:

General Purpose Functions:

- COMPLETE: The COMPLETE function is a general purpose function that can perform a wide range of user-specified tasks, such as aspect-based sentiment classification, synthetic data generation, and customized summaries

- TRY_COMPLETE: A safer variant that returns NULL instead of errors when execution fails
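As a minimal sketch of how these two functions might be called, the helper below builds a parameterized SQL statement for either one. The helper name and the prompt are hypothetical, the model name mixtral-8x7b is borrowed from the pricing discussion later in this guide, and the assumption that COMPLETE takes a model name followed by a prompt should be checked against the Snowflake documentation.

```python
def complete_statement(model, prompt, safe=False):
    """Build a parameterized statement calling COMPLETE, or TRY_COMPLETE when safe=True.

    Returns the SQL text with '?' placeholders and the list of bind values,
    so the prompt is never spliced into the SQL string.
    """
    function = "TRY_COMPLETE" if safe else "COMPLETE"
    sql = f"SELECT SNOWFLAKE.CORTEX.{function}(?, ?) AS response"
    return sql, [model, prompt]


sql, params = complete_statement(
    "mixtral-8x7b",
    "Summarize in one sentence: the order arrived late but support was helpful.",
    safe=True,
)
# With an open Snowpark session (not created here), the statement could be run as:
#   rows = session.sql(sql, params=params).collect()
```

Passing the prompt as a bind value avoids quoting problems when the text contains apostrophes or other special characters.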

Task-Specific Functions:

- CLASSIFY_TEXT: Given a piece of text, classifies it into one of the categories that you define

- EXTRACT_ANSWER: Given a question and unstructured data, returns the answer to the question if it can be found in the data

- SUMMARIZE: Automatically generates concise summaries of text content

- TRANSLATE: Provides multilingual translation capabilities across dozens of languages

- SENTIMENT: Analyzes emotional tone and sentiment in text data
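Because the task-specific functions are ordinary SQL functions, several of them can be applied to a text column in a single query. The sketch below builds such a query. The table and column names (reviews, review_text) and the helper names are hypothetical, and the argument order assumed for TRANSLATE (text, source language, target language) should be confirmed against the Snowflake documentation.

```python
import re

_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")


def _check_identifier(name):
    """Allow only simple unquoted identifiers, since identifiers cannot be bound."""
    if not _IDENTIFIER.fullmatch(name):
        raise ValueError(f"not a simple SQL identifier: {name!r}")
    return name


def enrichment_query(table, text_column, source_lang="de", target_lang="en"):
    """Build a query that scores, summarizes, and translates every row of a text column."""
    tbl = _check_identifier(table)
    col = _check_identifier(text_column)
    sql = (
        "SELECT\n"
        f"    {col},\n"
        f"    SNOWFLAKE.CORTEX.SENTIMENT({col}) AS sentiment,\n"
        f"    SNOWFLAKE.CORTEX.SUMMARIZE({col}) AS summary,\n"
        f"    SNOWFLAKE.CORTEX.TRANSLATE({col}, ?, ?) AS translated\n"
        f"FROM {tbl}"
    )
    return sql, [source_lang, target_lang]


query, bind_values = enrichment_query("reviews", "review_text")
print(query)
```

Running the functions over a whole column in one statement suits the batch-oriented way these functions are meant to be used.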

Helper Functions:

- COUNT_TOKENS: Given an input text, returns the token count based on the model or Cortex function specified, helping prevent model limit violations

Vector and Embedding Functions: Arctic embed is available as a model option in the Cortex EMBED function. Together with the vector distance functions and VECTOR as a native Snowflake data type, EMBED enables retrieval-augmented generation (RAG) implementations without an external vector database.
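The retrieval step of RAG reduces to comparing a query embedding against stored document embeddings and keeping the closest ones. The pure-Python sketch below shows that comparison using cosine similarity. The three-dimensional vectors and document names are made up for illustration (real embeddings have hundreds of dimensions), and inside Snowflake the same comparison would be done by the vector distance functions rather than client-side code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_matches(query_vec, documents, k=2):
    """Return the ids of the k documents most similar to the query vector."""
    scored = [
        (cosine_similarity(query_vec, vec), doc_id)
        for doc_id, vec in documents.items()
    ]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Toy embeddings standing in for the output of an embedding model.
documents = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "api_reference": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]
context_ids = top_matches(query, documents, k=2)
```

The text of the selected documents would then be placed in the prompt sent to the LLM, which grounds the generated answer in retrieved content.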

Advanced Cortex AI Capabilities

Beyond basic LLM functions, Snowflake Cortex offers sophisticated AI experiences that address complex enterprise use cases:

Document AI: Document AI leverages a purpose-built, multimodal LLM that is natively integrated within the Snowflake platform. Customers can easily and securely extract content, such as invoice amounts or contractual terms from documents, and fine-tune results using a visual interface and natural language. This capability transforms unstructured document processing from a complex integration challenge into a simple SQL operation.

Cortex Search: Quickly and securely find information by asking questions within a given set of documents with fully managed text embedding, hybrid search (semantic + keyword) and retrieval. This functionality enables organizations to build sophisticated document chatbots and knowledge retrieval systems without external search infrastructure.
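Hybrid search has to merge a semantic ranking and a keyword ranking into one result list. How Cortex Search does this internally is not described here, so the sketch below uses reciprocal rank fusion, a common general-purpose technique, purely as an illustration. The document ids and the constant k=60 are assumptions.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document ids into one list.

    Each document earns 1 / (k + rank) from every list it appears in;
    documents are returned by descending total score, ties broken by id.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results for one query from two independent retrievers.
semantic = ["contract_b", "contract_a", "contract_d"]
keyword = ["contract_a", "contract_c", "contract_b"]
fused = reciprocal_rank_fusion([semantic, keyword])
```

Documents that rank well in both lists rise to the top, while documents found by only one retriever still appear further down.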

Cortex Analyst: A natural language interface that enables business users to ask complex questions about their data using conversational language, automatically generating and executing appropriate SQL queries.

Cortex Fine-Tuning: Customize LLMs securely and effortlessly to increase the accuracy and performance of models for use-case specific tasks, allowing organizations to adapt foundation models to their specific domain knowledge and terminology.

Universal Search: Universal Search allows users to quickly find a wide array of objects and resources across their accounts. It enables users to search for database objects, data products in the Snowflake Marketplace, relevant Snowflake documentation, and community knowledge base articles using natural language queries.

Real-World Use Cases and Applications

Organizations across industries are leveraging Snowflake Cortex for diverse AI applications that directly impact business outcomes:

Customer Analytics and Segmentation: A major video-hosting platform struggled with converting free users to paid subscriptions due to limited data insights from their text-based user interactions. By running semantic search tasks with the embedding and vector distance functions, they were able to more clearly define target segments. The platform then used foundation models to create personalized emails, running these operations as batch processes to continuously improve conversion rates.

Predictive Analytics: For instance, a retailer can use Cortex to forecast product demand during the holiday season. By analyzing past sales data and external factors like weather or promotions, Cortex provides accurate predictions that help the retailer optimize inventory levels and reduce stockouts.

Document Processing and Information Extraction: For businesses dealing with high volumes of documents, Cortex automates data extraction from invoices, receipts, forms, and reports. You can use Cortex to process handwritten forms and convert them to digital documents.

Legal and Compliance Applications: For example, a legal team can use Cortex Search to locate clauses in contracts by simply typing natural language queries like, “Find all contracts with non-compete agreements.” This streamlines document reviews and ensures no critical details are overlooked.

Customer Service Automation: For instance, an e-commerce business can use Cortex to generate auto-replies for common customer queries, ensuring quick and consistent communication.

Key Driver Analysis: Top Insights is an ML Function for key driver analysis, helping you to identify drivers of a metric’s change over time or explain differences in a metric among various verticals, enabling organizations to understand the underlying factors driving business performance changes.

Technical Implementation and Integration

Implementing Snowflake Cortex requires understanding both the technical architecture and best practices for enterprise deployment:

Access Control and Security: The CORTEX_USER database role in the SNOWFLAKE database includes the privileges that allow users to call Snowflake Cortex LLM functions. By default, the CORTEX_USER role is granted to the PUBLIC role. Organizations can customize this access pattern based on their security requirements.
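One way to tighten the default is to revoke CORTEX_USER from PUBLIC and grant it only to a chosen role. The sketch below generates the two statements. The role name cortex_analysts and the helper name are hypothetical, and the statements would need to be run by a role with sufficient privileges, which is not shown.

```python
import re


def restrict_cortex_access(role):
    """Return SQL statements that limit Cortex LLM functions to a single account role."""
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_$]*", role):
        raise ValueError(f"not a simple role name: {role!r}")
    return [
        # Remove the default grant that lets every user call the functions.
        "REVOKE DATABASE ROLE SNOWFLAKE.CORTEX_USER FROM ROLE PUBLIC",
        # Grant the database role to the one role that should keep access.
        f"GRANT DATABASE ROLE SNOWFLAKE.CORTEX_USER TO ROLE {role}",
    ]


statements = restrict_cortex_access("cortex_analysts")
for statement in statements:
    print(statement + ";")
```

Users then gain access to the functions only by being granted the chosen role.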

Programming Interfaces: Snowflake Cortex LLM functions are accessible not only through SQL but also can be leveraged in Python using the Snowpark ML library, providing flexibility for different development workflows and integration patterns.

Performance Optimization: Cortex LLM SQL functions are optimized for throughput. Snowflake recommends using them to process large numbers of inputs, such as text from large SQL tables, so they are typically best suited to batch processing. For interactive applications requiring low latency, Snowflake provides REST APIs.

Regional Availability: Snowflake Cortex LLM functions are currently available in the following regions: AWS US East (N. Virginia) and other select regions, with ongoing expansion to additional geographic locations.

Integration with the Broader Data Ecosystem

Snowflake Cortex’s power lies not just in its AI capabilities, but in how it integrates with the broader data and analytics ecosystem:

Streamlit Integration: With the integration of Streamlit in Snowflake (public preview soon), Snowflake customers can use Streamlit to develop and deploy powerful UIs for their LLM-powered apps and experiences entirely in Snowflake. This enables rapid development of AI-powered applications with sophisticated user interfaces.

Native Apps Ecosystem: To make it easier and more secure for customers to take advantage of leading LLMs, Snowpark Container Services can be used as part of a Snowflake Native App, so customers will be able to get direct access to leading LLMs via the Snowflake Marketplace.

Partnership Integrations: Strategic partnerships with companies like Informatica provide a blueprint for enterprise-grade generative AI application development for Snowflake Cortex AI based on a foundation of rich metadata, trusted data and no-code orchestration.

BI Tool Integration: Modern BI platforms like Sigma are integrating directly with Cortex capabilities. Sigma's LLM interpretation feature, for example, uses Snowflake Cortex to deliver human-friendly summaries or explanations within familiar analytics interfaces.

Cost Optimization and ROI Considerations

Understanding the economic implications of implementing Snowflake Cortex is crucial for enterprise decision-making:

Transparent Pricing: The mixtral-8x7b model is now 56% more cost-efficient, with the credits per million tokens cut to 0.22 from 0.50, demonstrating Snowflake’s commitment to making AI capabilities economically accessible.
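The 56% figure follows directly from the two credit rates, and the same rates give a rough budget for a workload. The sketch below checks the arithmetic. The monthly token volume is a hypothetical number chosen for illustration, and converting credits to currency depends on an account's contract, which is not modeled.

```python
OLD_CREDITS_PER_MILLION = 0.50  # previous mixtral-8x7b rate, from the article
NEW_CREDITS_PER_MILLION = 0.22  # current mixtral-8x7b rate, from the article


def relative_reduction(old, new):
    """Fractional price cut when moving from the old rate to the new rate."""
    return (old - new) / old


def credits_for_tokens(tokens, credits_per_million):
    """Credits consumed when processing the given number of tokens."""
    return tokens / 1_000_000 * credits_per_million


reduction = relative_reduction(OLD_CREDITS_PER_MILLION, NEW_CREDITS_PER_MILLION)

monthly_tokens = 250_000_000  # hypothetical workload size
old_cost = credits_for_tokens(monthly_tokens, OLD_CREDITS_PER_MILLION)
new_cost = credits_for_tokens(monthly_tokens, NEW_CREDITS_PER_MILLION)

print(f"price reduction: {reduction:.0%}")
print(f"credits per month: {old_cost:.1f} before, {new_cost:.1f} after")
```

For this hypothetical volume, the bill drops from 125 credits to 55 credits per month.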

Reduced Infrastructure Costs: By eliminating the need for separate GPU infrastructure, model deployment pipelines, and vector databases, organizations can significantly reduce their total cost of ownership for AI initiatives.

Operational Efficiency: Cost savings vs. other service providers result from the elimination of data movement costs, reduced integration complexity, and simplified operations management.

Security, Governance, and Compliance

Enterprise AI implementations must address stringent security and compliance requirements, areas where Snowflake Cortex provides significant advantages:

Data Residency: All processing occurs within the Snowflake environment, ensuring that sensitive enterprise data never leaves the governed security perimeter.

Access Controls: Granular role-based access controls enable organizations to precisely manage who can access different AI capabilities and data sources.

Audit and Monitoring: Comprehensive logging and monitoring capabilities provide full visibility into AI usage patterns and data access for compliance reporting.

Regulatory Compliance: Built-in compliance frameworks support requirements like GDPR, HIPAA, and industry-specific regulations without additional configuration.

Best Practices for Enterprise Implementation

Successful deployment of Snowflake Cortex in enterprise environments requires following established best practices:

Start with Pilot Projects: Begin with well-defined use cases that demonstrate clear business value and can be implemented quickly to build organizational confidence and expertise.

Data Quality Foundation: Ensure that underlying data quality is sufficient for AI applications, as LLMs will amplify existing data quality issues.

Governance Framework: Establish clear guidelines for AI usage, including acceptable use policies, data access controls, and performance monitoring standards.

User Training and Adoption: Invest in training programs that help business users understand AI capabilities and limitations, enabling self-service AI adoption.

Performance Monitoring: Implement comprehensive monitoring of AI application performance, including accuracy metrics, latency tracking, and cost optimization.

Future Developments and Roadmap

Snowflake continues to expand Cortex capabilities with regular updates and new features:

Enhanced Model Support: The Llama 3.1 collection of multilingual large language models (LLMs) is now available in Snowflake Cortex AI, providing enterprises with secure, serverless access to Meta's most advanced open source models. The collection includes llama3.1-405b, the largest openly available model, and offers a 128K context length along with improved reasoning and coding capabilities.

Multimodal Capabilities: Expansion beyond text to include image, video, and audio processing capabilities that leverage the same unified data platform.

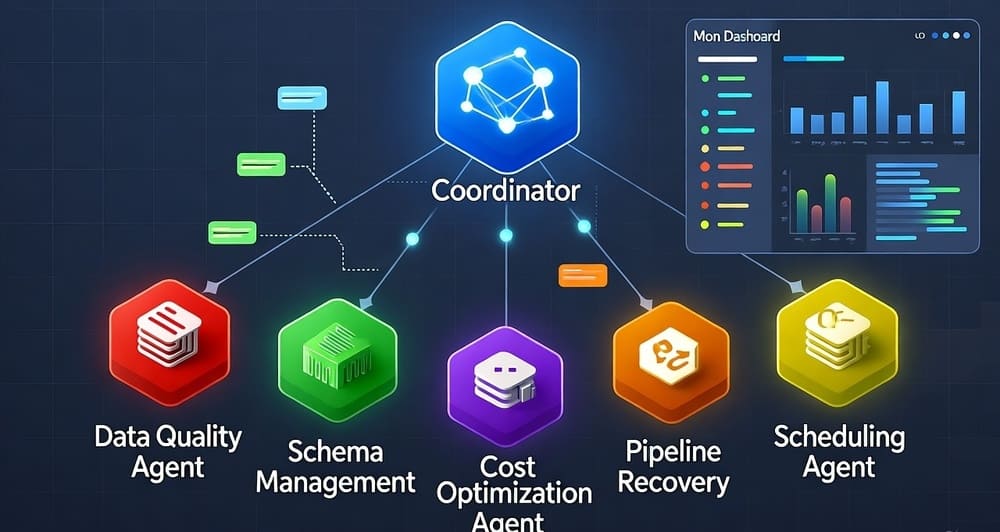

Advanced Agent Frameworks: Cortex Agents (public preview) orchestrate across both structured and unstructured data sources to deliver insights. They represent the evolution toward more sophisticated AI agents that can perform complex tasks autonomously.

Industry-Specific Solutions: Development of specialized models and templates optimized for specific industries and use cases.

Getting Started with Snowflake Cortex

Organizations ready to begin their Snowflake Cortex journey should follow a structured approach:

Assessment and Planning: Evaluate current data infrastructure, identify high-value AI use cases, and assess organizational readiness for AI adoption.

Environment Setup: Configure Snowflake environment with appropriate roles, permissions, and access controls for AI development and deployment.

Pilot Implementation: Create a working database and schema, for example with CREATE DATABASE IF NOT EXISTS cortex_demo; followed by CREATE SCHEMA IF NOT EXISTS cortex_schema; and then begin with simple use cases like sentiment analysis or document summarization.

Scaling Strategy: Develop a roadmap for expanding AI capabilities across the organization, including integration with existing business processes and systems.

Performance Optimization: Continuously monitor and optimize AI applications for performance, cost, and accuracy.

Key Takeaways

Snowflake Cortex represents a paradigm shift in how organizations approach enterprise AI implementation. By bringing LLM capabilities directly to where data lives, eliminating infrastructure complexity, and providing comprehensive governance frameworks, Snowflake has created a platform that makes enterprise AI both accessible and practical.

The combination of Snowflake Arctic’s enterprise-focused design, Cortex’s comprehensive AI platform, and the broader Snowflake ecosystem creates unprecedented opportunities for organizations to leverage AI at scale. Success requires understanding both the technical capabilities and the strategic implications of this integrated approach.

Organizations that embrace this unified data and AI platform will be better positioned to realize the transformative potential of artificial intelligence while maintaining the security, governance, and performance requirements essential for enterprise success. As the platform continues to evolve with new models, capabilities, and integrations, early adopters will gain significant competitive advantages in their respective markets.

The future of enterprise AI lies not in isolated model deployments, but in integrated platforms that seamlessly combine data management, AI capabilities, and business applications. Snowflake Cortex exemplifies this vision, providing a foundation for the next generation of data-driven, AI-powered enterprises.
