RAG on Databricks: Production Patterns with Mosaic AI Vector Search

Why this matters

You’ve shipped a proof-of-concept chatbot. It works, until real users throw messy PDFs, fast-changing policies, and vague questions at it. The model is fine; the retrieval isn’t. On Databricks, Mosaic AI Vector Search plus solid data engineering turns flaky RAG into a maintainable product with SLAs. This guide shows the patterns that actually hold up in production.

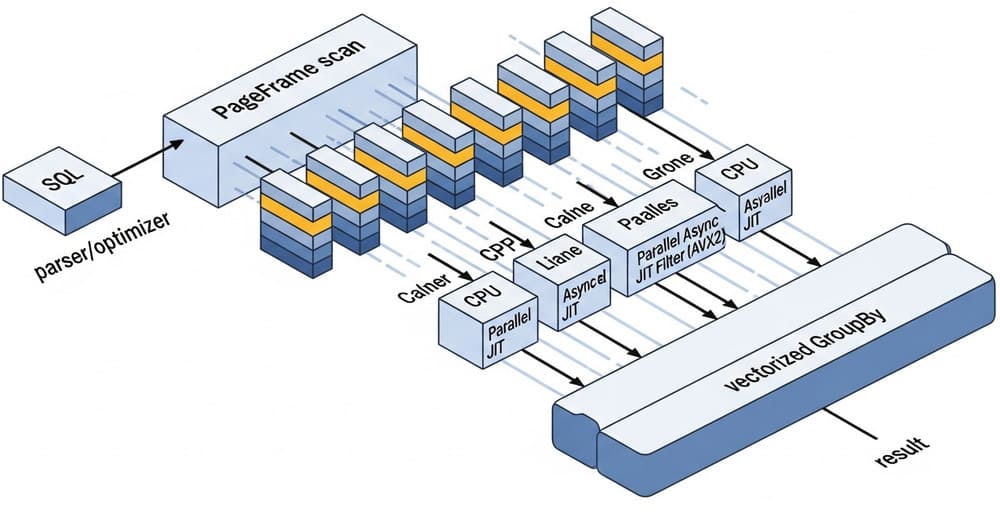

Architecture at a glance

Core pieces

- Data lakehouse (Delta/UC): documents, tables, and metadata with governance in Unity Catalog.

- Ingestion & chunking: pipelines standardize formats, chunk, and embed.

- Mosaic AI Vector Search: managed indexes + endpoints; hybrid search and reranking for quality.

- Model Serving: host your LLM or call external models via endpoints.

- RAG app / agents: query index → rank → ground LLM → log, evaluate, and retrain.

Data flow

1) Land files in a UC Volume → 2) Ingest & normalize to Delta → 3) Chunk + embed → 4) Upsert to Vector Index → 5) Query with hybrid + rerank → 6) Ground LLM via Model Serving → 7) Evaluate and iterate.

Indexing patterns that scale

1) Delta-sourced, auto-synced indexes

Use Delta tables as the source of truth and let Mosaic AI Vector Search sync changes to the index. It keeps ingestion simple and supports near-real-time updates without redeploys.

When to use: steady trickle of documents, frequent small updates.

2) Storage-optimized endpoints for large corpora

If you expect tens of millions of chunks, pick a storage-optimized endpoint and enable hybrid retrieval from day one to avoid expensive rework.

3) Hybrid search by default

Combine dense vectors with keyword search (BM25) to handle numbers, acronyms, and rare terms. Hybrid search is GA and is the pragmatic default.
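To make the idea of combining two rankings concrete, here is a minimal sketch of reciprocal rank fusion (RRF), a common way to merge a dense ranking with a keyword ranking. It illustrates the general technique only and is not a claim about how Vector Search fuses results internally. The chunk IDs and the constant `k=60` (a conventional default) are assumptions for the example.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of IDs into one list ordered by RRF score."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each list contributes 1 / (k + rank) for every ID it contains.
            scores[doc_id] += 1.0 / (k + rank)
    # Highest score first; ties broken by ID so the output is deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))


dense_hits = ["c1", "c2", "c3"]    # hypothetical vector-similarity ranking
keyword_hits = ["c3", "c1", "c4"]  # hypothetical BM25 ranking
fused = reciprocal_rank_fusion([dense_hits, keyword_hits])
print(fused)
```

Chunks that both retrievers agree on rise to the top, which is why hybrid retrieval tends to rescue exact-match terms that a dense model alone ranks poorly.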

4) Reranking for precision@k

Turn on reranking (Public Preview) to boost top-k precision without changing chunking. We’ve seen double-digit gains on enterprise datasets with minimal plumbing.

Practical chunking & metadata

- Semantic chunking: split by headings or layout, not fixed tokens. Store SECTION_TITLE, DOC_ID, PAGE_RANGE.

- Tight chunks (300–800 tokens): reduce hallucinations; include a breadcrumb (title > h2 > h3) in the chunk text.

- Cross-doc dedupe: hash normalized text to drop near-duplicates before embedding.

- Freshness windows: track EFFECTIVE_FROM/TO to answer “according to the latest policy.”
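A minimal sketch of the first three bullets, assuming the normalized documents are markdown-style text with `#` headings. The function names and the sample document are hypothetical. Note that hashing normalized text only catches duplicates that differ in case or whitespace; fuzzier near-duplicate detection would need something like MinHash.

```python
import hashlib
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def chunk_by_headings(doc_id, text):
    """Split text at headings and prefix each chunk with its breadcrumb."""
    chunks, path, body = [], [], []

    def flush():
        content = "\n".join(body).strip()
        if content:
            crumb = " > ".join(path)
            chunk_text = f"{crumb}\n{content}" if crumb else content
            chunks.append({"doc_id": doc_id, "section_path": crumb,
                           "chunk_text": chunk_text})
        body.clear()

    for line in text.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            level = len(match.group(1))
            del path[level - 1:]  # drop headings at the same or deeper level
            path.append(match.group(2).strip())
        else:
            body.append(line)
    flush()
    return chunks


def dedupe(chunks):
    """Drop chunks whose lowercased, whitespace-collapsed text was already seen."""
    seen, unique = set(), []
    for chunk in chunks:
        normalized = " ".join(chunk["chunk_text"].lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(chunk)
    return unique


doc = "# Manual\n## Safety\n### PPE\nWear goggles in lab B.\n## Contact\nCall the desk."
chunks = chunk_by_headings("doc-1", doc)
copy = chunk_by_headings("doc-2", doc.replace("Wear goggles", "WEAR   goggles"))
unique = dedupe(chunks + copy)
```

In a real pipeline you would also enforce the token budget per chunk (splitting long sections further) before embedding.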

Example Delta schema (simplified)

| column | type | notes |
|---|---|---|
| chunk_id | STRING | unique per chunk; primary key for the index (assumed name) |
| doc_id | STRING | stable ID |
| section_path | STRING | e.g., Manual > Safety > PPE |
| chunk_text | STRING | cleaned text |
| source_uri | STRING | UC volume path |
| effective_from | TIMESTAMP | for recency filtering |
| embedding | ARRAY<FLOAT> | dimension is model-specific |
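The `effective_from` column is what makes "according to the latest policy" answerable. Here is a small sketch of the freshness-window check in plain Python, assuming each row also carries an `effective_to` value (not in the simplified schema above) that is `None` while a version is still current. The `version` field and the sample rows are hypothetical.

```python
from datetime import datetime


def in_effect(rows, as_of):
    """Keep rows whose [effective_from, effective_to) window contains as_of."""
    return [
        row for row in rows
        if row["effective_from"] <= as_of
        and (row.get("effective_to") is None or as_of < row["effective_to"])
    ]


rows = [
    {"doc_id": "policy-7", "version": "v1",
     "effective_from": datetime(2024, 1, 1), "effective_to": datetime(2025, 1, 1)},
    {"doc_id": "policy-7", "version": "v2",
     "effective_from": datetime(2025, 1, 1), "effective_to": None},
]

current = in_effect(rows, datetime(2025, 6, 1))
historical = in_effect(rows, datetime(2024, 6, 1))
```

The same predicate can be pushed down as an index filter so superseded versions never reach the reranker.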

Creating and querying a Vector Search index

Python: create endpoint & index

# Databricks notebook
from databricks.vector_search.client import VectorSearchClient

vsc = VectorSearchClient()

# 1) Create endpoint (endpoint_type is "STANDARD" or "STORAGE_OPTIMIZED")
vsc.create_endpoint(
    name="vs-prod",
    endpoint_type="STORAGE_OPTIMIZED",  # plan for scale
)

# 2) Create an index sourced from a Delta table.
#    The source table needs Change Data Feed enabled and a unique key column.
vsc.create_delta_sync_index(
    endpoint_name="vs-prod",
    index_name="uc.catalog.rag_chunks_idx",
    source_table_name="uc.catalog.rag_chunks",  # has 'embedding' vector column + metadata
    pipeline_type="TRIGGERED",                  # or CONTINUOUS (check endpoint-type support)
    primary_key="chunk_id",                     # assumption: a unique per-chunk ID column
    embedding_vector_column="embedding",        # self-managed embeddings
    embedding_dimension=1024,                   # assumption: must match your embedding model
)

Docs: endpoint & index creation in Mosaic AI Vector Search.

SQL: query with vector_search()

-- Retrieve k=8 candidates via hybrid retrieval (vectors + keywords)
SELECT *
FROM vector_search(
  index => 'uc.catalog.rag_chunks_idx',
  query_text => 'What are the PPE requirements for lab B?',
  num_results => 8,
  query_type => 'HYBRID'  -- ANN (default) | HYBRID
);

Docs: vector_search() function (Public Preview) for Databricks SQL.

Python: rerank + metadata filter

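from databricks.vector_search.reranker import DatabricksReranker

index = vsc.get_index(endpoint_name="vs-prod", index_name="uc.catalog.rag_chunks_idx")

# With a self-managed 'embedding' column you will likely need to pass
# query_vector=... alongside query_text; verify against the current docs.
results = index.similarity_search(
    query_text="What are the PPE requirements for lab B?",
    columns=["doc_id", "section_path", "chunk_text"],
    # Storage-optimized endpoints take a SQL-like filter string;
    # standard endpoints take a dict such as {"effective_from >=": "2025-01-01"}.
    filters="effective_from >= '2025-01-01'",
    num_results=20,
    query_type="HYBRID",
    reranker=DatabricksReranker(columns_to_rerank=["chunk_text"]),  # preview reranker
)

# Keep the 10 best rows after reranking.
top10 = results["result"].get("data_array", [])[:10]

Reranking in Vector Search (Public Preview) improves retrieval quality with a single parameter.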

Orchestrating the RAG pipeline on Databricks

Ingestion → Normalization → Chunking → Embedding → Index upsert → Evals & logging. Use UC Volumes for raw files, Delta Live Tables or Jobs for pipelines, and log all retrievals/answers for offline eval.

Model Serving hosts your LLM (open-source or custom) or connects to external providers through external model endpoints. Keep grounding strict: pass only top-k chunks, their citations, and metadata.

Retrieval strategies that work

- Two-stage retrieval: HYBRID (k=50) → Rerank to top-10 → LLM context (k=5).

- Time-aware recall: filter by effective_from or last N days; helpful for policy/data versioning.

- Fielded filters: product line, region, version, all kept as index metadata to avoid post-filtering drops.

- Answer synthesis: instruct the LLM to quote sources with doc_id and section_path.
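The two-stage funnel can be sketched in a few lines. This is an illustration under loud assumptions: `token_overlap` is a toy stand-in for a real reranker (it only counts shared words), and the candidate rows are made up. The point is the shape: wide candidate set in, rerank, then a short context list out.

```python
def token_overlap(query, chunk_text):
    """Toy relevance score: fraction of query tokens present in the chunk."""
    query_tokens = set(query.lower().split())
    chunk_tokens = set(chunk_text.lower().split())
    return len(query_tokens & chunk_tokens) / len(query_tokens) if query_tokens else 0.0


def two_stage(query, candidates, score=token_overlap, rerank_k=10, context_k=5):
    """Rerank first-stage candidates, keep rerank_k, pass context_k to the LLM."""
    reranked = sorted(candidates,
                      key=lambda c: score(query, c["chunk_text"]),
                      reverse=True)
    shortlist = reranked[:rerank_k]
    return shortlist[:context_k]


# Hypothetical first-stage (hybrid) candidates, in retrieval order.
candidates = [
    {"doc_id": "b", "chunk_text": "Cafeteria opening hours"},
    {"doc_id": "c", "chunk_text": "PPE storage is in room 12"},
    {"doc_id": "a", "chunk_text": "Lab B requires goggles and gloves as PPE"},
]
context = two_stage("PPE requirements lab B", candidates, context_k=2)
```

Keeping `rerank_k` larger than `context_k` lets you log the full shortlist for evaluation while still sending a tight context to the model.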

Evaluation & observability (don’t skip)

- Golden sets: curated Q/A with references; track exact-match and faithfulness.

- Retrieval metrics: recall@k, MRR, rerank deltas; alert when recall slips.

- Drift monitors: new document distributions, chunk length stats, embedding norms.

- Human review loop: sample low-confidence answers; write back labels for training.
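For the retrieval metrics, a minimal sketch of recall@k and MRR over a golden set. The golden-set layout (a list of dicts with `retrieved` and `relevant` chunk IDs) is an assumption for illustration; adapt it to however you log retrievals.

```python
def recall_at_k(retrieved, relevant, k):
    """Fraction of the relevant IDs that appear in the top-k retrieved IDs."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved, relevant):
    """1 / rank of the first relevant ID, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


# Hypothetical golden set: retrieved IDs in rank order plus labeled relevant IDs.
golden = [
    {"retrieved": ["c3", "c1", "c9"], "relevant": {"c1", "c2"}},
    {"retrieved": ["c5", "c6", "c7"], "relevant": {"c5"}},
]

mean_recall_at_3 = sum(
    recall_at_k(g["retrieved"], g["relevant"], 3) for g in golden
) / len(golden)
mrr = sum(reciprocal_rank(g["retrieved"], g["relevant"]) for g in golden) / len(golden)
```

Run the same computation with and without reranking to get the "rerank delta" and alert when either number drops between releases.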

Databricks’ RAG guidance and cookbook materials are good starting points for eval workflows.

Security & governance

- Unity Catalog controls who can read raw files, tables, and vector indexes.

- PII hygiene: redact before chunking; embargo sensitive classes from retrieval.

- Data residency: align serving endpoints and storage to the right geo. Databricks documents data residency for Foundation Model APIs and Model Serving.
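As a rough sketch of "redact before chunking", the snippet below masks email addresses and simple 3-3-4 digit phone numbers with regular expressions. The patterns are deliberately simple assumptions and will miss plenty of real-world PII; treat this as the shape of the step, not a substitute for a proper PII detection tool.

```python
import re

# Simplified patterns, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text):
    """Replace matched emails and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


clean = redact("Contact jane.doe@example.com or 555-123-4567.")
print(clean)
```

Running redaction before chunking and embedding means the sensitive values never land in the index or in logged retrievals.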

Common pitfalls (and fixes)

- Oversized chunks → context bloat, hallucinations. Fix: 300–800 tokens, semantic splits.

- Vector-only search → misses rare terms and IDs. Fix: default to HYBRID.

- No rerank → on-topic but wrong top-1. Fix: enable reranking.

- Index drift → stale answers. Fix: Delta-synced indexes, CI tests to confirm coverage.

- Opaque answers → trust issues. Fix: show citations with doc_id and section_path.

Reference implementation (end-to-end snippet)

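# 1) Ingest → chunk → embed → write to Delta
#    columns: doc_id, section_path, chunk_text, effective_from, embedding
# 2) Ensure index is synced to uc.catalog.rag_chunks_idx (see earlier)
# 3) Query + compose prompt
from databricks.vector_search.client import VectorSearchClient
from databricks.vector_search.reranker import DatabricksReranker

vsc = VectorSearchClient()
index = vsc.get_index(endpoint_name="vs-prod", index_name="uc.catalog.rag_chunks_idx")

q = "Summarize PPE requirements for lab B and cite the source."

resp = index.similarity_search(
    query_text=q,
    columns=["doc_id", "section_path", "chunk_text"],
    num_results=32,
    query_type="HYBRID",
    reranker=DatabricksReranker(columns_to_rerank=["chunk_text"]),
    # SQL-like filter string (storage-optimized endpoints); standard endpoints
    # use a dict instead. Verify the filter syntax for your endpoint type.
    filters="section_path LIKE '%Safety%'",
)

# Rows come back as lists; pair them with the column names from the manifest.
cols = [c["name"] for c in resp["manifest"]["columns"]]
hits = [dict(zip(cols, row)) for row in resp["result"].get("data_array", [])]

context = "\n\n".join(
    f"[{h['doc_id']} | {h['section_path']}] {h['chunk_text']}"
    for h in hits[:6]
)

prompt = f"""You are a compliance assistant.
Use only CONTEXT to answer and cite [doc_id | section_path].

QUESTION: {q}

CONTEXT:
{context}
"""

# 4) Call Model Serving endpoint with prompt (omitted: requests to endpoint)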
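For the omitted step 4, here is one possible sketch that builds the HTTP request for a chat-style Model Serving endpoint using only the standard library. The workspace URL, endpoint name, and token are placeholders, and the chat `messages` payload is an assumption that fits chat-task endpoints; custom models may expect a different input format. The request is built but not sent.

```python
import json
import urllib.request


def build_chat_request(host, endpoint_name, token, prompt, max_tokens=512):
    """Build (but do not send) a POST to a serving endpoint's invocations URL."""
    url = f"{host.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.0,  # keep grounded answers as deterministic as possible
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; substitute your workspace URL, endpoint, and a real token.
req = build_chat_request(
    host="https://example.cloud.databricks.com/",
    endpoint_name="my-llm-endpoint",
    token="REDACTED",
    prompt="Use only CONTEXT to answer...",
)

# To actually call the endpoint:
# with urllib.request.urlopen(req) as resp:
#     answer = json.load(resp)
```

Log the prompt, the retrieved chunk IDs, and the response together so offline evaluation can trace each answer back to its sources.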

Production checklist (copy/paste)

- UC Volumes for raw files; Delta bronze/silver tables.

- Semantic chunking + breadcrumbs; dedupe.

- Delta-synced Mosaic AI Vector Search index.

- HYBRID retrieval; reranking enabled.

- Retrieval logging; golden sets; eval jobs.

- Model Serving with strict grounding + citations.

Internal link ideas

- Designing semantic chunking pipelines on Databricks

- Choosing embedding models and dimensionality for enterprise RAG

- Evaluating RAG with golden sets and automated drift checks

- Governance patterns with Unity Catalog for vector indexes

- Cost-aware scaling: standard vs storage-optimized endpoints

Summary & CTA

RAG fails when retrieval fails. On Databricks, you stabilize it with Delta-sourced, auto-synced indexes, HYBRID retrieval, and reranking, stitched together by well-governed pipelines and Model Serving. Start with the checklist above, ship a thin slice, and instrument the heck out of it. Your LLM will look smarter because your retrieval is.


Tags

#RAG #Databricks #MosaicAI #VectorSearch #LLMOps #UnityCatalog #DataEngineering #HybridSearch #ModelServing
