MLOps and Data Engineering Synergy: Bridging the Gap for Smarter Workflows

In today’s fast-evolving tech landscape, integrating MLOps with data engineering is no longer just a trend; it is a necessity. As machine learning models become central to business decision-making, aligning ML workflows with robust data pipelines reduces duplicated work, shortens iteration cycles, and makes outcomes more reliable. This article explores how to bridge these disciplines with actionable tips, real-world tool examples, and best practices for CI/CD, automated model monitoring, and version control.

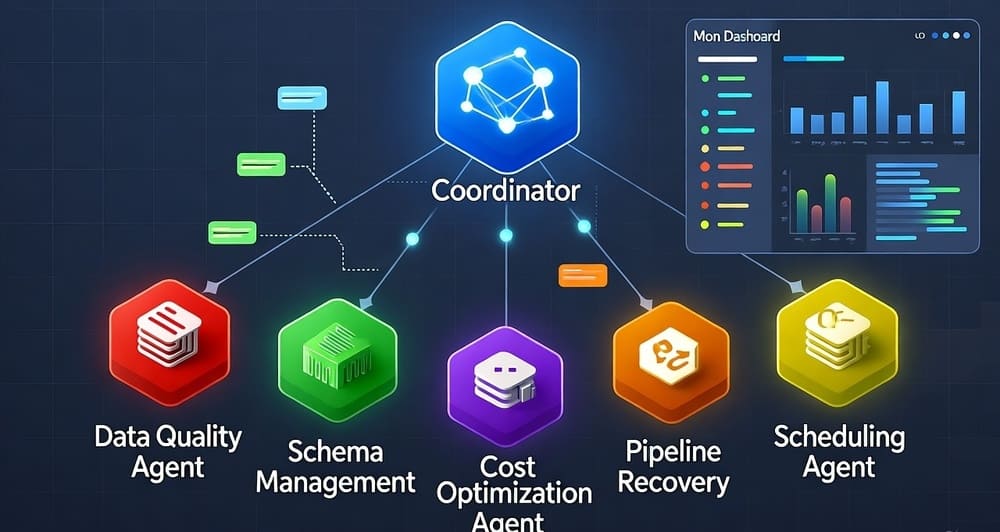

The Convergence of ML and Data Engineering

Data engineering traditionally focuses on reliable data ingestion, transformation, and storage. MLOps, an evolution of DevOps for machine learning, streamlines the entire model lifecycle from experimentation to production. Combining the two leads to:

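- Streamlined Workflows: Unified pipelines reduce redundancy, because ingestion and feature logic is built once and shared instead of being duplicated across teams.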

- Improved Collaboration: Shared tools and processes bridge gaps between data engineers and ML practitioners.

- Enhanced Quality Control: Automated testing and monitoring ensure both data and models remain performant.

Best Practices for CI/CD in Data Systems

Implementing continuous integration and continuous deployment (CI/CD) in data pipelines is essential for maintaining quality and agility. Consider these strategies with real tool examples:

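- Automated Testing: Run unit tests and data-validation checks (for example with PyTest) on every commit, and exercise pipeline workflows in an orchestrator such as Airflow before they reach production.

- Incremental Deployments: Roll out pipeline and model changes gradually (for example with Kubernetes or AWS CodeDeploy) so problems surface on a small share of traffic and can be rolled back quickly.

- Robust Monitoring: Track pipeline health, job failures, and resource usage with Prometheus and Grafana, or with Datadog or New Relic.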

- Tip: Implement standardized data schemas and logging mechanisms.
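To make the Automated Testing point concrete, here is a minimal sketch of the kind of PyTest-style check a CI job could run on every commit. The transformation `clean_orders` and its field names (`order_id`, `amount`) are hypothetical; the test functions follow PyTest's `test_` naming convention so PyTest would discover them, but they are plain assertions and also run without PyTest installed.

```python
from typing import Dict, List

Record = Dict[str, object]


def clean_orders(rows: List[Record]) -> List[Record]:
    """Hypothetical pipeline step: drop rows without an order_id or with a
    missing/negative amount, and keep only the first row per order_id."""
    seen = set()
    cleaned: List[Record] = []
    for row in rows:
        order_id = row.get("order_id")
        amount = row.get("amount")
        if order_id is None or not isinstance(amount, (int, float)) or amount < 0:
            continue  # reject incomplete or invalid rows
        if order_id in seen:
            continue  # de-duplicate on the business key
        seen.add(order_id)
        cleaned.append({"order_id": order_id, "amount": float(amount)})
    return cleaned


def test_drops_invalid_rows() -> None:
    rows = [
        {"order_id": 1, "amount": 10},
        {"order_id": None, "amount": 5},
        {"order_id": 2, "amount": -3},
    ]
    assert clean_orders(rows) == [{"order_id": 1, "amount": 10.0}]


def test_removes_duplicates() -> None:
    rows = [{"order_id": 7, "amount": 1.5}, {"order_id": 7, "amount": 2.0}]
    assert clean_orders(rows) == [{"order_id": 7, "amount": 1.5}]


def test_output_schema_is_stable() -> None:
    # Downstream consumers rely on exactly these two columns.
    out = clean_orders([{"order_id": 3, "amount": 4, "extra": "x"}])
    assert all(set(r) == {"order_id", "amount"} for r in out)


if __name__ == "__main__":
    # Lets the checks run without PyTest, e.g. as a quick local smoke test.
    test_drops_invalid_rows()
    test_removes_duplicates()
    test_output_schema_is_stable()
    print("all checks passed")
```

Keeping transformations as small pure functions like this is what makes them cheap to test in CI before an orchestrator ever schedules them.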

Automated Model Monitoring

Once your ML model is in production, continuous monitoring is essential to catch issues before they impact business outcomes:

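- Real-Time Metrics: Export latency, throughput, error rates, and prediction statistics to Prometheus and visualize them in Grafana dashboards.

- Data Drift Detection: Compare the distribution of live inputs and predictions against the training data, for example with Evidently AI, and alert when they diverge.

- Comprehensive Logging: Log inputs, predictions, and model versions in a structured format and centralize them with the ELK stack or Splunk.

- Feedback Loops: Capture ground-truth outcomes as they arrive and feed them back into evaluation and retraining.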
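Dedicated tools such as Evidently AI produce drift reports out of the box. Rather than guess at any tool's API, here is a library-free sketch of one widely used drift statistic, the Population Stability Index (PSI), swapped in for illustration. It bins the reference (training) sample into equal-width buckets and compares bucket proportions with a production sample. The bin count, the epsilon for empty bins, and the 0.25 alert threshold are assumptions; 0.25 is a commonly quoted rule of thumb, not a universal standard.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample (e.g. training
    data) and a production sample, using equal-width bins over the reference range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference column

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp out-of-range production values into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        eps = 1e-6  # avoids log(0) when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    # PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    train = [i / 100 for i in range(100)]       # reference distribution
    live = [0.3 + i / 200 for i in range(100)]  # hypothetical shifted production sample
    score = psi(train, live)
    # 0.25 is a rule-of-thumb threshold; tune it per feature in practice.
    print(f"PSI={score:.3f}", "drift alert" if score > 0.25 else "stable")
```

Running a check like this per feature on a schedule, and pushing the score to your metrics backend, turns drift detection into just another alertable metric.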

Version Control for Datasets and Models

Version control is as critical for datasets and models as it is for code:

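- Dataset Versioning: Track dataset snapshots alongside code with DVC, so any experiment can be reproduced against the exact data it used.

- Model Archiving: Store trained models together with their parameters and metrics in a registry such as MLflow's model registry.

- CI/CD Integration: Trigger validation and retraining jobs in the CI/CD pipeline whenever a new data or model version is committed.

- Detailed Documentation: Record each version's data sources, schema, training configuration, and evaluation results.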
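The core idea behind dataset versioning and model archiving can be sketched without any particular tool: derive a dataset version from a hash of its contents, then store each model version with a pointer to that hash plus its parameters and metrics. The `ToyRegistry` class below is hypothetical and in-memory; it only illustrates the lineage record, not how DVC or MLflow actually store data.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


def dataset_version(data: bytes) -> str:
    """Content hash: identical bytes always map to the same version id.
    (For large files you would hash in chunks instead of loading all bytes.)"""
    return hashlib.sha256(data).hexdigest()[:12]


@dataclass
class ModelRecord:
    name: str
    version: int
    data_version: str
    params: Dict[str, object]
    metrics: Dict[str, float]


@dataclass
class ToyRegistry:
    """Hypothetical in-memory registry; real registries persist this metadata."""
    records: List[ModelRecord] = field(default_factory=list)

    def register(self, name: str, data_version: str,
                 params: Dict[str, object], metrics: Dict[str, float]) -> ModelRecord:
        # Versions increase per model name, starting at 1.
        version = 1 + sum(1 for r in self.records if r.name == name)
        record = ModelRecord(name, version, data_version, params, metrics)
        self.records.append(record)
        return record

    def lineage(self, name: str, version: int) -> str:
        """Return the full record as JSON: which data and settings produced this model."""
        for r in self.records:
            if r.name == name and r.version == version:
                return json.dumps(asdict(r), sort_keys=True)
        raise KeyError(f"{name} v{version} not found")


if __name__ == "__main__":
    registry = ToyRegistry()
    # Hypothetical CSV bytes; in a real pipeline you would hash the dataset file.
    data_id = dataset_version(b"order_id,amount\n1,10.0\n")
    # Illustrative placeholder parameter and metric values, not real results.
    registry.register("churn-model", data_id, {"max_depth": 6}, {"accuracy": 0.9})
    print(registry.lineage("churn-model", 1))
```

Because the dataset id is derived from content, retraining on unchanged data yields the same id, which makes it easy for a CI job to detect when a new data version should trigger validation or retraining.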

Actionable Tips for Seamless Integration

Tip 1: Standardize Everything. Adopt uniform data schemas (using Apache Avro or JSON Schema) and logging practices (via ELK or Splunk) to streamline integration and debugging.
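As a sketch of what a shared schema buys you, the snippet below checks records against a tiny subset of JSON Schema vocabulary (`required` and per-property `type`). The validator and `ORDER_SCHEMA` are hypothetical and deliberately minimal; the JSON Schema specification is far richer, and in practice you would likely use a full validator such as the `jsonschema` package.

```python
from typing import Any, Dict, List

# Maps the JSON Schema type names used below to Python types.
_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def validate(record: Dict[str, Any], schema: Dict[str, Any]) -> List[str]:
    """Check a record against top-level "required" and per-property "type".
    Returns a list of error messages; an empty list means the record is valid."""
    errors: List[str] = []
    for key in schema.get("required", []):
        if key not in record:
            errors.append(f"missing required field '{key}'")
    for key, spec in schema.get("properties", {}).items():
        if key in record and "type" in spec:
            value = record[key]
            # bool is a subclass of int in Python, so reject it for numeric types.
            bad_bool = isinstance(value, bool) and spec["type"] in ("number", "integer")
            if bad_bool or not isinstance(value, _TYPES[spec["type"]]):
                errors.append(f"field '{key}' should be {spec['type']}")
    return errors


# Hypothetical schema shared by the producing and consuming teams.
ORDER_SCHEMA = {
    "type": "object",
    "required": ["order_id", "amount"],
    "properties": {
        "order_id": {"type": "integer"},
        "amount": {"type": "number"},
        "currency": {"type": "string"},
    },
}

if __name__ == "__main__":
    print(validate({"order_id": 1, "amount": 9.5}, ORDER_SCHEMA))  # → []
    print(validate({"order_id": "1"}, ORDER_SCHEMA))
    # → ["missing required field 'amount'", "field 'order_id' should be integer"]
```

Returning a list of errors rather than raising on the first one makes the output easy to log in a consistent format, which fits the second half of this tip.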

Tip 2: Automate Wherever Possible. Incorporate automation in testing (using PyTest and Airflow), monitoring (with Prometheus/Grafana or Evidently AI), and version control (using DVC or MLflow) to reduce manual errors and accelerate iterations.

Tip 3: Prioritize Collaboration. Facilitate regular communication between data engineers and ML practitioners. Tools like GitHub, GitLab, or Bitbucket, combined with shared dashboards (e.g., Grafana), help keep everyone on the same page.

Tip 4: Audit and Iterate. Regularly review your data pipelines and ML workflows with monitoring tools like Datadog or New Relic. Identify bottlenecks and refactor processes to enhance efficiency.
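Datadog and New Relic provide this visibility as managed services. As a library-free sketch of the underlying idea, the snippet below times each pipeline stage and reports the slowest one. The `StageTimer` class and the stage names are hypothetical, and `time.sleep` merely stands in for real work.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


class StageTimer:
    """Records wall-clock duration per pipeline stage."""

    def __init__(self) -> None:
        self.durations: Dict[str, float] = {}

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Accumulate, so a stage that runs several times is summed.
            elapsed = time.perf_counter() - start
            self.durations[name] = self.durations.get(name, 0.0) + elapsed

    def bottleneck(self) -> str:
        """Name of the stage with the largest total duration."""
        return max(self.durations, key=lambda n: self.durations[n])

    def report(self) -> str:
        total = sum(self.durations.values()) or 1.0
        rows = sorted(self.durations.items(), key=lambda kv: -kv[1])
        return "\n".join(f"{n:<12}{d:8.3f}s {100 * d / total:5.1f}%" for n, d in rows)


if __name__ == "__main__":
    timer = StageTimer()
    with timer.stage("extract"):
        time.sleep(0.01)  # stand-in for reading source data
    with timer.stage("transform"):
        time.sleep(0.05)  # stand-in for feature computation
    with timer.stage("load"):
        time.sleep(0.02)  # stand-in for writing results
    print(timer.report())
    print("slowest stage:", timer.bottleneck())
```

Emitting these per-stage durations to your monitoring backend on every run gives you the trend data needed to spot a stage that is gradually becoming the bottleneck.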

Tip 5: Implement Incremental Deployments. Mitigate risk by rolling out updates incrementally with Kubernetes, AWS CodeDeploy, or similar deployment platforms. This strategy allows for controlled testing and quick rollback if needed.
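Kubernetes and AWS CodeDeploy each have their own traffic-shifting mechanisms; the sketch below only illustrates the decision logic you might run between rollout stages, independent of any platform. The `StageResult` type, the traffic percentages, and the error-rate tolerance are hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class StageResult:
    traffic_pct: int            # share of traffic sent to the new version
    baseline_error_rate: float  # error rate of the current version
    canary_error_rate: float    # error rate of the new version


def rollout_decision(results: Sequence[StageResult], tolerance: float = 0.01) -> str:
    """Walk through completed stages in order and roll back as soon as the
    canary's error rate exceeds the baseline's by more than `tolerance`."""
    for r in results:
        if r.canary_error_rate - r.baseline_error_rate > tolerance:
            return f"rollback at {r.traffic_pct}% traffic"
    if results and results[-1].traffic_pct >= 100:
        return "promote"
    return "advance"  # healthy so far, but not yet serving all traffic


if __name__ == "__main__":
    # Hypothetical observations from a 10% -> 50% rollout.
    observed = [StageResult(10, 0.020, 0.021), StageResult(50, 0.020, 0.024)]
    print(rollout_decision(observed))
```

Comparing against the live baseline rather than a fixed error budget keeps the gate meaningful when overall traffic conditions change during the rollout.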

Bringing It All Together

The synergy between MLOps and data engineering creates an agile, scalable ecosystem where data pipelines and ML models work seamlessly together. By adopting CI/CD practices with tools like Airflow, Kubernetes, and MLflow, automating model monitoring using Prometheus or Evidently AI, and employing robust version control with DVC or MLflow’s model registry, organizations can enhance innovation and reliability.

Embrace these actionable tips to optimize your workflows, reduce technical debt, and empower your teams to deliver reliable, data-driven results.

What strategies or tools have you implemented to integrate MLOps with your data pipelines? Share your experiences and join the conversation!
