Lakeflow Jobs Playbook: Orchestrating Mixed SQL, DLT, and Notebook Pipelines on Databricks

Meta description (157 chars):

Practical playbook for Databricks Lakeflow Jobs: how to orchestrate SQL, Delta Live Tables, and notebooks with dependencies, retries, and CI/CD.

Why this matters

You’ve got SQL transformations, a DLT pipeline, and a few notebooks with business logic. They all run… until they don’t—because a warehouse wasn’t warm, a table wasn’t refreshed, or a dependency silently failed. This playbook shows how to design one reliable workflow that coordinates SQL tasks + DLT pipelines + notebooks with guardrails, observability, and CI/CD-friendly configs using Lakeflow Jobs. Databricks Documentation+1

What is Lakeflow Jobs? (quick refresher)

Lakeflow Jobs is Databricks’ workflow engine for scheduling and orchestrating tasks—SQL queries/dashboards, DLT pipelines, notebooks, wheels/JARs, and control-flow steps (if/else, for-each). You define dependencies, retries, timeouts, and notifications; Jobs executes and tracks the lineage of runs. Databricks Documentation+1

Where it fits in Lakeflow:

- Connect – ingestion to the lake.

- Declarative Pipelines (DLT) – managed ETL with expectations.

- Jobs – orchestration that stitches everything together. Databricks+1

The mixed-mode pattern (SQL ➜ DLT ➜ Notebook)

Use case:

- Warm up/validate with SQL (idempotent checks, quality queries).

- Run the DLT pipeline for your bronze/silver/gold flow.

- Post-process with a notebook for business rules, feature prep, or downstream triggers.

Task graph shape

[SQL: prechecks] ──▶ [DLT: pipeline run] ──▶ [Notebook: postprocess]

▲

└── optional [SQL: backfill params]

- SQL task executes against a SQL Warehouse and can refresh dashboards or alerts. Databricks Documentation

- Pipeline task (DLT) runs a declarative pipeline as a job task. Microsoft Learn

- Notebook task handles Python/Scala logic or calls into external APIs. Databricks Documentation

Choosing the right task type

| Need | Best task | Why |

|---|---|---|

| Data quality probes, idempotent transforms, dashboard refresh | SQL task | Simple, warehouse-optimized, good for lightweight checks and reporting. Databricks Documentation |

| Managed ETL with data quality expectations, SCDs, CDC, Auto Loader | Pipeline (DLT) task | Declarative, built-in expectations, optimized autoscaling, lineage + monitoring. Microsoft Learn+1 |

| Custom logic, feature engineering, API calls, orchestration glue | Notebook task | Full flexibility, library reuse, quick iteration for teams. Databricks Documentation |

| Reusable packaged code | Python wheel task | Versioned, testable, CI/CD friendly entry points. Databricks Documentation+1 |

Minimal, production-friendly setup

1) Define tasks & dependencies

- SQL prechecks: validate source freshness, enforce row-count thresholds.

- DLT pipeline: run the bronze→silver→gold pipeline.

- Notebook postprocess: compute aggregates, update downstream tables, or call webhooks.

You can configure tasks in the Jobs UI or as code (e.g., Databricks Asset Bundles with job task types), enabling repeatable deployments. Databricks Documentation+1

2) Example: Bundle-style job snippet

# bundle.yml (excerpt)

resources:

jobs:

lakeflow_mixed_pipeline:

name: lakeflow-mixed-sql-dlt-notebook

tasks:

- task_key: sql_prechecks

sql:

query: "SELECT count(*) FROM raw.orders WHERE _ingest_ts > now() - INTERVAL 1 HOUR"

warehouse_id: ${var.sql_warehouse_id}

max_retries: 2

timeout_seconds: 600

- task_key: dlt_pipeline

depends_on: [sql_prechecks]

pipeline_task:

pipeline_id: ${var.dlt_pipeline_id}

max_retries: 1

timeout_seconds: 7200

- task_key: nb_postprocess

depends_on: [dlt_pipeline]

notebook_task:

notebook_path: "/Repos/team/analytics/notebooks/postprocess"

base_parameters:

run_date: "{{job.run_date}}"

max_retries: 2

timeout_seconds: 1800

(Task types and fields align with Jobs task configuration capabilities; see official docs for the full schema and options.) Databricks Documentation+1

3) Control flow & fan-out

- Add if/else for conditional branches (e.g., skip heavy DLT if no new data).

- Use for-each to parallelize runs per region/table. Databricks Documentation

Delta Live Tables notes for orchestration

- Include the pipeline directly as a Pipeline task in the workflow.

- DLT handles state, expectations, and autoscaling so your job graph stays clean.

- Monitor run history and events from the Jobs UI. Microsoft Learn+1

Tip: Keep pre-DLT checks in SQL and post-DLT enrichments in notebooks to isolate concerns and simplify debugging.

Observability & operations

- Run page & history: inspect DAG, durations, logs, and task-level retries.

- Notifications: Slack/email on failure or SLA breach.

- Reruns with repair: re-run failed tasks without redoing the entire pipeline.

- Auditability: use run IDs and export logs to your SIEM. Databricks Documentation

Best practices (battle-tested)

- Single source of truth in VCS

Use bundles or IaC to define jobs as code. Keep warehouse IDs, pipeline IDs, and paths parameterized per env. Databricks Documentation - Trust-but-verify with SQL prechecks

Short SQL probes catch 80% of preventable failures before your DLT spin-up. Databricks Documentation - Let DLT own the heavy lifting

Put core ETL in DLT, not notebooks. You’ll get built-in expectations, autoscaling, and lineage. Microsoft Learn+1 - Keep notebooks thin

Limit notebooks to orchestration glue and business-specific transforms that don’t fit declarative patterns. Databricks Documentation - Right-size retries and timeouts

Use fewer, longer retries for warehouse spin-up; shorter, more frequent retries for flaky APIs. Configure at the task level. Databricks Documentation - SLA-aware scheduling

Use cron or triggers aligned with upstream availability; stagger fan-out tasks to avoid warehouse thrash. Databricks Documentation - Clear failure contracts

Fail fast on prechecks; emit structured errors. Downstream tasks should not run when inputs are invalid. Databricks Documentation - Observe first, optimize second

Start with conservative cluster policies; use run history to tune parallelism and warehouse sizing. Databricks Documentation

Common pitfalls (and fixes)

- Putting everything in notebooks

Fix: Move repeatable ETL to DLT; keep notebooks for glue. Microsoft Learn - Underusing SQL tasks

Fix: Add SQL probes for freshness, counts, and schema checks—fast and cheap. Databricks Documentation - No code-based definitions

Fix: Adopt bundles for promotion across dev/test/prod with the same DAG definitions. Databricks Documentation - Weak observability

Fix: Rely on run history, notifications, and logs; standardize run tags and emit metrics. Databricks Documentation

Quick-start recipes

A. Lightweight daily batch

- SQL:

REFRESH TABLE gold.sales_mv; - DLT: Bronze→Silver sync from CDC.

- Notebook: Rebuild semantic aggregates; notify BI.

B. Fan-out regional loads

- for-each over

["us", "eu", "apac"]running the same DLT pipeline with region param; postprocess consolidates to a global mart. Databricks Documentation

C. Packaged logic via wheel

- Move complex postprocessing into a Python wheel task for versioning and unit tests; notebooks become thin wrappers if needed. Databricks Documentation

Realistic SQL precheck snippet

-- Fail early if source lag exceeds 90 minutes

SELECT

CASE WHEN max(_ingest_ts) < (current_timestamp() - INTERVAL 90 MINUTES)

THEN error('Source too stale') END AS _must_fail

FROM raw.orders;

Run this as the first SQL task; if it errors, the DAG stops before burning compute. Databricks Documentation

Monitoring playbook

- Per-task dashboards: track run duration, retry count, data volumes.

- Notifications: route failures to on-call; route SLA warnings to owners.

- Postmortems: use run IDs and logs to build a timeline within the Jobs UI. Databricks Documentation

Internal link ideas (for your site)

- “Delta Live Tables expectations: designing contracts for data quality”

- “Unity Catalog lineage with DLT and Jobs”

- “CI/CD with Databricks Asset Bundles: from repo to prod”

- “SQL Warehouse sizing strategies for mixed workloads”

- “Alerting patterns for Lakeflow Jobs and BI dashboards”

Summary & call to action

Mixed-mode orchestration doesn’t have to be fragile. Put SQL in front for fast checks, DLT in the middle for managed ETL, and notebooks/wheels at the edges for business logic. Define it as code, add guardrails, and observe ruthlessly. Start by converting one brittle notebook chain into a Lakeflow Jobs DAG and measure the failure rate drop and runtime consistency. Then scale the pattern across domains. Databricks Documentation+2Databricks Documentation+2

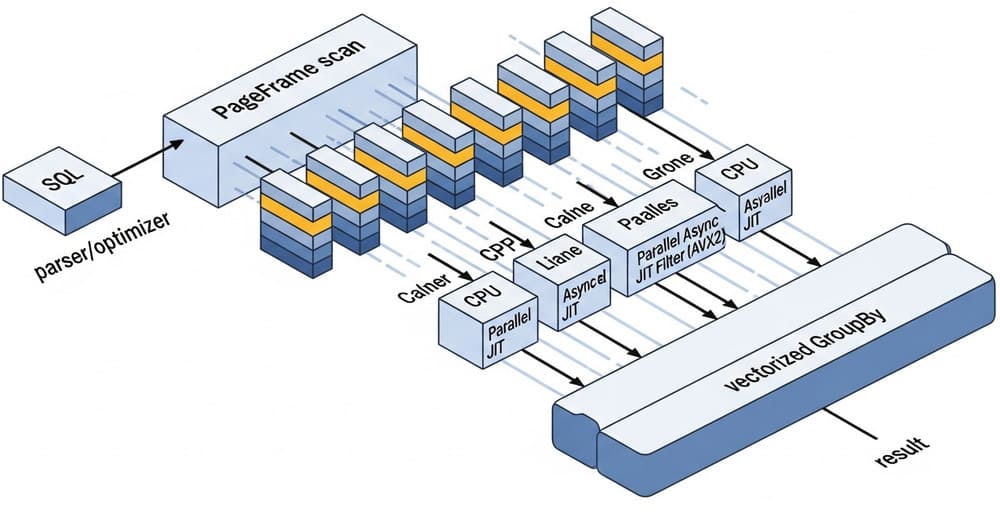

Image prompt

“A clean, modern architecture diagram of a Databricks Lakeflow Jobs workflow orchestrating a SQL task, a DLT pipeline task, and a notebook task with dependencies, retries, and notifications — minimalistic, high-contrast, 3D isometric style.”

Tags

#Databricks #Lakeflow #DeltaLiveTables #DataEngineering #Orchestration #ETL #SQL #DLT #Workflows #CI_CD

Leave a Reply