Kafka → VeloDB in 60 Minutes: A Real-Time Streaming Blueprint (Apache Doris)

Meta description (158 chars):

Build a Kafka→VeloDB pipeline in under an hour. Learn table design, Routine Load, async MVs, and verification steps for sub-second analytics on Apache Doris.

Why this matters (and what you’ll get in 60 minutes)

Your PM wants a live dashboard that feels instant. Your warehouse hates high-QPS, low-latency queries. VeloDB—a commercial distribution of Apache Doris—is designed for real-time OLAP with familiar SQL and simple ops. In this guide you’ll stand up a Kafka → VeloDB stream, model the data for speed, add a materialized view for query acceleration, and verify performance—fast and clean. (VeloDB)

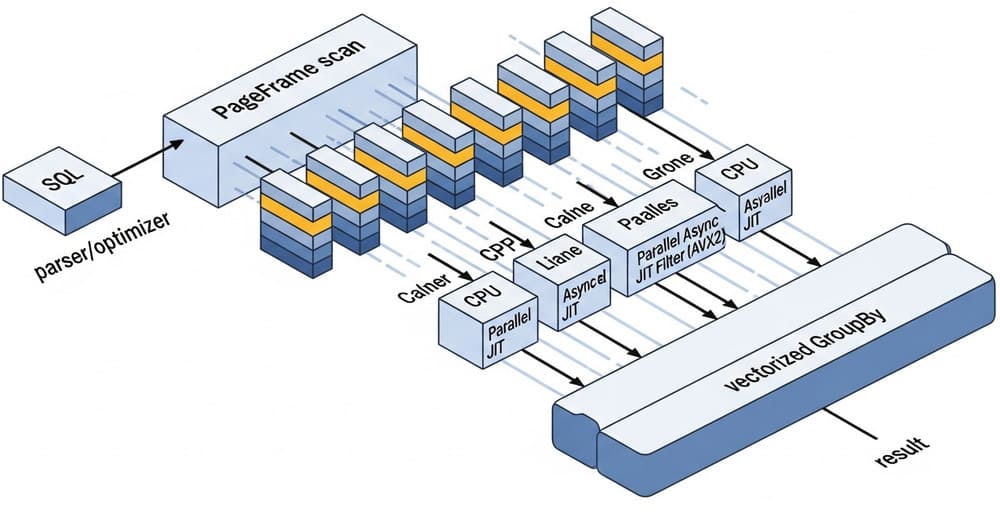

Architecture at a glance

Kafka topic (events/json)

│

▼

Routine Load (Doris-native, exactly-once)

│

▼

VeloDB (Apache Doris FE/BE)

- Raw events table (Duplicate Key)

- Async Materialized View (rollups)

│

▼

BI / APIs (sub-second queries)

- Routine Load continuously consumes from Kafka with exactly-once semantics and supports CSV/JSON.

- Duplicate Key tables keep raw event detail with efficient scans.

- Async Materialized Views (MVs) rewrite queries transparently for fast rollups. (Apache Doris)

Prerequisites (5–10 min)

- A running VeloDB warehouse (SaaS or BYOC). For regulated networks, BYOC supports PrivateLink/private endpoints so data stays in your VPC. (VeloDB Docs)

- A Kafka broker and a topic (e.g.,

orders). - FE SQL access (MySQL protocol) to VeloDB/Doris. (Apache Doris)

Tip for BYOC folks: confirm private connectivity from your app VPC to the VeloDB control plane/endpoints before loading data. (VeloDB Docs)

Step-by-step: Kafka → VeloDB in 60 minutes

T-50 — Create a raw events table (Duplicate Key)

Use a Duplicate Key model for append-only event streams. Keep the sort key short (≤3 cols) and partition by time for pruning. (Apache Doris)

CREATE DATABASE IF NOT EXISTS rt_demo;

USE rt_demo;

CREATE TABLE orders_events (

event_time DATETIME NOT NULL,

order_id BIGINT NOT NULL,

customer_id BIGINT NOT NULL,

status VARCHAR(16),

amount_usd DECIMAL(12,2)

)

DUPLICATE KEY(event_time, order_id)

PARTITION BY RANGE(event_time) (

FROM ("2025-11-01") TO ("2025-12-01") INTERVAL 1 DAY

)

DISTRIBUTED BY HASH(order_id) BUCKETS 16;

T-40 — Produce a trickle of JSON events (optional helper)

If you don’t already have a producer, send simple JSON like:

{"event_time":"2025-11-20T15:00:00","order_id":101,"customer_id":42,"status":"PAID","amount_usd":39.90}

Routine Load understands JSON and can map fields with jsonpaths, json_root, and strip_outer_array. (Apache Doris)

T-35 — Start Routine Load from Kafka

This runs server-side, continuously pulling from Kafka with exactly-once guarantees:

CREATE ROUTINE LOAD rt_demo.load_orders

ON orders_events

COLUMNS(event_time, order_id, customer_id, status, amount_usd)

PROPERTIES

(

"desired_concurrent_number" = "3",

"max_batch_interval" = "5",

"max_batch_rows" = "200000",

"max_batch_size" = "209715200", -- 200MB

"strict_mode" = "false",

"format" = "json",

"jsonpaths" = "[\"$.event_time\",\"$.order_id\",\"$.customer_id\",\"$.status\",\"$.amount_usd\"]"

)

FROM KAFKA

(

"kafka_broker_list" = "broker1:9092,broker2:9092",

"kafka_topic" = "orders",

"property.group.id" = "doris.orders.rt.v1",

"kafka_partitions" = "0,1,2"

);

Useful knobs later: kafka_offsets, max_filter_ratio, partition pinning, etc. (doris.incubator.apache.org)

Why Routine Load first? Fewer moving parts. If you need CDC merges or heavy transforms, the Flink Doris Connector is your next step. (Apache Doris)

T-25 — Verify ingestion

-- Job status

SHOW ROUTINE LOAD FOR rt_demo.load_orders;

-- Data sanity

SELECT status, COUNT(*)

FROM orders_events

WHERE event_time >= now() - INTERVAL 10 MINUTE

GROUP BY status

ORDER BY 2 DESC;

T-20 — Add an Async Materialized View for rollups

Async MVs transparently accelerate queries; the planner rewrites to the MV when it matches. Great for minute-bucket aggregates. (Apache Doris)

CREATE ASYNC MATERIALIZED VIEW mv_orders_1min

BUILD IMMEDIATE

REFRESH ASYNC

PARTITION BY date_trunc('day', event_time)

DISTRIBUTED BY HASH(order_id) BUCKETS 16

AS

SELECT

date_trunc('minute', event_time) AS ts_minute,

COUNT(*) AS orders,

SUM(amount_usd) AS revenue,

SUM(CASE WHEN status='FAILED' THEN 1 ELSE 0 END) AS failed

FROM rt_demo.orders_events

GROUP BY date_trunc('minute', event_time);

MV refresh behavior, data-lake support, and rewrite rules are documented in detail here. (Apache Doris)

T-10 — Query the MV (what your dashboard should run)

SELECT ts_minute, orders, revenue, failed

FROM mv_orders_1min

WHERE ts_minute >= now() - INTERVAL 1 HOUR

ORDER BY ts_minute DESC

LIMIT 60;

If you query the base table with an equivalent shape, Doris can rewrite to the MV automatically. (Apache Doris)

T-5 — (Optional) Stream with Flink instead

If your team needs CDC joins or richer transforms, use the Flink Doris Connector sink:

-- Flink SQL sketch

CREATE TABLE src_orders (...) WITH (...); -- Kafka source

CREATE TABLE sink_doris_orders_events (...) WITH (...); -- Doris sink

INSERT INTO sink_doris_orders_events

SELECT * FROM src_orders;

Connector docs (source/sink, version matrix) are here. (Apache Doris)

Best practices (that save you pain)

- Pick the right table model: Use Duplicate Key for raw streams; don’t force UNIQUE unless you truly need upserts. (Apache Doris)

- Keep sort keys tight: ≤3 columns; include your main time column if queries are time-bounded. (Apache Doris)

- Partition to your SLA: Daily is sane; go hourly only when needed. Align MV

PARTITION BYwith the base table’s partitioning. (Apache Doris) - Materialize actual query shapes: Minute/hour buckets, top-N dimensions—don’t create speculative MVs. (Apache Doris)

- BYOC networking: Lock down private connectivity (PrivateLink/priv endpoints) before load tests. (VeloDB Docs)

Common pitfalls

- JSON mapping errors: If you see nulls, check

jsonpaths,json_root, andstrip_outer_arraysettings. (Apache Doris) - One mega cluster for everything: Separate ingest and BI if concurrency spikes. (VeloDB makes this easy at the control plane.) (VeloDB)

- MV sprawl: A dozen overlapping MVs will burn compute and cache. Start with one that your dashboard actually uses. (Apache Doris)

FAQ (quick hits)

- Is this exactly-once from Kafka? Yes—Routine Load provides exactly-once semantics. (Apache Doris)

- Do I need a custom driver? VeloDB/Doris speaks MySQL protocol, so most SQL tools work out of the box. (Apache Doris)

- Can I start with CSV? Yes, but prefer JSON for evolving schemas. Routine Load supports both. (Apache Doris)

Summary & call to action

In an hour, you can go from Kafka topic to a VeloDB table plus an async MV that feeds a sub-second dashboard. Start slim—Duplicate Key table, one Routine Load job, one MV—then scale out only when user queries prove the need. If you later require CDC merges or transforms, layer in Flink Doris Connector without re-platforming. (Apache Doris)

Next step: Ship this to staging, chart the MV directly, and baseline p95/p99 latency before widening scope.

Internal link ideas (for your site)

- “Choosing Duplicate vs Unique vs Aggregate in Doris/VeloDB”

- “Designing Daily vs Hourly Partitions for Streaming Workloads”

- “Async Materialized Views: Rewrite Patterns that Actually Hit”

- “BYOC Networking for VeloDB: PrivateLink and VPC Patterns”

Image prompt (for DALL·E / Midjourney)

“A clean isometric diagram of a Kafka→VeloDB pipeline: Kafka topic, Routine Load, FE/BE nodes, an async materialized view, and a BI dashboard. Minimalist, high-contrast, modern.”

Tags

#VeloDB #ApacheDoris #Kafka #RealTimeAnalytics #MaterializedViews #DataEngineering #Streaming #BYOC #Flink #OLAP

Leave a Reply