QuestDB Ingestion at Millions of Rows/Second: ILP vs PGWire vs Kafka (When to Use Each)

Meta description:

Choosing between ILP, PGWire, and Kafka for QuestDB ingestion? See when each path wins, with real configs, code, and trade-offs to hit millions of rows/sec reliably.

Introduction: you’ve got data on firehose mode

You’ve stood up QuestDB, producers are screaming, and dashboards can’t lag. The choice that decides whether you ship or struggle isn’t SQL—it’s ingestion path. Use the wrong one and you’ll fight backpressure, duplicated rows, or random latency spikes. Use the right one and QuestDB happily gulps millions of rows/second with predictable behavior. Here’s the no-BS guide for mid-level engineers.

The TL;DR (spoiler)

- ILP (InfluxDB Line Protocol) is the default for high-throughput writes. It’s built for speed and is the path QuestDB recommends for ingestion. (py-questdb-client.readthedocs.io)

- PGWire (Postgres protocol) is mainly for querying. It works for small/occasional inserts, but it’s not the recommended path for heavy data ingest. (QuestDB)

- Kafka → QuestDB via the official Kafka Connect sink is the sweet spot for streaming pipelines and CDC—recommended for most teams. (QuestDB)

Architecture choices, clearly

What each path looks like

- ILP (HTTP/TCP)

Producers write directly to QuestDB using the ILP clients. Default ports: HTTP 9000, TCP 9009. HTTP is preferred for error feedback and retries. (QuestDB) - PGWire (Postgres protocol, port 8812)

Reuse existing Postgres drivers for queries; inserts work but aren’t optimized for firehose ingestion. (QuestDB) - Kafka Connect sink

Kafka topics → QuestDB Kafka Connector (uses ILP under the hood) → QuestDB. Recommended for most users; easy CDC with Debezium. (QuestDB)

Comparison table

| Dimension | ILP (HTTP/TCP) | PGWire | Kafka Connect Sink |

|---|---|---|---|

| Primary use | High-throughput ingestion | Querying (light writes) | Streaming ingestion / CDC |

| Throughput | Highest (designed for writes) | Lower | High; scales with Connect |

| Ordering | Per-producer; late/out-of-order handled by table design | Same | Topic partition order; good for CDC |

| Error handling | HTTP has clear feedback; TCP is minimal | Driver-level | Built-in retries/offsets |

| Operational effort | Low | Low (but not for heavy ingest) | Moderate (Connect ops) |

| Typical pick | Sensors, metrics, tick data | Ad-hoc inserts, admin tools | Event streams, CDC, microservices |

(“ILP is the fastest way to insert data” and “PGWire is primarily recommended for querying” are QuestDB’s official stances.) (py-questdb-client.readthedocs.io)

Real examples (copy–paste friendly)

A) ILP via Python (HTTP, port 9000)

# pip install -U questdb[dataframe]

from questdb.ingress import Sender, TimestampNanos

conf = "http::addr=localhost:9000;"

with Sender.from_conf(conf) as sender:

sender.row(

"trades",

symbols={"symbol": "BTC-USD", "side": "buy"},

columns={"price": 39269.98, "amount": 0.001},

at=TimestampNanos.now()

)

sender.flush()

QuestDB’s official Python client implements ILP and explicitly positions ILP as the fastest insert path. (py-questdb-client.readthedocs.io)

Notes

- Use HTTP unless you have a reason to choose TCP; HTTP gives better error reporting & retries. (QuestDB)

- Default ILP ports: 9000 (HTTP), 9009 (TCP). (QuestDB)

B) PGWire for light writes (and queries)

-- Connect with any PostgreSQL driver to port 8812

-- Example insert (okay for small volumes)

INSERT INTO device_heartbeat (device_id, status, ts)

VALUES ('gw-17', 'ok', now());

Use PGWire mainly to query QuestDB; it’s compatible with typical Postgres clients. For heavy ingest, prefer ILP. Also note timestamp handling nuances over PGWire (TIMESTAMP WITHOUT TIME ZONE in transit). (QuestDB)

C) Kafka → QuestDB with the official sink

Create questdb-connector.properties:

name=questdb-sink

connector.class=io.questdb.kafka.QuestDBSinkConnector

client.conf.string=http::addr=localhost:9000;

topics=example-topic

table=example_table

# converters (JSON example)

key.converter=org.apache.kafka.connect.storage.StringConverter

value.converter=org.apache.kafka.connect.json.JsonConverter

value.converter.schemas.enable=false

Start Kafka Connect and you’re ingesting. This is recommended for most users; the connector uses ILP and plays nicely with Debezium for CDC. (QuestDB)

How to choose (decision guide)

Pick ILP if…

- You control producers, want max throughput, and need simple, direct writes (metrics, IoT, ticks). ILP is the insertion fast path. (py-questdb-client.readthedocs.io)

Pick Kafka Connect if…

- You already have Kafka, need durable streaming, backpressure handling, transformations, or CDC via Debezium. QuestDB’s connector is first-party and the docs literally label it “recommended for most people.” (QuestDB)

Pick PGWire if…

- You’re query-centric and only do small administrative inserts (migrations, quick scripts). For sustained ingest, switch to ILP. (QuestDB)

Performance realities & what “millions/sec” actually means

QuestDB’s own docs state that each primary node can sustain up to ~5 million rows/sec in TSBS benchmarks (hardware and schema dependent). That’s why the ingestion path matters so much. (QuestDB)

Best practices (what saves you in production)

- Use WAL tables + dedup for idempotent ingest

Turn on deduplication and set correct UPSERT KEYS so retries or replays don’t create duplicates. (QuestDB) - Choose ILP/HTTP unless you truly need TCP

HTTP brings error feedback and simpler ops; TCP is mainly for legacy/compat. (QuestDB) - Mind ports & security

Expose only what you need: 9000 (HTTP/REST/ILP), 8812 (PGWire), 9009 (ILP/TCP)—lock down with firewalls/TLS where appropriate. (QuestDB) - Kafka Connect configs: keep it boring

Start with JSON, then move to Avro/Protobuf if needed; let Connect handle retries and offsets. The QuestDB sink uses ILP under the hood. (QuestDB) - Design timestamps & partitions first

Even perfect ingestion chokes on poor schema. Set the designated timestamp and partitions appropriately before you push load. (See QuestDB schema & ingestion overview.) (QuestDB) - Watch write amplification and out-of-order data

Track write amplification; high out-of-order rates can degrade throughput. (QuestDB)

Common pitfalls (how teams shoot their own foot)

- Using PGWire for the firehose. It’ll work… until it doesn’t. Switch heavy writes to ILP. (QuestDB)

- Skipping UPSERT KEYS with dedup. Dedup without proper keys won’t prevent duplicates. (QuestDB)

- TCP ILP with Kafka Connect. The connector docs advise HTTP; TCP lacks delivery guarantees for this path. (QuestDB)

- Timezone confusion over PGWire. Timestamps are transmitted as TIMESTAMP WITHOUT TIME ZONE; configure clients to treat them as UTC. (QuestDB)

Internal link ideas (official only)

- Ingestion Overview (how to ingest: ILP, PGWire, Kafka, Spark, etc.). (QuestDB)

- ILP Overview & Advanced Settings (HTTP vs TCP, auth/TLS, message format). (QuestDB)

- PGWire Client Intro (when to use, timestamp caveats). (QuestDB)

- Kafka Ingestion Overview + QuestDB Kafka Connector docs. (QuestDB)

- Python ILP client (quickstart & config). (py-questdb-client.readthedocs.io)

- Deduplication &

ALTER TABLE … DEDUP ENABLE. (QuestDB)

Conclusion & takeaways

If you remember one thing:

Use ILP for raw speed, Kafka Connect for streaming & CDC, and PGWire for querying (and only light inserts). That mapping alone prevents 80% of production pain.

Quick checklist

- Ingestion path chosen: ILP | Kafka | PGWire

- WAL + dedup + UPSERT KEYS configured

- Designated timestamp & partitions set

- Ports & TLS locked down

- Dashboards validated under load

Call to action: Want a follow-up deep dive? I can write “QuestDB Time-Series SQL in Practice: SAMPLE BY, LATEST ON, ASOF JOIN” next—so your ingestion turns straight into fast analytics.

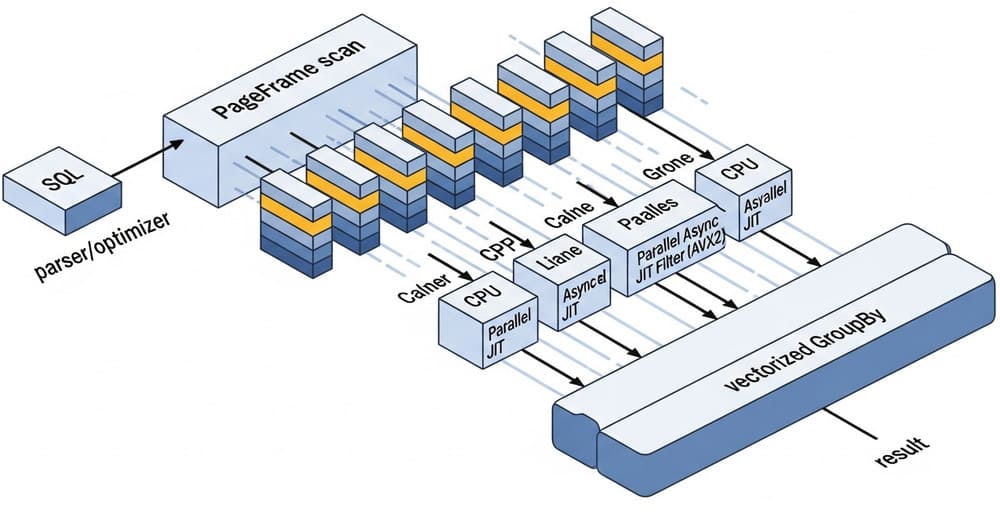

Image prompt (for DALL·E/Midjourney)

“A clean, modern data architecture diagram showing three ingestion paths into a QuestDB cluster: ILP (HTTP 9000), PGWire (8812), and Kafka Connect (topics → sink → ILP). Minimalistic, high-contrast, 3D isometric style.”

Tags

#QuestDB #TimeSeries #ILP #Kafka #CDC #Postgres #PGWire #DataIngestion #Streaming #DataEngineering

Leave a Reply