Idempotent Ingest in QuestDB: WAL Deduplication & UPSERT KEYS (the Practical Guide)

Meta description:

Make QuestDB ingest idempotent with WAL deduplication and UPSERT KEYS. Learn how it works, when to use it, performance trade-offs, and step-by-step SQL + Python examples (real world ready).

Why you should care (quick story)

You’re replaying a Kafka topic after a bad deploy. The data pipeline starts from T-30 minutes to be “safe.” Your dashboards spike, alerts fire, and suddenly you’re debugging ghosts—duplicates.

QuestDB’s deduplication on WAL tables lets you replay safely and keep a single source of truth: send data again, and the database reconciles it for you. (QuestDB)

What “idempotent ingest” means in QuestDB

Idempotent ingest = You can write the same logical events more than once and end up with exactly one canonical row per event.

In QuestDB this is implemented at the storage level for WAL tables using deduplication with UPSERT KEYS:

- You tell QuestDB which columns uniquely identify an event (your UPSERT KEYS).

- On insert, QuestDB replaces any existing row that matches all those key columns (a last-writer-wins update).

- Dedup applies only to new inserts after it’s enabled; it doesn’t rewrite historical duplicates. (QuestDB)

Important boundaries

• WAL-only: Dedup works only on WAL tables.

• Replace semantics: Today it’s last-writer-wins; “skip/merge” variants are on the roadmap. (QuestDB)

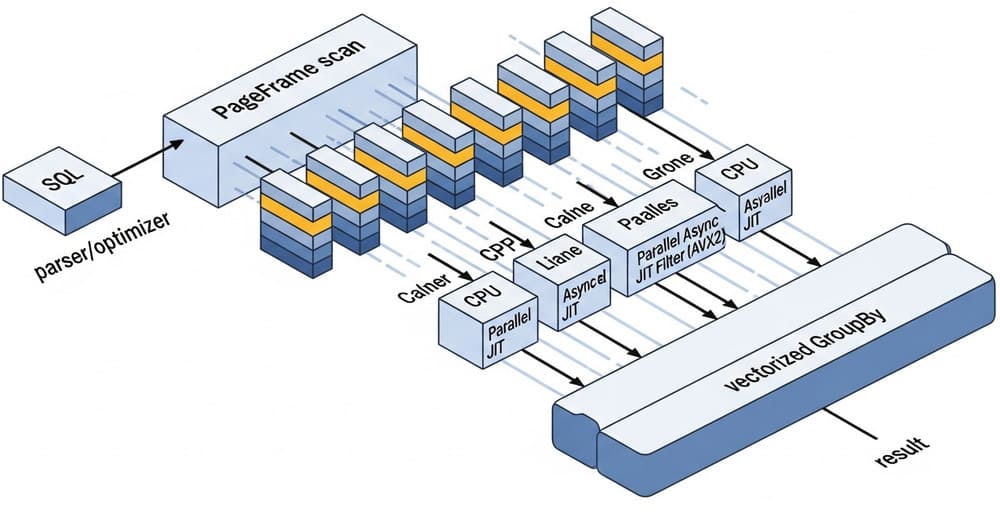

Quick mental model (diagram)

Imagine a mailbox in front of the table:

[Write-Ahead Log] --(conflict check on UPSERT KEYS)--> [Table Partitions by time]

|

(match? replace : insert)

WAL gives durability and concurrency; the dedup step checks your key set, then insert or replace. (QuestDB)

Choosing good UPSERT KEYS (the make-or-break step)

Pick a key set that uniquely identifies an event at the intended grain:

- IoT/metrics:

(ts, device_id)or(ts, metric, tag) - Trades/orders:

(ts, trade_id)or(ts, symbol, exchange, seq) - Logs:

(ts, source, line_hash)

Practical tips:

- Prefer

SYMBOLfor repetitive tags (device, symbol, region). It saves space and can be indexed for fast filters. (QuestDB) - Don’t key on floats that can drift (e.g.,

price). - Keep the key count minimal—more keys = more work per insert during conflict checks. (QuestDB)

Step-by-step: enable dedup (new or existing tables)

A) Create a new WAL table with dedup + keys

CREATE TABLE trades (

ts TIMESTAMP,

symbol SYMBOL CAPACITY 1024 CACHE,

trade_id LONG,

price DOUBLE,

size INT

)

TIMESTAMP(ts)

PARTITION BY DAY

WAL

DEDUP UPSERT KEYS (ts, trade_id);

WALensures durability and parallel ingest;DEDUP UPSERT KEYS (ts, trade_id)turns on last-writer-wins for those keys. (QuestDB)

B) Turn on dedup for an existing WAL table

ALTER TABLE trades

DEDUP ENABLE UPSERT KEYS (ts, trade_id);

- Works only if

tradesis a WAL table. - Does not clean historical duplicates; it applies to new inserts going forward. (QuestDB)

Verify configuration:

SELECT column, upsertKey

FROM table_columns('trades')

ORDER BY column;

(Helpful to confirm which columns are keys.) (GitHub)

What actually happens? (truth table)

| You insert… | Keys | Result |

|---|---|---|

| A new event never seen before | (ts, trade_id) | Insert |

Same (ts, trade_id) with different fields | (ts, trade_id) | Replace existing row (LWW) |

| Multiple duplicates in the batch/stream | (ts, trade_id) | Table keeps one (the last) |

Mechanics: QuestDB checks for rows that match all UPSERT KEYS and replaces them with the new one. (QuestDB)

Replays & backfills (the safe way)

Scenario: an ingest failure at 12:34. You fix the app and decide to replay from 12:00 to cover any missed data. With dedup enabled:

- It’s safe to resend that window; duplicates are resolved on write. (QuestDB)

- If a WAL table got suspended (disk full, OS limits), fix the cause and resume:

ALTER TABLE trades RESUME WAL; -- or skip a bad txn explicitly: ALTER TABLE trades RESUME WAL FROM TXN 5;Monitor suspended tables via metrics. (QuestDB)

Example: Python ILP + replay loop

# pip install questdb

import time

from questdb.ingress import Sender, TimestampNanos

ILP_HOST, ILP_PORT = "127.0.0.1", 9009

def send_trade(sender, ts_ns, symbol, trade_id, price, size):

sender.row(

table="trades",

symbols={"symbol": symbol},

columns={"trade_id": trade_id, "price": price, "size": size},

at=ts_ns

)

# normal ingest

with Sender(ILP_HOST, ILP_PORT) as sender:

send_trade(sender, TimestampNanos.now(), "AAPL", 123, 189.25, 100)

# later: replay a safe window (duplicates will be replaced)

replay_epoch_ns = TimestampNanos.now() - 30 * 60 * 1_000_000_000

with Sender(ILP_HOST, ILP_PORT) as sender:

# idempotent resend; same trade_id at same ts will replace

send_trade(sender, replay_epoch_ns, "AAPL", 123, 189.30, 100)

- ILP is the recommended high-throughput ingest path; PGWire is best for querying. (QuestDB)

- Python ILP client reference if you need lower-level control. (py-questdb-client.readthedocs.io)

Verification query:

-- Expect exactly one row for a given (ts, trade_id)

SELECT count() FROM trades WHERE ts = $1 AND trade_id = $2;

Performance: what to expect (and how to tune)

- Dedup adds work per insert; the cost rises with more UPSERT KEYS and higher conflict rates. Test with real replay patterns. (QuestDB)

- Favor ILP for heavy ingest; keep PGWire for ad-hoc writes and reads. (QuestDB)

- Use

SYMBOLfor tags; consider indexing frequently filtered symbols. Mind cardinality. (QuestDB) - Monitor WAL health and apply latency (Prometheus metrics for suspended tables & apply rates). (QuestDB)

Common pitfalls (and how to avoid them)

- Not a WAL table: Dedup won’t work. Ensure

WAL(or convert at restart withALTER TABLE … SET TYPE WAL). (QuestDB) - Wrong keys: If keys don’t uniquely identify the event, you’ll “replace” legitimate distinct rows. Re-check data grain.

- Expecting retroactive cleanup: Enabling dedup does not deduplicate old data automatically—only new inserts. Use SQL to repair history if needed. (QuestDB)

- Too many keys: Each extra key increases comparison work; start with the minimal unique set. (QuestDB)

End-to-end checklist (copy/paste)

- Schema

TIMESTAMP(ts) PARTITION BY <granularity>WALDEDUP UPSERT KEYS (<minimal unique set>)(QuestDB)

- Ingest

- Use ILP clients for throughput; batch and flush appropriately. (QuestDB)

- Replay

- Re-send a fixed window with confidence; dedup will replace matches.

- If a table is suspended, RESUME WAL (optionally from a txn). Monitor with Prometheus. (QuestDB)

Internal link ideas (official)

- Data Deduplication (concepts & trade-offs) — how and why, replay guidance. (QuestDB)

- ALTER TABLE … DEDUP ENABLE (reference) — syntax, WAL-only note, forward-only effect. (QuestDB)

- CREATE TABLE (reference) —

WAL,DEDUP UPSERT KEYS, semantics = replace matches. (QuestDB) - Write-Ahead Log (concept) — WAL properties and requirements. (QuestDB)

- ILP Overview (ingestion) — recommended path for high-throughput writes. (QuestDB)

- PGWire Intro (querying) — why it’s great for reads, not bulk ingest. (QuestDB)

- ALTER TABLE RESUME WAL — recover from suspended WAL tables, optional txn skip. (QuestDB)

- Monitoring & alerting — metrics for suspended tables / apply rates. (QuestDB)

Conclusion & takeaways

- Idempotency is non-negotiable for real pipelines; QuestDB gives you a storage-level solution with WAL + dedup.

- Pick UPSERT KEYS that truly represent event identity; start small.

- Use ILP for firehose ingest; replay with confidence; monitor WAL health. (QuestDB)

Call-to-action:

Want a follow-up where we benchmark conflict rates (1%, 10%, 50%) across different key sets and show the impact? Say the word—I’ll publish the scripts and charts.

Image prompt (for DALL·E / Midjourney)

“A clean, modern diagram of a QuestDB WAL ingest pipeline with a deduplication gate comparing UPSERT KEYS (ts, trade_id) before writing into partitioned time-series storage — minimalistic, high contrast, 3D isometric style.”

Tags

#QuestDB #TimeSeries #IdempotentIngest #Deduplication #WAL #UPSERT #ILP #DataEngineering #StreamingData #Kafka

Leave a Reply