QuestDB PGWire: How to Query with PostgreSQL Clients—Without the Gotchas

Meta description (158 chars):

Query QuestDB using PostgreSQL clients over PGWire the right way: connection settings, timestamp handling, pooling, metadata, and when to use ILP instead.

Why this matters (quick story)

You’ve got dashboards and services already speaking PostgreSQL. QuestDB enters the stack for fast time-series analytics—and the easiest path is PGWire so those clients “just work.” Then the surprises show up: odd timestamps, missing pg_catalog tables, sluggish inserts. This guide cuts straight to what works, what doesn’t, and how to avoid the traps.

PGWire in QuestDB, at a glance

- What it is: QuestDB implements the PostgreSQL wire protocol (PGWire) so you can connect with standard Postgres drivers/tools. (QuestDB)

- What it’s best for: Querying. For high-throughput ingestion, use InfluxDB Line Protocol (ILP) clients instead. (QuestDB)

- Default port:

8812. (QuestDB) - Footgun to avoid: Some Postgres features/metadata aren’t implemented; clients expecting full PostgreSQL catalogs may break. (QuestDB)

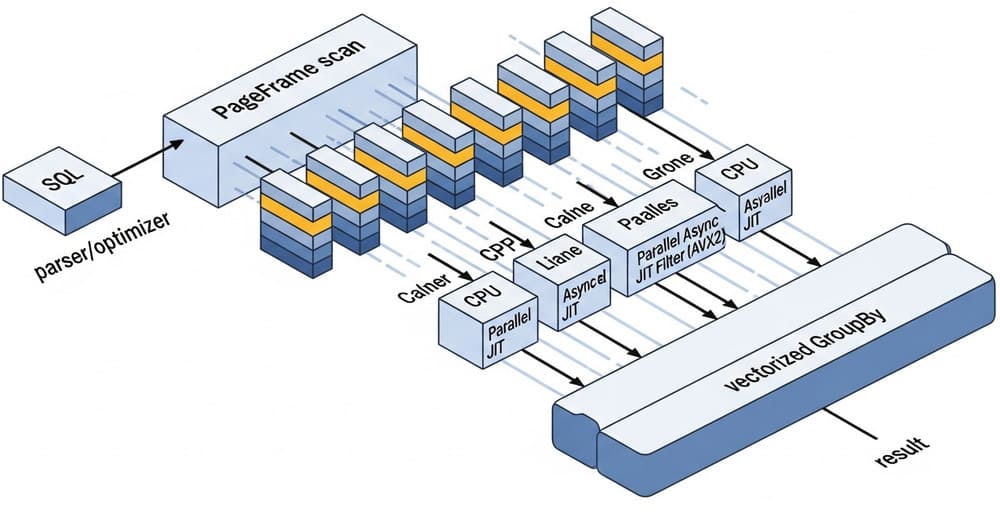

Architecture — where PGWire fits

Think of your stack as two lanes:

- Read lane (PGWire → SQL): services, BI tools, notebooks reuse Postgres drivers to query QuestDB.

- Write lane (ILP → ingestion): apps, stream processors (Kafka Connect, Debezium, etc.) push millions of rows/sec via ILP. (QuestDB)

A clean split keeps your reads simple and your writes fast.

Minimal client setup (works everywhere)

Python (psycopg 3)

import psycopg as pg

conn = pg.connect("host=127.0.0.1 port=8812 dbname=qdb user=admin password=quest", autocommit=True)

# Always treat timestamps as UTC in the app layer

sql = """

SELECT

ts,

symbol,

avg(price) AS avg_price

FROM trades

WHERE ts >= dateadd('d', -7, now())

SAMPLE BY 1h

"""

for row in conn.execute(sql):

print(row)

- Uses the PGWire port

8812. - Shows a time-series-friendly

SAMPLE BYquery.

Docs: PGWire intro, Postgres API reference. (QuestDB)

Node.js (pg)

process.env.TZ = 'UTC'; // Make timestamp handling predictable

const { Client } = require('pg');

const client = new Client({

host: '127.0.0.1', port: 8812, user: 'admin', password: 'quest', database: 'qdb'

});

(async () => {

await client.connect();

const { rows } = await client.query(

"SELECT symbol, last(price) AS px, last(ts) AS ts FROM trades LATEST ON ts PARTITION BY symbol LIMIT 10"

);

console.log(rows);

await client.end();

})();

- QuestDB timestamps are stored in UTC; some clients default to local time—force UTC in your app to avoid confusion. (QuestDB)

Common pitfalls (and how to fix them)

1) “Why do my metadata queries fail?”

Many ORMs/BI tools probe pg_catalog or information_schema tables that QuestDB doesn’t fully emulate. Use QuestDB meta functions instead: tables(), table_columns(), version() (also available as pg_catalog.version()). (QuestDB)

Quick checks:

SELECT version(); -- PostgreSQL protocol version (QuestDB reports compatible version)

SELECT * FROM tables(); -- list tables, partitions, WAL, TTL, etc.

SELECT * FROM table_columns(); -- per-column metadata

(QuestDB)

2) “Inserts over PGWire are slow.”

They will be, relative to ILP. PGWire is great for queries and small admin writes, not for firehose ingestion. Use first-party ILP clients (Node, .NET, Java, Python, etc.). (QuestDB)

3) “My timestamps look off.”

QuestDB stores timestamps in UTC, and PGWire represents them in ways that some clients interpret as local time. Normalize to UTC in your client or follow language-specific PGWire notes. (QuestDB)

4) “My driver expects full PostgreSQL behaviors.”

QuestDB’s storage model differs from PostgreSQL; some features simply don’t exist. Keep your usage to standard SQL + QuestDB’s time-series extensions and avoid deep Postgres-specific features in drivers/ORMs. (QuestDB)

Best practices for PGWire querying

- Pool connections in the client driver; reuse sockets for low-latency reads. (See per-language PGWire guides.) (QuestDB)

- Limit result sets: time-series tables are huge—use

WHERE ts >=+LIMIT. (Design for performance guidance.) (QuestDB) - Lean on time-series SQL:

- Downsample with

SAMPLE BY - “Latest per key” with

LATEST ON … PARTITION BY - Align streams with

ASOF JOIN(optionally withTOLERANCE)

(QuestDB)

- Downsample with

- Prefer symbols to strings for high-cardinality tags; cheaper storage & faster filters. (QuestDB)

- Inspect with meta functions (

tables(),table_storage()) rather thanpg_catalog. (QuestDB) - Keep reads and writes separated: small admin INSERTs via PGWire are fine; bulk/stream writes go to ILP. (QuestDB)

Comparison cheat-sheet

| Use case | PGWire (PostgreSQL clients) | ILP (QuestDB clients) |

|---|---|---|

| Primary fit | Querying | High-throughput ingestion |

| Typical port | 8812 | 9009 (TCP) / HTTP endpoints via ILP clients |

| Pros | Easy reuse of Postgres drivers; BI-tool friendly | Millions of rows/sec; schema auto-creation; streaming-friendly |

| Cons | Slower for bulk ingest; partial metadata emulation | Write-only; not a query protocol |

| When to choose | App reads, dashboards, ad-hoc SQL | Pipelines, Kafka/Debezium, batched sensor/event ingest |

Sources: networking layer & language-specific PGWire guides; ILP client docs. (QuestDB)

Real-world snippet: “Latest price per symbol” from a service

Python (psycopg):

sql = """

SELECT symbol, last(price) AS px, last(ts) AS ts

FROM trades

LATEST ON ts PARTITION BY symbol

LIMIT 100

"""

rows = conn.execute(sql).fetchall()

This query is stable and fast for dashboards showing a per-symbol “last tick.” (QuestDB)

Troubleshooting quick hits

- Connection refused? Ensure QuestDB is listening on 8812 and credentials are correct. (QuestDB)

- Tool can’t introspect schema? Use

tables()/table_columns()or QuestDB’s Web Console Schema Explorer. (QuestDB) - Timezone mismatch? Force UTC in the client or cast timestamps to epoch and convert app-side. (QuestDB)

Summary & call-to-action

Use PGWire to query QuestDB with your existing Postgres clients; use ILP when you need to go fast on ingest. Normalize timestamps to UTC, use meta functions instead of pg_catalog, and lean on time-series SQL (SAMPLE BY, LATEST ON, ASOF JOIN). Your reward: minimal code changes, maximum performance.

Next step: wire up your service with PGWire, and move the ingestion lane to ILP. If you hit a driver quirk, check the language-specific PGWire guide first.

Internal link ideas (official)

- PGWire Client Overview (index): (QuestDB)

- Networking layer (ports, protocols, caveats): (QuestDB)

- PostgreSQL & PGWire reference: (QuestDB)

- Node PGWire guide: (QuestDB)

- .NET PGWire guide: (QuestDB)

- Meta functions (tables, table_columns, version): (QuestDB)

- Time-series SQL (

ASOF JOIN,TOLERANCE): (QuestDB)

Image prompt

“A clean, modern diagram showing a service layer using PostgreSQL clients over PGWire (port 8812) to query QuestDB, while a separate pipeline ingests via ILP. Minimalistic, high-contrast, 3D isometric style.”

Tags

#QuestDB #PGWire #PostgreSQL #TimeSeries #DataEngineering #ILP #Scalability #Performance #SQL #Architecture

Leave a Reply