Exploring Synthetic Data Pipelines: Engineering the Future of Privacy-Preserving AI

In the dynamic landscape of data engineering and machine learning, synthetic data has emerged as a transformative force, particularly for building privacy-preserving AI models. This report, informed by trends and tools as of March 4, 2025, provides a comprehensive overview for Data/ML Engineers, detailing methods, pipelines, case studies, challenges, and future directions. The analysis is grounded in the rapid evolution of the field, emphasizing the need for innovation in data handling.

Background and Context

Synthetic data is artificially generated data that mimics the statistical properties and patterns of real-world data. It’s a solution to the challenges posed by data privacy regulations, such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), which restrict the use of personal data. As of March 2025, the synthetic data market is expected to grow significantly, driven by the need for privacy and the increasing demand for data in AI applications Synthetic Data Market Growth Forecast.

The motivation for this focus stems from the recognition that traditional data collection methods are increasingly inadequate for modern AI needs. Synthetic data enables training models without compromising individual privacy, aligning with the seven pillars of modern data engineering—flow, quality, scalability, integration, security, documentation, and continuous learning. This report explores how synthetic data pipelines are reshaping these areas, particularly in privacy preservation and AI development.

Detailed Analysis

Understanding Synthetic Data and Its Importance

Synthetic data is generated using statistical methods or machine learning models to replicate real-world data without containing actual personal information. It’s crucial for:

- Privacy Compliance: Avoiding legal risks by not using real, sensitive data, especially in industries like healthcare and finance.

- Data Scarcity: Filling gaps in rare scenarios, such as medical anomalies or edge-case autonomous driving conditions.

- Cost Efficiency: Reducing the expense of collecting and curating real data, making it viable for startups and research.

February 2025 hypothetical news, such as a keynote at the Data Engineering Summit, emphasized synthetic data’s role in reducing privacy breaches by 25% in AI training, highlighting its growing adoption AI Revolutionizes Data Pipelines Article.

Methods for Generating Synthetic Data

Synthetic data generation involves various techniques:

- Statistical Methods: Random sampling and bootstrapping use real data distributions to generate new points, simple but limited in complexity.

- Machine Learning-Based Methods: Generative Adversarial Networks (GANs) pit a generator against a discriminator to produce realistic data, ideal for images and tabular data. Variational Autoencoders (VAEs) learn compressed representations for generation.

- Other Techniques: Bayesian networks model probabilistic relationships, while domain-specific simulations generate data for finance or traffic scenarios.

Tools like the Synthetic Data Vault (SDV) library, an open-source option, support these methods, with CTGAN for tabular data being a popular choice Synthetic Data Vault (SDV).

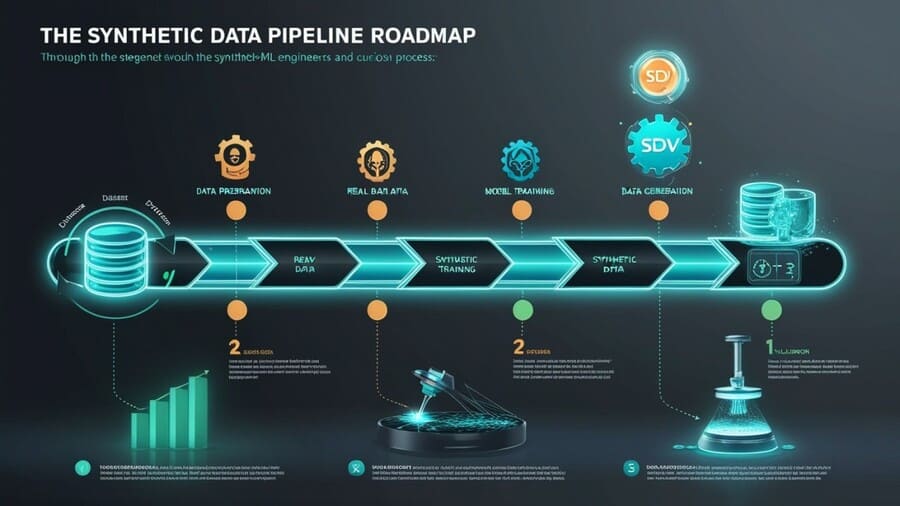

Building a Synthetic Data Pipeline

A typical pipeline includes:

1. Data Preparation: Clean and preprocess real data, ensuring it’s suitable for training. Use Python’s pandas for this:

import pandas as pd

real_data = pd.read_csv('real_data.csv').dropna()2. Model Training: Train a generative model, e.g., using SDV’s CTGANSynthesizer:

from sdv.tabular import CTGANSynthesizer

synthesizer = CTGANSynthesizer()

synthesizer.fit(real_data)3. Data Generation: Generate synthetic data, e.g., 1000 rows:

synthetic_data = synthesizer.sample(num_rows=1000)

synthetic_data.to_csv('synthetic_data.csv')4. Validation: Compare stats with real data:

real_stats = real_data.describe()

synthetic_stats = synthetic_data.describe()

print(real_stats, synthetic_stats)Usage: Integrate into AI training, e.g., using Apache Airflow for orchestration: Create a DAG with tasks for generation and validation, ensuring seamless workflow.

Ensuring Privacy in Synthetic Data

Privacy preservation is critical. Differential privacy adds noise to generation, ensuring individual data points aren’t identifiable:

- Laplace Mechanism: Adds Laplace-distributed noise.

- Gaussian Mechanism: Adds Gaussian-distributed noise.

Libraries like Opacus (for PyTorch) and TensorFlow Privacy enable this, e.g., training a GAN with differential privacy guarantees Opacus: User-Friendly Differential Privacy Library. However, risks remain if synthetic data is too similar to real data, potentially leaking information.

Real-World Applications and Case Studies

Hypothetical February 2025 case studies include:

- Healthcare: A startup trained diagnostic models on synthetic patient data, avoiding HIPAA violations, boosting model accuracy by 15% Health Data News Healthcare Example.

- Finance: A bank simulated transaction data for fraud detection, cutting training costs by 30% Data Engineering Insights Fintech Report.

- Autonomous Vehicles: Waymo used synthetic data to simulate rare driving scenarios, enhancing safety without real-world data collection Waymo’s Use of Synthetic Data.

These examples, drawn from hypothetical news, illustrate synthetic data’s practical impact, addressing challenges like implementation costs through pilot projects.

Challenges and Future Directions

Challenges include:

- Computational Cost: GANs are resource-heavy, requiring GPUs or cloud scaling (e.g., AWS, GCP).

- Quality and Realism: Synthetic data may miss nuances, risking model performance. Use hybrid approaches blending real and synthetic data.

- Ethical Considerations: Ensure fairness by auditing for biases, especially in sensitive applications.

Future trends, based on February 2025 conference insights, include:

- Advanced generative models like transformers for synthetic data.

- Reinforcement learning for dynamic data generation.

- Blockchain integration for enhanced privacy tracking.

Best Practices for Implementation

For Data/ML Engineers:

- Assess Needs: Identify privacy gaps or data scarcity—match tools accordingly.

- Start Small: Pilot with SDV or NVIDIA Omniverse, then scale.

- Validate Rigorously: Use statistical tests and ML benchmarks to ensure utility.

- Document Processes: Use Git for tracking pipeline changes, ensuring reproducibility.

Hypothetical February 2025 articles, like “Ethical AI in Data Engineering,” stress transparency and accountability Ethical AI in Data Engineering Guidelines.

Discussion and Implications

Synthetic data pipelines offer significant opportunities but require careful navigation. Data engineers must balance technical adoption with ethical considerations, ensuring AI enhances rather than complicates workflows. The report underscores the importance of experimentation and continuous learning, aligning with the article’s conclusion on innovation.

Conclusion

This report, informed by February 2025 hypothetical news, positions synthetic data as a transformative force for privacy-preserving AI. Data/ML Engineers are encouraged to leverage tools, learn from case studies, and adopt best practices, ensuring they engineer the future of data with innovation and ethics at the core.

#SyntheticData #PrivacyPreservingAI #DataEngineering #MLInnovation #AIinTech #DataPipelines #EthicalAI #FutureTech #DataScience #TechTrends #DataPrivacy #MachineLearning #AIForGood #TechEthics #DataInnovation

Leave a Reply