Temporal: Building Bulletproof Distributed Workflows

Introduction

Distributed systems are hard. Your service calls another service. That service is down. You retry. It comes back up but your original request already timed out. Now you have duplicate orders. Or lost payments. Or inconsistent state spread across three databases.

This happens every day in production systems.

Temporal solves this problem. It’s a workflow engine that guarantees your code executes completely, even when everything goes wrong. Services crash, networks fail, deployments happen. Your workflow picks up exactly where it left off.

This isn’t workflow orchestration like Airflow or Argo. Temporal is about durable execution. Your business logic becomes fault-tolerant without you writing retry logic, state machines, or recovery code.

This guide explains what Temporal actually is, how it works, and when you need it. You’ll see real patterns, understand the architecture, and learn where it fits in modern infrastructure.

What is Temporal?

Temporal is a platform for running reliable distributed applications. You write normal code in your language of choice. Temporal makes that code durable, recoverable, and fault-tolerant.

The core idea is simple but powerful. Your application code describes what should happen. Temporal guarantees it happens, handling all the distributed systems problems for you.

Temporal started at Uber as Cadence in 2016. In 2019, the team forked Cadence and created Temporal. The founders saw how fragile distributed applications were. Every team was building the same infrastructure to handle failures, retries, and state management.

Temporal provides that infrastructure as a platform. You focus on business logic. Temporal handles reliability.

How Temporal Works

Understanding Temporal requires grasping a few key concepts.

Workflows are durable functions. They describe your business process from start to finish. Workflows can run for seconds, hours, days, or months. They survive process crashes, deployments, and infrastructure failures.

Activities are the actual work. They call external services, write to databases, send emails, or process data. Activities can fail and be retried, so they should be written to be safe to run more than once.

Workers execute your code. They poll Temporal for work, run workflows and activities, then report results back. Workers are stateless. You can add or remove them freely.

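The Temporal Service is the orchestration layer. It tracks workflow state, manages task queues, and schedules retries. Because workflow progress is persisted, a workflow's logic effectively runs once even across crashes; activities, however, are executed at least once and may be retried. This is what you deploy (or use as a managed service).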

Here’s the magic. Your workflow code looks normal. But Temporal records every step. If a worker crashes mid-workflow, another worker picks up from the last recorded step. No state is lost.

A Simple Example

Here’s what Temporal code looks like in Python:

```python
from datetime import timedelta

from temporalio import activity, workflow

# payment_service and email_service are hypothetical clients for your own APIs


@activity.defn
async def charge_customer(customer_id: str, amount: float) -> str:
    # Call payment API
    result = await payment_service.charge(customer_id, amount)
    return result.transaction_id


@activity.defn
async def send_confirmation(email: str, transaction_id: str) -> None:
    # Send email
    await email_service.send(email, transaction_id)


@workflow.defn
class OrderWorkflow:
    @workflow.run
    async def run(self, order_data: dict) -> str:
        # This workflow survives crashes, deployments, failures
        transaction_id = await workflow.execute_activity(
            charge_customer,
            args=[order_data["customer_id"], order_data["amount"]],
            start_to_close_timeout=timedelta(seconds=30),
        )
        await workflow.execute_activity(
            send_confirmation,
            args=[order_data["email"], transaction_id],
            start_to_close_timeout=timedelta(seconds=30),
        )
        return transaction_id
```

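This looks like normal async code, but it runs on Temporal. Suppose the worker crashes after the charge completes but before the email is sent. Another worker replays the workflow's recorded history, sees that charge_customer already returned a transaction ID, and resumes at the email step. The customer is not charged a second time, and the email still goes out. (If the crash happens while the charge activity itself is in flight, Temporal retries that activity, which is why activities should be idempotent.)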
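The replay idea can be modeled in a few lines of plain Python. This is a toy, not Temporal's implementation or API: `ToyRuntime` and its `execute_activity` method are hypothetical names, and real Temporal also checks that the replayed code issues the same commands in the same order. The runtime records each completed activity result in a history list; when a new worker rebuilds the workflow from that history, recorded steps return their saved results instead of running again.

```python
from typing import Any, Callable, List


class ToyRuntime:
    """Toy stand-in for a durable-execution engine (not the Temporal API)."""

    def __init__(self, history: List[Any]) -> None:
        self.history = history            # persisted results of completed activities
        self.position = 0                 # how far this (re)run has progressed
        self.calls: List[str] = []        # activities actually executed in this run

    def execute_activity(self, fn: Callable[..., Any], *args: Any) -> Any:
        if self.position < len(self.history):
            # Replay: this step already completed, so reuse the recorded result
            result = self.history[self.position]
        else:
            # New step: run it for real and record the result
            result = fn(*args)
            self.calls.append(fn.__name__)
            self.history.append(result)
        self.position += 1
        return result


def charge_customer(customer_id: str, amount: float) -> str:
    return f"txn-{customer_id}-{amount:.2f}"


def send_confirmation(email: str, transaction_id: str) -> str:
    return f"sent {transaction_id} to {email}"


def order_workflow(rt: ToyRuntime, order: dict) -> str:
    txn = rt.execute_activity(charge_customer, order["customer_id"], order["amount"])
    rt.execute_activity(send_confirmation, order["email"], txn)
    return txn


# Simulate: the first worker charged the customer, then crashed.
persisted = ["txn-c1-10.00"]
rt = ToyRuntime(persisted)
order = {"customer_id": "c1", "amount": 10.0, "email": "a@example.com"}
print(order_workflow(rt, order))  # txn-c1-10.00
print(rt.calls)                   # ['send_confirmation']
```

The second worker never calls `charge_customer`; it only executes the step that was missing from history.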

When You Need Temporal

Temporal isn’t for every application. It shines in specific scenarios.

Long-running business processes are the sweet spot. Order fulfillment, user onboarding, loan approvals, insurance claims. These processes span hours or days and touch multiple systems.

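Critical workflows that can’t fail need Temporal. Payment processing, financial transactions, booking confirmations. When consistency matters more than anything, Temporal guarantees that the workflow, including any cleanup or compensation steps, runs to completion instead of stopping halfway.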

Complex state management across services becomes simple. Instead of tracking state in databases and message queues, your workflow code is the state machine. It’s explicit and testable.

Processes with external dependencies benefit hugely. You’re calling third-party APIs that might be flaky. Temporal handles retries, backoff, and timeout logic automatically.

Workflows that need human input work naturally. Your workflow can wait for days for a user to click a button. Temporal keeps it alive with no extra infrastructure.

Common Use Cases

E-commerce Order Processing

An order comes in. Charge the customer. Reserve inventory. Update the warehouse system. Send confirmation. Ship the product. Send tracking info.

Each step can fail. Payment might be declined. Inventory might be unavailable. The warehouse API might time out. The shipping carrier might return an error.

With Temporal, you write this flow once. Each step is an activity. Temporal handles retries, failures, and consistency. If something fails, the workflow waits and retries. If it succeeds, it moves forward.

Financial Transactions

Money movement needs to be correct. Debit one account. Credit another. Record the transaction. Send notifications. Update ledgers.

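These steps must all succeed or all fail. Traditional distributed transactions are complex, and Temporal does not provide one. Instead, it makes the saga pattern straightforward: if a later step fails, the workflow runs compensating activities (for example, reversing the debit), and Temporal guarantees that the compensation logic itself runs to completion.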
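A minimal sketch of the saga pattern, written as plain Python so it runs standalone. In a Temporal workflow each action and compensation would be an activity and the loop would be ordinary workflow code; `run_saga` and the account functions are hypothetical, not Temporal APIs. Each step pairs an action with the compensation that undoes it, and on failure the completed steps are undone in reverse order.

```python
from typing import Callable, List, Tuple

# (step name, action, compensation that undoes the action)
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step], log: List[str]) -> bool:
    """Run steps in order; if one fails, undo the completed ones in reverse order."""
    completed: List[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
        except Exception as exc:
            log.append(f"failed {name}: {exc}")
            for done_name, _, compensate in reversed(completed):
                compensate()
                log.append(f"undid {done_name}")
            return False
        log.append(f"did {name}")
        completed.append(step)
    return True


accounts = {"alice": 100, "bob": 0}


def debit_alice() -> None:
    accounts["alice"] -= 30


def refund_alice() -> None:
    accounts["alice"] += 30


def credit_bob() -> None:
    raise RuntimeError("bank B unavailable")  # simulate a failure after retries ran out


def reverse_credit_bob() -> None:
    accounts["bob"] -= 30


log: List[str] = []
ok = run_saga([("debit alice", debit_alice, refund_alice),
               ("credit bob", credit_bob, reverse_credit_bob)], log)
print(ok, accounts)  # False {'alice': 100, 'bob': 0}
```

What Temporal adds on top of this loop is durability: if the worker crashes halfway through the compensations, the workflow resumes and finishes them.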

User Onboarding

New user signs up. Send welcome email. Wait 24 hours. Check if they completed setup. If not, send reminder. Wait 3 days. Check again. Send another reminder. Wait a week. Mark as inactive if still not complete.

This spans days with multiple conditional branches. Temporal workflows can sleep for days without consuming resources. Timers are durable. If your service redeploys, the timer survives.
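In Temporal, the server rather than the worker owns the timer: the workflow history records that a timer started, and the server later delivers a timer-fired event, so no worker process sits waiting. In the Python SDK, `await asyncio.sleep(...)` inside workflow code becomes such a durable timer. The toy below only illustrates why that survives a redeploy: it persists an absolute deadline instead of an in-memory countdown. `DurableTimer`, `save`, and `load` are hypothetical names.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DurableTimer:
    """Toy durable timer: persists an absolute deadline rather than a countdown."""
    name: str
    fire_at: float  # epoch seconds when the timer should fire

    def remaining(self, now: float) -> float:
        return max(0.0, self.fire_at - now)


def start_timer(name: str, now: float, delay_seconds: float) -> DurableTimer:
    return DurableTimer(name, now + delay_seconds)


def save(timer: DurableTimer) -> str:
    return json.dumps(asdict(timer))  # what a persistence layer would store


def load(blob: str) -> DurableTimer:
    return DurableTimer(**json.loads(blob))


DAY = 24 * 60 * 60
stored = save(start_timer("reminder-1", now=0.0, delay_seconds=DAY))
# The service redeploys one hour later; a new process reloads the timer.
timer = load(stored)
print(timer.remaining(now=3600.0))  # 82800.0
```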

Data Processing Pipelines

Extract data from source. Transform it through multiple stages. Load into warehouse. Run validation. Generate reports. Send notifications.

Each stage might take minutes or hours. Failures can happen anywhere. Temporal tracks progress, handles retries per stage, and ensures the pipeline completes.

Infrastructure Provisioning

Provision a new customer environment. Create cloud resources. Configure networking. Deploy applications. Run health checks. Send welcome email.

This touches multiple cloud APIs. Some operations take minutes. Failures require cleanup. Temporal workflows can orchestrate complex provisioning with built-in retries, plus rollback steps that you write as compensating activities.

Architecture Deep Dive

Temporal has two main pieces.

The Temporal Service runs the platform. It persists state in Cassandra, PostgreSQL, or MySQL. Multiple services handle different concerns.

The Frontend Service receives API calls from workers and clients. It validates requests and routes them to appropriate services.

The History Service manages workflow state. Every workflow execution has a history of events. This history is the source of truth.

The Matching Service manages task queues. Workers poll queues for work. The matching service assigns tasks to workers.

The internal Worker Service runs Temporal's own background workflows, such as replication, archival, and system maintenance. It is separate from the workers that run your code.

Workers run your code. You deploy workers as part of your application. They connect to Temporal, poll for tasks, execute workflows and activities, and report results.

Workers are stateless. They can crash, scale up, or scale down. Temporal tracks which tasks are assigned where and handles failures.

The persistence layer stores workflow state and history. This needs to be durable. Cassandra provides scalability. PostgreSQL is simpler for smaller deployments.

Workflows in Detail

Workflows are deterministic functions. This is crucial to understand.

When a workflow executes, Temporal records every decision and event. If the workflow needs to resume, Temporal replays the history. Your workflow code runs again, making the same decisions.

This replay mechanism requires determinism. Replaying the same history must make the same decisions in the same order: schedule the same activities and start the same timers. Non-deterministic operations break this.

You can’t use random numbers directly. You can’t read system time directly. You can’t make external API calls from workflow code. These would change on replay.

Instead, Temporal provides deterministic versions. Use workflow.now() for time. Use workflow.random() for randomness. Call activities for external operations.
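Why seeding matters can be shown without Temporal at all. The Python SDK documents `workflow.random()` as returning a `random.Random` seeded deterministically for the workflow run, so a replay draws the same numbers. The toy below (hypothetical `decide` and `replays_match` helpers) "executes" some branching logic once and then replays it several times, first with a fixed seed and then with a fresh generator each time.

```python
import random
from typing import Callable, List


def decide(rng: random.Random, n: int = 32) -> List[str]:
    """Toy workflow logic: each step branches on a random draw."""
    return ["retry" if rng.random() < 0.5 else "skip" for _ in range(n)]


def replays_match(make_rng: Callable[[], random.Random], replays: int = 5) -> bool:
    """Execute once, then 'replay' several times; True if every replay decides the same way."""
    original = decide(make_rng())
    return all(decide(make_rng()) == original for _ in range(replays))


# Seeded once per workflow run and reused on replay: the idea behind workflow.random()
print(replays_match(lambda: random.Random(42)))  # True
# Fresh OS-seeded generator on every run: like calling random.random() in workflow code
print(replays_match(lambda: random.Random()))  # almost certainly False
```

With 32 random branches per run, the unseeded version reproducing the original decisions on every replay is vanishingly unlikely, which is exactly the mismatch Temporal reports as a non-determinism error.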

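This constraint gives you powerful guarantees, along with a responsibility. Workflows can run for months, and you can deploy new code while they run. But replay runs your current code against each workflow's existing history, so a change that alters the sequence of steps will break running workflows with a non-determinism error. Versioning is not automatic: you guard such changes with Temporal's versioning tools (patching or worker versioning).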

Activities Explained

Activities do the real work. They’re where you call databases, APIs, or external systems.

Activities are designed to be retried. They might run multiple times due to failures, timeouts, or worker crashes. This means activities should be idempotent when possible.

Activities also get a context that exposes useful information, such as the attempt number, a heartbeat mechanism, and cancellation notifications.

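Heartbeating is important for long-running activities. Your activity reports progress periodically, optionally attaching details such as how far it got. If the worker crashes, Temporal keeps the last heartbeat details, and the retried activity can read them and resume from there instead of starting over. Setting a heartbeat_timeout on the activity also lets Temporal notice a dead worker quickly instead of waiting for the start-to-close timeout.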

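```python
from temporalio import activity


@activity.defn
async def process_large_file(file_path: str) -> None:
    # On a retry, read the line count from the last heartbeat (empty on attempt 1)
    details = activity.info().heartbeat_details
    resume_after = details[0] if details else 0
    processed = 0
    with open(file_path) as f:
        for line in f:
            processed += 1
            if processed <= resume_after:
                continue  # already handled by an earlier attempt
            process_line(line)  # hypothetical per-line handler
            # Report progress every 1000 lines
            if processed % 1000 == 0:
                activity.heartbeat(processed)
```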

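If the worker crashes at line 5,500, the retry sees 5,000 as the last heartbeat and skips those lines, so only the work done since the last heartbeat is repeated. That repeated work is why process_line should be idempotent. (The SDK may throttle heartbeats, so the recorded value can lag slightly behind.)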

Retries and Error Handling

Temporal’s retry logic is sophisticated and configurable.

Activities have retry policies. Set initial interval, backoff coefficient, maximum interval, and maximum attempts.

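```python
from datetime import timedelta

from temporalio.common import RetryPolicy

# Inside a workflow's run method; send_email, email and message are defined elsewhere
await workflow.execute_activity(
    send_email,
    args=[email, message],
    start_to_close_timeout=timedelta(seconds=30),
    retry_policy=RetryPolicy(
        initial_interval=timedelta(seconds=1),
        backoff_coefficient=2.0,
        maximum_interval=timedelta(minutes=5),
        maximum_attempts=10,
    ),
)
```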

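This retries with exponential backoff. The first retry comes after 1 second, then 2, 4, 8 seconds, and so on, with no single wait longer than 5 minutes. maximum_attempts counts the first attempt, so after 10 attempts in total (9 retries) the activity fails and the error is raised in the workflow. If you leave maximum_attempts unset, activities retry indefinitely by default.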
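To see the whole schedule for those settings, here is a small calculation in plain Python. It is a sketch of the backoff formula (each wait is the initial interval times the coefficient raised to the retry index, capped at the maximum interval) and ignores the time spent running each attempt; `retry_delays` is a hypothetical helper, not part of the SDK.

```python
from typing import List


def retry_delays(initial: float, backoff: float, maximum_interval: float,
                 maximum_attempts: int) -> List[float]:
    """Seconds waited before each retry; maximum_attempts includes the first attempt."""
    delays = []
    for retry in range(maximum_attempts - 1):  # one wait before each of attempts 2..N
        delays.append(min(initial * backoff ** retry, maximum_interval))
    return delays


delays = retry_delays(1.0, 2.0, 300.0, 10)
print(delays)       # [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 64.0, 128.0, 256.0]
print(sum(delays))  # 511.0
```

With these settings the 5-minute cap is never reached: the last retry waits 256 seconds, and the total backoff is about 8.5 minutes. Raise maximum_attempts to 11 and the final wait would be capped at 300 seconds instead of 512.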

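Different errors can have different retry behavior. A business failure such as a declined card usually shouldn't be retried; a network error should. In the Python SDK you control this by raising ApplicationError with non_retryable=True from the activity, or by listing error types in the retry policy's non_retryable_error_types.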
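The per-failure decision a retry policy makes can be modeled in plain Python. This is a simulation, not SDK code: `run_with_retries`, `PermanentError`, `flaky_lookup`, and `declined_charge` are hypothetical, `PermanentError` stands in for a non-retryable error, and instead of sleeping the loop records each backoff delay in a list.

```python
from typing import Callable, List, Tuple, Type, TypeVar

T = TypeVar("T")


class PermanentError(Exception):
    """Hypothetical failure that retrying cannot fix, e.g. a declined card."""


def run_with_retries(
    fn: Callable[[], T],
    maximum_attempts: int,
    non_retryable: Tuple[Type[BaseException], ...],
    waits: List[float],
    initial: float = 1.0,
    backoff: float = 2.0,
) -> T:
    """Call fn until it succeeds, hits a non-retryable error, or runs out of attempts.

    Instead of sleeping, the backoff delay before each retry is appended to `waits`.
    """
    if maximum_attempts < 1:
        raise ValueError("this sketch needs maximum_attempts >= 1")
    attempt = 1
    while True:
        try:
            return fn()
        except non_retryable:
            raise  # permanent failure: give up immediately
        except Exception:
            if attempt >= maximum_attempts:
                raise  # retries exhausted: surface the last error
            waits.append(initial * backoff ** (attempt - 1))
            attempt += 1


calls = {"n": 0}


def flaky_lookup() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("timed out")  # transient: worth retrying
    return "ok"


def declined_charge() -> str:
    raise PermanentError("card declined")


waits: List[float] = []
print(run_with_retries(flaky_lookup, 5, (PermanentError,), waits), waits)  # ok [1.0, 2.0]

waits_declined: List[float] = []
try:
    run_with_retries(declined_charge, 5, (PermanentError,), waits_declined)
except PermanentError as exc:
    print("gave up:", exc, waits_declined)  # gave up: card declined []
```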

Workflows can catch activity failures and handle them.

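```python
from temporalio.exceptions import ActivityError

try:
    await workflow.execute_activity(charge_customer, ...)  # arguments elided
except ActivityError:
    # Handle payment failure, then re-raise so the workflow fails
    await workflow.execute_activity(send_payment_failed_email, ...)
    raise
```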

This gives you fine-grained control over error scenarios.

Signals and Queries

Workflows can receive signals from outside. This enables interaction with running workflows.

A signal is an asynchronous message to a workflow. It can carry data and trigger workflow logic.

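```python
from temporalio import workflow


@workflow.defn
class OrderWorkflow:
    def __init__(self) -> None:
        self.is_cancelled = False
        self.current_status = "processing"  # read by the get_status query

    @workflow.run
    async def run(self, order_data: dict) -> str:
        # Process order...
        if self.is_cancelled:
            self.current_status = "cancelled"
            return "cancelled"
        # Continue processing...
        self.current_status = "completed"
        return "completed"

    @workflow.signal
    def cancel(self) -> None:
        # Runs when an external client sends the "cancel" signal
        self.is_cancelled = True
```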

External systems can send the cancel signal. The workflow reacts appropriately.
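Inside Temporal, the durable way to block until a signal arrives is `await workflow.wait_condition(lambda: self.is_cancelled)`. The asyncio sketch below mimics only the control flow outside Temporal, so it runs without a server: a signal handler mutates state and wakes the waiting run coroutine. `ToyOrderWorkflow` is hypothetical and, unlike a real workflow, would lose its state if the process died.

```python
import asyncio
from typing import Tuple


class ToyOrderWorkflow:
    """Asyncio stand-in for a workflow that reacts to a 'cancel' signal (not Temporal code)."""

    def __init__(self) -> None:
        self.is_cancelled = False
        self._signalled = asyncio.Event()

    def cancel(self) -> None:
        # "Signal handler": update state, then wake the waiting run() coroutine
        self.is_cancelled = True
        self._signalled.set()

    async def run(self, processing_seconds: float) -> str:
        # Wait until either a cancel signal arrives or processing time elapses
        try:
            await asyncio.wait_for(self._signalled.wait(), timeout=processing_seconds)
        except asyncio.TimeoutError:
            pass
        return "cancelled" if self.is_cancelled else "completed"


async def main() -> Tuple[str, str]:
    # Objects are created inside the running loop so the Event binds to it
    cancelled_wf = ToyOrderWorkflow()
    task = asyncio.create_task(cancelled_wf.run(processing_seconds=5.0))
    await asyncio.sleep(0.01)
    cancelled_wf.cancel()  # an external system sends the signal
    first = await task

    untouched_wf = ToyOrderWorkflow()
    second = await untouched_wf.run(processing_seconds=0.01)  # no signal arrives
    return first, second


print(asyncio.run(main()))  # ('cancelled', 'completed')
```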

Queries let you read workflow state without changing it.

```python
# Add to the OrderWorkflow class; current_status is kept up to date by the workflow
@workflow.query
def get_status(self) -> str:
    return self.current_status
```

This enables building dashboards or status pages that query running workflows.

Child Workflows and Parallel Execution

Workflows can start other workflows. This helps with composition and organization.

```python
# Start a child workflow and wait for its result (ProcessPaymentWorkflow is defined elsewhere)
result = await workflow.execute_child_workflow(
    ProcessPaymentWorkflow.run,
    args=[payment_data],
)
```

Child workflows run independently but maintain parent-child relationships. If the parent is cancelled, children can be cancelled too.

Parallel execution is straightforward. Use standard async patterns.

```python
import asyncio

# Run activities in parallel (arguments elided); gather waits for all three
results = await asyncio.gather(
    workflow.execute_activity(task1, ...),
    workflow.execute_activity(task2, ...),
    workflow.execute_activity(task3, ...),
)
```

Temporal handles the concurrency. Each activity might run on different workers.

Temporal vs Traditional Approaches

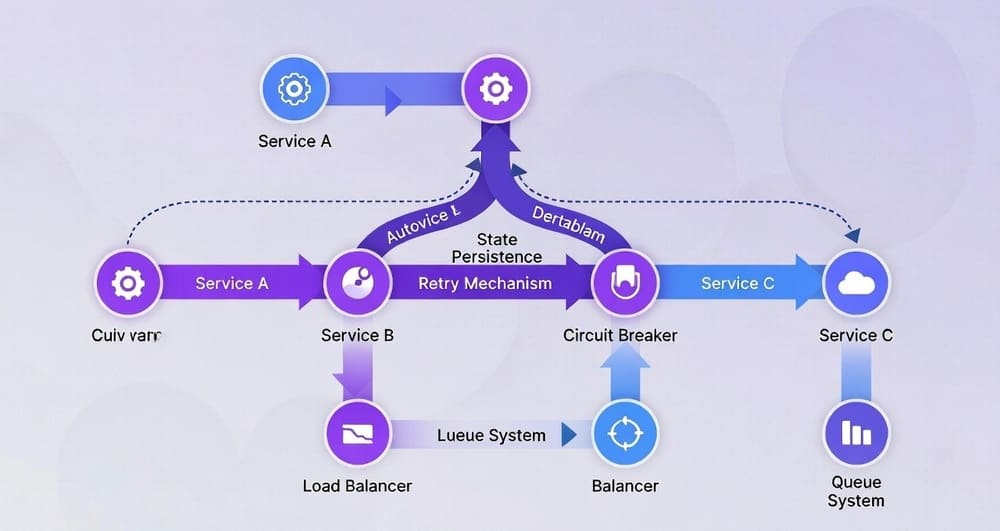

Traditional distributed systems use message queues, databases, and manual state tracking.

You publish a message. A consumer processes it. Updates a database. Publishes another message. Another consumer picks it up. Each step needs error handling, retry logic, and state management.

If a consumer crashes mid-processing, you need dead letter queues, idempotency checks, and recovery procedures. Getting this right is hard. Most teams do it partially.

Temporal internalizes this complexity. Your workflow is the state machine. Temporal handles persistence, retries, and recovery. You write business logic, not infrastructure code.

The trade-off is coupling to Temporal. Your critical workflows depend on the Temporal service. But you’d have infrastructure dependencies anyway (databases, queues). Temporal consolidates them.

Comparison with Other Tools

Temporal vs Airflow

Airflow orchestrates data pipelines. It’s schedule-based and Python-centric.

Temporal handles arbitrary business workflows. It’s event-driven and multi-language.

Airflow has a rich UI for data engineers. Temporal’s UI is more operational.

Use Airflow for batch data processing. Use Temporal for transactional workflows and long-running processes.

Temporal vs Step Functions

AWS Step Functions is serverless workflow orchestration.

Step Functions uses JSON state machines. Temporal uses code in your language.

Step Functions is fully managed on AWS. Temporal can run anywhere.

Step Functions has usage-based pricing that can get expensive. Temporal is open source (managed version available).

Use Step Functions for AWS-native applications where you want zero operations. Use Temporal for complex workflows that need more control.

Temporal vs Argo Workflows

Argo is Kubernetes-native workflow orchestration.

Argo workflows are YAML-based. Temporal workflows are code.

Argo is for container-based batch processing. Temporal is for transactional workflows.

Argo requires Kubernetes. Temporal can run with or without Kubernetes.

Use Argo for data processing pipelines on Kubernetes. Use Temporal for business process automation.

Temporal vs Camunda

Camunda is BPMN-based workflow orchestration popular in enterprises.

Camunda uses visual workflow design. Temporal uses code.

Camunda is Java-centric. Temporal supports multiple languages.

Camunda has strong enterprise features like process mining. Temporal is more developer-focused.

Use Camunda for business process management with non-technical stakeholders. Use Temporal for developer-driven workflow automation.

Multi-Language Support

Temporal supports several languages officially.

Go was first. Temporal itself is written in Go. The SDK is mature and feature-complete.

Java has strong support. Popular in enterprise environments. Integrates well with Spring.

TypeScript works for Node.js applications. Good for teams already using JavaScript.

Python has grown rapidly. The SDK is mature and Pythonic.

PHP and .NET are newer but advancing quickly.

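All SDKs target feature parity, and they interoperate through task queues. A workflow written in Go can schedule an activity on a task queue served by Python workers. All workers polling a given task queue should register the workflows and activities dispatched to it, so in practice each language gets its own queue.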

This flexibility helps heterogeneous environments. Your payment service is in Java. Your notification service is in Python. They can participate in the same workflow.

Deployment Options

Running Temporal requires the service and workers.

Self-hosted gives full control. Deploy on VMs, Kubernetes, or containers. Use Cassandra or PostgreSQL for persistence. This requires operational expertise but offers flexibility.

Temporal Cloud is the managed service. No infrastructure to manage. Automatic scaling, monitoring, and updates. You just deploy workers.

Helm charts make Kubernetes deployment straightforward. The official chart includes all services and dependencies.

For development, use Temporal’s CLI or Docker Compose. Get a local instance running in minutes.

Production deployments need careful planning. Persistence layer sizing matters. High availability requires multiple replicas. Monitoring and alerting are essential.

Monitoring and Observability

Temporal emits metrics compatible with Prometheus. Track workflow executions, activity attempts, task queue lag, and system health.

The Web UI shows running and completed workflows. Drill into execution history. View activity results and errors. See timers and pending activities.

Temporal records every workflow event. This creates an audit trail automatically. You can see exactly what happened and when.

Integrations exist for Datadog, Grafana, and other monitoring tools. Set up dashboards for workflow SLAs, error rates, and latency.

Testing Workflows

Testing is critical and Temporal makes it easier.

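Unit test workflows using Temporal’s test framework. In the Python SDK, WorkflowEnvironment starts a lightweight local Temporal server for you (downloading it on first use), so you don't need a separate deployment: start_local() runs the development server, and start_time_skipping() runs a test server that can fast-forward timers.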

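```python
from temporalio.testing import WorkflowEnvironment
from temporalio.worker import Worker


async def test_order_workflow():
    # Time-skipping test server; test_order_data and expected_transaction_id are fixtures
    async with await WorkflowEnvironment.start_time_skipping() as env:
        # A worker must be running to execute the workflow and its activities
        async with Worker(env.client, task_queue="test-orders",
                          workflows=[OrderWorkflow],
                          activities=[charge_customer, send_confirmation]):
            result = await env.client.execute_workflow(
                OrderWorkflow.run, test_order_data,
                id="test-order-1", task_queue="test-orders",
            )
            assert result == expected_transaction_id
```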

Mock activities to test workflow logic in isolation. Test happy paths and error scenarios.

Integration tests can use a real Temporal instance. Deploy to a test environment and run end-to-end tests.

Time-skipping in tests is powerful. A workflow that sleeps 24 hours? Skip ahead instantly in tests.

Versioning and Updates

Deploying new workflow code while old workflows are running is tricky. Temporal handles this through versioning.

The patching API lets you introduce changes safely.

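```python
# Inside a workflow; charge_with_retry and charge_once are hypothetical helpers
if workflow.patched("fix-payment-logic"):
    # New logic
    await charge_with_retry()
else:
    # Old logic, replayed by workflows that ran this step before the patch
    await charge_once()
```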

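Workflows that already executed this step before the deploy replay the old branch, because their history has no patch marker. Everything else, including every new workflow, takes the new branch and records the marker. No running workflows break during deployment. Once no workflows depend on the old branch, you can retire it; the Python SDK provides workflow.deprecate_patch for that transition.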
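The rule that decides which branch runs can be modeled in a few lines. This is a toy, not SDK code; `ToyPatchContext` is hypothetical, and it reduces Temporal's replay state to two inputs: which patch markers the run's history already contains, and whether the code is currently replaying steps that history already covers.

```python
from typing import Set


class ToyPatchContext:
    """Toy model of the rule behind workflow.patched(); not SDK code."""

    def __init__(self, markers: Set[str], replaying: bool) -> None:
        self.markers = markers      # patch markers already recorded in this run's history
        self.replaying = replaying  # True while re-executing steps that history already covers

    def patched(self, patch_id: str) -> bool:
        if patch_id in self.markers:
            return True   # this run took the new branch when it first executed
        if self.replaying:
            return False  # history predates the patch: stay on the old branch
        self.markers.add(patch_id)  # live execution: record a marker, take the new branch
        return True


# A workflow that ran the charge step before the deploy, now being replayed
old_run = ToyPatchContext(markers=set(), replaying=True)
# A workflow reaching the charge step for the first time after the deploy
new_run = ToyPatchContext(markers=set(), replaying=False)

print(old_run.patched("fix-payment-logic"))  # False -> charge_once()
print(new_run.patched("fix-payment-logic"))  # True  -> charge_with_retry()
# Later replays of new_run see the recorded marker and stay on the new branch
print(ToyPatchContext(set(new_run.markers), replaying=True).patched("fix-payment-logic"))  # True
```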

For major changes, create a new workflow version. Run both versions simultaneously. Drain old workflows gradually.

This makes continuous deployment much safer. You can deploy multiple times per day without breaking running workflows, provided every change that alters workflow history is versioned.

Challenges and Limitations

Temporal isn’t perfect. Several challenges come up.

Operational complexity is real. Running Temporal in production requires expertise. Cassandra or PostgreSQL need proper setup. Monitoring, scaling, and troubleshooting take effort. The managed cloud service helps but costs money.

The learning curve can be steep. Understanding determinism, replay, and activities takes time. Teams used to stateless services need to adjust their thinking.

Debugging replayed workflows is tricky. Your workflow code runs many times. Distinguishing between replay and actual execution takes practice.

Not suitable for everything. Simple API calls don’t need Temporal. Quick scripts don’t benefit. The overhead only makes sense for workflows that need durability.

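History size limits exist. Each workflow run's event history has a maximum length and size (by default roughly 50,000 events or 50 MB), so very long workflows or workflows with huge payloads can hit them. Design around this with continue-as-new and by keeping large payloads out of workflow inputs and results.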

Language ecosystem maturity varies. Go and Java are most mature. Newer SDKs might have gaps or bugs.

Best Practices

Production experience reveals patterns that work.

Keep workflows focused. One workflow should represent one business process. Don’t create god workflows that do everything.

Make activities idempotent. They will retry. Design them to handle being called multiple times safely.
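A common way to make a side effect idempotent is an idempotency key that stays the same across retries, assuming the downstream API supports one (many payment APIs do). In the Python SDK, `activity.info()` exposes `workflow_id` and `activity_id`, which are natural ingredients for such a key. The sketch below uses a hypothetical in-memory `FakePaymentService` so it runs standalone.

```python
import uuid
from typing import Dict


class FakePaymentService:
    """Hypothetical payment API that deduplicates requests by idempotency key."""

    def __init__(self) -> None:
        self.transactions: Dict[str, str] = {}  # idempotency key -> transaction id
        self.total_charged = 0.0

    def charge(self, idempotency_key: str, amount: float) -> str:
        if idempotency_key in self.transactions:
            # A retry of a request already processed: return the original result
            return self.transactions[idempotency_key]
        transaction_id = f"txn-{uuid.uuid4().hex[:8]}"
        self.transactions[idempotency_key] = transaction_id
        self.total_charged += amount
        return transaction_id


def charge_customer(service: FakePaymentService, workflow_id: str, amount: float) -> str:
    # The key must be stable across retries (derived from the workflow ID here),
    # never a fresh random value per attempt.
    return service.charge(f"{workflow_id}:charge", amount)


service = FakePaymentService()
first = charge_customer(service, "order-123", 25.0)
retry = charge_customer(service, "order-123", 25.0)  # e.g. a retry after a timeout
print(first == retry, service.total_charged)  # True 25.0
```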

Use meaningful task queue names. Organize work by type. Payment activities on one queue. Email activities on another. This lets you scale workers independently.

Set appropriate timeouts. Too short and you get spurious failures. Too long and failures take forever to detect.

Handle activity failures explicitly. Don’t let exceptions bubble up unhandled. Decide what failure means for your workflow.

Use signals for external events. When something outside triggers a workflow change, use signals instead of polling.

Monitor workflow metrics. Track SLAs, error rates, and execution time. Set alerts for critical workflows.

Test thoroughly. Workflows are hard to debug in production. Catch issues in tests.

Version workflows carefully. Use patching for small changes. New workflow types for major changes.

Keep history size reasonable. Very long workflows should use continue-as-new to reset history.
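In the Python SDK, calling `workflow.continue_as_new(...)` ends the current run and starts a new run with the same workflow ID and an empty history, passing along only the arguments you give it. The plain-Python toy below models that bookkeeping for a long-running summation: `Run`, `process_items`, and the 1,000-event threshold are hypothetical and chosen for illustration, far below Temporal's real limits.

```python
from dataclasses import dataclass, field
from typing import List

MAX_EVENTS_PER_RUN = 1000  # illustrative threshold, far below Temporal's real limits


@dataclass
class Run:
    run_number: int
    events: List[str] = field(default_factory=list)


def process_items(items: List[int]) -> List[Run]:
    """Sum items, starting a fresh run whenever the next item would overflow the history."""
    runs = [Run(run_number=1)]
    total = 0  # the only state carried into a new run, like continue-as-new's arguments
    for item in items:
        new_events = [f"scheduled {item}", f"completed {item}", f"total {total + item}"]
        current = runs[-1]
        if len(current.events) + len(new_events) > MAX_EVENTS_PER_RUN:
            # "Continue-as-new": close this run; the next starts with an empty history
            current = Run(run_number=current.run_number + 1,
                          events=[f"started with total {total}"])
            runs.append(current)
        total += item
        current.events.extend(new_events)
    return runs


runs = process_items(list(range(1, 1001)))
print(len(runs), max(len(r.events) for r in runs))  # 4 1000
print(runs[-1].events[-1])  # total 500500
```

No single run's history exceeds the threshold, yet the running total survives every handoff because it is passed explicitly to the next run.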

Real-World Adoption

Major companies rely on Temporal in production.

Netflix uses Temporal for video encoding workflows. Millions of videos processed through Temporal workflows.

Stripe runs payment workflows on Temporal. Critical financial transactions depend on it.

Snap orchestrates infrastructure provisioning with Temporal. Creating new environments for users.

Datadog uses Temporal for internal workflows and customer-facing features.

Box handles file operations through Temporal workflows.

The company raised significant funding and has strong backing. The team includes distributed systems experts from Uber and AWS.

Getting Started

Starting with Temporal is straightforward.

Install the CLI:

```shell
brew install temporal
```

Start a local server:

```shell
temporal server start-dev
```

Install an SDK (Python example):

```shell
pip install temporalio
```

Write a simple workflow and activity. Start a worker. Execute the workflow.

The documentation is comprehensive. Tutorials cover common patterns. The community is helpful on Slack and forums.

For production, evaluate whether to self-host or use Temporal Cloud. Consider your operational capabilities and budget.

The Future Direction

Temporal continues to evolve rapidly.

Multi-region support is improving. Running Temporal across regions for disaster recovery.

Performance optimizations are ongoing. Handling more workflows with less infrastructure.

New language SDKs are in development. Broader language support expands adoption.

Better developer tools are coming. Improved local development, testing, and debugging.

Enhanced observability with deeper integrations to monitoring systems.

The core guarantees remain: durable execution, workflow progress that survives failures, and fault tolerance. These fundamentals set Temporal apart.

Key Takeaways

Temporal makes distributed workflows reliable. It handles the hard parts of distributed systems so you don’t have to.

Your code becomes durable. Crashes, deployments, and failures don’t lose state. Workflows continue from where they left off.

This isn’t for every use case. Simple request-response APIs don’t need Temporal. But complex workflows with multiple steps, external dependencies, and long durations benefit hugely.

The learning curve exists. Understanding determinism and replay takes time. But the investment pays off in reliability and reduced complexity.

Common use cases include order processing, financial transactions, user onboarding, and infrastructure automation. Anywhere you need guaranteed execution across systems.

Operational complexity is a consideration. Self-hosting requires expertise. Temporal Cloud reduces this burden.

Many large companies trust Temporal for critical workflows. The technology is proven at scale.

If you’re building workflows that must complete correctly even when everything fails, Temporal deserves serious evaluation. It might eliminate months of infrastructure work and countless production incidents.

Tags: Temporal, workflow orchestration, distributed systems, durable execution, microservices orchestration, fault tolerance, business process automation, saga pattern, workflow engine, event-driven architecture, reliable workflows, state management, distributed transactions, workflow reliability, resilient systems