Nextflow: Scientific Workflow Orchestration Built for Reproducibility

Introduction

Bioinformatics has a reproducibility problem. You run an analysis today, get results, publish a paper. Three years later, someone tries to replicate your work. The software versions changed. The dependencies broke. The compute environment is different. The results don’t match.

Nextflow was built to solve this. It’s a workflow orchestration tool designed specifically for scientific computing, especially bioinformatics and computational biology. The core idea is simple: make it trivial to write workflows that run anywhere, reproduce exactly, and scale from your laptop to a supercomputer.

Unlike general-purpose orchestration tools, Nextflow understands the needs of computational scientists. Long-running analyses that take days or weeks. Complex dependencies between software packages. Heterogeneous compute environments. The need to reproduce results years later.

This guide explains what Nextflow is, why it dominates bioinformatics, and whether it fits your needs. You’ll learn the core concepts, see real patterns, and understand how it compares to alternatives.

What is Nextflow?

Nextflow is a workflow orchestration engine and domain-specific language (DSL) for data-intensive computational pipelines. It was created by Paolo Di Tommaso at the Centre for Genomic Regulation in Barcelona and first released in 2013.

The language is based on Groovy, which runs on the Java Virtual Machine. But you don’t need to know Groovy or Java to use it. The DSL is designed to be readable and expressive.

Nextflow handles three critical challenges in scientific computing:

Portability. Write once, run anywhere. The same workflow runs on your laptop, an HPC cluster, AWS, Google Cloud, or Azure without changes.

Reproducibility. Workflows specify exact software versions using containers. The same workflow produces identical results today and five years from now.

Scalability. Start small and scale up. Develop on a laptop with sample data. Run production on a cluster with terabytes of data. Nextflow handles the complexity.

The project is open source and actively developed. Major research institutions, pharmaceutical companies, and biotech startups use it daily.

Why Bioinformatics Adopted Nextflow

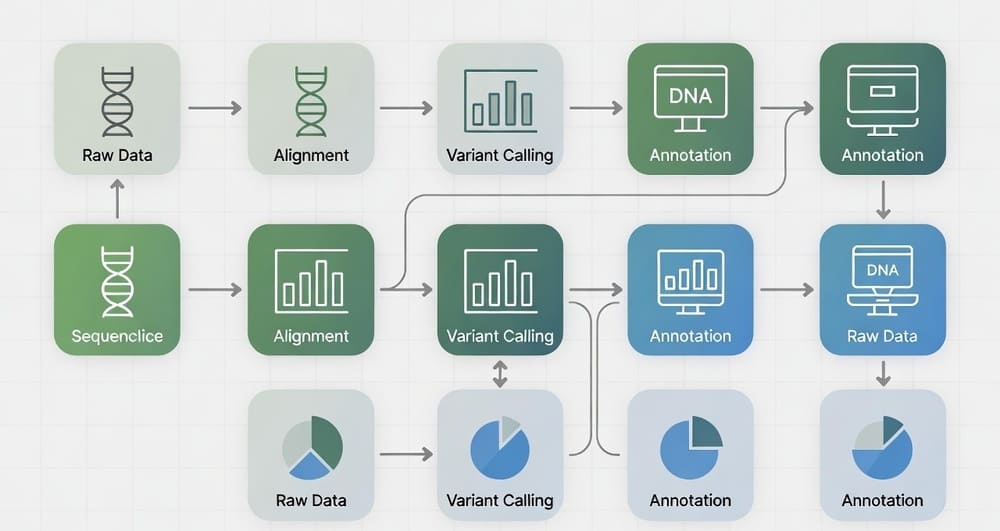

Genomics workflows are complex. A typical whole-genome sequencing pipeline has dozens of steps. Quality control, alignment, variant calling, annotation, filtering. Each step uses specialized tools with specific requirements.

These tools are developed by different groups. They have conflicting dependencies. Some need Python 2.7, others Python 3.9. Some require specific versions of libraries. Managing this by hand is painful.

Nextflow addresses this through containerization. Each process runs in its own container with exact dependencies specified. Docker, Singularity, or Podman. The workflow definition includes container images, so everything is self-contained.

The domain has other challenges Nextflow handles well:

Large datasets. Genomic data is huge. A single whole-genome sample is 100+ GB. Workflows process hundreds or thousands of samples. Nextflow’s dataflow model makes parallel processing natural.

Long-running jobs. Some analyses run for days. If a job fails after 20 hours, you don’t want to restart from scratch. Nextflow has sophisticated resume capabilities. Rerun the workflow with resume enabled, and completed tasks are skipped. Only the failed and not-yet-run tasks execute.

Heterogeneous compute. Research labs have diverse infrastructure. Local workstations, shared HPC clusters, cloud resources. Nextflow abstracts this. The same workflow runs everywhere.

Collaboration and sharing. Scientists share workflows through platforms like nf-core (more on this later). Nextflow makes it easy to package and distribute complete pipelines.

Core Concepts

Understanding Nextflow requires grasping a few key ideas.

Processes

A process is a unit of execution. It defines inputs, outputs, and what to run. Here’s a simple example:

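    process FASTQC {
        container 'biocontainers/fastqc:v0.11.9'

        input:
        path reads

        output:
        path "fastqc_${reads}_logs"

        script:
        """
        # FastQC does not create its output directory, so make it first
        mkdir fastqc_${reads}_logs
        fastqc -o fastqc_${reads}_logs $reads
        """
    }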

This process runs FastQC (a quality control tool) on sequencing reads. The input is a file, the output is a directory. The script section contains the actual command. The container specifies the Docker image to use.

Channels

Channels are how data flows between processes. Think of them as asynchronous queues or streams.

    workflow {
        Channel
            .fromPath('data/*.fastq.gz')
            .set { reads_ch }

        // In DSL2, processes are invoked inside a workflow block
        FASTQC(reads_ch)
    }

This creates a channel from all .fastq.gz files in the data directory. Each file goes through the FASTQC process independently. Nextflow handles parallelization automatically.

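Channels are either value channels or queue channels. A queue channel is an asynchronous stream of items, and each item is handed to a task once. A value channel holds a single value that can be read any number of times, so every task sees it. In DSL2, either kind can feed several processes.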
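The practical difference shows up when a process takes two inputs. The Python sketch below is only an analogy, not Nextflow code: iterators stand in for channels, and the sample and file names are made up. A one-item queue is used up by the first task, while a value is available to every task.

```python
from itertools import repeat

def run_process(samples, reference):
    """Pair each sample with a reference, the way a two-input process would."""
    return list(zip(samples, reference))

samples = ["sampleA", "sampleB", "sampleC"]  # hypothetical sample names

# Queue-like input: an iterator holding one item, exhausted after one task.
queue_like = iter(["genome.fa"])
queue_tasks = run_process(samples, queue_like)

# Value-like input: the same item is offered to every task.
value_like = repeat("genome.fa")
value_tasks = run_process(samples, value_like)

print(len(queue_tasks), "task(s) with a one-item queue")
print(len(value_tasks), "task(s) with a value")
```

This is why shared inputs such as a reference genome are usually passed as a value rather than as a queue channel.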

Workflows

Workflows compose processes into pipelines.

    workflow {
        reads_ch = Channel.fromPath('data/*.fastq.gz')

        FASTQC(reads_ch)
        ALIGN(reads_ch)
        CALL_VARIANTS(ALIGN.out)
    }

This workflow runs quality control, aligns reads, then calls variants. The output of ALIGN feeds into CALL_VARIANTS. Nextflow figures out the execution order based on dependencies.
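To see why the order follows from the dependencies rather than from the order of the lines, here is a small Python sketch. It is not Nextflow code and not how the engine works internally (Nextflow is dataflow-driven and starts a task as soon as its inputs arrive). The `depends_on` mapping is a hand-written assumption that mirrors the workflow above, and `execution_waves` is a hypothetical helper.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map mirroring the workflow above:
# each process maps to the set of processes whose output it consumes.
depends_on = {
    "FASTQC": set(),
    "ALIGN": set(),
    "CALL_VARIANTS": {"ALIGN"},
}

def execution_waves(graph):
    """Group processes into waves; everything in one wave can run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # processes whose inputs are available
        waves.append(ready)
        sorter.done(*ready)
    return waves

waves = execution_waves(depends_on)
print(waves)
```

FASTQC and ALIGN land in the same wave because neither needs the other, while CALL_VARIANTS has to wait for ALIGN.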

Operators

Operators transform channels. They let you filter, map, combine, or split data.

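    Channel
        .fromPath('data/*.fastq.gz')
        // simpleName drops every extension: sample.fastq.gz -> sample
        // (baseName would only drop the last one: sample.fastq)
        .map { file -> [file.simpleName, file] }
        .set { reads_ch }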

This maps each file to a tuple of (sample_name, file). Useful for tracking sample identity through the pipeline.

Common operators include map, filter, groupTuple, join, combine, and splitCsv. They enable complex data transformations without writing loops.
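For readers new to these operators, the Python sketch below mimics what `map` followed by `groupTuple` does to a stream of items. It is an analogy in plain Python, not Nextflow code. The file names, the `<sample>_<lane>` naming convention, and the helper names `to_keyed_tuple` and `group_tuple` are all assumptions made for illustration.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# Hypothetical file names: two lanes for sampleA, one for sampleB.
files = [
    "data/sampleA_L001.fastq.gz",
    "data/sampleB_L001.fastq.gz",
    "data/sampleA_L002.fastq.gz",
]

def to_keyed_tuple(path):
    """Analogue of .map { f -> [key, f] }: key each file by its sample name."""
    name = PurePosixPath(path).name
    sample = name.split("_")[0]  # assumes names look like <sample>_<lane>.fastq.gz
    return (sample, path)

def group_tuple(items):
    """Analogue of .groupTuple(): collect all values that share a key."""
    groups = defaultdict(list)
    for key, value in items:
        groups[key].append(value)
    return [(key, values) for key, values in groups.items()]

keyed = [to_keyed_tuple(f) for f in files]
grouped = group_tuple(keyed)
print(grouped)
```

Each grouped tuple would then become one task input, for example to merge all lanes of a sample before alignment.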

Containers

Every process can specify a container image.

    process SAMTOOLS_SORT {
        container 'biocontainers/samtools:1.15.1'

        input:
        path bam

        output:
        path "${bam.baseName}.sorted.bam"

        script:
        """
        samtools sort $bam > ${bam.baseName}.sorted.bam
        """
    }

Nextflow pulls the image if needed and runs the process inside it. This ensures exact software versions and dependencies.

A Real Example: Variant Calling Pipeline

Here’s a simplified but realistic variant calling workflow:

    #!/usr/bin/env nextflow

    params.reads = 'data/*_{1,2}.fastq.gz'
    params.reference = 'reference/genome.fa'

    process FASTQC {
        container 'biocontainers/fastqc:v0.11.9'
        publishDir 'results/qc'

        input:
        tuple val(sample), path(reads)

        output:
        path "*.html"

        script:
        """
        fastqc ${reads}
        """
    }

    process BWA_MEM {
        container 'biocontainers/bwa:0.7.17'

        input:
        tuple val(sample), path(reads)
        path reference

        output:
        tuple val(sample), path("${sample}.sam")

        script:
        """
        bwa mem ${reference} ${reads[0]} ${reads[1]} > ${sample}.sam
        """
    }

    process SAMTOOLS_SORT {
        container 'biocontainers/samtools:1.15.1'

        input:
        tuple val(sample), path(sam)

        output:
        tuple val(sample), path("${sample}.sorted.bam")

        script:
        """
        samtools sort ${sam} -o ${sample}.sorted.bam
        """
    }

    process BCFTOOLS_CALL {
        container 'biocontainers/bcftools:1.15.1'
        publishDir 'results/variants'

        input:
        tuple val(sample), path(bam)
        path reference

        output:
        path "${sample}.vcf"

        script:
        """
        bcftools mpileup -f ${reference} ${bam} | \
            bcftools call -mv -o ${sample}.vcf
        """
    }

    workflow {
        // Emits one [sample, [read1, read2]] tuple per sample
        reads_ch = Channel.fromFilePairs(params.reads)

        // file() returns a plain value that every task can reuse. A queue
        // channel from Channel.fromPath() holds one item and would be
        // used up by the first sample.
        reference = file(params.reference)

        FASTQC(reads_ch)
        BWA_MEM(reads_ch, reference)
        SAMTOOLS_SORT(BWA_MEM.out)
        BCFTOOLS_CALL(SAMTOOLS_SORT.out, reference)
    }

This pipeline runs quality control, aligns reads to a reference genome, sorts the alignments, and calls variants. Each step uses a different container. The workflow automatically parallelizes across all samples. For brevity it omits reference indexing (bwa index), which bwa mem needs before a real run.

Execution Models

Nextflow supports multiple execution backends. The workflow code stays the same, but where it runs changes based on configuration.

Local Execution

The simplest mode. Everything runs on your local machine.

nextflow run workflow.nf

Processes run as separate tasks. Nextflow manages parallelism based on available CPUs.

HPC Schedulers

Most research clusters use job schedulers. Nextflow submits each process as a job.

Supported schedulers include SLURM, PBS, LSF, SGE, and HTCondor.

Configuration example for SLURM:

    process {
        executor = 'slurm'
        queue = 'short'
        cpus = 4
        memory = '8 GB'
    }

Nextflow handles job submission, monitoring, and cleanup. Failed jobs can be automatically resubmitted.

Cloud Platforms

Nextflow runs on AWS, Google Cloud, and Azure.

AWS Batch is common. Nextflow submits tasks as Batch jobs. Auto-scaling handles compute resources.

Google Cloud Batch provides managed execution on GCP. It replaces the older Google Cloud Life Sciences API (formerly Pipelines API), which Google has deprecated.

Azure Batch works similarly to AWS.

Cloud execution requires more setup but scales effortlessly. Pay only for what you use.

Kubernetes

Nextflow can use Kubernetes as an executor. Each process runs as a Pod.

Useful for organizations standardizing on Kubernetes or using managed Kubernetes services.

Configuration:

    process {
        executor = 'k8s'
    }

    k8s {
        namespace = 'nextflow'
        serviceAccount = 'nextflow'
    }

Resume Capability

This is a killer feature. Scientific workflows are long and expensive. If something fails halfway through, you don’t want to restart everything.

Nextflow caches task results. When you resume, only tasks that failed or had upstream changes rerun.

Run a workflow:

nextflow run workflow.nf

It fails partway through. Fix the issue and resume:

nextflow run workflow.nf -resume

Nextflow checks which tasks completed successfully. Those tasks are skipped. Only necessary work happens.

The cache is keyed on what defines a task: its input files, its script, and its container, among other things. Change an input file or the script, and that task (and downstream tasks) rerun. Otherwise, cached results are used.

This saves enormous amounts of time and money in production.
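The idea behind the cache can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Nextflow's implementation: `task_key` and `ResumeCache` are hypothetical names, and the key here covers only the script text, input names, and container tag, whereas the real engine hashes more (for example, input file metadata).

```python
import hashlib
import json

def task_key(script, inputs, container):
    """Build a cache key from the things that define a task (simplified)."""
    payload = json.dumps(
        {"script": script, "inputs": inputs, "container": container},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode()).hexdigest()

class ResumeCache:
    """Toy cache: run a task only if its key has not been seen before."""

    def __init__(self):
        self.results = {}
        self.executed = []  # names of tasks that actually ran

    def run(self, name, script, inputs, container, action):
        key = task_key(script, inputs, container)
        if key not in self.results:
            self.results[key] = action()
            self.executed.append(name)
        return self.results[key]

cache = ResumeCache()
# First run: both tasks execute.
cache.run("ALIGN", "bwa mem ...", ["a_1.fq", "a_2.fq"], "bwa:0.7.17", lambda: "a.sam")
cache.run("SORT", "samtools sort ...", ["a.sam"], "samtools:1.15.1", lambda: "a.bam")
# "Resume" after editing the SORT script: ALIGN is a cache hit, SORT reruns.
cache.run("ALIGN", "bwa mem ...", ["a_1.fq", "a_2.fq"], "bwa:0.7.17", lambda: "a.sam")
cache.run("SORT", "samtools sort -@ 4 ...", ["a.sam"], "samtools:1.15.1", lambda: "a.bam")
print(cache.executed)
```

The second ALIGN call does no work because nothing that defines it changed, which is the behavior `-resume` relies on.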

nf-core: Community Pipelines

nf-core is a community effort to create curated, production-ready Nextflow pipelines for common bioinformatics analyses.

Started in 2018, it now has over 80 pipelines covering:

- RNA sequencing analysis

- Whole-genome sequencing

- ChIP-seq and ATAC-seq

- Metagenomics

- Single-cell RNA-seq

- Proteomics

- And many more

These pipelines follow best practices. They’re well-tested, documented, and maintained. Using an nf-core pipeline is faster than building your own.

Install and run an nf-core pipeline:

    nextflow run nf-core/rnaseq -profile docker \
        --input samplesheet.csv \
        --outdir results \
        --genome GRCh38

The pipeline downloads automatically. It includes all necessary containers. You just provide input data and parameters.

nf-core pipelines are production-grade. Pharmaceutical companies use them for drug discovery. Research institutions use them for patient diagnostics. They’re thoroughly validated.

The nf-core project also provides tools for pipeline development, testing, and release management.

Configuration and Profiles

Nextflow uses configuration files to separate workflow logic from execution details.

A nextflow.config file might look like:

    params {
        reads = 'data/*_{1,2}.fastq.gz'
        outdir = 'results'
    }

    process {
        cpus = 2
        memory = '4 GB'
    }

    profiles {
        standard {
            process.executor = 'local'
        }

        cluster {
            process.executor = 'slurm'
            process.queue = 'short'
        }

        aws {
            process.executor = 'awsbatch'
            process.queue = 'my-queue'
            aws.region = 'us-east-1'
        }

        docker {
            docker.enabled = true
        }

        singularity {
            singularity.enabled = true
        }
    }

Run with different profiles:

nextflow run workflow.nf -profile docker

nextflow run workflow.nf -profile cluster,singularity

This separation makes workflows portable. The same workflow runs locally during development, on a cluster for testing, and in the cloud for production.
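One way to picture what `-profile cluster,singularity` does is as layering settings on top of the defaults. The Python sketch below is a simplified model, not Nextflow's actual configuration resolver: `deep_merge` and `resolve` are hypothetical helpers, the dictionaries are a hand-copied subset of the config above, and applying profiles in the order given is an assumption.

```python
def deep_merge(base, override):
    """Return a copy of `base` with `override` applied on top, key by key."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged

# Defaults that apply regardless of profile.
base = {"process": {"cpus": 2, "memory": "4 GB"}}

# A subset of the profiles from the config above.
profiles = {
    "cluster": {"process": {"executor": "slurm", "queue": "short"}},
    "singularity": {"singularity": {"enabled": True}},
}

def resolve(profile_names):
    """Apply the named profiles, in order, on top of the base settings."""
    config = base
    for name in profile_names.split(","):
        config = deep_merge(config, profiles[name])
    return config

effective = resolve("cluster,singularity")
print(effective)
```

The workflow script never appears in this picture, which is the point: only the settings change between environments.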

Resource Management

Scientific workflows have diverse resource needs. Some processes need lots of memory. Others need many CPUs. Some need GPUs.

Nextflow lets you specify resources per process:

    process ALIGN {
        cpus 8
        memory '32 GB'
        time '24h'

        script:
        """
        bwa mem -t ${task.cpus} ...
        """
    }

    process ANNOTATE {
        cpus 1
        memory '4 GB'
        time '1h'

        script:
        """
        annotate.py ...
        """
    }

The ${task.cpus} variable passes the CPU count to the tool. This makes processes adaptive. Run with more resources, and tools use them.

You can also use dynamic directives:

    process SORT {
        memory { 4.GB * task.attempt }
        errorStrategy 'retry'
        maxRetries 3

        script:
        """
        sort -S ${task.memory.toGiga()}G data.txt
        """
    }

If the task fails, Nextflow retries it, and each attempt requests more memory than the last. Note that 'retry' applies to any failure, not only out-of-memory errors.
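The arithmetic behind the SORT example is easy to check by hand. The Python sketch below reproduces it, with `memory_for_attempt` and `memory_schedule` as hypothetical helper names. It assumes the first run counts as attempt 1 and that `maxRetries 3` allows three further attempts.

```python
def memory_for_attempt(attempt, base_gb=4):
    """Mirror of `memory { 4.GB * task.attempt }`; attempts start at 1."""
    return base_gb * attempt

def memory_schedule(max_retries=3, base_gb=4):
    """Memory requested on the first try plus each allowed retry, in GB."""
    total_attempts = 1 + max_retries
    return [memory_for_attempt(a, base_gb) for a in range(1, total_attempts + 1)]

schedule = memory_schedule()
print(schedule)
```

With these settings the task asks for 4 GB first and at most 16 GB on its final attempt, so the worst case is bounded and known in advance.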

Error Handling and Retries

Scientific workflows encounter errors. Network timeouts, temporary file system issues, intermittent cluster problems.

Nextflow provides error strategies:

    process DOWNLOAD {
        errorStrategy 'retry'
        maxRetries 3

        script:
        """
        wget https://example.com/data.tar.gz
        """
    }

If the download fails, Nextflow retries up to three times.

Other strategies include:

- ignore: Continue the workflow even if this task fails

- terminate: Stop the entire workflow

- finish: Complete running tasks but don’t start new ones

You can also use conditional error handling:

    process ALIGN {
        errorStrategy { task.exitStatus == 130 ? 'retry' : 'terminate' }

        script:
        """
        bwa mem ...
        """
    }

This retries only for specific exit codes.
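The decision logic can be traced with a short Python simulation. It is a sketch of the idea, not of Nextflow's scheduler: `error_strategy` copies the closure above, while `run_with_strategy`, the simulated exit codes, and the retry budget of 3 are assumptions for illustration.

```python
def error_strategy(exit_status):
    """Python analogue of the closure above: retry on 130, otherwise stop."""
    return "retry" if exit_status == 130 else "terminate"

def run_with_strategy(exit_codes, strategy, max_retries=3):
    """Feed simulated exit codes through a strategy and record each decision."""
    decisions = []
    retries = 0
    for code in exit_codes:
        if code == 0:
            decisions.append("ok")
            break
        action = strategy(code)
        if action == "retry" and retries >= max_retries:
            action = "terminate"  # retry budget exhausted
        decisions.append(action)
        if action != "retry":
            break
        retries += 1
    return decisions

print(run_with_strategy([130, 0], error_strategy))  # interrupted once, then succeeds
print(run_with_strategy([1], error_strategy))       # any other failure stops the run
```

Keeping the strategy as a small function of the exit status makes it easy to reason about which failures are worth a second attempt.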

Monitoring and Reporting

Nextflow provides several monitoring tools.

The console output shows progress in real time. Tasks appear as they start and complete. Errors are highlighted.

The -with-dag option generates a workflow diagram:

nextflow run workflow.nf -with-dag flowchart.png

This creates a visual representation of the workflow structure. Rendering to PNG requires Graphviz to be installed.

The -with-report option generates an HTML report:

nextflow run workflow.nf -with-report report.html

The report includes execution time, resource usage, and task details.

The -with-timeline option creates a timeline visualization:

nextflow run workflow.nf -with-timeline timeline.html

This shows when each task ran and how long it took. Useful for identifying bottlenecks.

Nextflow Tower, now called Seqera Platform, is a monitoring and management platform. It provides a web interface for running workflows, viewing logs, and analyzing execution. There’s a free tier for individual use and a commercial version for teams.

Common Use Cases

Genomics Pipelines

The most common use case. Whole-genome sequencing, exome sequencing, RNA-seq, ChIP-seq, ATAC-seq.

Workflows often follow similar patterns:

- Quality control of raw reads

- Alignment to reference genome

- Post-processing (duplicate marking, base recalibration)

- Variant calling or quantification

- Annotation and filtering

These pipelines process hundreds of samples in parallel. Each sample follows the same steps independently.

Metagenomics

Analyzing microbial communities from environmental samples. Metagenomics workflows are complex with many steps:

- Read trimming and quality filtering

- Taxonomic classification

- Assembly

- Gene prediction

- Functional annotation

Metagenomic datasets are enormous. A single soil sample can generate terabytes of data. Nextflow’s parallel processing is essential.

Single-Cell Analysis

Single-cell RNA sequencing analyzes thousands of individual cells. The computational challenge is processing millions of reads per sample.

Workflows include:

- Demultiplexing

- Cell barcode and UMI extraction

- Alignment

- Counting

- Quality control

- Clustering and cell type identification

Tools like Cell Ranger and Seurat integrate with Nextflow pipelines.

Proteomics

Mass spectrometry proteomics generates complex datasets. Workflows search experimental spectra against protein databases.

Steps include:

- Raw data conversion

- Database search

- Post-search validation

- Quantification

- Statistical analysis

These analyses are computationally intensive. Nextflow handles parallelization across multiple search engines and parameter combinations.

Image Analysis

Microscopy and medical imaging workflows use Nextflow too. Processing thousands of images with tools like ImageJ, CellProfiler, or QuPath.

Parallel processing is natural. Each image or region of interest is an independent task.

Machine Learning Pipelines

Training models on biological data. Feature extraction, model training, validation, and prediction.

Hyperparameter tuning fits well. Run training with different parameters in parallel. Nextflow orchestrates and collects results.
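The fan-out and collect pattern described here looks roughly like the Python sketch below. It is an analogy, not Nextflow code: a thread pool stands in for the executor, `train` is a stand-in function whose score is made up, and the parameter grid is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

def train(params):
    """Stand-in for a training task; returns (params, score). The score is made up."""
    learning_rate, depth = params
    score = round(1.0 - abs(learning_rate - 0.1) - abs(depth - 4) * 0.05, 3)
    return params, score

# Every combination becomes one independent task, like one item in a channel.
grid = list(product([0.01, 0.1, 0.5], [2, 4, 8]))

with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(train, grid))  # run tasks in parallel, keep input order

# Collect step: pick the best-scoring combination once all tasks finish.
best_params, best_score = max(results, key=lambda r: r[1])
print(best_params, best_score)
```

In a pipeline, each combination would be a separate task with its own container and resources, and the collect step would be a final process that gathers all scores.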

Comparison with Alternatives

Nextflow vs Snakemake

Snakemake is Nextflow’s main competitor in bioinformatics.

Language: Snakemake uses Python-based rules. Nextflow uses Groovy-based DSL. This is mostly personal preference.

Execution model: Snakemake is pull-based (works backward from outputs). Nextflow is push-based (follows data flow). Snakemake can be more intuitive for some users.

Container support: Both support containers well. Nextflow has slightly better integration.

Community: Both have strong communities. Snakemake has a longer history. Nextflow has nf-core, which is very active.

Scalability: Both scale well. Nextflow has better cloud support.

Choose Snakemake if you prefer Python and want tighter integration with Python tools. Choose Nextflow for better cloud execution and the nf-core ecosystem.

Nextflow vs Cromwell

Cromwell is developed by the Broad Institute for genomics workflows. It uses WDL (Workflow Description Language).

Language: WDL is declarative. Nextflow is more imperative. WDL can be simpler for straightforward workflows.

Ecosystem: Cromwell integrates with Terra (Broad’s cloud platform). Nextflow is more general-purpose.

Adoption: Cromwell is popular in large genomics centers. Nextflow has broader adoption across different domains.

Cloud support: Both support cloud execution. Cromwell is optimized for Google Cloud.

Use Cromwell if you’re in the Broad ecosystem or primarily using Terra. Use Nextflow for more flexibility.

Nextflow vs Common Workflow Language (CWL)

CWL is a standard for describing workflows. It’s not an execution engine itself.

Philosophy: CWL focuses on interoperability. Nextflow focuses on usability.

Adoption: CWL has support from major organizations. Nextflow has more active daily users.

Ease of use: Nextflow is generally easier to learn and use. CWL is more verbose.

CWL makes sense if you need to share workflows across multiple execution engines. Nextflow is better if you want to get work done quickly.

Nextflow vs General-Purpose Tools (Airflow, Prefect)

Tools like Airflow weren’t designed for scientific computing.

Reproducibility: Nextflow emphasizes exact reproducibility. General tools don’t prioritize this.

Resume capability: Nextflow’s resume is sophisticated. General tools have basic retry logic.

Container integration: Nextflow makes containers first-class. General tools added container support later.

Domain features: Nextflow understands scientific computing needs. General tools are broader but shallower.

Use Nextflow for scientific workflows. Use general tools for business data pipelines.

Challenges and Limitations

Nextflow isn’t perfect. Several issues come up regularly.

Learning curve. The DSL is new to most users. Understanding channels and dataflow takes time. The documentation is good but dense.

Debugging complexity. When something goes wrong, figuring out why can be hard. Processes run in containers in different environments. Error messages might not be clear.

Resource estimation. Determining memory and CPU needs for each process requires trial and error. Under-allocate and jobs fail. Over-allocate and you waste resources.

Container overhead. Every process runs in a container. This adds startup time and complexity. For very short tasks, overhead becomes significant.

Groovy quirks. The language has edge cases. String interpolation, closures, and variable scoping can surprise new users.

Configuration complexity. Making workflows portable requires careful configuration. Managing profiles for different environments takes effort.

Dependency on Java. Nextflow requires a Java runtime. This is usually fine but adds a dependency.

Best Practices

Here’s what works well in production.

Use nf-core pipelines when possible. Don’t reinvent the wheel. If an nf-core pipeline exists for your analysis, start there.

Specify exact container versions. Don’t use latest tags. Pin exact versions for reproducibility.

Make workflows modular. Break complex workflows into subworkflows. Easier to test and reuse.

Use configuration profiles. Separate execution details from workflow logic. Makes portability easier.

Test with small datasets. Develop and debug with small sample datasets. Run full production data only when the workflow is solid.

Document parameters. Explain what each parameter does. Include example values.

Version control everything. Workflows, configuration, and documentation go in Git.

Monitor resource usage. Check actual resource consumption. Adjust requests based on real data.

Implement checkpoints. For very long workflows, create intermediate results that can be reused.

Handle failures gracefully. Use appropriate error strategies. Don’t let temporary failures crash entire workflows.

Getting Started

Installing Nextflow is simple:

curl -s https://get.nextflow.io | bash

Or use conda:

conda install -c bioconda nextflow

Or use Homebrew on macOS:

brew install nextflow

Run the hello world example:

nextflow run hello

Try an nf-core pipeline:

nextflow run nf-core/rnaseq -profile test,docker --outdir results

The test profile uses small datasets. Good for learning without waiting hours.

Build your first workflow:

    #!/usr/bin/env nextflow

    process SAY_HELLO {
        input:
        val name

        output:
        stdout

        script:
        """
        echo "Hello, ${name}!"
        """
    }

    workflow {
        names = Channel.of('World', 'Nextflow', 'Science')

        SAY_HELLO(names).view()
    }

Save as hello.nf and run:

nextflow run hello.nf

From here, explore the documentation, join the community on Slack, and start building real workflows.

The Future of Nextflow

The project continues to evolve actively.

DSL2 is now the standard. It provides better modularity, cleaner syntax, and improved workflow composition. DSL1 is no longer supported in current releases.

Nextflow Tower (now Seqera Platform) is becoming the standard monitoring platform. More features for workflow management and execution are being added.

Performance improvements continue. Better caching, faster startup, more efficient resource usage.

Cloud integration keeps improving. Better support for spot instances, auto-scaling, and cost optimization.

Community growth is strong. More pipelines, more users, more contributions. The nf-core community is particularly active.

Nextflow is becoming the de facto standard for computational biology workflows. It’s used in clinical diagnostics, drug discovery, and basic research globally.

Key Takeaways

Nextflow is purpose-built for scientific workflow orchestration. It solves real problems in computational biology.

Reproducibility is built in through container integration and exact versioning. Run the same workflow years later and get identical results.

Portability lets you develop locally and deploy anywhere. No code changes needed.

The resume capability saves enormous time and money. Failed workflows restart from where they stopped.

nf-core provides production-ready pipelines for common analyses. Don’t build from scratch if you don’t have to.

The learning curve is real but worthwhile. Invest time upfront, benefit for years.

Nextflow works best for batch-oriented, data-intensive scientific computing. If that describes your work, it’s worth serious consideration.

The community is welcoming and helpful. Resources are available. You’re not alone in learning.

Start small. Run example workflows. Adapt nf-core pipelines. Build your own when you understand the patterns.

Nextflow won’t solve every problem. But for scientific workflow orchestration, especially in bioinformatics, it’s the best tool available today.
