Flyte: A Deep Dive into Lyft’s Kubernetes-Native Workflow Orchestrator

Introduction

Flyte doesn’t get the attention Airflow does. But teams at Lyft, Spotify, and Fidelity rely on it to run thousands of workflows daily.

Lyft built Flyte to solve a specific problem. Their data scientists and ML engineers were struggling to move from notebooks to production. Code that worked locally broke in production. Experiments weren’t reproducible. Versioning was a mess.

Flyte fixed this. It makes workflows reproducible by default. Type safety catches errors before runtime. The same code runs locally and in production without changes.

This guide explains what Flyte is, how it works, and whether it fits your needs. You’ll learn the core concepts, see practical examples, and understand the trade-offs.

What Makes Flyte Different

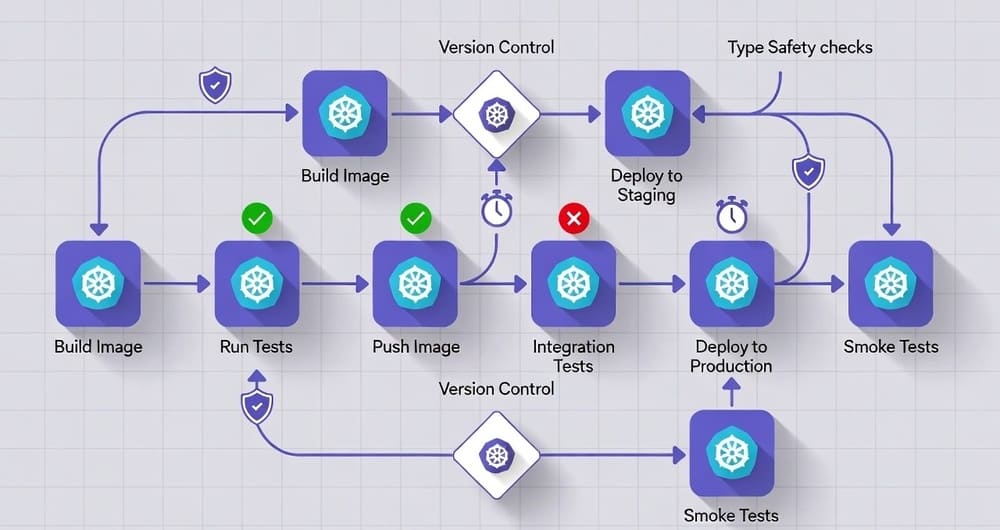

Most orchestrators focus on scheduling tasks. Flyte focuses on reproducibility and type safety.

Reproducibility is built into the core. Every workflow execution captures its complete context: inputs, outputs, code version, container image, and resource configuration. You can rerun a workflow from six months ago with exactly the same code, image, and inputs, and as long as the tasks themselves are deterministic you get the same results.

Type safety prevents runtime errors. Flyte uses Python type hints to validate data flowing between tasks. Pass the wrong type and Flyte catches it before execution starts. This seems minor until you’ve debugged a workflow that failed after running for three hours.
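To make the idea concrete, here is a minimal plain-Python sketch of checking a chain of tasks from their type hints before anything runs. It is not how Flyte implements its type engine; `check_chain` and the three toy tasks are hypothetical names used only for illustration.

```python
from typing import Callable, List, get_type_hints


def load_count(path: str) -> int:
    """Toy task: pretend to count the records in a file."""
    return 3


def describe(count: int) -> str:
    """Toy task: turn a count into a message."""
    return f"{count} records"


def shout(text: str) -> str:
    """Toy task: upper-case a string."""
    return text.upper()


def check_chain(tasks: List[Callable]) -> None:
    """Raise TypeError if a task's return type does not match the input
    type of the task that follows it. No task is executed here."""
    for upstream, downstream in zip(tasks, tasks[1:]):
        produced = get_type_hints(upstream)["return"]
        hints = get_type_hints(downstream)
        accepted = [hint for name, hint in hints.items() if name != "return"]
        if accepted != [produced]:
            raise TypeError(
                f"{upstream.__name__} returns {produced.__name__}, but "
                f"{downstream.__name__} expects "
                f"{[hint.__name__ for hint in accepted]}"
            )


check_chain([load_count, describe])  # int feeds an int parameter: no error

try:
    check_chain([load_count, shout])  # int feeds a str parameter
except TypeError as err:
    mismatch = str(err)
    print(mismatch)
```

The mismatch surfaces from the signatures alone, which is the property that saves the three-hour run described above.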

Kubernetes-native architecture means Flyte scales naturally. Each task runs in its own container with specified resources. No shared state between tasks. No resource conflicts.

Multi-tenancy is first-class. Projects and domains separate workflows. Teams can share infrastructure without stepping on each other.

Core Concepts

Understanding Flyte requires grasping a few key concepts.

Tasks

Tasks are the basic unit of work. A Python function decorated with @task becomes a Flyte task.

    from flytekit import task

    @task
    def process_data(input_path: str) -> int:
        # Your processing logic here (read_file is a placeholder helper)
        records = read_file(input_path)
        return len(records)

The type hints matter. Flyte uses them to validate inputs and outputs. The function signature becomes a contract.

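Tasks run in containers. By default a task runs in an image you provide, or in a stock flytekit image with your code shipped in at registration time. If you declare your dependencies with ImageSpec, flytekit can build the image for you.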

Workflows

Workflows connect tasks together. They define the execution graph.

    from flytekit import workflow

    @workflow
    def data_pipeline(raw_data: str) -> int:
        cleaned = clean_data(input_path=raw_data)
        validated = validate_data(input_path=cleaned)
        count = process_data(input_path=validated)
        return count

Workflows are also type-safe. The output type of one task must match the input type of the next.

Launch Plans

Launch plans are how you trigger workflows. They bind default inputs and set schedules.

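    from flytekit import LaunchPlan, CronSchedule

    daily_pipeline = LaunchPlan.get_or_create(
        data_pipeline,
        name="daily_data_pipeline",
        # `schedule` takes a standard five-field cron string (daily at midnight);
        # the older `cron_expression` argument expects the six-field AWS format
        schedule=CronSchedule(schedule="0 0 * * *"),
        default_inputs={"raw_data": "s3://bucket/data/"},
    )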

Launch plans separate workflow logic from execution configuration. Same workflow, different schedules or inputs.

Projects and Domains

Projects organize related workflows. Domains separate environments.

A typical setup has one project per team or product area. By default, each project has three domains: development, staging, and production.

This structure enforces workflow isolation. Development experiments don’t interfere with production runs.
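A small plain-Python sketch shows why this isolation holds: when the project and domain are part of a workflow's identity, two registrations with the same name never collide. `WorkflowId` and the `registry` dict are hypothetical stand-ins, not flytekit classes, and the project name and versions are made up.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class WorkflowId:
    """Hypothetical stand-in for the identity of a registered workflow."""
    project: str
    domain: str
    name: str
    version: str


# Pretend control-plane registry, keyed by the full identity.
registry: Dict[WorkflowId, str] = {}

# The same workflow name registered in two domains of one project.
dev = WorkflowId("recommendations", "development", "data_pipeline", "abc123")
prod = WorkflowId("recommendations", "production", "data_pipeline", "v1.4.0")

registry[dev] = "experimental build"
registry[prod] = "released build"

# The keys differ, so the development registration cannot overwrite production.
print(registry[prod])
```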

How Flyte Works Under the Hood

Flyte has three main components.

FlyteAdmin is the control plane. It handles API requests, stores metadata, and manages workflow registrations. When you trigger a workflow, you’re talking to FlyteAdmin.

FlytePropeller is the execution engine. It runs on Kubernetes and watches for new workflow executions. When one appears, FlytePropeller creates the necessary Kubernetes resources and monitors progress.

FlyteConsole is the web UI. It shows workflow executions, task details, logs, and outputs. Teams use it to monitor and debug workflows.

The data plane is separate. Task outputs don’t flow through the control plane. Instead, Flyte uses blob storage (S3, GCS, or similar). Tasks write outputs to storage and pass references between tasks.

This architecture scales well. The control plane handles metadata. The data plane handles actual data. They scale independently.
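The reference-passing idea can be sketched in plain Python. Here a temporary directory stands in for the blob store, and `write_output`, `read_input`, and the two toy tasks are hypothetical names; the real serialization and storage layout are handled by Flyte and are not shown.

```python
import json
import tempfile
from pathlib import Path
from typing import List

# A temporary directory stands in for the blob store (S3, GCS, ...).
BLOB_STORE = Path(tempfile.mkdtemp())


def write_output(name: str, payload: List[int]) -> str:
    """Persist a task output and return a reference to it."""
    target = BLOB_STORE / f"{name}.json"
    target.write_text(json.dumps(payload))
    return str(target)


def read_input(ref: str) -> List[int]:
    """Resolve a reference back into data inside the consuming task."""
    return json.loads(Path(ref).read_text())


def produce_numbers() -> str:
    """Upstream task: writes its output to storage, returns the reference."""
    return write_output("numbers", list(range(1000)))


def sum_numbers(ref: str) -> int:
    """Downstream task: receives only the reference and loads the data."""
    return sum(read_input(ref))


ref = produce_numbers()   # only this short string travels between tasks
total = sum_numbers(ref)
print(ref, total)
```

Only the short reference string would need to pass through a control plane; the thousand numbers stay in storage.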

Working with Flyte: Practical Examples

Let’s look at real workflows beyond basic examples.

Data Processing Pipeline

A common pattern is cleaning and transforming data before analysis.

    import pandas as pd
    from flytekit import task, workflow

    @task
    def load_raw_data(s3_path: str) -> pd.DataFrame:
        return pd.read_csv(s3_path)

    @task
    def remove_duplicates(df: pd.DataFrame) -> pd.DataFrame:
        return df.drop_duplicates()

    @task
    def filter_invalid_rows(df: pd.DataFrame) -> pd.DataFrame:
        return df[df['value'] > 0]

    @task
    def compute_statistics(df: pd.DataFrame) -> dict:
        return {
            'mean': df['value'].mean(),
            'median': df['value'].median(),
            'count': len(df),
        }

    @workflow
    def data_quality_pipeline(input_path: str) -> dict:
        raw = load_raw_data(s3_path=input_path)
        deduped = remove_duplicates(df=raw)
        clean = filter_invalid_rows(df=deduped)
        stats = compute_statistics(df=clean)
        return stats

Each task is self-contained. Flyte passes DataFrames between tasks by serializing them to blob storage (Parquet by default) and handing the next task a reference.

Machine Learning Training Workflow

ML workflows benefit from Flyte’s reproducibility guarantees.

    from dataclasses import dataclass
    from typing import Tuple

    import pandas as pd
    from flytekit import task, workflow
    from sklearn.base import BaseEstimator  # assumes a scikit-learn style model

    @dataclass
    class ModelMetrics:
        accuracy: float
        precision: float
        recall: float

    # Flyte needs a type hint on every task input and output, so the split
    # is returned as a typed tuple rather than a bare `tuple`.
    @task
    def prepare_features(
        dataset_path: str,
    ) -> Tuple[pd.DataFrame, pd.DataFrame, pd.DataFrame, pd.DataFrame]:
        # Load and split data (load_and_split is a placeholder helper)
        X_train, X_test, y_train, y_test = load_and_split(dataset_path)
        return X_train, X_test, y_train, y_test

    @task(cache=True, cache_version="1.0")
    def train_model(
        X_train: pd.DataFrame, y_train: pd.DataFrame, learning_rate: float
    ) -> BaseEstimator:
        model = build_model(learning_rate=learning_rate)
        model.fit(X_train, y_train)
        return model

    @task
    def evaluate_model(
        model: BaseEstimator, X_test: pd.DataFrame, y_test: pd.DataFrame
    ) -> ModelMetrics:
        predictions = model.predict(X_test)
        return calculate_metrics(y_test, predictions)

    @workflow
    def ml_training_pipeline(dataset: str, learning_rate: float = 0.001) -> ModelMetrics:
        X_train, X_test, y_train, y_test = prepare_features(dataset_path=dataset)
        model = train_model(X_train=X_train, y_train=y_train, learning_rate=learning_rate)
        metrics = evaluate_model(model=model, X_test=X_test, y_test=y_test)
        return metrics

The cache=True parameter on the training task is powerful. If you rerun with the same inputs, Flyte skips execution and returns cached results. This saves time and compute during experimentation.
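The mechanism behind a cache hit can be sketched in plain Python: derive a key from the task name, the cache version, and the inputs, and skip execution when the key has been seen. This is a conceptual sketch only. Flyte's real cache key covers more than these three ingredients, and `cache_key`, `run_cached`, and the toy `train` function are hypothetical names.

```python
import hashlib
import json
from typing import Any, Callable, Dict

_cache: Dict[str, Any] = {}
calls = {"count": 0}


def cache_key(task_name: str, cache_version: str, inputs: Dict[str, Any]) -> str:
    """Hash the task name, cache version, and inputs into one key."""
    payload = json.dumps(
        {"task": task_name, "version": cache_version, "inputs": inputs},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode()).hexdigest()


def run_cached(fn: Callable[..., Any], cache_version: str, **inputs: Any) -> Any:
    """Run fn only if this (task, version, inputs) combination is new."""
    key = cache_key(fn.__name__, cache_version, inputs)
    if key not in _cache:
        _cache[key] = fn(**inputs)
    return _cache[key]


def train(learning_rate: float) -> float:
    """Stand-in for an expensive training task; counts its executions."""
    calls["count"] += 1
    return round(1.0 - learning_rate, 3)


run_cached(train, "1.0", learning_rate=0.001)  # executes
run_cached(train, "1.0", learning_rate=0.001)  # same key: skipped
run_cached(train, "1.1", learning_rate=0.001)  # new version: executes again
print(calls["count"])
```

Bumping `cache_version` is how you invalidate old results after changing a task's logic without changing its inputs.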

Dynamic Workflows

Sometimes you don’t know the workflow structure until runtime. Flyte supports dynamic workflows.

    from typing import List

    from flytekit import task, dynamic, workflow

    @task
    def process_file(file_path: str) -> int:
        # Process a single file
        return count_records(file_path)

    @dynamic
    def process_all_files(file_list: List[str]) -> List[int]:
        results = []
        for file_path in file_list:
            result = process_file(file_path=file_path)
            results.append(result)
        return results

    @task
    def list_files(bucket: str) -> List[str]:
        # List all files in bucket
        return get_file_list(bucket)

    @workflow
    def batch_processing_pipeline(bucket_name: str) -> List[int]:
        files = list_files(bucket=bucket_name)
        counts = process_all_files(file_list=files)
        return counts

The @dynamic decorator creates a workflow dynamically at runtime. The structure depends on how many files exist.

Advanced Features

Resource Allocation

Flyte lets you specify CPU, memory, and GPU requirements per task.

    import pandas as pd
    from flytekit import Resources, task

    @task(requests=Resources(cpu="2", mem="4Gi"), limits=Resources(cpu="4", mem="8Gi"))
    def heavy_computation(data: pd.DataFrame) -> pd.DataFrame:
        # Resource-intensive processing
        return transform_data(data)

This prevents resource contention. Small tasks get small containers. Big tasks get what they need.

Retry and Timeout Configuration

Production workflows need robust error handling.

    from datetime import timedelta

    from flytekit import task

    @task(
        retries=3,
        timeout=timedelta(hours=2),
        interruptible=True,
    )
    def flaky_external_api_call(endpoint: str) -> dict:
        response = call_api(endpoint)
        return response

The retries parameter handles transient failures. timeout prevents tasks from running forever. interruptible=True allows using spot instances for cost savings.
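What a retry budget means can be shown with a plain-Python loop. This is a sketch of the semantics, assuming `retries` counts re-attempts after the first failure; it is not Flyte's scheduler logic, and `run_with_retries` and `flaky_call` are hypothetical names.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(fn: Callable[[], T], retries: int) -> T:
    """Call fn, re-attempting up to `retries` times after a failure."""
    for attempt in range(retries + 1):
        try:
            return fn()
        except ConnectionError:
            if attempt == retries:
                raise  # retry budget exhausted: surface the failure
    raise AssertionError("unreachable for retries >= 0")


attempts = {"count": 0}


def flaky_call() -> str:
    """Hypothetical API call that fails twice, then succeeds."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


result = run_with_retries(flaky_call, retries=3)
print(result, attempts["count"])
```

A transient failure is absorbed by the budget, while a persistent one still fails the task once the budget runs out.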

Conditional Execution

Workflows can branch based on task outputs.

    import pandas as pd
    from flytekit import task, workflow, conditional

    @task
    def check_data_quality(df: pd.DataFrame) -> bool:
        # bool() turns the NumPy result into a plain Python bool
        return bool(df.isnull().sum().sum() == 0)

    @task
    def process_clean_data(df: pd.DataFrame) -> pd.DataFrame:
        return standard_processing(df)

    @task
    def process_dirty_data(df: pd.DataFrame) -> pd.DataFrame:
        return robust_processing(df)

    @workflow
    def adaptive_pipeline(input_path: str) -> pd.DataFrame:
        # load_data is assumed to be a task defined elsewhere
        data = load_data(path=input_path)
        is_clean = check_data_quality(df=data)
        return (
            conditional("quality_check")
            .if_(is_clean.is_true())
            .then(process_clean_data(df=data))
            .else_()
            .then(process_dirty_data(df=data))
        )

This pattern adapts to data conditions at runtime.

Custom Types

Flyte supports custom data types beyond primitives and DataFrames.

    from dataclasses import dataclass
    from typing import List

    import pandas as pd
    from dataclasses_json import dataclass_json
    from flytekit import task, workflow

    @dataclass_json
    @dataclass
    class CustomerSegment:
        segment_id: str
        customer_count: int
        avg_value: float
        features: List[str]

    @task
    def identify_segments(data: pd.DataFrame) -> List[CustomerSegment]:
        segments = run_clustering(data)
        return [CustomerSegment(...) for cluster in segments]

    @task
    def score_segments(segments: List[CustomerSegment]) -> pd.DataFrame:
        return create_scoring_model(segments)

    @workflow
    def segmentation_pipeline(input_data: str) -> pd.DataFrame:
        # load_data is assumed to be a task defined elsewhere
        data = load_data(path=input_data)
        segments = identify_segments(data=data)
        scores = score_segments(segments=segments)
        return scores

Custom types make workflows more readable. The data structures are explicit.

Local Development and Testing

One of Flyte’s strengths is local execution. Your workflow code runs locally without Kubernetes.

    if __name__ == "__main__":
        result = data_pipeline(raw_data="local/path/to/data.csv")
        print(f"Processed {result} records")

This is normal Python. No mocking required. You can debug with breakpoints and print statements.

For unit testing, tasks are just functions.

    def test_remove_duplicates():
        test_df = pd.DataFrame({'id': [1, 1, 2], 'value': [10, 10, 20]})
        result = remove_duplicates(df=test_df)
        assert len(result) == 2

For integration tests, workflows can be called like normal functions too.

    def test_pipeline():
        result = data_quality_pipeline(input_path="test_data.csv")
        assert result['count'] > 0
        assert result['mean'] > 0

This development experience reduces friction. You’re writing Python, not configuration files.

Deploying Flyte Workflows

Getting workflows into production requires a few steps.

Register your workflows with FlyteAdmin. This uploads metadata about tasks and workflows.

pyflyte register workflows.py

Make sure your tasks have a container image. It packages your code, dependencies, and Python environment. You can build it yourself or describe it with ImageSpec and let flytekit build it.

Set up a schedule through launch plans or the UI.

Monitor executions through FlyteConsole or programmatically through the SDK.

The deployment model is declarative. You register what the workflow is. Flyte figures out how to run it.

Integration with the Broader Ecosystem

Flyte doesn’t exist in isolation. It integrates with common data and ML tools.

Spark integration lets you run Spark jobs as Flyte tasks. The cluster management is handled automatically.

AWS plugins provide tasks for SageMaker, Athena, and Batch. You can trigger SageMaker training jobs directly from workflows.

Papermill integration executes Jupyter notebooks as tasks. Data scientists can keep using notebooks while getting reproducibility.

Great Expectations integration validates data quality within workflows.

MLflow and Weights & Biases integrations track experiments and models.

These integrations reduce custom code. Use proven tools through Flyte’s interface.

When Flyte Makes Sense

Flyte fits specific scenarios well.

You’re running on Kubernetes. Flyte is built for Kubernetes. If you’re not on K8s and don’t plan to be, other tools may fit better.

Reproducibility matters. Research teams, regulated industries, and ML teams need to recreate past results. Flyte makes this automatic.

You have multiple teams sharing infrastructure. The multi-tenancy features shine with many teams. Project and domain isolation prevents conflicts.

Your workflows are compute-intensive. Flyte handles resource allocation well. Tasks can request GPUs, large memory, or specific instance types.

Type safety reduces debugging time. If your team values catching errors early, Flyte’s type system helps.

You’re doing machine learning at scale. The ML-specific features (caching, versioning, resource management) are purpose-built for this.

When to Look Elsewhere

Flyte isn’t always the right choice.

You’re not on Kubernetes. Setting up Kubernetes just for Flyte is a big undertaking. A managed Flyte offering exists (from Union.ai), but adds cost.

Your workflows are simple scheduled jobs. If you’re running cron-style tasks without complex dependencies, Flyte may be overkill.

Your team doesn’t know Python well. Flyte is Python-first. Support for other languages exists but is less mature.

You need a mature ecosystem of connectors. Airflow has hundreds of operators. Flyte’s ecosystem is growing but smaller.

You want minimal operational overhead. Flyte requires running and maintaining several services. Managed options help but aren’t free.

Learning curve is a blocker. Flyte’s concepts (tasks, workflows, launch plans, domains) take time to internalize.

Comparison with Other Tools

Flyte vs Airflow

Airflow is more mature with a bigger ecosystem. Flyte has better reproducibility and type safety.

Airflow’s task-based model is simpler to understand initially. Flyte’s type system catches more errors upfront.

Airflow operators make integrations easy. Flyte requires more custom code but gives more control.

Both can run on Kubernetes, but Flyte was designed for it while Airflow was adapted to it.

Flyte vs Kubeflow Pipelines

Both target ML workflows on Kubernetes. Kubeflow Pipelines is part of the broader Kubeflow ecosystem for ML.

Flyte has better local development. Kubeflow Pipelines requires more YAML configuration.

Kubeflow integrates tightly with Kubernetes native features. Flyte abstracts Kubernetes more.

Teams already using Kubeflow for training often use Kubeflow Pipelines. Teams wanting standalone orchestration prefer Flyte.

Flyte vs Prefect

Prefect has a better developer experience for Python developers. The learning curve is gentler.

Flyte has stronger reproducibility guarantees. Prefect is more flexible but less opinionated.

Prefect works without Kubernetes. Flyte really wants Kubernetes.

Prefect’s hybrid execution model (cloud control plane, local execution) differs from Flyte’s Kubernetes-native approach.

Both are good modern Python orchestrators. Choose based on infrastructure and reproducibility needs.

Real-World Usage Patterns

Feature Engineering Pipeline

Teams use Flyte for offline feature computation.

    from datetime import datetime
    from typing import List

    import pandas as pd
    from flytekit import task, workflow

    @task(cache=True, cache_version="2.0")
    def compute_user_features(user_ids: List[str], date: datetime) -> pd.DataFrame:
        # Expensive feature computation
        return calculate_features(user_ids, date)

    @task
    def write_to_feature_store(features: pd.DataFrame) -> str:
        feature_store.write(features)
        return "success"

    @workflow
    def feature_pipeline(date: datetime) -> str:
        # get_active_users is assumed to be a task that returns List[str]
        users = get_active_users(date=date)
        features = compute_user_features(user_ids=users, date=date)
        result = write_to_feature_store(features=features)
        return result

The caching prevents recomputing features for the same date and users.

Hyperparameter Tuning

Running multiple experiments in parallel.

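    from typing import List

    from flytekit import task, dynamic, workflow

    @task
    def train_with_params(dataset: str, params: dict) -> float:
        model = build_model(**params)
        score = train_and_evaluate(model, dataset)
        return score

    @task
    def select_best(param_grid: List[dict], scores: List[float]) -> dict:
        # The comparison happens inside a task, where scores are real floats
        best_index = max(range(len(scores)), key=lambda i: scores[i])
        return param_grid[best_index]

    @dynamic
    def hyperparameter_search(dataset: str, param_grid: List[dict]) -> dict:
        # Each call returns a promise, not a float, so collect the promises
        # and let a downstream task pick the winner
        scores = [
            train_with_params(dataset=dataset, params=params)
            for params in param_grid
        ]
        return select_best(param_grid=param_grid, scores=scores)

    @workflow
    def tuning_pipeline(dataset_path: str) -> dict:
        # generate_param_grid is assumed to be a task defined elsewhere
        param_combinations = generate_param_grid()
        best_params = hyperparameter_search(dataset=dataset_path, param_grid=param_combinations)
        return best_params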

Each parameter combination runs in parallel. Flyte handles the scheduling.
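The article leaves `generate_param_grid` undefined. One possible shape for it, as a plain-Python sketch, expands a search space into one dict per combination with `itertools.product`. The `space` argument and the parameter names are assumptions for illustration, and in a Flyte workflow the function would also need the @task decorator.

```python
from itertools import product
from typing import Dict, List, Sequence


def generate_param_grid(space: Dict[str, Sequence]) -> List[dict]:
    """Expand {"name": [values, ...]} into one dict per combination."""
    names = list(space)
    return [dict(zip(names, combo)) for combo in product(*space.values())]


grid = generate_param_grid(
    {"learning_rate": [0.001, 0.01], "max_depth": [3, 5, 7]}
)

# Two learning rates times three depths gives six training runs.
for params in grid:
    print(params)
```

The grid size is the product of the option counts, so it is worth checking `len(grid)` before fanning out one training task per entry.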

ETL with Data Quality Checks

Validating data before downstream processing.

    import pandas as pd
    from flytekit import task, workflow
    from great_expectations import DataContext

    @task
    def extract_data(source: str) -> pd.DataFrame:
        return pull_from_source(source)

    @task
    def validate_data(df: pd.DataFrame) -> pd.DataFrame:
        # Legacy Great Expectations API, kept as in the original example
        context = DataContext()
        results = context.run_validation_operator(
            "validate_data",
            assets_to_validate=[df],
        )
        # Raise inside the task: a workflow body only sees promises,
        # so a Python `if` there cannot inspect the validation result
        if not results.success:
            raise ValueError("Data quality checks failed")
        return df

    @task
    def transform_data(df: pd.DataFrame) -> pd.DataFrame:
        return apply_transformations(df)

    @task
    def load_data(df: pd.DataFrame, destination: str) -> str:
        write_to_warehouse(df, destination)
        return "loaded"

    @workflow
    def etl_pipeline(source: str, destination: str) -> str:
        raw = extract_data(source=source)
        validated = validate_data(df=raw)
        transformed = transform_data(df=validated)
        result = load_data(df=transformed, destination=destination)
        return result

The workflow fails fast if data quality is bad. Downstream tasks don’t run with bad data.

Operational Considerations

Running Flyte in production requires thinking through a few areas.

Resource management is important. Set appropriate requests and limits. Monitor actual usage and adjust.

Cost optimization comes from using spot instances where possible. Mark tasks as interruptible when they can tolerate preemption.

Monitoring and alerting should cover workflow failures, task duration anomalies, and resource usage spikes. Flyte exports Prometheus metrics.

Logging is critical for debugging. Flyte captures stdout and stderr from tasks. Structured logging helps when searching through logs.

Secrets management integrates with Kubernetes secrets. Don’t hardcode credentials in task code.

Version control your workflows like application code. Use CI/CD to test and deploy workflow changes.

Access control builds on Flyte’s project and domain model. Limit who can trigger production workflows.

The Future of Flyte

The project keeps evolving. Recent developments point to future directions.

Better UI and visualization features are coming. The team is investing in making FlyteConsole more powerful.

Improved Python debugging support. The local development story is good but can get better.

More language support beyond Python. A Java/Scala SDK (flytekit-java) exists. Other languages are possible.

Enhanced ML features like automated model versioning and deployment integrations.

Performance improvements for large-scale workflows with thousands of tasks.

Simplified deployment to reduce operational complexity. Making it easier to get started.

The community is active. Contributions come from Lyft, Spotify, Fidelity, and others. The project is a graduated project of the LF AI & Data Foundation.

Getting Started with Flyte

If you want to try Flyte, here’s how to start.

Run the sandbox locally. Flyte provides a local Kubernetes environment for testing.

flytectl demo start

This spins up a complete Flyte installation locally.

Write a simple workflow. Start with the examples in this article. Get comfortable with tasks and workflows.

Test locally. Run your workflows as Python scripts before deploying.

Register with the sandbox. Use pyflyte register to upload workflows.

Trigger through the UI. FlyteConsole shows you what’s happening.

Read the documentation. Flyte’s docs are comprehensive. The examples cover common patterns.

Join the community. The Flyte Slack has active users who help newcomers.

Key Takeaways

Flyte solves the reproducibility problem in data and ML workflows. Every execution is recreatable with full context captured automatically.

Type safety catches errors before they waste compute time. The Python type hints become runtime validation.

Kubernetes-native architecture scales naturally. Resource isolation prevents conflicts between tasks.

Local development works without infrastructure. Write and test workflows as normal Python.

Multi-tenancy supports multiple teams on shared infrastructure. Projects and domains provide isolation.

The learning curve exists. Understanding tasks, workflows, and launch plans takes time.

Infrastructure requirements are real. You need Kubernetes or a managed Flyte deployment.

The ecosystem is smaller than Airflow but growing. Core integrations exist for common tools.

Flyte fits teams doing ML at scale, teams needing reproducibility, and teams already on Kubernetes. It may be overkill for simple scheduled jobs.

The tool keeps improving. The community is active and the project is maturing.
