Apache Airflow: The Complete Guide to Workflow Orchestration

Introduction

Data pipelines break. Tasks fail at 3 AM. Dependencies get tangled. Someone needs to track what ran when and why it failed.

Apache Airflow solves these problems. It’s one of the most widely used workflow orchestration platforms in data engineering. If you work with data pipelines, you’ve either used Airflow or you will.

Airflow started at Airbnb in 2014. Maxime Beauchemin built it to manage the company’s growing data workflows. Airbnb open-sourced it in 2015. In 2016 it entered the Apache Incubator, and in 2019 it graduated to a top-level Apache Software Foundation project. Today, it’s the de facto standard for orchestrating data pipelines.

This guide explains what Airflow actually does, how it works, and when it makes sense. You’ll learn the core concepts, see real patterns, and understand the trade-offs. No marketing fluff. Just what you need to know.

What is Apache Airflow?

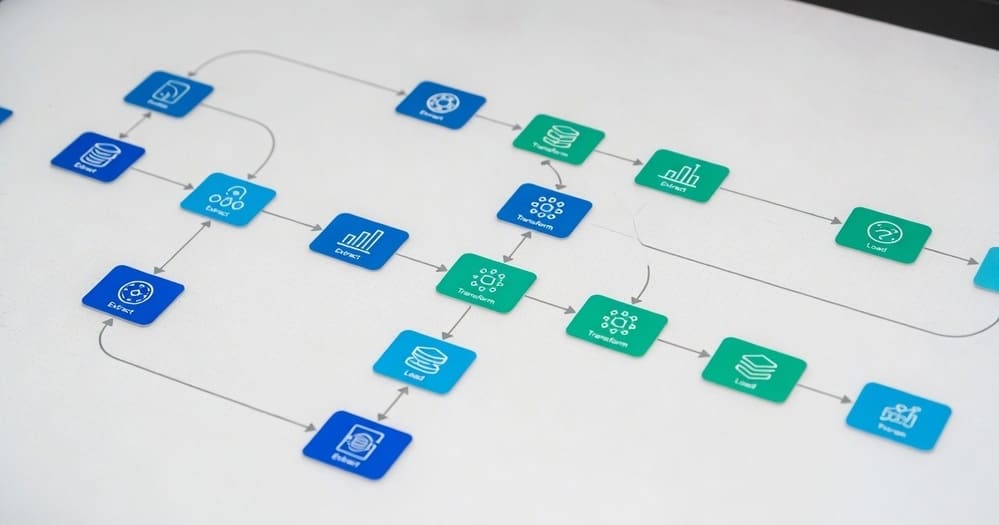

Airflow is a platform for programmatically authoring, scheduling, and monitoring workflows. You write workflows in Python as Directed Acyclic Graphs (DAGs). Airflow handles execution, scheduling, retries, and monitoring.

The key insight: workflows as code. Instead of clicking through UIs or writing XML configs, you write Python. This means version control, testing, code review, and all the practices that make software reliable.

A workflow in Airflow is a DAG. Each node is a task. Edges define dependencies. Task A must complete before Task B runs. Airflow figures out the execution order and runs tasks when their dependencies are met.

Airflow doesn’t process data itself. It orchestrates other systems. It calls APIs, runs SQL queries, triggers Spark jobs, moves files, sends notifications. The actual work happens elsewhere. Airflow just coordinates.

Core Concepts

Understanding Airflow means understanding a few fundamental ideas.

DAGs (Directed Acyclic Graphs) are workflows. Each DAG is a collection of tasks with dependencies. The “acyclic” part means no circular dependencies. Task A can depend on Task B, but B can’t depend on A.
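To make the “acyclic” requirement concrete, here is a minimal sketch that uses Python’s standard-library graphlib module rather than Airflow itself. The task names and the upstream mapping are illustrative assumptions, and Airflow performs its own checks when it parses a DAG.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(upstream):
    """Return one valid run order for a mapping of task -> set of upstream tasks."""
    return list(TopologicalSorter(upstream).static_order())


def has_cycle(upstream):
    """Report whether the dependency mapping contains a circular dependency."""
    try:
        execution_order(upstream)
    except CycleError:
        return True
    return False


# A linear pipeline: extract, then transform, then load.
etl = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
order = execution_order(etl)

# Two tasks that wait on each other can never start.
circular = {"task_a": {"task_b"}, "task_b": {"task_a"}}
```

Here `order` lists the tasks so that every task appears after its upstream tasks, and `has_cycle(circular)` reports the kind of dependency loop a DAG must not contain.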

Tasks are units of work. Each task does one thing: run a Python function, execute a SQL query, call an API, wait for a file. Tasks are instances of operators.

Operators define what tasks do. Airflow includes operators for common operations. BashOperator runs shell commands. PythonOperator runs Python functions. SQLExecuteQueryOperator runs SQL. You can also write custom operators.

Task instances are specific executions of a task. The same task runs multiple times (once per DAG run). Each execution is a separate task instance with its own state and logs.

DAG runs represent workflow executions. When a DAG is triggered, Airflow creates a DAG run. Each run has a logical date (formerly called the execution date) that marks the start of the data interval it covers. That is separate from the wall-clock time at which the run actually starts.

The scheduler determines when tasks run. It monitors DAGs, checks dependencies, and queues tasks for execution.

The executor runs tasks. Different executors work differently. The LocalExecutor runs tasks on the same machine. CeleryExecutor distributes tasks across workers. KubernetesExecutor creates Pods for each task.

The webserver provides the UI. You see DAG status, task logs, execution history, and metrics. You can trigger runs, pause DAGs, and investigate failures.

The metadata database stores everything. DAG definitions, task states, execution history, connections, variables. Usually PostgreSQL or MySQL in production.

A Simple Example

Here’s what a basic Airflow DAG looks like:

from airflow import DAG
from airflow.operators.python import PythonOperator
from datetime import datetime


def extract_data():
    print("Extracting data...")
    return {"records": 100}


def transform_data():
    print("Transforming data...")


def load_data():
    print("Loading data...")


with DAG(
    dag_id='simple_etl',
    start_date=datetime(2025, 1, 1),
    schedule='@daily',
    catchup=False
) as dag:
    extract = PythonOperator(
        task_id='extract',
        python_callable=extract_data
    )

    transform = PythonOperator(
        task_id='transform',
        python_callable=transform_data
    )

    load = PythonOperator(
        task_id='load',
        python_callable=load_data
    )

    extract >> transform >> load

This DAG runs daily. It has three tasks that run sequentially. The >> operator sets dependencies. Extract runs first, then transform, then load.

When Airflow Makes Sense

Airflow isn’t for everything. It shines in specific scenarios.

You have complex data pipelines. Multiple steps with dependencies. Some run in parallel, others wait for upstream tasks. Airflow handles this complexity well.

You need scheduling. Run workflows daily, hourly, or on custom schedules. Airflow’s scheduler is robust and battle-tested.

You want workflows as code. Everything in Git. Code reviews for pipeline changes. CI/CD for deployment. Airflow fits this model perfectly.

You integrate with many systems. Databases, cloud services, Spark clusters, APIs. Airflow has hundreds of operators and hooks for common integrations.

You need visibility. Know what’s running, what failed, and why. The UI shows everything. Logs are easily accessible.

Your team knows Python. Airflow DAGs are Python code. If your team is comfortable with Python, the learning curve is manageable.

Common Use Cases

ETL and ELT Pipelines

This is Airflow’s bread and butter. Extract data from sources, transform it, load it into a warehouse.

A typical pattern: pull data from APIs or databases, clean and transform it, write to Snowflake or BigQuery. Each step is a task. Airflow handles scheduling and failure recovery.

Many teams combine Airflow with dbt. Airflow handles extraction and loading. dbt handles transformation in the warehouse. Airflow triggers dbt runs and monitors results.

Data Quality Checks

Run validation after data loads. Check row counts, null percentages, schema conformance. Send alerts when checks fail.

Airflow makes this easy with branching. If validation passes, continue the pipeline. If it fails, stop and alert someone.
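As a rough sketch of the decision step, the function below returns the ID of the task that should run next. The thresholds and the task IDs (`continue_pipeline`, `alert_team`) are hypothetical. In Airflow, a function like this would typically be handed to a branching operator such as BranchPythonOperator, which follows whichever task ID is returned.

```python
def choose_next_task(row_count, null_fraction, min_rows=1, max_null_fraction=0.05):
    """Pick the downstream task ID based on simple data quality checks."""
    if row_count < min_rows:
        # An empty or truncated load is treated as a failure.
        return "alert_team"
    if null_fraction > max_null_fraction:
        # Too many nulls in the checked column.
        return "alert_team"
    return "continue_pipeline"


healthy = choose_next_task(row_count=10_000, null_fraction=0.01)
empty_load = choose_next_task(row_count=0, null_fraction=0.0)
too_many_nulls = choose_next_task(row_count=10_000, null_fraction=0.20)
```

Keeping the check as a plain function also makes it easy to unit test without a running Airflow instance.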

Machine Learning Workflows

Train models on a schedule. Preprocess data, train, evaluate, deploy if metrics are good.

Airflow doesn’t train models itself. It calls MLflow, Kubeflow, SageMaker, or custom training scripts. It orchestrates the workflow and handles dependencies.

Feature engineering pipelines fit well. Generate features, validate them, update feature stores. Airflow keeps everything running smoothly.

Report Generation

Generate reports daily or weekly. Pull data, run calculations, create visualizations, email results.

Airflow’s EmailOperator or SlackWebhookOperator sends notifications. PythonOperator generates reports. The whole workflow runs on a schedule without manual intervention.

Data Migration

Move data between systems. From on-prem to cloud, from one database to another, from files to a warehouse.

These workflows often run once or periodically. Airflow handles the orchestration, retries failed migrations, and tracks progress.

Architecture Deep Dive

Airflow has several components working together.

In Airflow 2.x, the webserver is a Flask application. It serves the UI and API. Users interact with it to monitor DAGs, view logs, and trigger runs.

The scheduler is the heart of Airflow. It runs continuously, checking which tasks are ready to run. It reads DAG files, parses them, creates DAG runs, and queues tasks when dependencies are met.

The scheduler updates the metadata database with task states. It handles retries, timeouts, and failures. In production, you often run multiple scheduler instances for high availability.

The executor determines how tasks run. Several options exist:

The SequentialExecutor runs one task at a time. Only for testing.

The LocalExecutor runs tasks in parallel using multiprocessing. Good for single-machine setups.

The CeleryExecutor distributes tasks across Celery workers. Scales horizontally. Requires a message broker (Redis or RabbitMQ).

The KubernetesExecutor creates a Pod for each task. Tasks run in Kubernetes. No standing worker pools needed.

The CeleryKubernetesExecutor combines both. Use Celery for light tasks, Kubernetes for heavy ones.

Workers execute tasks. With CeleryExecutor, workers are separate processes polling for work. With KubernetesExecutor, each task gets its own ephemeral Pod.
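To illustrate what “running tasks in parallel once dependencies are met” means, here is a toy sketch built on the standard library. It is not how any Airflow executor is implemented: it uses threads in a single process, waits for each batch to finish before starting the next, and the function and task names are assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


def run_in_batches(upstream, callables, max_workers=4):
    """Run each task after all of its upstream tasks have finished."""
    sorter = TopologicalSorter(upstream)
    sorter.prepare()
    finished = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Every task in this batch has no unmet dependencies,
            # so the whole batch can be submitted at once.
            ready = sorter.get_ready()
            futures = {task_id: pool.submit(callables[task_id]) for task_id in ready}
            for task_id, future in futures.items():
                future.result()  # re-raises any exception from the task
                finished.append(task_id)
                sorter.done(task_id)
    return finished


# b and c both depend on a; d waits for both b and c.
upstream = {"a": set(), "b": {"a"}, "c": {"a"}, "d": {"b", "c"}}
callables = {name: (lambda: None) for name in upstream}
finished = run_in_batches(upstream, callables)
```

In this run, `a` finishes first, `b` and `c` share a batch, and `d` runs last. Real executors differ mainly in where the work runs: local processes, Celery workers, or Kubernetes Pods.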

The metadata database stores everything. DAG metadata, task instances, DAG runs, connections, variables, user information. This database is critical. If it goes down, Airflow stops working.

PostgreSQL is the recommended choice for production. MySQL works but has quirks. SQLite is only for local development.

The message broker (for CeleryExecutor) queues tasks. Redis is common and fast. RabbitMQ is more feature-rich but heavier.

Operators and Hooks

Operators define what tasks do. Airflow ships with many built-in operators.

PythonOperator runs a Python function. Most flexible. You can do anything Python can do.

BashOperator executes shell commands. Good for running scripts or system commands.

SQLExecuteQueryOperator runs SQL queries. Works with various databases through connections.

EmailOperator sends emails. Useful for notifications.

HttpOperator makes HTTP requests. Call REST APIs.

The S3 operators and sensors interact with Amazon S3. Upload, download, check for files.

BigQueryInsertJobOperator runs queries in BigQuery.

SnowflakeOperator executes SQL in Snowflake.

Cloud providers have their own operator packages. The AWS, GCP, and Azure packages include dozens of operators for their services.

Hooks provide interfaces to external systems. Operators use hooks under the hood. The PostgresHook handles Postgres connections. The S3Hook talks to S3.

You can use hooks directly in PythonOperator for more control:

from airflow.providers.postgres.hooks.postgres import PostgresHook


def query_database():
    hook = PostgresHook(postgres_conn_id='my_db')
    records = hook.get_records("SELECT * FROM users")
    return records
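The split between operators and hooks described above can be sketched without Airflow at all. The two classes below are hypothetical stand-ins built on the standard-library sqlite3 module: the hook owns the connection and low-level calls, and the operator describes one unit of work and delegates I/O to the hook. The class names are assumptions for illustration, not Airflow APIs.

```python
import sqlite3


class SqliteHookSketch:
    """Hypothetical hook: owns connection details and low-level database calls."""

    def __init__(self, database=":memory:"):
        self.database = database
        self._conn = None

    def get_conn(self):
        # Open the connection lazily and reuse it afterwards.
        if self._conn is None:
            self._conn = sqlite3.connect(self.database)
        return self._conn

    def run(self, sql, parameters=()):
        conn = self.get_conn()
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, parameters)

    def get_records(self, sql, parameters=()):
        return self.get_conn().execute(sql, parameters).fetchall()


class RowCountOperatorSketch:
    """Hypothetical operator: one unit of work that delegates I/O to a hook."""

    def __init__(self, task_id, table, hook):
        self.task_id = task_id
        self.table = table
        self.hook = hook

    def execute(self, context=None):
        # The table name is a trusted constant here, not user input.
        rows = self.hook.get_records(f"SELECT COUNT(*) FROM {self.table}")
        return rows[0][0]


hook = SqliteHookSketch()
hook.run("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
hook.run("INSERT INTO users (name) VALUES (?)", ("ada",))
user_count = RowCountOperatorSketch("count_users", "users", hook).execute()
```

The benefit of the split is reuse: several operators can share one hook, and the same hook can be called directly from a Python task.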

Task Dependencies

Dependencies determine execution order. Airflow provides several ways to define them.

Bitshift operators are the most common:

task_a >> task_b  # task_b runs after task_a
task_a << task_b  # task_a runs after task_b

Multiple dependencies work too:

task_a >> [task_b, task_c]  # b and c run after a in parallel
[task_a, task_b] >> task_c  # c runs after both a and b

Cross-DAG dependencies let tasks in one DAG wait for tasks in another:

from airflow.sensors.external_task import ExternalTaskSensor

wait_for_upstream = ExternalTaskSensor(
    task_id='wait_for_other_dag',
    external_dag_id='upstream_dag',
    external_task_id='final_task'
)

Dynamic dependencies can be created based on runtime conditions, though this requires careful implementation.

Task Communication

Tasks need to pass data sometimes. Airflow provides mechanisms for this.

XComs (cross-communications) let tasks share small amounts of data. One task pushes data to XCom, another pulls it:

def push_data(**context):
    context['ti'].xcom_push(key='my_key', value='some_value')


def pull_data(**context):
    value = context['ti'].xcom_pull(key='my_key', task_ids='push_task')
    print(value)

XComs are stored in the metadata database. Don’t use them for large data. They’re for metadata, small results, or references to data stored elsewhere.
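One way to follow the “pass references, not data” advice is sketched below with plain Python functions. The file name and record layout are made up for the example. In an Airflow task, the returned path string is what would travel through XCom, and in a multi-worker deployment the location would need to be shared storage such as an object store rather than a local directory.

```python
import json
import tempfile
from pathlib import Path


def extract_to_file(output_dir):
    """Write the full dataset to storage and return only its location."""
    records = [{"id": i, "value": i * 10} for i in range(1000)]
    path = Path(output_dir) / "extract.json"
    path.write_text(json.dumps(records))
    # Only this short string would be handed to the next task.
    return str(path)


def load_from_file(path):
    """Read the dataset back from the location produced upstream."""
    records = json.loads(Path(path).read_text())
    return len(records)


with tempfile.TemporaryDirectory() as workdir:
    reference = extract_to_file(workdir)
    loaded_count = load_from_file(reference)
```

The reference stays a few dozen bytes no matter how large the dataset grows, which keeps the metadata database small.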

TaskFlow API (Airflow 2.0+) makes this cleaner:

from airflow.decorators import task


@task
def extract():
    return {"data": [1, 2, 3]}


@task
def transform(data):
    return [x * 2 for x in data['data']]


@task
def load(data):
    print(data)


# Call the tasks inside a DAG definition (for example, a `with DAG(...)` block)
data = extract()
transformed = transform(data)
load(transformed)

The TaskFlow API handles XCom passing automatically. Much cleaner than manual XCom operations.

Scheduling and Backfilling

Airflow’s scheduler handles when DAGs run.

Schedule intervals define how often DAGs execute. You can use cron expressions or presets:

schedule='@daily'         # Midnight every day
schedule='@hourly'        # Top of every hour
schedule='0 0 * * 0'      # Midnight every Sunday
schedule='*/15 * * * *'   # Every 15 minutes
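For readers new to cron syntax, the sketch below checks whether a moment matches a five-field expression. It is a deliberately small matcher that supports only `*`, `*/n`, and single numbers, which is enough for the examples above; it is not the parser Airflow uses. The preset mapping reflects the standard cron meaning of `@hourly` and `@daily`.

```python
from datetime import datetime

# Standard cron equivalents of two common presets.
PRESETS = {"@hourly": "0 * * * *", "@daily": "0 0 * * *"}


def field_matches(field, value):
    """Match one cron field: '*', '*/n', or a single number."""
    if field == "*":
        return True
    if field.startswith("*/"):
        return value % int(field[2:]) == 0
    return int(field) == value


def cron_matches(expression, moment):
    """Return True if `moment` falls on the schedule described by `expression`."""
    expression = PRESETS.get(expression, expression)
    minute, hour, day, month, weekday = expression.split()
    # Cron counts Sunday as 0; isoweekday() counts Monday=1 ... Sunday=7.
    cron_weekday = moment.isoweekday() % 7
    # Simplification: all fields are combined with AND, which is fine as long
    # as an expression does not restrict both day-of-month and day-of-week.
    return (
        field_matches(minute, moment.minute)
        and field_matches(hour, moment.hour)
        and field_matches(day, moment.day)
        and field_matches(month, moment.month)
        and field_matches(weekday, cron_weekday)
    )


sunday_midnight = cron_matches("0 0 * * 0", datetime(2025, 1, 5, 0, 0))
monday_midnight = cron_matches("0 0 * * 0", datetime(2025, 1, 6, 0, 0))
quarter_hour = cron_matches("*/15 * * * *", datetime(2025, 1, 6, 9, 45))
```

January 5, 2025 is a Sunday, so the weekly expression matches that midnight and not the following one.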

Logical dates (formerly execution dates) represent the period a DAG run covers. A daily DAG with logical date 2025-01-01 processes data for January 1st, even if it runs on January 2nd.

This confuses people at first. The logical date is when the data is from, not when the DAG runs.
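A small sketch of the arithmetic for a daily schedule may help. The helper name is an assumption for illustration; the point is only that the run for a given logical date cannot start until the interval it covers has ended.

```python
from datetime import datetime, timedelta


def daily_data_interval(logical_date):
    """Return the (start, end) of the period a daily run covers."""
    start = logical_date
    end = logical_date + timedelta(days=1)
    return start, end


interval_start, interval_end = daily_data_interval(datetime(2025, 1, 1))
# The run labeled 2025-01-01 covers January 1st and can start
# no earlier than interval_end, which is midnight on January 2nd.
earliest_start = interval_end
```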

Start dates determine when a DAG begins. The first DAG run has a logical date equal to the start date.

Catchup determines whether Airflow runs missed DAG runs. If catchup is True and you deploy a DAG with a start date in the past, Airflow creates runs for all missed intervals.

with DAG(
    dag_id='my_dag',
    start_date=datetime(2025, 1, 1),
    schedule='@daily',
    catchup=True  # Run all days since Jan 1
):
    pass

This is powerful for backfilling data but dangerous if you’re not expecting it.
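To see how many runs catchup can create, the sketch below lists the logical dates whose intervals have already ended. It assumes a fixed-length interval and a hypothetical helper name, and it only approximates what the scheduler does.

```python
from datetime import datetime, timedelta


def missed_logical_dates(start_date, now, interval=timedelta(days=1)):
    """List logical dates whose data interval has fully elapsed by `now`."""
    dates = []
    logical_date = start_date
    while logical_date + interval <= now:
        dates.append(logical_date)
        logical_date += interval
    return dates


deployed_at = datetime(2025, 1, 5, 12, 0)
with_catchup = missed_logical_dates(datetime(2025, 1, 1), deployed_at)
# With catchup disabled, only the most recent completed interval is run.
without_catchup = with_catchup[-1:]
```

Deploying at noon on January 5th with a January 1st start date yields four completed daily intervals. A start date a year in the past would yield hundreds, which is why an unexpected catchup can flood workers and downstream systems.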

Backfilling manually reruns historical DAG runs. Useful when you fix bugs or need to reprocess data:

airflow dags backfill my_dag \
    --start-date 2025-01-01 \
    --end-date 2025-01-31

Error Handling

Failures happen. Airflow provides several mechanisms to handle them.

Retries automatically rerun failed tasks:

from datetime import timedelta

task = PythonOperator(
    task_id='flaky_task',
    python_callable=some_function,
    retries=3,
    retry_delay=timedelta(minutes=5)
)

After a failure, Airflow waits 5 minutes and tries again. It does this up to 3 times.

Exponential backoff increases delay between retries:

# Extra operator arguments, alongside retries and retry_delay
retry_exponential_backoff=True,
max_retry_delay=timedelta(hours=1)
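The effect of these two settings can be approximated with a few lines of Python. This is a simplified doubling scheme with a cap, written for illustration; Airflow’s own calculation differs in detail (for example, it varies the delay slightly), so treat the numbers as indicative only.

```python
from datetime import timedelta


def retry_delays(retries, retry_delay, max_retry_delay=None):
    """Return the wait before each retry, doubling each time up to a cap."""
    delays = []
    for attempt in range(retries):
        delay = retry_delay * (2 ** attempt)
        if max_retry_delay is not None:
            delay = min(delay, max_retry_delay)
        delays.append(delay)
    return delays


delays = retry_delays(
    retries=6,
    retry_delay=timedelta(minutes=5),
    max_retry_delay=timedelta(hours=1),
)
delay_minutes = [int(d.total_seconds() // 60) for d in delays]
```

With a 5-minute base and a 1-hour cap, the waits grow as 5, 10, 20, and 40 minutes and then stay at 60.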

On-failure callbacks run when tasks fail:

def alert_on_failure(context):
    # send_slack_message is a placeholder for your own notification helper
    send_slack_message(f"Task failed: {context['task_instance']}")


task = PythonOperator(
    task_id='important_task',
    python_callable=critical_function,
    on_failure_callback=alert_on_failure
)

Timeouts prevent tasks from running forever:

task = PythonOperator(
    task_id='might_hang',
    python_callable=some_function,
    execution_timeout=timedelta(hours=2)
)

After 2 hours, Airflow kills the task.

Sensors wait for conditions before proceeding. S3KeySensor waits for a file in S3:

from airflow.providers.amazon.aws.sensors.s3 import S3KeySensor

wait_for_file = S3KeySensor(
    task_id='wait_for_data',
    bucket_name='my-bucket',
    bucket_key='data/file.csv',
    timeout=3600,
    poke_interval=60
)

It checks every 60 seconds for up to 1 hour.
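The poke-and-wait behavior described above boils down to a loop like the one sketched here. The function name and the injectable `clock` and `sleep` parameters are assumptions made so the loop can be tested without real waiting; an Airflow sensor raises its own timeout exception rather than the built-in `TimeoutError` used in this sketch.

```python
import time


def wait_for(condition, timeout, poke_interval, clock=time.monotonic, sleep=time.sleep):
    """Poll `condition` every `poke_interval` seconds until it is true or time runs out."""
    deadline = clock() + timeout
    while True:
        if condition():
            return True
        # Give up if the next poke would land after the deadline.
        if clock() + poke_interval > deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        sleep(poke_interval)
```

A call such as `wait_for(file_exists, timeout=3600, poke_interval=60)`, where `file_exists` is any zero-argument function returning a boolean, mirrors the sensor settings shown above.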

Monitoring and Observability

The Airflow UI shows everything happening in your system.

The DAG view lists all DAGs with their schedules and recent runs. You see which are paused, running, or failed.

The grid view shows task instances across DAG runs. Each cell represents one task instance. Colors indicate states: dark green for success, red for failed, light green for running, and yellow for tasks waiting to retry.

The graph view visualizes DAG structure. You see tasks and dependencies as a directed graph.

The calendar view shows success and failure rates over time. Helpful for spotting patterns or recurring issues.

Task logs are accessible from the UI. Click on any task instance to see stdout and stderr from its execution.

Gantt charts show task timing. You can identify bottlenecks or tasks that take unexpectedly long.

Metrics are emitted through StatsD (and, in recent releases, OpenTelemetry), and a StatsD exporter can feed them to Prometheus. Track DAG success rates, task duration, scheduler performance, and queue lengths.

Alerting can be set up through callbacks, email operators, or monitoring integrations. Get notified when critical workflows fail.

Connections and Variables

Airflow stores configuration separately from code.

Connections hold credentials for external systems. Database URLs, API keys, cloud credentials. Passwords and extra fields are encrypted with a Fernet key before they’re stored in the metadata database.

You create connections through the UI or CLI:

airflow connections add 'my_postgres' \
    --conn-type 'postgres' \
    --conn-host 'db.example.com' \
    --conn-login 'user' \
    --conn-password 'pass' \
    --conn-port 5432

Tasks reference connections by ID:

from airflow.providers.common.sql.operators.sql import SQLExecuteQueryOperator

query = SQLExecuteQueryOperator(
    task_id='run_query',
    conn_id='my_postgres',
    sql='SELECT * FROM users'
)

Variables store configuration values. Environment names, thresholds, file paths. Anything that might change between environments.

Set variables through the UI or CLI:

airflow variables set my_var "some_value"

Access them in DAGs:

from airflow.models import Variable

threshold = Variable.get("threshold", default_var=100)

Variables can be JSON for complex configuration:

config = Variable.get("my_config", deserialize_json=True)

Scaling Airflow

Small Airflow deployments run on a single machine. As you grow, you need to scale.

Horizontal scaling adds more workers. With CeleryExecutor, deploy more worker nodes. Tasks distribute across them automatically.

Multiple schedulers (Airflow 2.0+) improve scheduler throughput. Run 2-3 scheduler instances for high availability and better performance.

Database optimization becomes critical at scale. Use connection pooling. Tune PostgreSQL for your workload. Archive old task instances and DAG runs.

DAG parsing can become a bottleneck. The scheduler parses DAG files to detect changes. With hundreds of DAGs, this takes time. Minimize DAG file complexity. Use top-level imports sparingly.

Task concurrency limits prevent overwhelming downstream systems:

with DAG(
    dag_id='my_dag',
    max_active_runs=3,
    max_active_tasks=10  # called `concurrency` before Airflow 2.2
) as dag:
    pass

This limits the DAG to 3 concurrent runs and 10 concurrently running task instances across all of those runs.

Pool-based task queuing manages resource contention:

task = PythonOperator(
    task_id='database_task',
    python_callable=query_db,
    pool='database_pool'
)

Create a pool with limited slots. Tasks using that pool queue when slots are full.
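The queuing behavior of a pool can be pictured with a tiny simulation. It assumes every task takes one time step and occupies one slot, which real tasks do not, and the function name is hypothetical.

```python
from collections import deque


def simulate_pool(task_ids, slots):
    """Group tasks into the waves in which they would start, given `slots` per wave."""
    if slots < 1:
        raise ValueError("a pool needs at least one slot")
    waiting = deque(task_ids)
    waves = []
    while waiting:
        # Start as many waiting tasks as there are free slots.
        wave = [waiting.popleft() for _ in range(min(slots, len(waiting)))]
        waves.append(wave)
    return waves


waves = simulate_pool(["q1", "q2", "q3", "q4", "q5"], slots=2)
```

With two slots and five queries, the tasks start in three waves, so the database never sees more than two of them at once.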

KubernetesExecutor scales differently. Each task gets its own Pod. No standing worker pools. Resources are allocated per task. This works well for variable workloads.

Best Practices

Production Airflow deployments follow certain patterns.

Keep DAGs simple. Complex logic belongs in functions or external libraries, not in DAG definition files. DAG files should be declarative and quick to parse.

One DAG per file. Don’t define multiple DAGs in the same file. It makes debugging harder and can cause issues with the scheduler.

Use version control. All DAGs should be in Git. Review changes before merging. Use CI/CD to deploy DAGs.

Test DAGs before deploying. Run unit tests on task logic. Use pytest to verify DAG structure and dependencies.

Set appropriate timeouts and retries. Every task should have a timeout. Critical tasks should retry. Non-critical tasks might fail fast.

Don’t use SubDAGs. They have known issues and are deprecated. Use TaskGroups instead for logical grouping.

Minimize top-level code. Code at the module level runs every time the DAG file is parsed, which happens repeatedly. Keep it minimal. Expensive operations belong in tasks, not in DAG definition.

Use the TaskFlow API. For Python tasks, the TaskFlow API is cleaner than traditional operators.

Monitor resource usage. Track database size, scheduler performance, worker resource consumption. Set up alerts before problems occur.

Archive old data. Task instances and DAG runs accumulate. Archive them to keep the database manageable.

Use connections and variables. Never hardcode credentials or environment-specific config in DAG files.

Document your DAGs. Add docstrings explaining what each DAG does, when it runs, and who owns it.
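The advice above about keeping logic out of DAG files and testing it pays off together. As a minimal sketch, the transformation below has no Airflow imports, so a test runner such as pytest can exercise it directly. The function, field names, and test names are all hypothetical.

```python
def transform(records):
    """Convert amounts to integer cents and drop rows with no amount."""
    return [
        {**record, "amount_cents": round(record["amount"] * 100)}
        for record in records
        if record["amount"] is not None
    ]


def test_transform_converts_to_cents():
    result = transform([{"id": 1, "amount": 12.5}])
    assert result == [{"id": 1, "amount": 12.5, "amount_cents": 1250}]


def test_transform_drops_missing_amounts():
    result = transform([{"id": 1, "amount": None}, {"id": 2, "amount": 3.0}])
    assert [row["id"] for row in result] == [2]
```

A DAG file would then import `transform` and wrap it in a task, leaving the DAG definition itself short and quick to parse.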

Common Pitfalls

Several mistakes show up repeatedly.

Misunderstanding execution dates. The execution date (now called the logical date) is when the data is from, not when the DAG runs. This trips up newcomers constantly.

Not setting start dates correctly. Start dates must be in the past and static. Don’t use datetime.now() as a start date.

Expecting immediate execution. A scheduled run starts only after the interval it covers has ended. A daily DAG whose start date is today won’t run until tomorrow.

Using XComs for large data. XComs go in the metadata database. Passing large dataframes or files through XComs causes problems. Store data externally and pass references.

Making DAGs too granular. Having hundreds of tiny DAGs creates overhead. Group related logic into reasonable DAGs.

Creating dynamic DAGs poorly. Generating DAGs programmatically can lead to parse time issues. Be careful with loops that create tasks.

Not handling timezones. Airflow uses UTC internally. Schedule intervals and execution dates are in UTC unless you explicitly configure otherwise.

Ignoring database maintenance. The metadata database grows over time. Archive old data or it will slow everything down.
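The timezone pitfall above is easy to check with the standard library. The sketch uses a fixed UTC offset to stay self-contained, which ignores daylight saving time; the helper name and the offset are assumptions for the example.

```python
from datetime import datetime, timedelta, timezone


def to_local(utc_moment, utc_offset_hours):
    """Convert a UTC timestamp to a fixed-offset local time."""
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    return utc_moment.astimezone(local_tz)


# A DAG scheduled for midnight UTC...
midnight_utc = datetime(2025, 1, 2, 0, 0, tzinfo=timezone.utc)
# ...fires at 19:00 on the previous calendar day for a team at UTC-5.
local_time = to_local(midnight_utc, utc_offset_hours=-5)
```

A “daily at midnight” schedule therefore lands on the evening of January 1st for that team, which matters when reports are expected to reflect a local business day.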

Airflow 2.0 vs Earlier Versions

Airflow 2.0 (released December 2020) was a major overhaul.

Better scheduler performance. The new scheduler is significantly faster and more reliable.

Multiple schedulers. High availability is built in. Run multiple scheduler instances for redundancy.

TaskFlow API. Python tasks are much cleaner with decorators and automatic XCom handling.

Removal of experimental features. SubDAGs are deprecated. Kubernetes Executor is stable.

Full REST API. Programmatic access to all Airflow features.

Improved UI. The grid view (added in Airflow 2.3) and other enhancements make monitoring easier.

Provider packages. Operators for external systems are now separate packages. You only install what you need.

If you’re still on Airflow 1.x, upgrading should be a priority. Support has ended and you’re missing significant improvements.

Managed Airflow Options

Running Airflow yourself requires effort. Managed options exist.

Google Cloud Composer is managed Airflow on GCP. Google handles infrastructure, updates, and monitoring. You write DAGs and upload them. Pricing is based on environment size.

Amazon MWAA (Managed Workflows for Apache Airflow) is AWS’s offering. Similar model. AWS manages the infrastructure. You focus on workflows.

Astronomer provides both cloud and self-hosted managed Airflow. They offer enterprise features, support, and tooling beyond standard Airflow.

Managed services cost more than self-hosting but save operations effort. For smaller teams or those without DevOps resources, they make sense.

Comparison with Alternatives

Airflow isn’t the only option. How does it compare?

vs Prefect: Prefect has a cleaner Python API and better development experience. But Airflow has a larger ecosystem and more battle-testing. Use Prefect for new projects if you value developer experience. Use Airflow if you need proven reliability at scale.

vs Dagster: Dagster’s asset-oriented model is fundamentally different. It’s better for data quality and lineage. Airflow is better for task orchestration. They solve slightly different problems.

vs Luigi: Luigi is older and simpler. Airflow has surpassed it in features and community. New projects should use Airflow.

vs Argo Workflows: Argo is Kubernetes-native. If you’re all-in on K8s, Argo might be simpler. But Airflow integrates with more systems and has better data-specific features.

vs Step Functions: Step Functions is serverless and AWS-only. Airflow is portable and self-hosted. Step Functions is easier to operate. Airflow is more flexible.

vs Temporal: Temporal excels at long-running, stateful workflows with complex business logic. Airflow is better for scheduled data pipelines. Different strengths.

The Future of Airflow

Airflow continues to evolve. The community is active and releases are regular.

Better Kubernetes integration is ongoing. The KubernetesExecutor keeps improving. Airflow on K8s is becoming the preferred deployment model.

Performance improvements continue. Scheduler optimizations, better database queries, faster DAG parsing.

Enhanced observability with better metrics, logging, and tracing. Integration with modern observability platforms.

Data-aware scheduling keeps expanding. Since Airflow 2.4, DAGs can be scheduled on dataset updates rather than just time.

Improved data lineage tracking. Better integration with data catalog tools.

Security enhancements including better secret management and role-based access control.

The project has momentum. Major companies depend on it. Development is well-funded through Astronomer and other commercial entities.

Key Takeaways

Apache Airflow is among the most mature and widely used workflow orchestration platforms.

It’s built for data engineering. ETL pipelines, data quality checks, report generation, ML workflows all fit naturally.

The learning curve is real. You need to understand DAGs, scheduling, executors, and many concepts. But the investment pays off.

Workflows as code is the killer feature. Version control, testing, code review, and CI/CD make pipelines reliable.

The ecosystem is massive. Hundreds of operators and hooks. Extensive documentation and community support.

Challenges include operational complexity, resource requirements, and occasional quirks. But mature tools exist to address these.

Managed offerings remove operational burden. Consider them if you lack DevOps resources.

Airflow isn’t perfect. Newer tools have better UX. But nothing matches its combination of features, stability, and ecosystem.

If you’re building data pipelines, Airflow deserves serious consideration. It’s proven at scale and has the community behind it.
