Workflow Orchestration in 2026: Choosing the Right Tool for Your Data Pipelines

Introduction

Every data team faces the same problem. You have tasks that need to run in a specific order. Some depend on others finishing first. Some need to retry when they fail. Some run once a day, others every few minutes.

This is workflow orchestration. It’s the backbone of modern data engineering.

The market is crowded with tools. Apache Airflow dominates mindshare, but newer platforms like Prefect, Dagster, and Temporal are gaining ground. Cloud-native options like AWS Step Functions offer different trade-offs. Specialized tools like dbt have transformed how teams build data models.

This guide breaks down 22 workflow orchestration tools. You’ll learn what each does best, where it falls short, and how to pick the right one for your needs.

What is Workflow Orchestration?

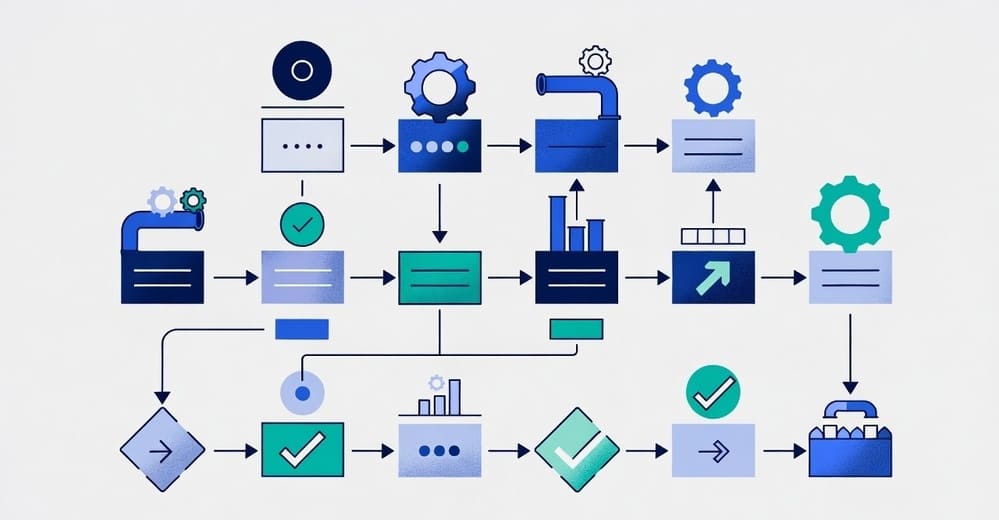

Workflow orchestration manages dependencies between tasks. It handles scheduling, monitoring, retries, and failures. Good orchestration tools let you define workflows as code, version them, and see what’s running at any moment.

The core concepts are simple. Tasks are individual units of work. Workflows connect tasks with dependencies. Schedulers trigger workflows at the right time. Executors run the actual work.
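Here is a minimal sketch of those concepts using only the Python standard library. The task names and the `run` helper are made up for illustration. A real orchestrator adds scheduling, retries, and persistence on top of this idea.

```python
from graphlib import TopologicalSorter

# Tasks: plain functions, each one unit of work (names are illustrative).
def extract():
    return [1, 2, 3]

def transform(rows):
    return [r * 10 for r in rows]

def load(rows):
    return f"loaded {len(rows)} rows"

# Workflow: each task mapped to the set of tasks it depends on.
workflow = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}

def run(workflow, tasks):
    """A toy executor: run tasks in dependency order, passing upstream results along."""
    results = {}
    for name in TopologicalSorter(workflow).static_order():
        upstream = [results[dep] for dep in sorted(workflow[name])]
        results[name] = tasks[name](*upstream)
    return results

results = run(workflow, {"extract": extract, "transform": transform, "load": load})
print(results["load"])
```

A scheduler, in this picture, is whatever calls `run` at the right time. That could be a timer, a cron entry, or an incoming event.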

Modern orchestration goes beyond basic scheduling. It includes data lineage tracking, backfilling historical runs, dynamic workflow generation, and integration with cloud services.

The Major Players

Apache Airflow

Airflow is the 800-pound gorilla. It started at Airbnb in 2014 and was publicly announced as an open-source project in 2015. Most data teams have used it or considered it.

What it does well:

- Mature ecosystem with hundreds of operators

- Strong community support and extensive documentation

- Visual DAG interface for monitoring workflows

- Flexible Python-based workflow definition

Where it struggles:

- Complex setup and maintenance

- Resource-heavy for small workloads

- DAG definition can get messy in large projects

- Backfilling and reruns require careful handling

Airflow works best for batch processing workflows. If you’re building ETL pipelines that run daily or hourly, it’s a solid choice. The learning curve is real, but the investment pays off for teams running dozens or hundreds of workflows.

Common use cases: ETL/ELT pipelines, data warehouse updates, machine learning training workflows, report generation

Prefect

Prefect launched as a response to Airflow’s complexity. The team wanted something easier to develop with and simpler to operate.

What it does well:

- Clean Python API that feels natural

- Hybrid execution model (cloud coordination, local execution)

- Better error handling and retry logic

- No DAG limitations (dynamic workflows are easier)

Where it struggles:

- Smaller ecosystem than Airflow

- Less mature for very large scale deployments

- Community is growing but still smaller

Prefect shines when you want to move fast. The development experience is better than Airflow. You write normal Python functions and add decorators. Version 2.0 made significant improvements to the architecture.
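To make the decorator idea concrete, here is a standard-library sketch. It is not Prefect’s API. The `task` decorator and the `RUN_LOG` list are hypothetical names that only show how a wrapper can turn a plain function into something an orchestrator can observe.

```python
import functools
import time

RUN_LOG = []  # hypothetical in-memory record of task runs

def task(fn):
    """Wrap a plain function so every call is recorded with its status and duration."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        started = time.perf_counter()
        try:
            result = fn(*args, **kwargs)
        except Exception:
            RUN_LOG.append({"task": fn.__name__, "status": "failed",
                            "seconds": time.perf_counter() - started})
            raise
        RUN_LOG.append({"task": fn.__name__, "status": "success",
                        "seconds": time.perf_counter() - started})
        return result
    return wrapper

@task
def clean(values):
    return [v.strip().lower() for v in values]

@task
def count_unique(values):
    return len(set(values))

total = count_unique(clean([" A", "a ", "B"]))
print(total, [entry["task"] for entry in RUN_LOG])
```

The functions stay ordinary Python. You can call them in a test or a notebook without any orchestration server running.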

Common use cases: Data science workflows, API-driven pipelines, event-driven automation, hybrid cloud/local processing

Dagster

Dagster takes a different approach. It treats data assets as first-class citizens. Instead of thinking about tasks, you think about the data you’re producing.

What it does well:

- Asset-based orchestration model

- Strong typing and data validation

- Excellent testing framework

- Built-in data lineage and cataloging

Where it struggles:

- Steeper learning curve if you’re used to task-based thinking

- Smaller community than Airflow or Prefect

- Some features still maturing

Dagster works best when data quality and lineage matter. If you need to track which datasets depend on which, Dagster makes it explicit. The software-defined assets paradigm changes how you think about pipelines.
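The sketch below illustrates the asset idea with the standard library only. It is not Dagster’s API. The `asset` decorator, the `ASSETS` registry, and the asset names are assumptions made for this example. Each function produces one named dataset, and its parameter names declare which upstream datasets it needs.

```python
import inspect
from graphlib import TopologicalSorter

ASSETS = {}  # hypothetical registry: asset name -> function that produces it

def asset(fn):
    """Register a function as the producer of the asset named after it."""
    ASSETS[fn.__name__] = fn
    return fn

@asset
def raw_orders():
    return [{"id": 1, "amount": 40}, {"id": 2, "amount": 60}]

@asset
def order_totals(raw_orders):
    # The parameter name declares a dependency on the raw_orders asset.
    return sum(row["amount"] for row in raw_orders)

@asset
def revenue_report(order_totals):
    return f"total revenue: {order_totals}"

def lineage():
    """Map each asset to the upstream assets named in its signature."""
    return {name: set(inspect.signature(fn).parameters) for name, fn in ASSETS.items()}

def materialize():
    """Build every asset in dependency order and return the results."""
    graph = lineage()
    built = {}
    for name in TopologicalSorter(graph).static_order():
        built[name] = ASSETS[name](**{dep: built[dep] for dep in graph[name]})
    return built

print(lineage()["revenue_report"])
print(materialize()["revenue_report"])
```

Lineage falls out of the definitions. Nobody has to maintain a separate dependency list.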

Common use cases: Data platform development, analytics engineering, ML feature pipelines, data quality monitoring

Temporal

Temporal isn’t a data orchestration tool in the traditional sense. It’s a workflow engine for building reliable distributed applications. But data teams are using it more.

What it does well:

- Extremely reliable execution guarantees

- Handles long-running workflows (days, weeks, months)

- Built-in versioning and deployment strategies

- SDKs for multiple languages (Python, Go, Java, TypeScript)

Where it struggles:

- Not purpose-built for data workflows

- Requires running infrastructure (though cloud version exists)

- Conceptual model takes time to understand

Temporal excels at complex business workflows that need bulletproof reliability. If your workflow involves multiple systems, long waits, or critical business logic, Temporal is worth considering.

Common use cases: Order processing, payment workflows, customer onboarding, complex ETL with external dependencies

dbt (data build tool)

dbt transformed analytics engineering. It’s not a general workflow orchestrator, but it orchestrates SQL transformations in your data warehouse.

What it does well:

- SQL-first approach for analytics engineers

- Built-in testing and documentation

- Dependency management through the ref() function

- Version control and CI/CD friendly

Where it struggles:

- Limited to SQL transformations

- Needs external scheduler for production

- Not suitable for non-transformation tasks

dbt is essential for modern analytics stacks. It handles the T in ELT. You define models in SQL, dbt figures out the execution order. Most teams combine dbt with Airflow or Prefect for end-to-end orchestration.
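How can a tool work out execution order from SQL alone? The sketch below shows one way, assuming models reference each other with `{{ ref('model_name') }}`. It is a simplified illustration, not dbt’s actual parser. The model names and SQL are invented.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical models: name -> SQL text containing dbt-style ref() calls.
MODELS = {
    "stg_orders": "select * from raw.orders",
    "stg_customers": "select * from raw.customers",
    "customer_orders": """
        select c.id, count(o.id) as order_count
        from {{ ref('stg_customers') }} c
        left join {{ ref('stg_orders') }} o on o.customer_id = c.id
        group by c.id
    """,
    "top_customers": "select * from {{ ref('customer_orders') }} where order_count > 10",
}

REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")

def dependencies(sql):
    """Return the set of model names referenced through ref() in one SQL string."""
    return set(REF_PATTERN.findall(sql))

def execution_order(models):
    """Order models so each one comes after everything it references."""
    graph = {name: dependencies(sql) for name, sql in models.items()}
    return list(TopologicalSorter(graph).static_order())

order = execution_order(MODELS)
print(order)
```

The two staging models have no dependencies, so they can run in either order. Everything downstream waits for them.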

Common use cases: Data warehouse transformations, dimensional modeling, analytics engineering, metric definitions

AWS Step Functions

Step Functions is AWS’s serverless orchestration service. It uses state machines defined in the JSON-based Amazon States Language.
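Here is a rough idea of what such a definition looks like, built as a Python dictionary and serialized to JSON. The field names follow the Amazon States Language. The ARNs, account ID, and function names are placeholders, and `check_transitions` is a helper written for this example, not part of any AWS SDK.

```python
import json

# Placeholder ARNs: the account ID, region, and function names are hypothetical.
EXTRACT_ARN = "arn:aws:lambda:us-east-1:123456789012:function:extract-orders"
LOAD_ARN = "arn:aws:lambda:us-east-1:123456789012:function:load-orders"

state_machine = {
    "Comment": "Two-step pipeline: extract, then load",
    "StartAt": "Extract",
    "States": {
        "Extract": {
            "Type": "Task",
            "Resource": EXTRACT_ARN,
            "Retry": [{
                "ErrorEquals": ["States.TaskFailed"],
                "IntervalSeconds": 5,
                "MaxAttempts": 3,
                "BackoffRate": 2.0,
            }],
            "Next": "Load",
        },
        "Load": {"Type": "Task", "Resource": LOAD_ARN, "End": True},
    },
}

def check_transitions(definition):
    """Basic sanity check: return any StartAt or Next target that is not a defined state."""
    states = definition["States"]
    targets = [definition["StartAt"]] + [s["Next"] for s in states.values() if "Next" in s]
    return [t for t in targets if t not in states]

definition_json = json.dumps(state_machine, indent=2)
print(definition_json)
```

Even this two-step pipeline takes around twenty lines of JSON. Generating definitions from code is one way teams keep them manageable.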

What it does well:

- Fully managed (no infrastructure to maintain)

- Native AWS service integration

- Pay per use pricing model

- Visual workflow designer

Where it struggles:

- Locked into AWS ecosystem

- JSON configuration can get verbose

- Limited local development and testing

- Can be more expensive at scale than self-hosted options

Step Functions works well for AWS-centric architectures. If you’re all-in on AWS and want minimal operational overhead, it’s a natural fit.

Common use cases: AWS Lambda orchestration, microservice coordination, batch processing jobs, event-driven workflows

Luigi

Luigi came from Spotify in 2012. It predates Airflow and influenced its design.

What it does well:

- Simple Python-based task definition

- Built-in support for Hadoop and Spark

- Lightweight and easy to understand

- Good for smaller teams and projects

Where it struggles:

- Less active development than competitors

- Basic UI compared to modern tools

- Smaller ecosystem and community

- Missing features found in newer tools

Luigi still has users, but most teams are migrating to newer options. It’s fine for simple workflows, but lacks the features teams expect now.

Common use cases: Legacy data pipelines, simple ETL workflows, Hadoop job coordination

Cloud-Native and Kubernetes Options

Argo Workflows

Argo is Kubernetes-native. Each workflow step runs in a container. It’s part of the CNCF and widely used in cloud-native environments.

What it does well:

- Designed for Kubernetes from the ground up

- Container-based execution

- Support for complex DAG patterns

- Good for CI/CD and ML workflows

Where it struggles:

- Requires Kubernetes expertise

- YAML configuration can be verbose

- Steeper learning curve for non-DevOps teams

Argo fits teams already invested in Kubernetes. If you’re running microservices or ML workloads on K8s, Argo integrates naturally.

Common use cases: MLOps pipelines, data processing on Kubernetes, CI/CD workflows, batch processing

Kubeflow Pipelines

Kubeflow Pipelines is purpose-built for machine learning workflows on Kubernetes.

What it does well:

- ML-focused features (experiment tracking, model versioning)

- Container-based execution

- Integration with ML frameworks

- Component reusability

Where it struggles:

- Requires Kubernetes

- Complex setup and maintenance

- Overkill for non-ML workflows

Kubeflow Pipelines works best for ML teams running on Kubernetes. It handles the full ML lifecycle from data prep to deployment.

Common use cases: ML model training, hyperparameter tuning, model deployment pipelines, feature engineering

Flyte

Flyte came from Lyft. It’s another Kubernetes-native workflow engine with strong ML support.

What it does well:

- Type-safe workflow definition

- Versioning and reproducibility built-in

- Multi-tenancy support

- Good for ML and data science

Where it struggles:

- Requires Kubernetes infrastructure

- Smaller community than competitors

- Learning curve for new users

Flyte targets teams that need reproducible ML workflows at scale. The type system catches errors before runtime.
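The sketch below shows the general idea of checking types before anything runs. It uses only Python’s type hints and is not Flyte’s API. `check_edge` and the three task functions are hypothetical.

```python
from typing import get_type_hints

def fetch_scores() -> list[float]:
    return [0.2, 0.9, 0.5]

def average(scores: list[float]) -> float:
    return sum(scores) / len(scores)

def label(name: str) -> str:
    return name.upper()

def check_edge(upstream, downstream, param):
    """Compare the upstream return annotation with the downstream parameter annotation."""
    produced = get_type_hints(upstream)["return"]
    expected = get_type_hints(downstream)[param]
    if produced != expected:
        raise TypeError(
            f"{upstream.__name__} returns {produced}, "
            f"but {downstream.__name__}({param}) expects {expected}"
        )

# A valid connection passes silently. No task code has run yet.
check_edge(fetch_scores, average, "scores")

# An invalid connection is rejected while the workflow is being assembled.
mismatch = None
try:
    check_edge(fetch_scores, label, "name")
except TypeError as err:
    mismatch = str(err)
    print(mismatch)
```

The mismatch surfaces when the workflow is wired together, not hours into a training run.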

Common use cases: ML experimentation, data processing pipelines, reproducible research, batch predictions

Specialized and Domain-Specific Tools

Apache Beam

Beam is different from the others. It’s a unified programming model for batch and streaming data processing. You write your pipeline once, run it on multiple execution engines.

What it does well:

- Unified batch and streaming model

- Portable across engines (Spark, Flink, Dataflow)

- Strong windowing and state management

- Good for complex event processing

Where it struggles:

- Steep learning curve

- Abstraction can add complexity

- Debugging distributed failures is hard

Beam makes sense when you need processing logic that works in both batch and streaming modes. Or when you want to avoid lock-in to a specific execution engine.

Common use cases: Real-time analytics, event processing, streaming ETL, cross-engine data processing

Metaflow

Metaflow came from Netflix. It’s built for data scientists who want to move from notebooks to production.

What it does well:

- Designed for data scientists (not engineers)

- Easy transition from local to cloud execution

- Built-in versioning and experiment tracking

- AWS and Kubernetes support

Where it struggles:

- Less suitable for complex orchestration

- Smaller community

- Limited to specific use cases

Metaflow bridges the gap between experimentation and production. Data scientists can develop locally and deploy to production with minimal changes.

Common use cases: ML model development, data science workflows, A/B testing pipelines, research to production

Nextflow and Snakemake

These tools dominate bioinformatics and scientific computing. They’re designed for reproducible computational workflows.

What they do well:

- Reproducibility is core to the design

- Container integration (Docker, Singularity)

- Domain-specific features for scientific computing

- Strong version control and provenance tracking

Where they struggle:

- Less common outside scientific domains

- Smaller general-purpose ecosystem

Nextflow and Snakemake are the right choice for genomics, proteomics, and scientific data analysis. They handle complex computational workflows that need to be reproducible years later.

Common use cases: Genomics pipelines, bioinformatics workflows, scientific data processing, research reproducibility

Legacy and Specialized Tools

Cron

Cron is the original workflow scheduler. It’s been in Unix systems since 1975.

What it does well:

- Simple and universal

- No dependencies or setup

- Reliable for basic scheduling

- Available on nearly every Linux system

Where it struggles:

- No dependency management

- No monitoring or alerting

- No retry logic

- Difficult to manage at scale

Cron still has a place for simple scheduled tasks. But modern data workflows need more sophisticated orchestration.

Common use cases: System maintenance tasks, simple scheduled scripts, backup jobs

Jenkins Job Builder

Jenkins is primarily a CI/CD tool, but teams use it for data workflows too.

What it does well:

- Strong plugin ecosystem

- Familiar to DevOps teams

- Good integration with version control

- Supports complex build and deploy logic

Where it struggles:

- Not designed for data workflows

- Configuration can get messy

- Better modern alternatives exist

Jenkins works if you’re already using it for CI/CD and have simple data workflows. But dedicated orchestration tools are better for complex data pipelines.

Common use cases: ETL triggered by code commits, data quality checks in CI, simple scheduled data jobs

Azkaban

Azkaban came from LinkedIn. It’s another older tool that influenced modern orchestrators.

What it does well:

- Web-based workflow designer

- Good permission and access control

- Reliable execution for batch jobs

Where it struggles:

- Development has slowed

- UI feels dated

- Limited ecosystem compared to newer tools

Azkaban is mostly seen in legacy systems now. Teams are migrating to more active projects.

Common use cases: Legacy Hadoop workflows, batch processing at companies with existing Azkaban infrastructure

Conductor

Conductor is a workflow engine for microservices orchestration that originated at Netflix.

What it does well:

- Built for microservice coordination

- Good for long-running workflows

- Strong failure handling

Where it struggles:

- Less common in data engineering

- Requires running infrastructure

- Smaller community

Conductor fits teams building complex microservice applications. It’s less common in pure data engineering contexts.

Common use cases: Microservice orchestration, business process automation, saga patterns

Rundeck

Rundeck focuses on runbook automation and operations workflows.

What it does well:

- Operations-focused features

- Good access control and audit logging

- Self-service job execution

Where it struggles:

- Not optimized for data workflows

- Limited data engineering features

Rundeck works better for IT operations than data engineering. It’s about giving teams self-service access to operational tasks.

Common use cases: Runbook automation, infrastructure operations, incident response workflows

Apache NiFi

NiFi is a visual data flow tool. You drag and drop processors to build pipelines.

What it does well:

- Visual flow design

- Real-time data routing and transformation

- Built-in processors for many systems

- Good for complex data routing

Where it struggles:

- Can become unwieldy for complex logic

- Version control is harder with visual tools

- Steep learning curve for the UI

NiFi fits use cases where data routing and transformation logic is complex. The visual interface helps with understanding data flows.

Common use cases: Real-time data ingestion, IoT data processing, data routing and enrichment, log aggregation

Keboola

Keboola is a managed data platform with built-in orchestration.

What it does well:

- Fully managed platform

- No infrastructure to maintain

- Good for business users

- Quick setup and deployment

Where it struggles:

- Less flexibility than code-based tools

- Vendor lock-in concerns

- Can get expensive at scale

Keboola works for teams that want a complete platform rather than assembling tools. It’s faster to start but less flexible.

Common use cases: Small to medium business analytics, marketing data pipelines, rapid prototyping

Taverna

Taverna is a scientific workflow management system. It’s used in research and academia.

What it does well:

- Designed for scientific workflows

- Good provenance tracking

- Integration with scientific databases

Where it struggles:

- Less active development

- Limited use outside academia

- Dated interface

Taverna is mainly seen in academic and research settings now. Newer tools have overtaken it in most domains.

Common use cases: Scientific data analysis, research workflows, academic computing

How to Choose the Right Tool

The best orchestration tool depends on your specific needs. Here’s how to think through the decision.

Start with your execution environment. Are you on AWS, GCP, Azure? Running Kubernetes? Prefer serverless? This eliminates some options immediately.

Consider your team’s skills. If your team knows Python well, Airflow, Prefect, or Dagster make sense. If you’re Kubernetes-native, look at Argo or Flyte. If SQL is your strength, dbt is essential.

Think about scale. Running 10 workflows? Almost anything works. Running 1,000 workflows across multiple teams? You need something battle-tested like Airflow or Temporal.

Evaluate operational overhead. Managed services like Step Functions or Prefect Cloud reduce operations work. Self-hosted options give more control but require maintenance.

Check ecosystem integration. Which systems do you need to connect? Make sure your tool has good connectors or operators.

Consider workflow complexity. Simple scheduled jobs? Cron might suffice. Complex dependencies and dynamic workflows? You need a full orchestration platform.

Look at observability needs. How important is monitoring, alerting, and debugging? Some tools have better observability than others.

Common Patterns and Best Practices

Combine tools strategically. Many teams use multiple orchestrators. A common split is dbt for transformations, Airflow for overall orchestration, and Temporal for critical business workflows.

Start simple. Don’t over-engineer early. Begin with a straightforward tool and migrate later if needed.

Treat workflows as code. Version control everything. Use CI/CD for workflow deployment. Test workflows before production.

Design for failure. Workflows will fail. Build in retries, alerting, and recovery mechanisms.
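Most orchestrators ship retry settings, so you rarely write this yourself. Still, it helps to see the mechanics. Here is a small sketch of retries with exponential backoff and a final alert. The `run_with_retries` helper and its parameters are invented for this example.

```python
import time

def run_with_retries(task, max_attempts=3, base_delay=1.0, sleep=time.sleep, on_failure=print):
    """Run task(); retry with exponential backoff, then alert and re-raise."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as err:
            if attempt == max_attempts:
                on_failure(f"giving up after {attempt} attempts: {err}")
                raise
            sleep(base_delay * 2 ** (attempt - 1))  # 1s, 2s, 4s, ...

# A flaky task that fails twice before succeeding (for illustration only).
calls = {"count": 0}

def flaky_download():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network error")
    return "payload"

# Record the delays instead of actually sleeping, so the example runs instantly.
delays = []
result = run_with_retries(flaky_download, sleep=delays.append)
print(result, delays)
```

Passing `sleep` and `on_failure` as arguments keeps the helper testable. In production, `on_failure` would page someone or post to a channel.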

Monitor and measure. Track execution time, failure rates, and resource usage. Set up alerts for critical workflows.

Document dependencies. Make it clear what depends on what. Future you will thank present you.

Keep workflows focused. One workflow should do one logical thing. Avoid mega-workflows that do everything.

Use idempotent tasks. Tasks should produce the same result when run multiple times. This makes retries and backfills safer.
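One common way to get idempotency is to write each run’s output to a location keyed by the run date and overwrite it, rather than appending. The sketch below assumes a hypothetical `write_daily_totals` task and a local directory standing in for real storage.

```python
import json
import tempfile
from pathlib import Path

def write_daily_totals(rows, run_date, out_dir):
    """Write one file per run date, replacing any earlier output for that date."""
    totals = {}
    for row in rows:
        if row["date"] == run_date:
            totals[row["customer"]] = totals.get(row["customer"], 0) + row["amount"]
    target = Path(out_dir) / f"totals_{run_date}.json"
    tmp = target.with_suffix(".tmp")
    tmp.write_text(json.dumps(totals, sort_keys=True))
    tmp.replace(target)  # overwrite in one step instead of appending
    return target

rows = [
    {"date": "2026-01-01", "customer": "a", "amount": 5},
    {"date": "2026-01-01", "customer": "a", "amount": 7},
    {"date": "2026-01-02", "customer": "b", "amount": 3},
]

with tempfile.TemporaryDirectory() as out_dir:
    first = write_daily_totals(rows, "2026-01-01", out_dir).read_text()
    second = write_daily_totals(rows, "2026-01-01", out_dir).read_text()  # simulated retry
    files = sorted(p.name for p in Path(out_dir).iterdir())

print(first == second, files)
```

Running the task twice leaves exactly one file with the same content. An append-based version would have doubled the totals on retry.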

The Future of Workflow Orchestration

The field keeps evolving. Several trends are shaping what comes next.

Data-aware orchestration is becoming standard. Tools like Dagster that treat data as assets are influencing others. Expect more focus on data quality, lineage, and validation.

Event-driven workflows are growing. Instead of time-based scheduling, workflows trigger from events. Changes in data, API calls, or system events start workflows.

ML-specific orchestration is splitting from general data orchestration. Tools like Kubeflow and Flyte focus on ML workflow needs.

Serverless and managed options continue to grow. Teams want less infrastructure to manage. Cloud providers and startups are filling this space.

Standardization efforts are happening. Projects like OpenLineage aim to create common standards for data lineage across tools.

Better developer experience is a competitive differentiator. Tools compete on how easy they are to use, not just features.

Key Takeaways

Workflow orchestration is essential for modern data teams. The tool you choose shapes how you build and maintain data pipelines.

Airflow remains the most common choice for good reason. It’s mature, flexible, and well-supported. But it’s not always the best fit.

Prefect and Dagster offer better developer experiences with modern architectures. They’re worth considering for new projects.

Specialized tools like dbt and Temporal excel in their domains. Most teams end up using multiple orchestration tools.

Cloud-native options like Step Functions and Argo work well if you’re committed to specific platforms.

The right choice depends on your team, infrastructure, and requirements. Start with what solves your immediate problems. You can always migrate later.
