SQLAlchemy

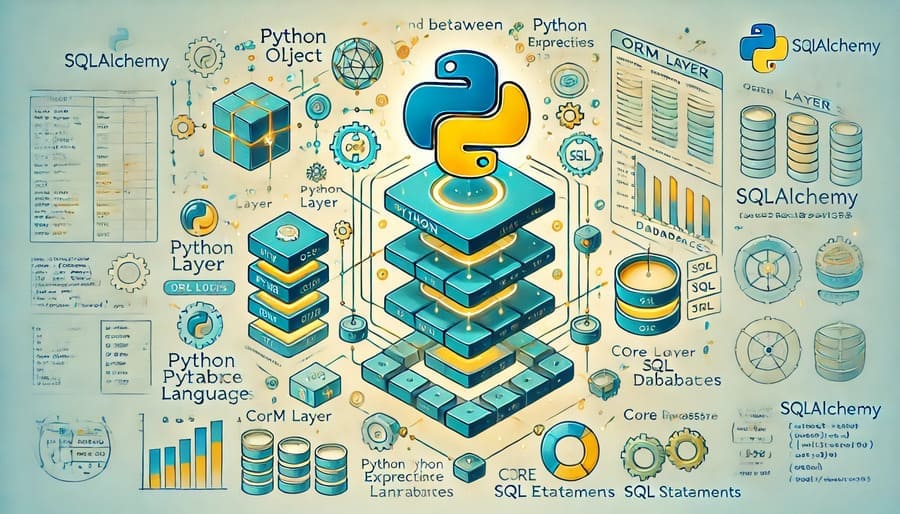

In the world of data engineering, bridging the gap between relational databases and object-oriented programming has long been a challenge. Enter SQLAlchemy—a powerful Python SQL toolkit and Object-Relational Mapping (ORM) library that has revolutionized how developers interact with databases. This comprehensive guide explores why SQLAlchemy has become an essential tool in the modern data engineer’s arsenal.

SQLAlchemy stands apart from other database libraries by providing not just a simple ORM layer, but a complete suite of tools for database interaction. Created by Mike Bayer in 2005, SQLAlchemy has evolved into the most sophisticated database toolkit available for Python, offering unprecedented flexibility and control.

# A simple SQLAlchemy example

from sqlalchemy import create_engine, Column, Integer, String

from sqlalchemy.ext.declarative import declarative_base

from sqlalchemy.orm import sessionmaker

# Create a connection to the database

engine = create_engine('postgresql://username:password@localhost/mydatabase')

# Create a base class for our models

Base = declarative_base()

# Define a model

class User(Base):

__tablename__ = 'users'

id = Column(Integer, primary_key=True)

name = Column(String)

email = Column(String, unique=True)

def __repr__(self):

return f"<User(name='{self.name}', email='{self.email}')>"

# Create the table in the database

Base.metadata.create_all(engine)

# Create a session to interact with the database

Session = sessionmaker(bind=engine)

session = Session()

# Add a new user

new_user = User(name='John Doe', email='john@example.com')

session.add(new_user)

session.commit()

# Query users

users = session.query(User).filter(User.name.like('%John%')).all()

for user in users:

print(user)

One of SQLAlchemy’s greatest strengths is its layered architecture that provides options for different levels of abstraction.

At its foundation, SQLAlchemy Core provides a SQL Expression Language that enables Python developers to construct SQL statements programmatically with full control over the generated SQL:

from sqlalchemy import Table, Column, Integer, String, MetaData, select

metadata = MetaData()

# Define a table

users = Table('users', metadata,

Column('id', Integer, primary_key=True),

Column('name', String),

Column('email', String)

)

# Create a query

query = select([users]).where(users.c.name.like('%John%'))

# Execute the query

with engine.connect() as conn:

result = conn.execute(query)

for row in result:

print(row)

This approach gives you SQL’s power with Python’s expressiveness—without sacrificing control over the SQL being generated.

Building on Core, SQLAlchemy’s ORM layer allows you to interact with your database using high-level Python objects. This approach lets you:

- Map database tables to Python classes

- Convert table rows to class instances

- Translate instance attributes to column values

- Express relationships between tables as object associations

# Define related models with the ORM

from sqlalchemy import ForeignKey

from sqlalchemy.orm import relationship

class Post(Base):

__tablename__ = 'posts'

id = Column(Integer, primary_key=True)

title = Column(String)

content = Column(String)

user_id = Column(Integer, ForeignKey('users.id'))

# Define relationship to User

author = relationship("User", back_populates="posts")

def __repr__(self):

return f"<Post(title='{self.title}')>"

# Add relationship to User model

User.posts = relationship("Post", back_populates="author")

# Query with relationships

user_with_posts = session.query(User).filter_by(name='John Doe').first()

for post in user_with_posts.posts:

print(f"Post by {post.author.name}: {post.title}")

SQLAlchemy supports nearly all major relational databases, allowing you to write code that works across:

- PostgreSQL

- MySQL

- SQLite

- Oracle

- Microsoft SQL Server

- And many others

This means you can develop against SQLite locally and deploy to PostgreSQL in production with minimal changes.

SQLAlchemy provides sophisticated connection pooling out of the box, optimizing database connections:

engine = create_engine(

'postgresql://user:pass@hostname/dbname',

pool_size=5,

max_overflow=10,

pool_timeout=30,

pool_recycle=3600

)

This ensures efficient use of database resources, automatically handling connection lifecycle management.

Transaction handling in SQLAlchemy gives you precise control over when changes are committed:

# Explicit transaction with error handling

try:

# Start a transaction

with session.begin():

user = User(name='Alice', email='alice@example.com')

post = Post(title='First Post', content='Hello World')

user.posts.append(post)

session.add(user)

# Transaction automatically committed if no exceptions

except:

# Transaction automatically rolled back on exception

print("Transaction failed")

When paired with Alembic (created by the same author), SQLAlchemy provides powerful schema migration capabilities:

# Alembic migration example

"""Create users table

Revision ID: 1a2b3c4d5e6f

Revises:

Create Date: 2023-01-15 10:30:00.000000

"""

from alembic import op

import sqlalchemy as sa

def upgrade():

op.create_table('users',

sa.Column('id', sa.Integer(), nullable=False),

sa.Column('name', sa.String(), nullable=True),

sa.Column('email', sa.String(), nullable=True),

sa.PrimaryKeyConstraint('id'),

sa.UniqueConstraint('email')

)

def downgrade():

op.drop_table('users')

SQLAlchemy excels at handling complex queries that would be challenging to write and maintain in raw SQL:

# Complex query example with joins, filtering, and ordering

recent_posts_with_authors = session.query(

Post, User.name.label('author_name')

).join(

User, Post.user_id == User.id

).filter(

Post.created_date > last_week_date

).order_by(

Post.created_date.desc()

).limit(10).all()

When working with legacy databases, SQLAlchemy can inspect and reflect the schema:

# Reflect an existing database

from sqlalchemy import MetaData, Table

metadata = MetaData()

# Automatically load table definitions from the database

metadata.reflect(bind=engine)

# Access the reflected tables

users = metadata.tables['users']

posts = metadata.tables['posts']

# Query using reflected tables

with engine.connect() as conn:

select_stmt = users.select().where(users.c.id == 1)

result = conn.execute(select_stmt)

user = result.fetchone()

print(user)

SQLAlchemy shines in Extract, Transform, Load (ETL) processes, allowing engineers to:

- Extract data from various database sources

- Transform data using Python’s rich ecosystem

- Load data into target databases with optimized bulk operations

# Bulk insert example for efficient data loading

from sqlalchemy.dialects.postgresql import insert

def bulk_upsert(engine, table, data, constraint_columns):

insert_stmt = insert(table).values(data)

# Define ON CONFLICT update action

update_columns = {c.name: c for c in insert_stmt.excluded if c.name not in constraint_columns}

# Build the ON CONFLICT DO UPDATE statement

upsert_stmt = insert_stmt.on_conflict_do_update(

index_elements=constraint_columns,

set_=update_columns

)

# Execute the statement

with engine.begin() as conn:

conn.execute(upsert_stmt)

When working with data warehouses like Redshift or Snowflake, SQLAlchemy provides adapters that help optimize for these platforms:

# Redshift-specific dialect example

from sqlalchemy.dialects import redshift

# Create Redshift engine

redshift_engine = create_engine('redshift+psycopg2://user:pass@hostname:port/dbname')

# Define table with Redshift-specific options

users_dimension = Table('users_dim', metadata,

Column('user_id', Integer, primary_key=True),

Column('user_name', String(50)),

Column('email', String(100)),

redshift_diststyle='KEY',

redshift_distkey='user_id',

redshift_sortkey=['user_name']

)

In microservice architectures, SQLAlchemy provides a clean data access layer:

# User service example

class UserRepository:

def __init__(self, session_factory):

self.session_factory = session_factory

def get_by_id(self, user_id):

with self.session_factory() as session:

return session.query(User).filter(User.id == user_id).first()

def create(self, user_data):

with self.session_factory() as session:

user = User(**user_data)

session.add(user)

session.commit()

return user

def update(self, user_id, update_data):

with self.session_factory() as session:

result = session.query(User).filter(User.id == user_id).update(update_data)

session.commit()

return result > 0

SQLAlchemy provides tools to understand and optimize your queries:

# Enable SQL query logging

import logging

logging.basicConfig()

logging.getLogger('sqlalchemy.engine').setLevel(logging.INFO)

# Use explain to analyze query execution

from sqlalchemy import text

with engine.connect() as conn:

explain_stmt = text(f"EXPLAIN ANALYZE {str(query.compile(dialect=engine.dialect))}")

result = conn.execute(explain_stmt)

for row in result:

print(row[0])

Control when relationship data is loaded:

# Lazy loading (default)

user = session.query(User).filter_by(id=1).first()

# This triggers a separate query when accessed

posts = user.posts

# Eager loading with joinedload

from sqlalchemy.orm import joinedload

user = session.query(User).options(joinedload(User.posts)).filter_by(id=1).first()

# No additional query needed, posts are already loaded

posts = user.posts

Optimize for inserting or updating many records:

# Bulk insert

users_to_add = [

User(name=f'User {i}', email=f'user{i}@example.com')

for i in range(1000)

]

session.bulk_save_objects(users_to_add)

session.commit()

# Bulk update

from sqlalchemy import update

stmt = update(User).where(User.active == True).values(last_login=datetime.now())

session.execute(stmt)

session.commit()

SQLAlchemy works seamlessly with pandas, enabling fluid movement between dataframes and databases:

import pandas as pd

# Read SQL query directly into pandas

df = pd.read_sql(

"SELECT * FROM users WHERE signup_date > '2023-01-01'",

engine

)

# Use SQLAlchemy expressions with pandas

query = select([users]).where(users.c.signup_date > '2023-01-01')

df = pd.read_sql(query, engine)

# Write pandas dataframe to database

df.to_sql('user_metrics', engine, if_exists='replace')

For modern async applications, SQLAlchemy 2.0 introduces first-class async support:

# Async SQLAlchemy example (2.0+)

from sqlalchemy.ext.asyncio import create_async_engine, AsyncSession

# Create async engine

async_engine = create_async_engine('postgresql+asyncpg://user:pass@hostname/dbname')

# Async session usage

async with AsyncSession(async_engine) as session:

result = await session.execute(select(User).filter_by(name='John'))

user = result.scalars().first()

user.email = 'new_email@example.com'

await session.commit()

A powerful combination for building modern APIs:

from fastapi import FastAPI, Depends, HTTPException

from sqlalchemy.orm import Session

app = FastAPI()

# Dependency to get DB session

def get_db():

db = SessionLocal()

try:

yield db

finally:

db.close()

@app.get("/users/{user_id}")

def read_user(user_id: int, db: Session = Depends(get_db)):

user = db.query(User).filter(User.id == user_id).first()

if user is None:

raise HTTPException(status_code=404, detail="User not found")

return user

@app.post("/users/")

def create_user(user: UserCreate, db: Session = Depends(get_db)):

db_user = User(name=user.name, email=user.email)

db.add(db_user)

db.commit()

db.refresh(db_user)

return db_user

Properly manage session lifecycle to prevent connection leaks:

# Context manager for session handling

from contextlib import contextmanager

@contextmanager

def session_scope():

"""Provide a transactional scope around a series of operations."""

session = Session()

try:

yield session

session.commit()

except:

session.rollback()

raise

finally:

session.close()

# Usage

with session_scope() as session:

user = session.query(User).filter_by(id=1).first()

user.name = "Updated Name"

Fine-tune your connection pool based on workload:

engine = create_engine(

'postgresql://user:pass@hostname/dbname',

# Maintain a pool of 10 connections

pool_size=10,

# Allow up to 20 connections at peak

max_overflow=20,

# Recycle connections older than 1 hour

pool_recycle=3600,

# Wait up to 30 seconds for an available connection

pool_timeout=30,

# Log connection pool events

echo_pool=True

)

Keep your models organized and maintainable:

# models/__init__.py

from sqlalchemy.ext.declarative import declarative_base

Base = declarative_base()

# models/user.py

from sqlalchemy import Column, Integer, String

from . import Base

class User(Base):

__tablename__ = 'users'

id = Column(Integer, primary_key=True)

name = Column(String)

email = Column(String)

# models/post.py

from sqlalchemy import Column, Integer, String, ForeignKey

from sqlalchemy.orm import relationship

from . import Base

class Post(Base):

__tablename__ = 'posts'

id = Column(Integer, primary_key=True)

title = Column(String)

user_id = Column(Integer, ForeignKey('users.id'))

author = relationship("User", back_populates="posts")

For modern Python applications, add type hints:

from sqlalchemy.orm import Session

from typing import List, Optional

from . import models

class UserRepository:

def __init__(self, session: Session) -> None:

self.session = session

def get_by_id(self, user_id: int) -> Optional[models.User]:

return self.session.query(models.User).filter(models.User.id == user_id).first()

def get_all(self) -> List[models.User]:

return self.session.query(models.User).all()

Implement robust testing with in-memory databases:

import pytest

from sqlalchemy import create_engine

from sqlalchemy.orm import sessionmaker

@pytest.fixture

def test_db():

# Create in-memory SQLite database for testing

engine = create_engine('sqlite:///:memory:')

Base.metadata.create_all(engine)

# Create test session

TestSession = sessionmaker(bind=engine)

session = TestSession()

# Provide session to test

yield session

# Clean up

session.close()

def test_create_user(test_db):

user = User(name='Test User', email='test@example.com')

test_db.add(user)

test_db.commit()

fetched_user = test_db.query(User).filter_by(email='test@example.com').first()

assert fetched_user is not None

assert fetched_user.name == 'Test User'

SQLAlchemy continues to evolve with the release of version 2.0, introducing:

- First-class async support

- Unified query API between ORM and Core

- Improved typing support

- Enhanced performance optimizations

Its importance in the Python ecosystem cannot be overstated. For data engineers, SQLAlchemy provides the perfect balance of abstraction and control—allowing you to focus on solving data problems rather than wrestling with database interfaces.

Whether you’re building ETL pipelines, microservices, data APIs, or analytic applications, SQLAlchemy offers the tools you need to interact with relational databases effectively and efficiently. By adopting SQLAlchemy in your data engineering workflows, you’ll gain productivity, maintainability, and flexibility that raw SQL simply cannot match.

#SQLAlchemy #DataEngineering #ORM #PythonDatabase #SQL #DatabaseMigration #Alembic #DataPipelines #ETL #RelationalDatabases #PostgreSQL #MySQL #DataModeling #DatabaseAbstraction #PythonORM #SQLToolkit #DataAccessLayer #PythonDevelopment #DatabaseProgramming #DataArchitecture