Prometheus

In the complex world of distributed systems and microservices, visibility into your infrastructure is not just valuable; it is essential. Enter Prometheus, the open-source monitoring system and time series database that has changed how engineering teams monitor their systems. From its humble beginnings at SoundCloud to becoming a cornerstone of the Cloud Native Computing Foundation (CNCF), Prometheus has established itself as the de facto standard for metrics monitoring in cloud-native environments.

Prometheus was born in 2012 at SoundCloud when the music streaming platform needed a monitoring solution for their microservices architecture. Unsatisfied with existing solutions, a small team developed what would become one of the most widely adopted monitoring systems in the industry.

By 2016, Prometheus had gained such significant traction that it became the second project (after Kubernetes) to be adopted by the Cloud Native Computing Foundation. This endorsement cemented its position as a key component in the cloud-native stack and accelerated its adoption across the industry.

Unlike many traditional monitoring systems that rely on agents pushing metrics to a central server, Prometheus follows a pull-based approach. The Prometheus server scrapes metrics from instrumented targets at regular intervals, which has several advantages:

- Simplified deployment: Services don’t need to know about the monitoring infrastructure

- Better failure detection: A failed scrape is visible immediately, so it is easy to tell when a target is down or unreachable

- Centralized configuration: Control scraping logic from the Prometheus server

- Enhanced testability: Easier to validate that metrics are being exposed correctly
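The pull model described above can be sketched in a few lines. This is a minimal illustration, not how the Prometheus server is implemented: `scrape`, `parse_samples`, and the two fake targets are hypothetical names, and the parser assumes simple `name{labels} value` lines without timestamps. The one real detail it mirrors is the `up` series, which Prometheus sets to 1 when a scrape succeeds and 0 when it fails.

```python
from typing import Callable, Dict


def parse_samples(text: str) -> Dict[str, float]:
    """Parse 'name{labels} value' lines, skipping comments and blank lines."""
    samples: Dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # The value is the last space-separated field (no timestamps assumed).
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


def scrape(fetch: Callable[[], str]) -> Dict[str, float]:
    """Pull metrics from one target; record up=1 on success, up=0 on failure."""
    try:
        samples = parse_samples(fetch())
        samples["up"] = 1.0
    except Exception:
        samples = {"up": 0.0}
    return samples


def healthy_target() -> str:
    # Stand-in for an HTTP GET against a target's /metrics endpoint.
    return 'http_requests_total{method="POST"} 42\n'


def broken_target() -> str:
    raise ConnectionError("target unreachable")


print(scrape(healthy_target))
print(scrape(broken_target))
```

Because the server initiates every scrape, the target needs no knowledge of the monitoring system, and a missing response is itself a signal.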

At its core, Prometheus utilizes a multi-dimensional data model where each time series is identified by a metric name and a set of key-value pairs called labels:

    http_requests_total{method="POST", endpoint="/api/users", status="200"}

This dimensional approach enables powerful querying capabilities and makes it particularly well-suited for dynamic, container-based environments where traditional hierarchical naming would be cumbersome.
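To make the data model concrete, here is a small sketch of how a metric name plus a label set identifies one time series. The in-memory `store`, `record`, and `select` helpers are hypothetical and only illustrate the idea; Prometheus's actual storage engine works very differently.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List, Tuple

SeriesKey = Tuple[str, FrozenSet[Tuple[str, str]]]

# Each distinct (name, label set) pair is its own time series.
store: Dict[SeriesKey, List[float]] = defaultdict(list)


def series_key(name: str, labels: Dict[str, str]) -> SeriesKey:
    """Label order does not matter, so the labels are stored as a frozenset."""
    return (name, frozenset(labels.items()))


def record(name: str, labels: Dict[str, str], value: float) -> None:
    store[series_key(name, labels)].append(value)


def select(name: str, **matchers: str) -> Dict[SeriesKey, List[float]]:
    """Return every series of `name` whose labels include all matchers."""
    wanted = set(matchers.items())
    return {k: v for k, v in store.items() if k[0] == name and wanted <= k[1]}


record("http_requests_total", {"method": "POST", "endpoint": "/api/users", "status": "200"}, 1)
# Same labels in a different order: this lands in the same series.
record("http_requests_total", {"status": "200", "endpoint": "/api/users", "method": "POST"}, 2)
# A different status value creates a second series.
record("http_requests_total", {"method": "POST", "endpoint": "/api/users", "status": "500"}, 1)

print(len(store))
print(len(select("http_requests_total", status="200")))
```

Selecting by any subset of labels, as `select` does, is the property that makes dimensional queries possible without encoding every dimension into the metric name.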

PromQL, Prometheus’s native query language, is perhaps its most distinctive feature. This functional query language allows users to:

- Select and aggregate data in real time

- Perform complex transformations on metrics

- Create alert conditions with sophisticated logic

- Drive dynamic dashboards with rich visualizations (for example, in Grafana)

Example of a PromQL query calculating the 95th percentile latency of HTTP requests by endpoint:

    histogram_quantile(0.95, sum(rate(http_request_duration_seconds_bucket[5m])) by (le, endpoint))
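It can help to see roughly what `histogram_quantile` does with the bucket values it receives. The sketch below is a simplified reimplementation in Python, assuming cumulative buckets that end with a `+Inf` bucket, at least one finite bucket, and a quantile in the range (0, 1]. It interpolates linearly inside the bucket that contains the target rank. The bucket values in the example are made up.

```python
import math
from typing import List, Tuple


def histogram_quantile(q: float, buckets: List[Tuple[float, float]]) -> float:
    """Estimate the q-quantile from cumulative (upper_bound, count) buckets."""
    buckets = sorted(buckets)
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    # Find the first bucket whose cumulative count reaches the rank.
    index = next(i for i, (_, count) in enumerate(buckets) if count >= rank)
    upper, count = buckets[index]
    if math.isinf(upper):
        # The quantile lies beyond the last finite bound; report that bound.
        return buckets[-2][0]
    lower = buckets[index - 1][0] if index > 0 else 0.0
    prev_count = buckets[index - 1][1] if index > 0 else 0.0
    # Linear interpolation within the bucket.
    return lower + (upper - lower) * (rank - prev_count) / (count - prev_count)


# Hypothetical per-endpoint buckets: (le, cumulative count or rate)
example = [(0.1, 50.0), (0.5, 90.0), (1.0, 100.0), (math.inf, 100.0)]
print(histogram_quantile(0.95, example))
```

The result is an estimate whose accuracy depends on the bucket boundaries, which is why bucket layout matters when instrumenting latency.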

Modern infrastructure is dynamic. Containers come and go, services scale up and down, and cloud instances are ephemeral. Prometheus embraces this reality with built-in service discovery mechanisms, including:

- File-based service discovery

- DNS-based service discovery

- Kubernetes service discovery

- Cloud provider integrations (AWS, GCP, Azure, etc.)

This allows Prometheus to automatically detect and monitor new services as they appear, making it ideal for elastic environments.
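As one example, file-based service discovery reads target lists from JSON or YAML files and picks up changes to them. The sketch below writes such a file from Python; the function name, file name, target addresses, and labels are assumptions for illustration. The file is written to a temporary path and then renamed so that a reader never sees a half-written file.

```python
import json
import os
import tempfile
from typing import Dict, List


def write_file_sd(path: str, targets: List[str], labels: Dict[str, str]) -> None:
    """Write one target group in the file_sd JSON layout, atomically."""
    groups = [{"targets": sorted(targets), "labels": labels}]
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(groups, handle, indent=2)
    # Rename over the destination so the update appears all at once.
    os.replace(tmp_path, path)


if __name__ == "__main__":
    out_dir = tempfile.mkdtemp()
    out_file = os.path.join(out_dir, "processors.json")
    write_file_sd(out_file, ["processor1:8000", "processor2:8000"],
                  {"environment": "production"})
    with open(out_file) as handle:
        print(handle.read())
```

A scrape job would then reference the file through `file_sd_configs` with a matching `files` pattern.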

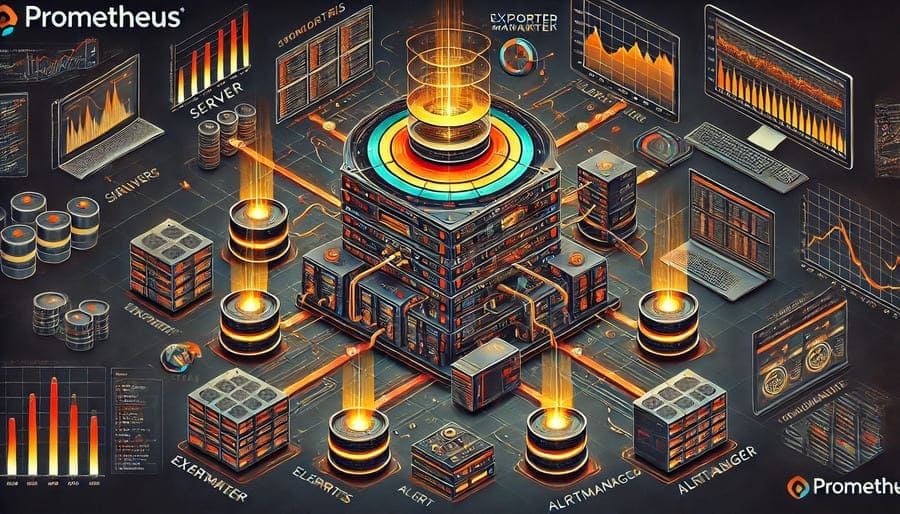

The Prometheus server is the heart of the system, responsible for:

- Scraping metrics from instrumented targets

- Storing metrics data efficiently

- Evaluating rule expressions for alerting and recording

- Providing a query API for dashboards and alerts

Exporters are specialized programs that expose metrics from systems that don’t natively support Prometheus’s metrics format. Popular exporters include:

- Node Exporter: System metrics (CPU, memory, disk, network)

- MySQL Exporter: Database performance metrics

- Blackbox Exporter: Probing endpoints for availability and response time

- NGINX Exporter: Web server performance metrics

- Redis Exporter: Cache performance metrics

The Alertmanager handles alerts sent by Prometheus, taking care of:

- Deduplication to avoid alert storms

- Grouping related alerts together

- Routing to the appropriate notification channel (email, Slack, PagerDuty)

- Silencing during maintenance periods

- Inhibition to suppress less important alerts when critical ones are firing
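The following toy model illustrates deduplication, grouping, and inhibition on plain dictionaries of labels. It is a conceptual sketch only, not Alertmanager's implementation; the function names and sample alerts are hypothetical, and the `group_by` and `equal` parameters are loosely modeled on the configuration fields of the same names.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Alert = Dict[str, str]


def inhibit(alerts: List[Alert], equal: List[str]) -> List[Alert]:
    """Drop non-critical alerts that share the `equal` labels with a critical one."""
    critical = {
        tuple(a.get(label) for label in equal)
        for a in alerts
        if a.get("severity") == "critical"
    }
    return [
        a for a in alerts
        if a.get("severity") == "critical"
        or tuple(a.get(label) for label in equal) not in critical
    ]


def group_alerts(alerts: List[Alert], group_by: List[str]) -> Dict[Tuple[str, ...], List[Alert]]:
    """Deduplicate identical label sets, then bucket alerts by the group_by labels."""
    seen = set()
    groups: Dict[Tuple[str, ...], List[Alert]] = defaultdict(list)
    for alert in alerts:
        fingerprint = frozenset(alert.items())
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        key = tuple(alert.get(label, "") for label in group_by)
        groups[key].append(alert)
    return dict(groups)


sample_alerts = [
    {"alertname": "DataPipelineLag", "pipeline": "user-activity", "severity": "critical"},
    {"alertname": "DataPipelineLag", "pipeline": "user-activity", "severity": "critical"},
    {"alertname": "DataPipelineSlow", "pipeline": "user-activity", "severity": "warning"},
    {"alertname": "DataPipelineSlow", "pipeline": "billing", "severity": "warning"},
]

for key, members in group_alerts(inhibit(sample_alerts, ["pipeline"]), ["pipeline"]).items():
    print(key, [a["alertname"] for a in members])
```

In this run the duplicate critical alert collapses into one notification, and the warning for the same pipeline is suppressed while the critical alert is active.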

While Prometheus primarily uses a pull model, the Pushgateway lets short-lived jobs push their metrics to an intermediary that Prometheus then scrapes. Typical use cases include:

- Batch jobs that don’t live long enough to be scraped

- Edge case scenarios where the pull model isn’t suitable

- Legacy systems that can only push metrics
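A batch job can push to the Pushgateway with a plain HTTP request whose body uses the text exposition format. The sketch below only builds the request, using the standard library. The gateway address, job name, and metric are hypothetical, and the job name is assumed to contain no slashes.

```python
import urllib.request
from typing import Dict
from urllib.parse import quote


def build_push_request(gateway: str, job: str, metrics: Dict[str, float]) -> urllib.request.Request:
    """Build a PUT request that replaces all metrics pushed for `job`."""
    # One 'name value' line per metric; the body must end with a newline.
    body = "".join(f"{name} {value}\n" for name, value in metrics.items())
    url = f"{gateway.rstrip('/')}/metrics/job/{quote(job, safe='')}"
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )


request = build_push_request("http://pushgateway:9091", "nightly-etl",
                             {"etl_rows_processed": 1200})
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"), end="")
```

Passing the request to `urllib.request.urlopen` at the end of the job would send it. Using `POST` instead of `PUT` replaces only metrics with matching names rather than the whole group.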

Before diving into implementation, define what aspects of your data infrastructure need monitoring:

- Infrastructure metrics: CPU, memory, disk, network

- Application metrics: Request rates, error rates, latencies

- Business metrics: Data processing rates, pipeline throughput

- Custom metrics: Domain-specific indicators

For Prometheus to collect metrics, your applications need to expose them. This process, called instrumentation, can be done in several ways:

Direct instrumentation using client libraries available for:

- Go, Java, Python, Ruby, Rust

- Node.js, PHP, C++, and more

Example in Python using the official client:

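    import time

    from prometheus_client import Counter, start_http_server

    # Create a counter metric with two labels
    data_processing_events = Counter(
        'data_processing_events_total',
        'Total number of processed data events',
        ['pipeline', 'event_type'],
    )

    if __name__ == '__main__':
        # Start the metrics endpoint on port 8000
        start_http_server(8000)

        # Increment the counter (a real application does this in its processing code)
        data_processing_events.labels(pipeline='user-activity', event_type='click').inc()

        # Keep the process alive so Prometheus can scrape the endpoint
        while True:
            time.sleep(1)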
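The endpoint started by the client library serves plain text in the Prometheus exposition format. The standard-library sketch below shows roughly what that text looks like for a counter. `render_counter` is a hypothetical helper, not part of `prometheus_client`, and it skips details such as escaping special characters in label values.

```python
from typing import Dict, Tuple

LabelSet = Tuple[Tuple[str, str], ...]


def render_counter(name: str, help_text: str, samples: Dict[LabelSet, float]) -> str:
    """Render a counter as HELP and TYPE comments plus one line per label set."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        label_str = ",".join(f'{key}="{val}"' for key, val in labels)
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


exposition = render_counter(
    "data_processing_events_total",
    "Total number of processed data events",
    {(("pipeline", "user-activity"), ("event_type", "click")): 1.0},
)
print(exposition, end="")
```

Viewing the real endpoint in a browser or with `curl` is a quick way to confirm that an application is exposing the metrics you expect.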

For existing applications, consider:

- Using appropriate exporters

- Implementing a sidecar pattern for non-instrumented services

- Leveraging service mesh telemetry (Istio, Linkerd)

Deploy Prometheus using one of these methods:

- Docker containers: Quick and easy for testing

- Kubernetes with Prometheus Operator: Best for production environments

- Binary installation: For traditional VM-based deployments

A sample Prometheus configuration file (prometheus.yml):

    global:
      scrape_interval: 15s
      evaluation_interval: 15s

    scrape_configs:
      - job_name: 'data-pipeline'
        static_configs:
          - targets: ['processor1:8000', 'processor2:8000']
            labels:
              environment: 'production'

      - job_name: 'kafka'
        static_configs:
          - targets: ['kafka-exporter:9308']

      - job_name: 'node'
        kubernetes_sd_configs:
          - role: node

Define alert rules to be notified of critical issues:

    groups:
      - name: data-pipeline-alerts
        rules:
          - alert: DataPipelineLag
            expr: pipeline_lag_seconds > 300
            for: 5m
            labels:
              severity: critical
            annotations:
              summary: "Data pipeline falling behind"
              description: "Pipeline {{ $labels.pipeline }} is lagging by {{ $value }} seconds"
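The `for: 5m` clause means the expression must stay true across consecutive evaluations for five minutes before the alert fires; until then it is pending. A simplified simulation of that state machine follows. The function and the sample values are hypothetical, and real rule evaluation has more detail than this.

```python
from typing import List, Optional, Tuple


def alert_states(samples: List[Tuple[float, float]], threshold: float,
                 for_seconds: float) -> List[str]:
    """Return 'inactive', 'pending', or 'firing' for each (timestamp, value)."""
    states: List[str] = []
    active_since: Optional[float] = None
    for timestamp, value in samples:
        if value > threshold:
            if active_since is None:
                active_since = timestamp
            held_long_enough = timestamp - active_since >= for_seconds
            states.append("firing" if held_long_enough else "pending")
        else:
            # Any evaluation below the threshold resets the timer.
            active_since = None
            states.append("inactive")
    return states


# pipeline_lag_seconds evaluated once per minute (made-up values)
lag_samples = [(0, 100), (60, 400), (120, 400), (180, 400),
               (240, 400), (300, 400), (360, 400), (420, 100)]
print(alert_states(lag_samples, threshold=300, for_seconds=300))
```

The pending phase filters out short spikes, at the cost of delaying notification by the length of the `for` window.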

While Prometheus has a basic UI, Grafana is the standard choice for building comprehensive dashboards:

- Connect Grafana to Prometheus as a data source

- Create dashboards for different aspects of your data stack

- Set up dashboard templates for consistency

- Share dashboards with your team

A few practices help keep metrics useful and Prometheus efficient:

- Follow the Four Golden Signals: Latency, traffic, errors, and saturation

- Use meaningful metric names: Follow the namespace_subsystem_name convention

- Apply labels judiciously: Labels create new time series, which consume resources

- Prefer histograms over summaries: Histogram buckets can be aggregated across instances, while summary quantiles cannot

- Implement federation: For large-scale deployments

- Use recording rules: Pre-compute expensive queries

- Optimize retention policies: Balance data resolution and storage needs

- Consider remote storage: For long-term metric storage
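The advice about labels is easier to weigh with a rough calculation: the worst-case number of time series for a metric is the product of the number of distinct values of each label. The label names and counts below are made up for illustration.

```python
from math import prod
from typing import Dict


def max_series(label_value_counts: Dict[str, int]) -> int:
    """Upper bound on series for one metric: product of per-label value counts."""
    return prod(label_value_counts.values())


bounded = {"method": 2, "status": 3, "endpoint": 20}
print(max_series(bounded))

# Adding an unbounded label such as a user ID multiplies the total.
with_user_id = dict(bounded, user_id=10_000)
print(max_series(with_user_id))
```

This is an upper bound, since not every combination occurs in practice, but it shows why identifiers with many distinct values belong in logs or traces rather than in labels.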

Example recording rule that pre-computes, for each job, the per-second rate of 5xx responses averaged over the past day:

    groups:
      - name: recording-rules
        interval: 5m
        rules:
          - record: job:request_errors:daily_rate
            expr: sum(rate(http_requests_total{status=~"5.."}[1d])) by (job)
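To see why such a query is worth pre-computing, consider what `rate()` has to do for every series: walk all samples in the window, add up the increases, and compensate for counter resets. The sketch below is a simplified version under stated assumptions. It divides by the time between the first and last sample, whereas Prometheus also extrapolates to the window boundaries. The sample data is made up.

```python
from typing import List, Optional, Tuple


def simple_rate(samples: List[Tuple[float, float]]) -> Optional[float]:
    """Per-second increase of a counter over time-sorted (timestamp, value) samples."""
    if len(samples) < 2:
        return None
    increase = 0.0
    previous = samples[0][1]
    for _, value in samples[1:]:
        if value < previous:
            # Counter reset: the process restarted and counted up from zero.
            increase += value
        else:
            increase += value - previous
        previous = value
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed


# A counter that resets between t=60 and t=120
window = [(0, 100), (60, 160), (120, 10), (180, 70)]
print(simple_rate(window))
```

Over a one-day window with a 15-second scrape interval, each series contributes several thousand samples, so evaluating the expression once every five minutes and storing the result is much cheaper than recomputing it on every dashboard refresh.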

Remember to monitor Prometheus itself:

- Set up redundant Prometheus instances watching each other

- Monitor scrape durations and failures

- Track storage usage and compaction

- Set alerts for Prometheus health issues

Track the health and performance of your data pipelines:

- Processing rates: Events processed per second

- Processing latency: Time to process each batch

- Error rates: Failed processing attempts

- Backlog size: Number of pending items

Monitor your data stores:

- Query performance: Latency percentiles by query type

- Connection pools: Active and idle connections

- Cache hit ratios: Effectiveness of caching layers

- Storage metrics: Growth rates and capacity

For Kafka and other streaming platforms:

- Consumer lag: How far behind consumers are

- Partition leadership: Distribution across brokers

- Message rates: Production and consumption throughput

- Offset commit success/failure: Reliability indicators
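Consumer lag is usually defined per partition as the newest offset in the log minus the offset the consumer group has committed. A minimal sketch of that arithmetic follows, with hypothetical offsets. In practice an exporter reads these values from the brokers and exposes the result as a gauge.

```python
from typing import Dict


def consumer_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> Dict[int, int]:
    """Lag per partition: log end offset minus committed offset, floored at zero."""
    return {
        partition: max(end - committed.get(partition, 0), 0)
        for partition, end in end_offsets.items()
    }


lag = consumer_lag({0: 1500, 1: 900, 2: 400}, {0: 1450, 1: 900})
print(lag)
print(sum(lag.values()))
```

Partition 2 has no committed offset in this example, so its entire log counts as lag.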

Prometheus works best as part of a comprehensive observability strategy:

- Logging: Combine with ELK or Loki for log analysis

- Tracing: Integrate with Jaeger or Zipkin for distributed tracing

- Alerting: Feed alerts to PagerDuty, OpsGenie, or similar

- Visualization: Use Grafana for unified dashboards

While powerful, Prometheus does have constraints to be aware of:

- Not ideal for long-term storage: Local storage is not replicated, and the default retention period is 15 days

- Challenges with high-cardinality data: Labels with many distinct values multiply the number of time series and can cause performance issues

- Pull model limitations: Sometimes a push model is necessary

- Learning curve for PromQL: Takes time to master

For enterprise-scale deployments, consider these Prometheus-compatible systems:

Thanos:

- Global query view across multiple Prometheus instances

- Long-term storage with object storage backends

- Downsampling for efficient long-term storage

Cortex:

- Horizontally scalable Prometheus as a service

- Multi-tenancy support

- Long-term storage with various backend options

The Prometheus ecosystem continues to evolve:

- OpenMetrics: Standardizing the exposition format

- PromQL enhancements: Expanding query capabilities

- Remote write improvements: Better long-term storage options

- Deeper integration: With OpenTelemetry and other observability tools

Prometheus has earned its place as a cornerstone of modern monitoring for good reason. Its pull-based architecture, dimensional data model, powerful query language, and deep integration with cloud-native ecosystems make it particularly well-suited for today’s dynamic infrastructure.

For data engineers, Prometheus offers the visibility needed to ensure reliable, performant data systems. Whether you’re running data pipelines, managing databases, or orchestrating ETL processes, Prometheus provides the metrics and alerting necessary to maintain a healthy data engineering stack.

As the landscape of data engineering continues to evolve, Prometheus remains adaptable, extensible, and community-driven—ready to meet the monitoring challenges of tomorrow’s data infrastructure.
