Protocol Buffers: Google’s Language-Neutral, Platform-Neutral Extensible Mechanism for Serializing Structured Data

In the world of data serialization, few technologies have had as profound an impact as Protocol Buffers (often abbreviated as “Protobuf”). Developed by Google and battle-tested across its vast infrastructure, Protocol Buffers offer an efficient, versatile way to serialize structured data, which makes them indispensable for modern distributed systems, microservices architectures, and applications where performance matters.

Beyond Traditional Serialization

Before diving into Protocol Buffers, let’s understand why traditional serialization methods often fall short in demanding environments:

JSON and XML are human-readable and widely supported, but they come with significant drawbacks: verbose syntax, no schema enforcement, performance overhead, and type ambiguity. When dealing with high-throughput services or bandwidth-constrained environments, these limitations become increasingly problematic.

CSV is compact but lacks support for nested structures and has no built-in type system, making it unsuitable for complex data.

Custom binary formats can be efficient but typically lack cross-language support and require substantial maintenance.

Protocol Buffers address these limitations by providing a comprehensive solution that combines efficiency, strict typing, cross-language compatibility, and forward/backward compatibility in a single package.

What Are Protocol Buffers?

At their core, Protocol Buffers are a method for serializing structured data—similar to XML or JSON but smaller, faster, and strongly typed. The technology consists of three main components:

- Interface Definition Language (IDL): A language for defining data structures called “messages”

- Code Generation Tools: Compilers that generate code in various languages from the IDL definitions

- Runtime Libraries: Language-specific libraries that provide serialization/deserialization capabilities

Let’s look at a simple example of a Protocol Buffer definition:

syntax = "proto3";

message Person {

string name = 1;

int32 id = 2;

string email = 3;

enum PhoneType {

MOBILE = 0;

HOME = 1;

WORK = 2;

}

message PhoneNumber {

string number = 1;

PhoneType type = 2;

}

repeated PhoneNumber phones = 4;

}

This definition describes a Person message with several fields, including a nested message (PhoneNumber) and an enumeration (PhoneType). The numbers (1, 2, 3, 4) are field identifiers that are used in the binary encoding—they should not change once your protocol is in use.
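To see why field numbers must stay fixed, it helps to look at how they appear on the wire. In the Protocol Buffers encoding, every field starts with a key equal to (field_number << 3) | wire_type. The following is a minimal sketch in plain Python; the helper name `split_key` is illustrative and not part of any protobuf library.

```python
# Wire types defined by the Protocol Buffers encoding (subset).
WIRE_TYPES = {0: "varint", 1: "64-bit", 2: "length-delimited", 5: "32-bit"}


def split_key(key):
    """Split a field key into (field_number, wire type name)."""
    return key >> 3, WIRE_TYPES[key & 0x07]


# Single-byte keys for the four Person fields: name, id, email, phones.
for key in (0x0A, 0x10, 0x1A, 0x22):
    number, kind = split_key(key)
    print(f"0x{key:02X} -> field {number}, {kind}")
```

Because the field name never appears in the payload, renaming a field is harmless, while renumbering it changes which field a reader believes it is seeing.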

Technical Advantages of Protocol Buffers

1. Compact Binary Representation

Protocol Buffers use a binary encoding that is significantly more compact than text-based formats:

// A JSON representation of a Person:

{

"name": "John Doe",

"id": 1234,

"email": "john@example.com",

"phones": [

{"number": "555-1234", "type": "MOBILE"}

]

}

// Size: about 105 bytes with whitespace removed (more when pretty-printed)

// The same data in Protocol Buffers (binary, shown as hex):

0A 08 4A 6F 68 6E 20 44 6F 65 10 D2 09 1A 10 6A 6F 68 6E 40 65 78 61 6D 70 6C 65 2E 63 6F 6D 22 0A 0A 08 35 35 35 2D 31 32 33 34

// Size: 43 bytes (proto3 does not write the default enum value MOBILE = 0)

This compact representation reduces network bandwidth, storage requirements, and serialization/deserialization time.
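The size comparison can be reproduced without any protobuf library. The sketch below hand-encodes the Person message using only varints and length-delimited fields, then compares it with minified JSON. The helper names are illustrative, and the encoder covers only what this one message needs. Note that proto3 does not write fields holding their default value, so `type = MOBILE` (0) contributes no bytes.

```python
import json


def encode_varint(value):
    """Encode a non-negative integer as a base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def varint_field(number, value):
    """Varint field (wire type 0); proto3 omits the default value 0."""
    if value == 0:
        return b""
    return encode_varint(number << 3) + encode_varint(value)


def bytes_field(number, payload):
    """Length-delimited field (wire type 2): key, length, payload."""
    return encode_varint((number << 3) | 2) + encode_varint(len(payload)) + payload


phone = bytes_field(1, b"555-1234") + varint_field(2, 0)  # MOBILE = 0 is omitted
person = (
    bytes_field(1, b"John Doe")
    + varint_field(2, 1234)
    + bytes_field(3, b"john@example.com")
    + bytes_field(4, phone)
)

json_text = json.dumps(
    {
        "name": "John Doe",
        "id": 1234,
        "email": "john@example.com",
        "phones": [{"number": "555-1234", "type": "MOBILE"}],
    },
    separators=(",", ":"),  # minified: no spaces after separators
)

print(person.hex(" ").upper())
print(len(person), "bytes as Protobuf,", len(json_text), "bytes as JSON")
```

For this message the binary form comes to 43 bytes against 105 bytes of minified JSON. The ratio will vary with the data; messages dominated by long strings shrink much less.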

2. Strong Typing and Schema Validation

Unlike JSON or XML, Protocol Buffers enforce a schema. This provides several benefits:

- Early detection of errors (at compile time rather than runtime)

- Better documentation of the data model

- Type safety across language boundaries

- IDE autocompletion and better developer experience

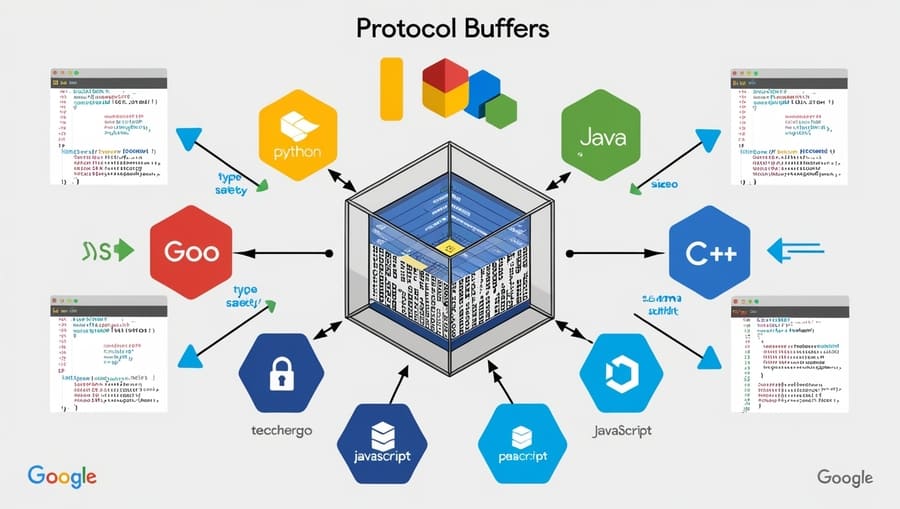

3. Cross-Language Compatibility

The Protocol Buffer compiler (protoc) can generate code for multiple languages from a single definition:

# Generate Python code

protoc --python_out=. person.proto

# Generate Java code

protoc --java_out=. person.proto

# Generate C++ code

protoc --cpp_out=. person.proto

Currently supported languages include C++, Java, Python, Go, Ruby, C#, Objective-C, JavaScript, PHP, Dart, and more.

4. Forward and Backward Compatibility

Protocol Buffers are designed for evolving systems. You can update your message definitions without breaking existing code:

- If you add new fields, old code will simply ignore them

- If old code reads data that’s missing some fields, those fields will take their default values

- As long as you follow certain rules (like not changing field numbers), compatibility is maintained

For example, if we update our Person definition:

message Person {

string name = 1;

int32 id = 2;

string email = 3;

repeated PhoneNumber phones = 4;

string address = 5; // New field

bool is_active = 6; // New field

}

Old code that doesn’t know about address or is_active will still work with new data, and new code will handle old data without these fields gracefully.
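The mechanics behind this can be sketched with a tiny hand-written reader. It stands in for "old" code that knows only fields 1 to 3 and is given bytes produced by a "new" writer. Everything here is illustrative: the function names are made up, the sample bytes are built by hand, and only varint and length-delimited fields are handled.

```python
def read_varint(buf, pos):
    """Decode a base-128 varint starting at pos; return (value, next_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of the varint
            return result, pos
        shift += 7


def parse_fields(buf):
    """Yield (field_number, value) for varint and length-delimited fields."""
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:  # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited (strings, bytes, messages)
            length, pos = read_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
        yield field_number, value


# What the "old" reader knows: the first three Person fields.
OLD_FIELDS = {1: "name", 2: "id", 3: "email"}


def read_as_old_person(buf):
    person = {"name": "", "id": 0, "email": ""}  # proto3 defaults
    skipped = []
    for number, value in parse_fields(buf):
        if number not in OLD_FIELDS:
            skipped.append(number)  # unknown field: skip it and keep going
            continue
        if isinstance(value, bytes):
            value = value.decode("utf-8")
        person[OLD_FIELDS[number]] = value
    return person, skipped


# Bytes from a "new" writer: name=1, id=2, address=5, is_active=6 (no email).
new_data = b"\x0a\x03Ada" + b"\x10\x07" + b"\x2a\x09" + b"1 Main St" + b"\x30\x01"
person, skipped = read_as_old_person(new_data)
print(person)   # {'name': 'Ada', 'id': 7, 'email': ''}
print(skipped)  # [5, 6]
```

The wire type in each key tells the reader how many bytes to skip even when it has never heard of the field, which is what makes ignoring new fields safe. Real runtimes typically also retain the skipped bytes so they survive re-serialization; this sketch simply records the field numbers.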

5. Efficient Serialization and Deserialization

Protocol Buffers are designed for high-performance environments:

- Binary format requires minimal parsing

- Field identifiers eliminate the need for string comparisons

- Generated code is optimized for each language

- Incremental parsing is possible

Implementing Protocol Buffers

Let’s walk through implementing Protocol Buffers in a real-world scenario:

Step 1: Define Your Messages

Create a .proto file describing your data structure:

// order.proto

syntax = "proto3";

package ecommerce;

message Product {

string product_id = 1;

string name = 2;

string description = 3;

double price = 4;

}

message OrderItem {

Product product = 1;

int32 quantity = 2;

}

message Order {

string order_id = 1;

string customer_id = 2;

repeated OrderItem items = 3;

enum Status {

PENDING = 0;

PROCESSING = 1;

SHIPPED = 2;

DELIVERED = 3;

CANCELED = 4;

}

Status status = 4;

int64 created_at = 5; // Unix timestamp

}

Step 2: Generate Language-Specific Code

Use the Protocol Buffer compiler to generate code:

protoc --python_out=./python --java_out=./java order.proto

Step 3: Use the Generated Code

Here’s how you might use the generated code in Python:

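# Python example

import time

# protoc names the Python module after the .proto file path, so order.proto

# becomes order_pb2.py (the output directory must be on sys.path)

import order_pb2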

# Create an order

order = order_pb2.Order()

order.order_id = "ORD-12345"

order.customer_id = "CUST-6789"

order.status = order_pb2.Order.PROCESSING

order.created_at = int(time.time())

# Add items to the order

item1 = order.items.add()

item1.quantity = 2

item1.product.product_id = "PROD-1"

item1.product.name = "Mechanical Keyboard"

item1.product.price = 149.99

# Serialize to binary

binary_data = order.SerializeToString()

# Send over network, store in database, etc.

# ...

# Later, deserialize

received_order = order_pb2.Order()

received_order.ParseFromString(binary_data)

And here’s the equivalent in Java:

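// Java example

// Assumes order.proto also sets: option java_package = "com.example.ecommerce";

// and option java_outer_classname = "OrderProto";

import com.example.ecommerce.OrderProto.*;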

// Create an order

Order.Builder orderBuilder = Order.newBuilder()

.setOrderId("ORD-12345")

.setCustomerId("CUST-6789")

.setStatus(Order.Status.PROCESSING)

.setCreatedAt(System.currentTimeMillis() / 1000);

// Add items to the order

Product keyboard = Product.newBuilder()

.setProductId("PROD-1")

.setName("Mechanical Keyboard")

.setPrice(149.99)

.build();

OrderItem item1 = OrderItem.newBuilder()

.setProduct(keyboard)

.setQuantity(2)

.build();

orderBuilder.addItems(item1);

Order order = orderBuilder.build();

// Serialize to binary

byte[] binaryData = order.toByteArray();

// Send over network, store in database, etc.

// ...

// Later, deserialize

Order receivedOrder = Order.parseFrom(binaryData);

Real-World Applications

Protocol Buffers have found widespread adoption across various domains:

Microservices Communication

In a microservices architecture, Protocol Buffers provide:

- A clear contract between services

- Efficient wire format for high-volume traffic

- Language-agnostic interface definitions

- Version compatibility as services evolve

gRPC

Google’s high-performance RPC framework uses Protocol Buffers as its Interface Definition Language:

// Service definition

service OrderService {

rpc CreateOrder(Order) returns (OrderResponse) {}

rpc GetOrder(OrderRequest) returns (Order) {}

rpc UpdateOrderStatus(OrderStatusUpdate) returns (Order) {}

}

This enables code generation not just for data structures but also for client and server stubs.

Data Storage

Protocol Buffers work well for:

- Time-series databases

- Event sourcing systems

- Log storage

- Any scenario where data schema might evolve over time

IoT and Mobile Applications

For bandwidth-constrained environments:

- Minimizes data transmission costs

- Reduces battery consumption through faster processing

- Provides strong typing for embedded systems

Protocol Buffers vs. Alternatives

How do Protocol Buffers compare to other serialization formats?

vs. JSON

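- Size: Usually considerably smaller, often less than half the size of equivalent JSON, depending on the data

- Speed: Typically several times faster to serialize and parse; the exact factor depends on the language, library, and payload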

- Schema: Enforced in Protobuf, optional in JSON

- Human-readability: JSON is readable, Protobuf is binary

- Ecosystem: JSON has broader support, Protobuf has better tooling

vs. Apache Avro

- Schema Evolution: Both handle it well, but with different approaches

- Default Values: Protobuf (proto3) uses fixed per-type defaults such as 0, the empty string, and false, while Avro declares defaults in the schema

- Reader/Writer Schema: Avro separates these, Protobuf uses a single schema

- Dynamic Languages: Avro has better dynamic language support

vs. Apache Thrift

- RPC Framework: Thrift includes its own RPC framework, Protobuf is often paired with gRPC

- Language Support: Comparable, but with different strengths in specific languages

- Community: Protobuf has broader adoption and Google’s backing

Advanced Protocol Buffer Features

OneOf Fields

When you have fields that are mutually exclusive:

message PaymentMethod {

oneof method {

CreditCard credit_card = 1;

PayPal paypal = 2;

BankTransfer bank_transfer = 3;

}

}
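The key behavior of a oneof is that setting one member clears whichever member was set before. The class below is a toy stand-in written in plain Python to illustrate that rule; it is not generated code, and its method names are made up. In the real Python API, generated messages expose `WhichOneof("method")` for the same purpose.

```python
class PaymentMethodSketch:
    """Toy model of a message containing `oneof method { ... }`."""

    _CASES = ("credit_card", "paypal", "bank_transfer")

    def __init__(self):
        self._case = None   # name of the member currently set, if any
        self._value = None

    def set(self, case, value):
        if case not in self._CASES:
            raise ValueError(f"unknown oneof member: {case}")
        # Assigning one member replaces whichever member was set before.
        self._case, self._value = case, value

    def which(self):
        """Return the name of the member that is set, or None."""
        return self._case

    def get(self, case):
        """Return the member's value, or None if another member is set."""
        return self._value if case == self._case else None


method = PaymentMethodSketch()
method.set("credit_card", {"last4": "4242"})
method.set("paypal", {"account": "a@example.com"})
print(method.which())             # paypal
print(method.get("credit_card"))  # None
```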

Maps

For key-value pairs:

message Features {

map<string, string> metadata = 1;

}
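On the wire, a map field is equivalent to a repeated nested message whose entries carry the key in field 1 and the value in field 2. The sketch below hand-encodes a `map<string, string>` this way. The helper names are illustrative, and non-empty keys and values are assumed.

```python
def encode_varint(value):
    """Encode a non-negative integer as a base-128 varint."""
    out = bytearray()
    while value >= 0x80:
        out.append((value & 0x7F) | 0x80)  # high bit set: more bytes follow
        value >>= 7
    out.append(value)
    return bytes(out)


def length_delimited(number, payload):
    """Length-delimited field (wire type 2): key, length, payload."""
    return encode_varint((number << 3) | 2) + encode_varint(len(payload)) + payload


def encode_string_map(number, mapping):
    """Encode each map item as an entry message: key = field 1, value = field 2."""
    out = b""
    for key, value in mapping.items():
        entry = (
            length_delimited(1, key.encode("utf-8"))
            + length_delimited(2, value.encode("utf-8"))
        )
        out += length_delimited(number, entry)
    return out


encoded = encode_string_map(1, {"env": "prod"})
print(encoded.hex(" "))  # 0a 0b 0a 03 65 6e 76 12 04 70 72 6f 64
```

One consequence of this layout is that the order of map entries on the wire is not something to rely on; readers should treat a map as unordered.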

Any Type

For dynamic typing when needed:

import "google/protobuf/any.proto";

message ErrorResponse {

string error_code = 1;

string message = 2;

google.protobuf.Any details = 3;

}
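Conceptually, an Any is just two fields: a `type_url` string naming the message type and a `value` holding that message's serialized bytes. The sketch below models this with a dataclass to show how a receiver decides whether it can unpack the payload. The class and function names are illustrative, `ecommerce.ValidationError` is a hypothetical message type, and the payload bytes are a placeholder. The real Python API provides `Pack` and `Unpack` on the Any message for this purpose.

```python
from dataclasses import dataclass

TYPE_URL_PREFIX = "type.googleapis.com/"  # default prefix used when packing


@dataclass
class AnySketch:
    type_url: str  # identifies the packed message type
    value: bytes   # serialized bytes of the packed message


def pack(full_name, payload):
    """Wrap already-serialized bytes together with their type name."""
    return AnySketch(TYPE_URL_PREFIX + full_name, payload)


def is_type(any_msg, full_name):
    """The type name is whatever follows the last '/' in the URL."""
    return any_msg.type_url.rsplit("/", 1)[-1] == full_name


def unpack(any_msg, full_name):
    """Return the payload only if it holds the expected type."""
    if not is_type(any_msg, full_name):
        raise TypeError(f"Any holds {any_msg.type_url}, not {full_name}")
    return any_msg.value


details = pack("ecommerce.ValidationError", b"\x0a\x05email")  # placeholder bytes
print(details.type_url)  # type.googleapis.com/ecommerce.ValidationError
print(is_type(details, "ecommerce.ValidationError"))  # True
print(is_type(details, "ecommerce.Order"))            # False
```

The trade-off is that the schema no longer says what `details` contains, so every consumer needs this kind of type check before reading it.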

Well-Known Types

Protocol Buffers include predefined types for common needs:

import "google/protobuf/timestamp.proto";

import "google/protobuf/duration.proto";

message Event {

string name = 1;

google.protobuf.Timestamp occurred_at = 2;

google.protobuf.Duration duration = 3;

}
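A `google.protobuf.Timestamp` stores a point in time as whole seconds since the Unix epoch plus a non-negative nanosecond remainder. The sketch below derives those two numbers from a timezone-aware `datetime` using integer arithmetic only. The function name is illustrative; the real Python runtime offers `FromDatetime` and `ToDatetime` on the Timestamp message.

```python
from datetime import datetime, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_timestamp_parts(moment):
    """Return (seconds, nanos) as stored in google.protobuf.Timestamp.

    Assumes `moment` is timezone-aware; naive datetimes would be
    interpreted as local time by astimezone().
    """
    delta = moment.astimezone(timezone.utc) - EPOCH
    seconds = delta.days * 86_400 + delta.seconds  # whole seconds since epoch
    nanos = delta.microseconds * 1_000             # always in [0, 1e9)
    return seconds, nanos


new_year = datetime(2024, 1, 1, tzinfo=timezone.utc)
print(to_timestamp_parts(new_year))  # (1704067200, 0)

half_past = datetime(2024, 1, 1, 0, 0, 0, 500_000, tzinfo=timezone.utc)
print(to_timestamp_parts(half_past))  # (1704067200, 500000000)
```

Compared with a bare `int64` of Unix seconds, the well-known type carries sub-second precision and makes the unit explicit in the schema.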

Best Practices

Based on extensive industry experience, here are some Protocol Buffer best practices:

1. Field Numbering Strategy

- Use a consistent numbering strategy (e.g., group related fields in ranges)

- Reserve numbers for deleted fields to prevent accidental reuse

message Account {

// User info: 1-10

string username = 1;

string email = 2;

// Account status: 11-20

bool is_active = 11;

reserved 3, 4, 5; // Previously used fields

reserved "password"; // Never reuse this field name

}

2. Package Naming

Use reverse domain notation for packages to avoid conflicts:

syntax = "proto3";

package com.example.myproject;

3. Message Evolution

- Never change field numbers

- Never reuse field numbers from deleted fields

- Use optional fields for future flexibility

- Add new fields with care, considering default values

4. Performance Considerations

- Repeated numeric fields are packed by default in proto3; keep them packed for a more compact encoding

- Use appropriate types (e.g., sint32 rather than int32 for values that are often negative)

- Give frequently set fields numbers from 1 to 15, which need only one byte for the field key
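How much the choice of integer type and field number affects size can be checked with a few lines of arithmetic. The sketch below computes encoded sizes under the varint rules of the wire format: negative `int32`/`int64` values are sent as 64-bit two's complement, while `sint32`/`sint64` apply ZigZag encoding first. The function names are illustrative.

```python
MASK64 = 0xFFFFFFFFFFFFFFFF


def varint_size(value):
    """Bytes needed to varint-encode a non-negative integer."""
    size = 1
    while value >= 0x80:
        value >>= 7
        size += 1
    return size


def int_size(value):
    """Payload size for int32/int64: negatives become 64-bit two's complement."""
    return varint_size(value & MASK64)


def zigzag(value):
    """ZigZag mapping used by sint32/sint64: 0, -1, 1, -2 -> 0, 1, 2, 3."""
    return ((value << 1) ^ (value >> 63)) & MASK64


def sint_size(value):
    """Payload size for sint32/sint64."""
    return varint_size(zigzag(value))


def key_size(field_number):
    """Size of the field key; the wire type occupies the low three bits."""
    return varint_size(field_number << 3)


print(int_size(-1), sint_size(-1))   # 10 1
print(int_size(300), sint_size(300)) # 2 2
print(key_size(15), key_size(16))    # 1 2
```

A small negative number costs ten bytes as `int32` but one byte as `sint32`, and the field key grows from one byte to two at field number 16.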

The Future of Protocol Buffers

Protocol Buffers continue to evolve:

- Proto3 simplified the language compared to Proto2, removing required fields and adding new features

- Text Format improvements for better human readability when needed

- Custom Options for extending the protocol

- Reflection API enhancements for dynamic manipulation

Conclusion

Protocol Buffers have earned their place as a cornerstone technology in modern distributed systems. Their unique combination of performance, type safety, cross-language compatibility, and schema evolution capabilities makes them ideal for a wide range of applications.

While not a replacement for all serialization needs—JSON remains better for browser-based applications or when human readability is paramount—Protocol Buffers excel in scenarios where efficiency, strictness, and evolution matter most. In high-scale systems, microservices architectures, and performance-critical applications, Protocol Buffers often provide the optimal balance of features and constraints.

As distributed systems become increasingly complex and polyglot environments more common, technologies like Protocol Buffers that bridge language and platform boundaries become even more valuable. Whether you’re building the next high-performance microservice, optimizing mobile app communications, or designing a durable storage format, Protocol Buffers deserve serious consideration.
