Data Streaming & Messaging: The Backbone of Modern Data Architecture

Introduction

Businesses generate and consume more data, at higher velocity, than ever before. Batch processing alone is no longer sufficient for organizations that need to act on information as it arrives. This shift has given rise to data streaming and messaging systems, technologies that enable real-time data movement, processing, and analysis across the enterprise. This guide explores the evolution, core concepts, and leading platforms in the data streaming and messaging ecosystem to help you navigate this critical component of modern data architecture.

Understanding Data Streaming and Messaging

The Evolution of Data Processing

Data processing architectures have evolved dramatically over the decades:

- Batch processing era: Data collected over time and processed periodically

- Service-oriented architecture: Systems communicating through synchronous requests

- Event-driven paradigm: Components reacting to events as they occur

- Stream processing revolution: Continuous analysis of data in motion

This evolution reflects the growing business need for real-time insights and immediate action. As digital transformation accelerates, the ability to process and respond to data within seconds or milliseconds, rather than hours, has become a critical competitive advantage.

Core Concepts and Terminology

To understand the landscape, we need to clarify key concepts:

- Messages: Discrete units of data exchanged between systems

- Events: Records of something that happened in the business domain

- Streams: Continuous, unbounded sequences of events

- Publishers/Producers: Systems that generate messages or events

- Subscribers/Consumers: Systems that receive and process messages or events

- Topics/Queues: Named destinations for organizing message flows

- Brokers: Intermediaries that handle message storage and delivery

- Partitions: Divisions of data streams for parallel processing

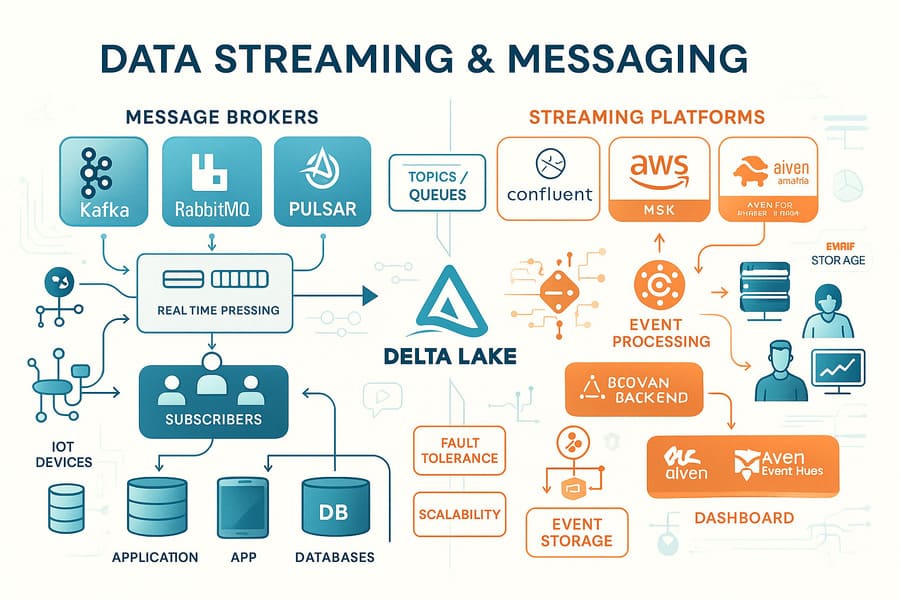
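
To make the terminology concrete, here is a minimal in-memory sketch of a broker that routes messages from producers to consumers by topic. The `InMemoryBroker` class and the topic names are hypothetical and exist only for illustration; a real broker adds persistence, networking, and delivery guarantees.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Message = dict  # a discrete unit of data; a plain dict keeps the sketch simple


class InMemoryBroker:
    """Toy broker: hands each published message to every subscriber of its topic."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Message], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Message], None]) -> None:
        # A consumer registers interest in a named topic.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> None:
        # A producer sends a message; the broker delivers it to all subscribers.
        for handler in self._subscribers[topic]:
            handler(message)


broker = InMemoryBroker()
received: List[Message] = []

broker.subscribe("orders", received.append)                # consumer
broker.publish("orders", {"order_id": 1, "total": 25.0})   # producer emits an event
broker.publish("payments", {"order_id": 1})                # no subscribers, so nothing is delivered
```

After this runs, `received` holds only the message published to `orders`, because nothing subscribed to `payments`.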

Message Brokers vs. Streaming Platforms

While often discussed together, message brokers and streaming platforms serve distinct but complementary roles:

Message Brokers primarily focus on:

- Reliable message delivery between applications

- Message routing and transformation

- Supporting various messaging patterns (point-to-point, pub-sub)

- Ensuring messages reach intended recipients

Streaming Platforms emphasize:

- Processing continuous data streams

- Storing event history for replay

- Enabling complex analytics on data in motion

- Supporting stateful stream processing

Modern systems often blur these lines, with streaming platforms incorporating messaging capabilities and message brokers adding streaming features.
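
One way to see the difference is to compare the two underlying data structures. The sketch below contrasts a queue, where a message disappears once consumed, with an append-only log, where records stay available for replay by offset. The `WorkQueue` and `EventLog` classes are illustrative assumptions, not the API of any particular product.

```python
from collections import deque
from typing import Any, Deque, List


class WorkQueue:
    """Broker-style queue: a message is removed once a consumer takes it."""

    def __init__(self) -> None:
        self._items: Deque[Any] = deque()

    def send(self, message: Any) -> None:
        self._items.append(message)

    def receive(self) -> Any:
        # Returns None when the queue is empty.
        return self._items.popleft() if self._items else None


class EventLog:
    """Streaming-style log: records are retained and read by offset."""

    def __init__(self) -> None:
        self._records: List[Any] = []

    def append(self, record: Any) -> int:
        self._records.append(record)
        return len(self._records) - 1  # offset of the new record

    def read(self, offset: int) -> List[Any]:
        # Reading does not remove anything, so any consumer can replay.
        return self._records[offset:]


queue = WorkQueue()
log = EventLog()
for event in ["created", "paid", "shipped"]:
    queue.send(event)
    log.append(event)

first_pass = [queue.receive() for _ in range(3)]  # drains the queue
second_pass = queue.receive()                     # None: nothing left to consume
replayed = log.read(0)                            # full history is still available
```

A second consumer arriving late gets nothing from the queue but can still read the whole log from offset 0.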

Key Capabilities and Use Cases

Essential Capabilities

Leading data streaming and messaging systems typically offer:

- Scalability: Handling growing data volumes without performance degradation

- Reliability: Protecting against data loss during system failures, typically through replication and acknowledgments

- Low latency: Minimizing delays between event creation and processing

- Persistence: Storing data for defined periods to enable replay

- Ordering guarantees: Maintaining sequence when required by applications

- Security: Providing authentication, authorization, and encryption

- Connectivity: Integrating with diverse systems across the organization
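
Scalability and ordering often pull against each other, and key-based partitioning is a common way to reconcile them: events with the same key always land in the same partition, so order is preserved per key while partitions are processed in parallel. The sketch below assumes three partitions and uses CRC32 as the hash purely for illustration; real platforms use their own partitioning functions.

```python
import zlib
from typing import Dict, List, Tuple

NUM_PARTITIONS = 3  # assumed value for illustration


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition using a stable hash (CRC32, chosen for the example)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


partitions: Dict[int, List[Tuple[str, str]]] = {p: [] for p in range(NUM_PARTITIONS)}

events = [
    ("alice", "login"),
    ("bob", "login"),
    ("alice", "add_to_cart"),
    ("alice", "checkout"),
    ("bob", "logout"),
]
for key, action in events:
    # Same key -> same partition, so per-key order survives partitioning.
    partitions[partition_for(key)].append((key, action))


def events_for(key: str) -> List[str]:
    """Read back one key's events from the single partition that holds them."""
    return [action for k, action in partitions[partition_for(key)] if k == key]
```

Note that this gives ordering per key only; there is no global order across partitions.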

Transformative Use Cases

These technologies enable a wide range of business applications:

Real-Time Analytics

- Monitoring business metrics as they change

- Detecting fraud in financial transactions as they occur

- Analyzing customer behavior during active sessions

- Identifying operational anomalies immediately
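
As a rough illustration of detecting fraud as transactions occur, the sketch below flags a card that makes too many transactions inside a sliding time window. The `VelocityDetector` class, the 60-second window, and the limit of three transactions are all hypothetical, and the code assumes events arrive in timestamp order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict

WINDOW_SECONDS = 60       # hypothetical window length
MAX_TXNS_PER_WINDOW = 3   # hypothetical threshold


class VelocityDetector:
    """Flags a card when it exceeds a transaction count within a sliding window."""

    def __init__(self, window: float = WINDOW_SECONDS, limit: int = MAX_TXNS_PER_WINDOW) -> None:
        self.window = window
        self.limit = limit
        self._seen: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, card_id: str, timestamp: float) -> bool:
        """Record one transaction; return True if the card is now over the limit."""
        times = self._seen[card_id]
        times.append(timestamp)
        # Evict timestamps that have fallen out of the window.
        while times and times[0] <= timestamp - self.window:
            times.popleft()
        return len(times) > self.limit


detector = VelocityDetector()
flags = [detector.observe("card-1", t) for t in (0, 10, 20, 30, 100)]
```

The fourth transaction trips the limit; by the fifth, the earlier ones have aged out of the window, so the flag clears.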

Microservices Communication

- Enabling loose coupling between services

- Implementing event-driven architectures

- Supporting service choreography patterns

- Providing resilience through asynchronous interaction
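
Choreography means each service reacts to events and emits new ones, with no central coordinator calling services in turn. The sketch below simulates this in one process; the service names, event types, and the `on`/`emit` helpers are hypothetical stand-ins for services connected through a broker.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple

handlers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)
pending: Deque[Tuple[str, dict]] = deque()  # stands in for the broker
trace: List[str] = []


def on(event_type: str):
    """Decorator that subscribes a function to an event type."""
    def register(fn: Callable[[dict], None]) -> Callable[[dict], None]:
        handlers[event_type].append(fn)
        return fn
    return register


def emit(event_type: str, payload: dict) -> None:
    pending.append((event_type, payload))


@on("OrderPlaced")
def reserve_stock(payload: dict) -> None:
    trace.append("inventory: reserved")
    emit("StockReserved", payload)


@on("StockReserved")
def charge_customer(payload: dict) -> None:
    trace.append("payment: charged")
    emit("PaymentCaptured", payload)


@on("PaymentCaptured")
def schedule_shipping(payload: dict) -> None:
    trace.append("shipping: scheduled")


def run() -> None:
    """Deliver queued events until none remain."""
    while pending:
        event_type, payload = pending.popleft()
        for handler in handlers[event_type]:
            handler(payload)


emit("OrderPlaced", {"order_id": 42})
run()
```

No handler knows about the others; each depends only on event names, which is the loose coupling the list above describes.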

IoT and Edge Computing

- Processing sensor data from connected devices

- Enabling edge-to-cloud data pipelines

- Supporting digital twin architectures

- Handling intermittent connectivity scenarios
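
Intermittent connectivity is commonly handled with a store-and-forward buffer on the device: readings are kept locally and sent in order once the link returns. The sketch below is one minimal way to do this; `StoreAndForward`, `FlakyUplink`, and the drop-oldest policy when the buffer fills are assumptions made for illustration.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Buffers readings locally and forwards them when the uplink accepts them."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000) -> None:
        self._send = send
        # Bounded buffer: when full, the oldest reading is dropped (a design assumption).
        self._buffer: Deque[dict] = deque(maxlen=capacity)

    def record(self, reading: dict) -> None:
        self._buffer.append(reading)
        self.flush()

    def flush(self) -> int:
        """Send buffered readings in order; stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)


class FlakyUplink:
    """Stand-in for a network link that can be toggled on and off."""

    def __init__(self) -> None:
        self.online = False
        self.delivered: List[dict] = []

    def __call__(self, reading: dict) -> bool:
        if self.online:
            self.delivered.append(reading)
        return self.online


uplink = FlakyUplink()
device = StoreAndForward(uplink)
device.record({"temp_c": 21.5})
device.record({"temp_c": 21.7})
backlog_while_offline = device.pending()

uplink.online = True
device.record({"temp_c": 21.9})  # triggers a flush of the whole backlog
```

A reading is removed from the buffer only after the send succeeds, so a failed transmission never loses data that is still within capacity.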

Data Integration

- Implementing Change Data Capture (CDC) from databases

- Creating real-time ETL processes

- Synchronizing data across disparate systems

- Building event-driven data pipelines
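
The idea behind CDC is to turn database changes into a stream of insert, update, and delete events that downstream systems apply to stay in sync. Log-based CDC tools read the database's transaction log; the sketch below diffs two snapshots instead, only to stay self-contained. The event shape and function names are hypothetical.

```python
from typing import Dict, List

Row = Dict[str, object]
Table = Dict[int, Row]  # primary key -> row


def diff_snapshots(before: Table, after: Table) -> List[dict]:
    """Derive change events by comparing two snapshots of a table."""
    events: List[dict] = []
    for key in sorted(after.keys() - before.keys()):
        events.append({"op": "insert", "key": key, "row": after[key]})
    for key in sorted(before.keys() & after.keys()):
        if before[key] != after[key]:
            events.append({"op": "update", "key": key, "row": after[key]})
    for key in sorted(before.keys() - after.keys()):
        events.append({"op": "delete", "key": key, "row": None})
    return events


def apply_events(replica: Table, events: List[dict]) -> None:
    """Apply change events to a downstream copy of the table."""
    for event in events:
        if event["op"] == "delete":
            replica.pop(event["key"], None)
        else:  # insert and update are both upserts on the replica
            replica[event["key"]] = event["row"]


before: Table = {1: {"name": "Ann"}, 2: {"name": "Bob"}, 4: {"name": "Dee"}}
after: Table = {1: {"name": "Ann"}, 2: {"name": "Rob"}, 3: {"name": "Cy"}}

changes = diff_snapshots(before, after)
replica: Table = dict(before)
apply_events(replica, changes)
```

Once the events are applied, `replica` matches `after` without the downstream system ever querying the source table.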

Leading Solutions in the Ecosystem

Open-Source Message Brokers and Streaming Platforms

Apache Kafka

Originally developed at LinkedIn and now one of the most widely adopted streaming platforms, Kafka combines messaging capabilities with distributed storage, making it suitable for high-throughput, fault-tolerant streaming applications.

RabbitMQ

A mature, feature-rich message broker implementing the Advanced Message Queuing Protocol (AMQP), RabbitMQ excels at complex routing scenarios and traditional messaging patterns.

Apache Pulsar

A newer entrant combining messaging and streaming, Pulsar offers a unified messaging model with multi-tenancy, geo-replication, and separation of storage from compute.

NATS

Focusing on simplicity and performance, NATS provides lightweight publish-subscribe messaging with minimal operational complexity.

ActiveMQ

A versatile, open-source message broker supporting multiple protocols and integration patterns, ActiveMQ has a long history in enterprise middleware.

Cloud Provider Solutions

Amazon Kinesis

AWS’s streaming data service for collecting, processing, and analyzing real-time data streams, tightly integrated with the broader AWS ecosystem.

Google Pub/Sub

A globally distributed messaging service on Google Cloud, designed for event-driven systems and asynchronous communication between applications.

Azure Event Hubs

Microsoft’s big data streaming platform and event ingestion service, capable of receiving and processing millions of events per second.

Enterprise Streaming Platforms

IBM Event Streams

An enterprise-grade event-streaming platform based on Apache Kafka, offering enhanced security, management, and integration with IBM’s middleware.

Confluent Platform

Built by Confluent, the company founded by Kafka’s original creators, Confluent Platform extends Apache Kafka with additional tools, connectors, and enterprise features for comprehensive stream processing.

Amazon MSK

A fully managed Kafka service on AWS that simplifies the setup, scaling, and management of Kafka clusters.

Aiven for Kafka

A managed Kafka service available across multiple cloud providers, offering operational simplicity with cloud flexibility.

Red Hat AMQ Streams

A Kubernetes-native distribution of Apache Kafka designed for OpenShift environments, providing enterprise messaging in containerized deployments.

Choosing the Right Solution

Key Evaluation Criteria

When selecting a streaming or messaging solution, consider:

- Scalability requirements: Expected message volumes and growth projections

- Latency sensitivity: How quickly data must be processed

- Reliability needs: Consequences of potential data loss

- Integration landscape: Systems that must connect to the platform

- Operational expertise: Internal capabilities for managing the technology

- Deployment environment: On-premises, cloud, or hybrid requirements

- Budget constraints: Total cost of ownership considerations
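
Several of these criteria can be turned into rough numbers early in an evaluation. As one example, retained storage can be estimated from message rate, message size, retention period, and replication factor. The function and the input figures below are hypothetical, and the estimate ignores compression, indexes, and headroom.

```python
def retained_storage_bytes(
    messages_per_second: int,
    avg_message_bytes: int,
    retention_hours: int,
    replication_factor: int,
) -> int:
    """Back-of-the-envelope storage needed to retain a stream for a given period."""
    retention_seconds = retention_hours * 3600
    return messages_per_second * avg_message_bytes * retention_seconds * replication_factor


# Hypothetical workload: 5,000 messages/s of 1 KiB each, kept 24 hours, 3 replicas.
estimate = retained_storage_bytes(5_000, 1_024, 24, 3)
estimate_tb = estimate / 1e12  # about 1.33 TB
```

Doubling either retention or replication doubles the figure, which is why those two settings tend to dominate cost discussions.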

Deployment Models

These technologies can be deployed in several ways:

- Self-managed: Complete control but higher operational responsibility

- Fully managed services: Reduced operational overhead but less customization

- Kubernetes-native: Containerized deployment for cloud-native environments

- Hybrid approaches: Combining self-managed and managed components

Implementation Best Practices

Architectural Considerations

Successful implementations typically follow these principles:

- Start with clear use cases: Define specific business requirements

- Design for growth: Plan for increasing data volumes and use cases

- Implement proper monitoring: Ensure visibility into performance and health

- Establish governance: Define policies for topics, schemas, and access

- Plan for disaster recovery: Implement appropriate backup and failover mechanisms
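
For monitoring, one widely used health signal in log-based platforms is consumer lag: the gap between the newest offset in each partition and the offset a consumer has committed. The sketch below computes it from two plain dictionaries; the function names and the threshold of 40 are assumptions, and a real deployment would obtain offsets from the platform's admin tooling.

```python
from typing import Dict, List


def consumer_lag(end_offsets: Dict[int, int], committed_offsets: Dict[int, int]) -> Dict[int, int]:
    """Per-partition lag: latest offset minus the consumer's committed offset."""
    # A partition with no committed offset is treated as unread from offset 0.
    return {p: end - committed_offsets.get(p, 0) for p, end in end_offsets.items()}


def lagging_partitions(lag: Dict[int, int], threshold: int) -> List[int]:
    """Partitions whose lag exceeds the alert threshold."""
    return sorted(p for p, behind in lag.items() if behind > threshold)


end_offsets = {0: 120, 1: 300, 2: 50}
committed_offsets = {0: 120, 1: 250}  # partition 2 has never been committed

lag = consumer_lag(end_offsets, committed_offsets)
alerts = lagging_partitions(lag, threshold=40)
```

Steadily growing lag usually means consumers cannot keep up with producers, which is worth alerting on before users notice stale data.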

Common Pitfalls to Avoid

Watch out for these common challenges:

- Underestimating operational complexity: Many systems require significant expertise

- Neglecting schema management: Evolving data formats can break consumers

- Inappropriate sizing: Too many or too few partitions/topics

- Inadequate monitoring: Missing issues until they affect users

- Security afterthoughts: Bolting on authentication, authorization, and encryption late instead of designing them in from the start
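
The schema management pitfall can be caught before deployment with a compatibility check. The sketch below uses a deliberately simplified model in which a schema is a mapping of field names to type names, and treats removed fields and changed types as breaking for existing consumers. This is only one notion of compatibility; schema registries typically define several modes. All names here are hypothetical.

```python
from typing import Dict, List

Schema = Dict[str, str]  # field name -> type name (a deliberately simplified model)


def breaking_changes(old: Schema, new: Schema) -> List[str]:
    """List changes in `new` that would break a consumer written against `old`."""
    problems: List[str] = []
    for field in sorted(old):
        if field not in new:
            problems.append(f"{field}: removed")
        elif new[field] != old[field]:
            problems.append(f"{field}: type changed from {old[field]} to {new[field]}")
    # Added fields are not reported: this model assumes consumers ignore unknown fields.
    return problems


v1: Schema = {"id": "int", "email": "string"}
v2_additive: Schema = {"id": "int", "email": "string", "phone": "string"}
v2_breaking: Schema = {"id": "string"}

safe = breaking_changes(v1, v2_additive)
unsafe = breaking_changes(v1, v2_breaking)
```

Running a check like this in a build pipeline turns a runtime consumer failure into a failed review step.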

Future Trends and Evolution

Emerging Directions

The data streaming and messaging landscape continues to evolve:

- Serverless streaming: Event processing without infrastructure management

- Stream processing convergence: Tighter integration of messaging and analytics

- Edge-to-cloud streaming: Seamless data flow from edge devices to cloud

- AI integration: Machine learning within the streaming pipeline

- Streaming SQL standardization: Common query languages for stream processing

Conclusion

Data streaming and messaging technologies have become essential components of modern data architectures, enabling real-time data movement, processing, and analysis across the enterprise. By providing the infrastructure for event-driven systems, these platforms help organizations respond faster to business events, create more responsive customer experiences, and extract immediate value from their data assets.

Whether you’re implementing microservices communication, building real-time analytics pipelines, processing IoT data, or modernizing data integration, understanding the capabilities and characteristics of various streaming and messaging platforms is crucial for success. The right choice depends on your specific requirements, existing technology landscape, and organizational capabilities.

As data volumes continue to grow and business velocity increases, the importance of efficient, reliable streaming and messaging infrastructure will only become more pronounced. By investing in these technologies today, organizations can build the foundation for agile, data-driven operations that can adapt quickly to changing business conditions and customer expectations.
