Apache Kafka Connect

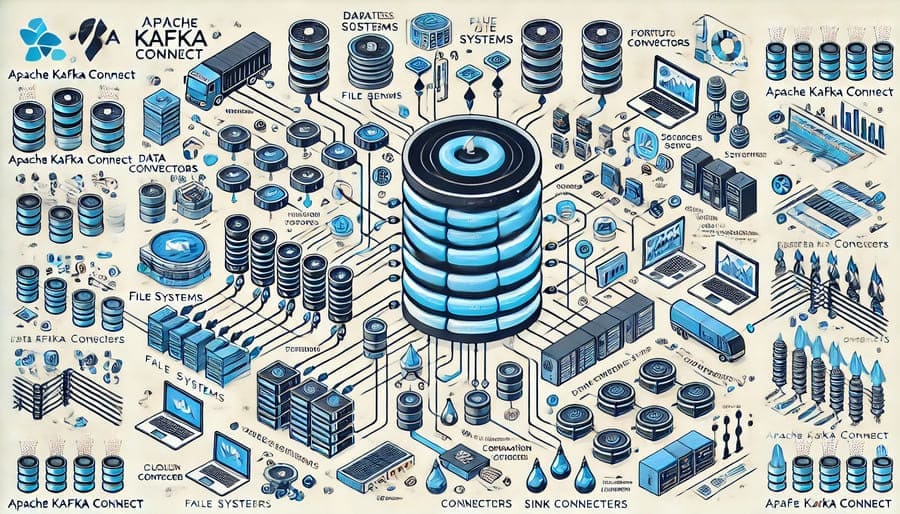

In today’s data-driven world, organizations face the constant challenge of efficiently connecting diverse systems to create unified data flows. Apache Kafka has become a widely used platform for real-time data streaming, but integrating Kafka with external systems long meant writing and operating custom producer and consumer code. Apache Kafka Connect addresses that gap: it is a framework for building reliable, scalable data pipelines between Kafka and external systems such as databases, storage systems, APIs, and applications.

Apache Kafka Connect is an integration framework that serves as a standardized bridge between Kafka and external data systems. It ships as part of the Apache Kafka project (it was introduced in Kafka 0.9) and addresses the common challenge of moving data in and out of Kafka without writing custom integration code.

The framework operates on a simple yet powerful premise: create a standardized approach for data import/export that leverages Kafka’s core strengths while abstracting away the complexities of individual system integrations. This approach turns data integration from a custom coding exercise into a configuration-driven process, which shortens development cycles and reduces the amount of bespoke code that has to be maintained.

At its core, Kafka Connect runs connectors inside worker processes, which can be deployed in one of two modes and are managed through a REST API:

- Standalone mode: Ideal for development and testing, where a single process runs all connectors and tasks

- Distributed mode: Production-ready deployment where multiple worker instances coordinate to provide scalability and fault tolerance

- Worker coordination: Automatic work distribution and rebalancing across available workers

- REST API: Administrative interface for deploying and managing connectors without service interruption
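
As a rough illustration of what distinguishes distributed mode, the sketch below renders a minimal worker properties file. The property names are standard worker settings; the broker address, group id, and internal topic names are placeholder assumptions, and a real deployment would set more than this.

```python
def render_worker_properties(bootstrap_servers, group_id):
    """Render a minimal distributed-mode worker config as .properties text."""
    settings = {
        "bootstrap.servers": bootstrap_servers,
        # Workers that share a group.id form one Connect cluster.
        "group.id": group_id,
        # Internal topics holding connector configs, offsets, and status.
        # The naming scheme here is an assumption for illustration.
        "config.storage.topic": f"{group_id}-configs",
        "offset.storage.topic": f"{group_id}-offsets",
        "status.storage.topic": f"{group_id}-status",
        "key.converter": "org.apache.kafka.connect.json.JsonConverter",
        "value.converter": "org.apache.kafka.connect.json.JsonConverter",
    }
    return "\n".join(f"{key}={value}" for key, value in settings.items())


if __name__ == "__main__":
    print(render_worker_properties("localhost:9092", "connect-cluster"))
```

A standalone worker has no `group.id` or storage topics; it keeps source offsets in a local file configured through `offset.storage.file.filename`.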

The functional units within Kafka Connect include:

- Connectors: Plugins that define how to interact with external systems

- Tasks: Individual units of data transfer managed by connectors

- Configurations: Declarative settings that control connector behavior

- Converters: Components that handle data format transformations between systems

- Transforms: Single-message modifications that can alter data during transfer

Kafka Connect supports two primary connector types:

Source connectors import data from external systems into Kafka:

- Database connectors: Capture changes from relational databases using technologies like Change Data Capture (CDC)

- File-based connectors: Monitor directories and stream file contents into Kafka

- API connectors: Poll external APIs and convert responses into Kafka messages

- IoT connectors: Collect data from devices and sensors for real-time processing

- Message queue connectors: Bridge legacy messaging systems with Kafka

Sink connectors export data from Kafka to external destinations:

- Data warehouse connectors: Stream records into analytical systems for business intelligence

- Storage connectors: Archive data to object stores such as Amazon S3 or to distributed file systems such as HDFS

- Database connectors: Write Kafka records to relational or NoSQL databases

- Search engine connectors: Index Kafka data in search platforms like Elasticsearch

- Notification connectors: Trigger alerts or actions based on Kafka events
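
To make the source/sink distinction concrete, here is a small sketch using the example FileStream connectors that are distributed with Apache Kafka. The file paths and topic names are made-up placeholders, and depending on the Kafka version these example connectors may need to be added to the worker's plugin path before they can be used.

```python
def file_source_config(path, topic):
    """Connector config that reads lines from a file and writes them to one topic."""
    return {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
        "tasks.max": "1",
        "file": path,
        # Source side: a single destination topic.
        "topic": topic,
    }


def file_sink_config(path, topics):
    """Connector config that reads one or more topics and appends records to a file."""
    return {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSinkConnector",
        "tasks.max": "1",
        "file": path,
        # Sink side: a comma-separated list of topics to consume.
        "topics": ",".join(topics),
    }


if __name__ == "__main__":
    print(file_source_config("/tmp/input.txt", "raw-lines"))
    print(file_sink_config("/tmp/output.txt", ["raw-lines"]))
```

The shape is the same in both directions: a connector class plus a handful of settings, with the Kafka side expressed as `topic` for this source and `topics` for sinks.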

One of Kafka Connect’s greatest strengths is its thriving ecosystem of connectors:

- Confluent Hub: Centralized repository of pre-built, tested connectors

- Community connectors: Open-source implementations for common systems

- Commercial connectors: Enterprise-grade solutions with support and additional features

- Custom connectors: Framework for developing proprietary connectors for specialized systems

- Connector management tools: UI dashboards for monitoring and administering connectors

Kafka Connect dramatically reduces integration complexity:

- Configuration over code: Define data flows through configuration rather than custom code

- Standardized architecture: Consistent approach across different integrations

- Reduced maintenance: Less custom code means fewer bugs and maintenance issues

- Faster development: When a suitable connector already exists, a new integration is mostly a matter of writing and deploying configuration

- Reusable patterns: Apply proven integration approaches across multiple systems

For mission-critical data flows, Kafka Connect provides:

- Fault tolerance: Automatic recovery from worker or task failures

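- Delivery guarantees: At-least-once delivery by default through offset tracking; exactly-once delivery for source connectors can be enabled in Kafka 3.3 and later, while for sink connectors it depends on the connector and the target system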

- Monitoring hooks: Integration with observability platforms

- Automatic offset management: Tracking of progress to prevent data loss during restarts

- Schema evolution support: Graceful handling of data structure changes
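
Offset tracking is easiest to see in a toy model. The simulation below is not Kafka Connect code; it is a simplified sketch, with invented function and parameter names, of a task that commits its position periodically and restarts from the last committed offset after a failure. It shows why this mechanism alone avoids data loss but can redeliver records.

```python
def run_task(records, committed, commit_every, crash_at=None):
    """Process records from the committed offset; return (delivered, committed).

    The position is committed after every `commit_every` records. If
    `crash_at` is set, the task fails just before handling that index and
    any progress since the last commit is forgotten.
    """
    delivered = []
    position = committed
    uncommitted = 0
    while position < len(records):
        if position == crash_at:
            return delivered, committed  # simulated crash
        delivered.append(records[position])
        position += 1
        uncommitted += 1
        if uncommitted == commit_every:
            committed, uncommitted = position, 0
    return delivered, position  # clean finish commits the final position


if __name__ == "__main__":
    records = [f"r{i}" for i in range(6)]
    first, offset = run_task(records, 0, commit_every=2, crash_at=3)
    second, offset = run_task(records, offset, commit_every=2)
    print(first + second)
```

In this run the record at index 2 is delivered twice, once before the crash and once after the restart, and nothing is skipped. Avoiding the duplicate requires either idempotent writes in the target system or transactional offset commits.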

As data volumes grow, Kafka Connect scales with your needs:

- Horizontal scaling: Add more workers to handle increased workloads

- Parallel processing: Multiple tasks can distribute work for a single connector

- Performance optimization: Fine-tune parallelism for maximum throughput

- Resource isolation: Run demanding connectors on dedicated workers or separate Connect clusters for predictable performance, since tasks on the same worker share its JVM

- Incremental scaling: Add capacity without disrupting existing data flows
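
The effect of adding workers can be pictured with a simplified model. The sketch below spreads a connector's tasks over the available workers in round-robin order; the actual assignment is performed by Connect's group rebalance protocol, so treat this only as an approximation. The task and worker names are hypothetical.

```python
def assign_tasks(task_ids, workers):
    """Spread tasks across workers round-robin (a simplification of rebalancing)."""
    assignment = {worker: [] for worker in workers}
    for index, task_id in enumerate(task_ids):
        assignment[workers[index % len(workers)]].append(task_id)
    return assignment


if __name__ == "__main__":
    # A connector configured with tasks.max=4 may create up to four tasks.
    tasks = [f"orders-source-{n}" for n in range(4)]
    print(assign_tasks(tasks, ["worker-a", "worker-b"]))
    print(assign_tasks(tasks, ["worker-a", "worker-b", "worker-c"]))
```

Note that `tasks.max` is an upper bound: the connector decides how many tasks it actually creates, so adding workers helps only while there are enough tasks to spread.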

Organizations leverage Kafka Connect for real-time database replication:

- Capturing row-level changes from operational databases

- Synchronizing data across heterogeneous database platforms

- Creating real-time data lakes with complete change history

- Enabling microservices to maintain their own data views

- Supporting analytical systems with fresh, consistent data

Kafka Connect enables sophisticated event processing:

- Capturing events from legacy systems for modern event-driven applications

- Integrating IoT device data into event processing pipelines

- Building event sourcing patterns with reliable persistence

- Creating audit trails across distributed systems

- Implementing CQRS (Command Query Responsibility Segregation) patterns

For analytics environments, Kafka Connect provides:

- Continuous, incremental data warehouse updates

- Real-time ETL processes for fresher analytics

- Simplified pipeline management for multiple data sources

- Reduced load on operational systems through change-based replication

- Lightweight validation and filtering of records during transfer, for example through single message transforms

Successful Kafka Connect implementations follow these principles:

- Start with distributed mode: Even for smaller deployments, distributed mode provides operational benefits

- Proper sizing: Allocate sufficient resources based on data volume and connector requirements

- Security planning: Implement authentication, authorization, and encryption from the beginning

- Monitoring setup: Establish comprehensive monitoring for the entire pipeline

- Disaster recovery: Include Connect configurations in your backup and DR strategies

Maximize performance and reliability through:

- Connector tuning: Adjust batch sizes, flush intervals, and retry parameters

- Worker configuration: Optimize heap settings, thread pools, and network parameters

- Converter selection: Choose appropriate serialization formats for your use case

- Transform pipelines: Use single message transformations judiciously to avoid performance impact

- Resource allocation: Ensure sufficient resources for both Kafka and Connect clusters

Address typical hurdles in Kafka Connect deployments:

- Schema management: Use a schema registry to manage evolving data structures

- Error handling: Configure dead letter queues for problematic records

- Monitoring gaps: Deploy comprehensive observability across the entire pipeline

- Connector conflicts: Manage dependencies to avoid classpath issues

- Scaling bottlenecks: Identify and address performance limitations proactively
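
For the error-handling item above, a dead letter queue is switched on through a few connector properties. The helper below is a minimal sketch that layers those properties onto an existing sink connector configuration; the helper name, the example sink settings, and the topic name are assumptions. These properties apply to sink connectors only.

```python
def with_dead_letter_queue(sink_config, dlq_topic):
    """Return a copy of a sink connector config with DLQ error handling enabled."""
    return {
        **sink_config,
        # Keep running when a record fails conversion or transformation.
        "errors.tolerance": "all",
        # Failed records are written to this topic instead of stopping the task.
        "errors.deadletterqueue.topic.name": dlq_topic,
        # Attach failure details (such as the exception) as record headers.
        "errors.deadletterqueue.context.headers.enable": "true",
    }


if __name__ == "__main__":
    base = {"name": "orders-sink", "topics": "orders", "tasks.max": "2"}
    print(with_dead_letter_queue(base, "orders-sink-dlq"))
```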

Enhance data quality and compatibility through transformations:

- Field redaction: Remove sensitive information before storage

- Type conversion: Adjust data types for destination system compatibility

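- Routing: Direct messages to different topics, for example by rewriting topic names with a regular expression or a timestamp; routing on message content generally needs a custom transform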

- Filtering: Exclude unnecessary records from processing

- Enrichment: Add static fields or record metadata to messages during transfer; lookups against external systems are usually better handled in a stream processor
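
Transforms are declared as a named chain in the connector configuration. The sketch below assembles such a chain from three transforms that ship with Apache Kafka (`MaskField`, `Cast`, and `RegexRouter`); the helper function, the aliases, and the field names `ssn` and `amount` are illustrative assumptions.

```python
def build_transforms(steps):
    """Flatten (alias, class, params) steps into connector config properties."""
    config = {"transforms": ",".join(alias for alias, _, _ in steps)}
    for alias, transform_class, params in steps:
        config[f"transforms.{alias}.type"] = transform_class
        for key, value in params.items():
            config[f"transforms.{alias}.{key}"] = value
    return config


if __name__ == "__main__":
    chain = build_transforms([
        # Redaction: blank out a sensitive field in the record value.
        ("maskSsn", "org.apache.kafka.connect.transforms.MaskField$Value",
         {"fields": "ssn"}),
        # Type conversion: cast a field to a 64-bit float.
        ("castAmount", "org.apache.kafka.connect.transforms.Cast$Value",
         {"spec": "amount:float64"}),
        # Routing: prefix the destination topic name.
        ("routeTopic", "org.apache.kafka.connect.transforms.RegexRouter",
         {"regex": "(.*)", "replacement": "clean-$1"}),
    ])
    for key, value in chain.items():
        print(f"{key}={value}")
```

Transforms run in the order listed in `transforms`, one record at a time, which is why long chains add per-record overhead.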

Manage data formats effectively:

- Schema-based converters: Avro, Protobuf, and JSON Schema for structured data

- Schemaless options: String and JSON converters for flexibility

- Custom serialization: Implement the Converter interface to handle specialized formats

- Schema evolution: Strategies for handling schema changes over time

- Compatibility settings: Control schema version compatibility requirements
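
Converter choice is likewise a matter of configuration. The sketch below contrasts a schemaless JSON setup with a schema-registry-backed Avro setup. `JsonConverter` and `StringConverter` ship with Apache Kafka; `AvroConverter` is provided by Confluent and must be installed separately, and the registry URL is a placeholder assumption.

```python
JSON_CONVERTER = "org.apache.kafka.connect.json.JsonConverter"
STRING_CONVERTER = "org.apache.kafka.connect.storage.StringConverter"
AVRO_CONVERTER = "io.confluent.connect.avro.AvroConverter"  # Confluent plugin


def schemaless_json():
    """String keys and plain JSON values with no embedded schema envelope."""
    return {
        "key.converter": STRING_CONVERTER,
        "value.converter": JSON_CONVERTER,
        "value.converter.schemas.enable": "false",
    }


def avro_with_registry(registry_url):
    """String keys and Avro values whose schemas live in a schema registry."""
    return {
        "key.converter": STRING_CONVERTER,
        "value.converter": AVRO_CONVERTER,
        "value.converter.schema.registry.url": registry_url,
    }


if __name__ == "__main__":
    print(schemaless_json())
    print(avro_with_registry("http://localhost:8081"))
```

These properties can be set at the worker level as defaults and overridden in an individual connector's configuration.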

Manage your Connect infrastructure through:

- Programmatic control: RESTful API for connector lifecycle management

- Dynamic configuration: Update connector settings through the REST API without restarting workers

- Status monitoring: Track connector and task health

- Pause and resume: Temporarily halt data flow without losing state

- Graceful scaling: Add or remove capacity with minimal disruption
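
The lifecycle operations above map onto a small set of REST endpoints. The sketch below builds the corresponding requests with Python's standard library and does not send them; the base URL (8083 is the usual default REST port), the connector name, and the helper function names are assumptions.

```python
import json
import urllib.request


def create_connector_request(base_url, name, config):
    """POST /connectors: register a new connector."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/connectors", data=body,
        headers={"Content-Type": "application/json"}, method="POST")


def update_config_request(base_url, name, config):
    """PUT /connectors/{name}/config: create or update a connector's settings."""
    body = json.dumps(config).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/connectors/{name}/config", data=body,
        headers={"Content-Type": "application/json"}, method="PUT")


def status_request(base_url, name):
    """GET /connectors/{name}/status: connector and task state."""
    return urllib.request.Request(
        f"{base_url}/connectors/{name}/status", method="GET")


def pause_request(base_url, name):
    """PUT /connectors/{name}/pause: stop processing while keeping offsets."""
    return urllib.request.Request(
        f"{base_url}/connectors/{name}/pause", method="PUT")


def resume_request(base_url, name):
    """PUT /connectors/{name}/resume: continue from the stored offsets."""
    return urllib.request.Request(
        f"{base_url}/connectors/{name}/resume", method="PUT")


if __name__ == "__main__":
    request = status_request("http://localhost:8083", "file-source")
    print(request.get_method(), request.full_url)
```

Passing any of these objects to `urllib.request.urlopen` would send the request to a running worker; in distributed mode any worker in the cluster can receive it.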

Compared to building custom integrations, Kafka Connect offers:

- Reduced development time: Eliminate boilerplate integration code

- Built-in fault tolerance: Avoid implementing complex reliability patterns

- Community-tested solutions: Leverage connector implementations that have been battle-tested

- Standardized management: Consistent deployment and monitoring approach

- Focus on business logic: Spend development resources on unique business requirements

When compared to other integration platforms, Kafka Connect provides:

- Native Kafka integration: Deep alignment with Kafka’s architecture and guarantees

- Lightweight footprint: Purpose-built for Kafka without unnecessary features

- Horizontal scalability: Designed for distributed, high-throughput deployments

- Kafka-centric workflow: Optimized for event-streaming use cases

- Community alignment: Direct support from the Kafka community and ecosystem

The framework continues to evolve with:

- Enhanced security features: Finer-grained access controls and improved encryption

- Cloud-native adaptations: Better integration with containerized and serverless environments

- Improved monitoring: More detailed metrics and observability

- Performance optimizations: Reduced resource consumption and higher throughput

- Extended connector ecosystem: Support for emerging technologies and platforms

Apache Kafka Connect represents a transformative approach to data integration, bringing standardization and simplicity to the complex challenge of connecting Kafka with external systems. By providing a robust, scalable framework with a rich ecosystem of connectors, Kafka Connect enables organizations to build reliable data pipelines without reinventing the wheel for each integration.

As data ecosystems grow increasingly diverse and distributed, the value of a standardized integration layer becomes ever more apparent. Kafka Connect fills this critical role, allowing enterprises to focus on deriving value from their data rather than struggling with the mechanics of moving it between systems.

Whether you’re implementing change data capture from operational databases, feeding analytical systems with real-time data, or building sophisticated event-driven architectures, Kafka Connect provides the foundation for efficient, reliable data movement. By embracing this powerful framework, organizations can accelerate their data integration initiatives and unlock the full potential of their Kafka investment.
