The Essential Guide to Data Ingestion, ETL, and Reverse ETL: Building Modern Data Pipelines

Introduction

In today’s data-driven business landscape, the ability to efficiently collect, process, and distribute data has become a critical competitive advantage. Organizations must navigate a complex ecosystem of tools and approaches for moving data between systems—from traditional batch ETL processes to real-time streaming and the emerging field of reverse ETL. This comprehensive guide explores the essential tools and technologies that power modern data pipelines, helping you understand which solutions best fit your organization’s unique data integration needs.

Understanding Modern Data Movement

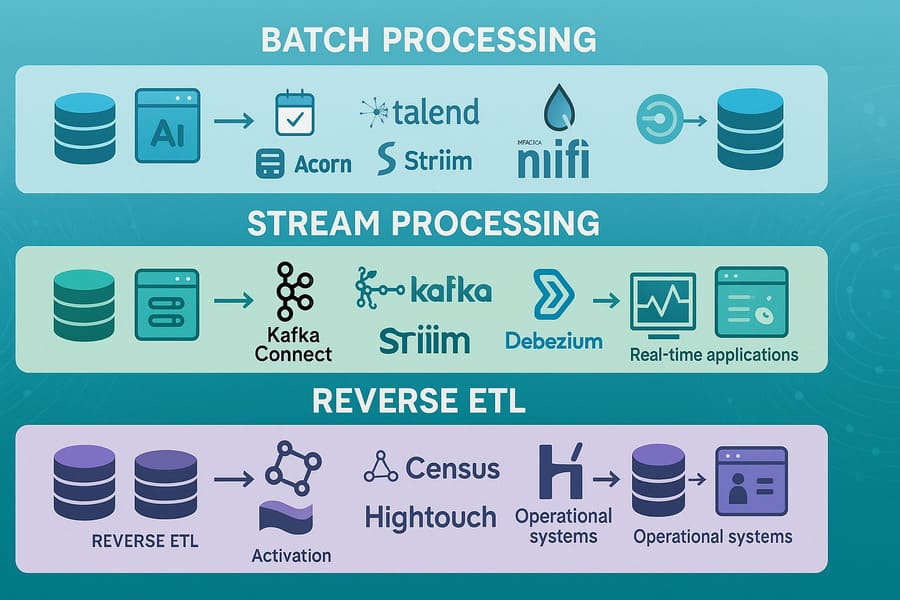

The data integration landscape has evolved dramatically over the past decade. What began as simple batch-oriented Extract, Transform, Load (ETL) processes has expanded into a diverse ecosystem that includes:

- Batch processing: Traditional scheduled data movement with thorough transformation

- Stream processing: Real-time data collection and processing for immediate insights

- Reverse ETL: Moving processed data from warehouses back to operational systems

Each approach serves different use cases and business requirements, often complementing rather than replacing one another in a comprehensive data strategy.

Batch Processing Tools: The Foundation of Data Integration

Batch processing remains the backbone of many data integration strategies, offering reliable, scheduled data movement with comprehensive transformation capabilities.

Apache NiFi: Data Flow Automation and Management

Apache NiFi provides an intuitive web-based interface for designing, controlling, and monitoring data flows. Built on the concepts of flow-based programming, NiFi excels at automating the movement of data between diverse systems.

Key strengths:

- Visual workflow design with drag-and-drop functionality

- Extensive component library for various data sources and destinations

- Data provenance for complete lineage tracking

- Fault-tolerant architecture with automated recovery

- Scalable from edge devices to enterprise clusters

Ideal for: Organizations seeking a flexible, open-source solution for complex data routing with strong governance requirements.

Talend: Enterprise Data Integration Platform

Talend offers a comprehensive suite of integration tools built around a visual development environment. Its code-generation approach provides both accessibility and performance for enterprise-scale integration needs.

Key strengths:

- Unified platform for batch, real-time, and API integration

- Extensive connector library for hundreds of applications and databases

- Built-in data quality and governance capabilities

- Robust metadata management

- Deployment flexibility across on-premises and cloud environments

Ideal for: Large enterprises with diverse integration requirements that need a consolidated platform with enterprise support.

Informatica PowerCenter: Enterprise Data Integration and Management

PowerCenter stands as one of the most established enterprise ETL platforms, known for its reliability and comprehensive features for large-scale data integration.

Key strengths:

- Mature, proven architecture for mission-critical workloads

- Advanced transformation capabilities for complex business logic

- Strong metadata management and governance features

- Robust error handling and recovery mechanisms

- Enterprise-grade security and compliance controls

Ideal for: Large organizations with complex data integration requirements, particularly in highly regulated industries.

Microsoft SSIS: SQL Server Integration Services for ETL Processes

As part of the SQL Server ecosystem, SSIS provides a powerful ETL tool that integrates seamlessly with other Microsoft data technologies.

Key strengths:

- Deep integration with SQL Server and Azure data services

- Familiar interface for organizations using Microsoft technologies

- Comprehensive transformation capabilities

- Excellent performance for SQL Server-centric workflows

- Strong debugging and monitoring tools

Ideal for: Organizations heavily invested in the Microsoft data stack looking for cost-effective integration.

Pentaho Data Integration (Kettle): Open-source ETL Tool

Pentaho Data Integration (Kettle) offers a powerful yet accessible approach to data integration through its visual interface and extensive component library.

Key strengths:

- Open-source foundation with commercial support options

- Intuitive visual ETL designer (Spoon)

- Extensive transformation capabilities

- Support for big data technologies

- Flexible deployment options

Ideal for: Organizations seeking a cost-effective ETL solution with a balance of usability and power.

Fivetran: Fully Managed Data Pipeline Service

Fivetran has revolutionized data pipeline management with its zero-maintenance, fully managed approach to data integration.

Key strengths:

- Automated schema detection and adaptation

- Pre-built connectors for hundreds of data sources

- Continuous synchronization with minimal configuration

- Self-healing connections that recover from errors

- Focus on ELT (Extract, Load, Transform) for modern data warehouses

Ideal for: Companies seeking to minimize data engineering overhead with a reliable, managed solution.

Matillion: Cloud-native Data Integration for Cloud Data Warehouses

Matillion provides a purpose-built integration platform designed specifically for cloud data warehouses like Snowflake, BigQuery, and Redshift.

Key strengths:

- Native optimization for cloud data warehouse platforms

- Visual transformation builder with advanced capabilities

- Data orchestration and scheduling

- Push-down processing leveraging warehouse compute

- Balance of accessibility and power for complex transformations

Ideal for: Organizations building cloud data warehouse environments seeking efficient, purpose-built integration.

Stitch: Simple, Extensible ETL Service

Stitch offers a straightforward, developer-friendly approach to data integration with its focus on simplicity and reliability.

Key strengths:

- Quick setup with minimal configuration

- Focus on data extraction and loading (EL)

- Support for common data sources and warehouses

- Open-source Singer specification for extensibility

- Transparent, consumption-based pricing

Ideal for: Small to mid-sized organizations looking for a straightforward, reliable solution for data warehouse loading.

Stream Processing Tools: Real-time Data Integration

As business requirements increasingly demand real-time insights, stream processing technologies have become essential components of modern data architectures.

Apache Kafka Connect: Framework for Connecting Kafka with External Systems

Kafka Connect extends the powerful Apache Kafka streaming platform with standardized connectors for integrating with external systems.

Key strengths:

- Seamless integration with Kafka’s distributed architecture

- Fault-tolerant, scalable connector framework

- Extensive ecosystem of source and sink connectors

- Schema management and evolution

- Exactly-once delivery semantics

Ideal for: Organizations building event-driven architectures around Apache Kafka as a central nervous system.

Striim: Real-time Data Integration and Streaming Analytics

Striim combines real-time data integration with in-memory stream processing for immediate analytical insights.

Key strengths:

- End-to-end platform for collection, processing, and delivery

- Low-impact change data capture from major databases

- In-memory stream processing with SQL-based analytics

- Rich visualization capabilities

- Support for both streaming and batch processing patterns

Ideal for: Enterprises requiring immediate insights from operational data with minimal latency.

Debezium: Change Data Capture (CDC) for Streaming Database Changes

Debezium specializes in change data capture, converting database transaction logs into standardized event streams.

Key strengths:

- Non-intrusive capture from database transaction logs

- Support for major databases including PostgreSQL, MySQL, SQL Server, and Oracle

- Integration with Apache Kafka ecosystem

- Exactly-once processing guarantees

- Preservation of transaction boundaries

Ideal for: Organizations implementing event-driven architectures based on database changes.

Confluent Platform: Enterprise-ready Event Streaming Platform

Built around Apache Kafka, Confluent Platform provides a comprehensive, enterprise-grade solution for event streaming.

Key strengths:

- Complete event streaming platform with enhanced Kafka

- Extensive connector ecosystem

- Schema Registry for data governance

- ksqlDB for stream processing with SQL

- Advanced security, monitoring, and management

Ideal for: Enterprises building business-critical event streaming infrastructure requiring security, reliability, and support.

StreamSets: Dataflow Performance Management Platform

StreamSets offers a unique approach to data integration focused on resilience to change and comprehensive dataflow management.

Key strengths:

- Visual pipeline design with real-time data preview

- Smart data drift handling for schema changes

- Comprehensive monitoring and performance management

- Support for both batch and streaming patterns

- Dataflow-as-code approach for DevOps integration

Ideal for: Organizations requiring resilient data pipelines that can adapt to changing schemas and sources.

Reverse ETL: Activating Data Warehouse Insights

The newest frontier in data integration, reverse ETL addresses the critical challenge of moving processed data from warehouses back to operational systems where it can drive action.

Omnata: Integration Platform for Salesforce and Snowflake

Omnata specializes in connecting Salesforce with Snowflake, enabling bidirectional data flow between CRM and the data warehouse.

Key strengths:

- Native Salesforce integration using Lightning components

- Direct Snowflake connectivity without middleware

- Real-time and batch synchronization options

- Embedded analytics capabilities

- Respect for Salesforce security model

Ideal for: Organizations heavily invested in both Salesforce and Snowflake seeking seamless integration.

Census: Operational Analytics Platform for Syncing Data to Business Tools

Census pioneered the reverse ETL category with its focus on synchronizing warehouse data to operational systems.

Key strengths:

- Extensive destination support for sales, marketing, and customer success tools

- SQL-based data modeling

- Visual sync builder for non-technical users

- Comprehensive monitoring and alerting

- Bi-directional syncing capabilities

Ideal for: Data-driven organizations looking to operationalize warehouse insights across business tools.

Hightouch: Data Activation Platform

Hightouch enables teams to leverage data warehouse insights in their everyday operational tools through its data activation platform.

Key strengths:

- Flexible audience building with visual or SQL-based models

- Extensive destination support

- Sophisticated identity resolution

- Incremental sync optimization

- Visual, no-code interfaces for business users

Ideal for: Organizations seeking to democratize data access and activate warehouse insights.

Polytomic: Enterprise Data Syncing Platform

Polytomic offers enterprise-grade data synchronization across the organization’s application ecosystem.

Key strengths:

- Comprehensive connectivity for enterprise applications

- Bidirectional sync capabilities

- Enterprise security and governance features

- Advanced transformation during synchronization

- Flexible deployment options including on-premises

Ideal for: Large enterprises requiring secure, scalable data synchronization across complex application landscapes.

Building a Comprehensive Data Integration Strategy

Modern organizations typically require a mix of batch, streaming, and reverse ETL technologies to create a complete data integration architecture. When designing your strategy, consider these key factors:

Data Latency Requirements

- Batch processing: Suitable for daily or hourly updates where immediate action isn’t critical

- Stream processing: Essential for real-time analytics, monitoring, and immediate decision-making

- Reverse ETL: Important for operational systems that need frequent updates from analytical data

Technical Expertise and Resources

- Managed services (like Fivetran and Stitch) minimize engineering overhead

- Visual platforms (like NiFi and Matillion) balance accessibility with customization

- Framework approaches (like Kafka Connect) offer maximum flexibility but require more expertise

Scale and Complexity

- Enterprise platforms (like Informatica and Talend) excel at complex, large-scale integration

- Specialized tools (like Debezium and Hightouch) address specific integration patterns efficiently

- Cloud-native solutions (like Matillion) optimize for modern cloud data warehouses

Conclusion

As data volumes continue to grow and business requirements demand ever-faster insights, building an effective data integration architecture has become essential for competitive advantage. By understanding the landscape of batch processing, stream processing, and reverse ETL tools, organizations can create a comprehensive strategy that enables data to flow seamlessly between systems—ensuring that valuable insights are available wherever decisions are made.

Whether you’re just beginning your data integration journey or looking to modernize existing processes, this guide provides a foundation for evaluating and selecting the right tools for your specific requirements. By thoughtfully combining these technologies, you can build a flexible, scalable data pipeline architecture that evolves with your organization’s needs.

Hashtags

#DataIntegration #ETL #ReverseETL #DataEngineering #StreamProcessing #BatchProcessing #DataPipelines #DataWarehouse #RealTimeData #ApacheKafka #ApacheNiFi #Fivetran #Matillion #Striim #Debezium #Census #Hightouch #ModernDataStack #DataActivation #ChangeDataCapture