Designing a Well-Architected Databricks Lakehouse: Ten Decisions That Matter

Why this matters

You’ve got Spark, Delta tables, and a dozen pipelines—and yet queries are slow, costs drift, and governance is messy. A well-architected Lakehouse doesn’t happen by accident. It’s a set of opinionated decisions you make up-front and enforce over time. Below are the ten choices that move the needle for mid-level data engineers building on Databricks.

The Ten Decisions

1) Data Format & Table Design: Delta Lake first

- Default to Delta for ACID, time travel, schema evolution, and fast reads.

- Table types: bronze (raw), silver (validated), gold (serving).

- Partitioning: only on low-cardinality, high-selectivity columns you actually filter by (e.g.,

ingest_date,event_date,country). - Indexing: use Z-ORDER to co-locate filter columns (e.g.,

customer_id,order_id) for large tables.

OPTIMIZE sales_gold ZORDER BY (customer_id, order_date);

Pitfalls: Over-partitioning (thousands of small folders) kills performance; Z-ORDER the columns you filter on, not every column someone might filter on.

2) Medallion Architecture & Data Contracts

- Bronze → Silver → Gold forces hygiene and separation of concerns.

- Attach data contracts at the silver boundary: define schema, semantics, SLAs, PII tags, and quality rules.

- Gold is for domain-oriented, analytics-ready models (star/snowflake).

Pitfalls: Skipping silver leads to brittle gold tables that fix data quality in the last mile. Don’t.

3) Catalog & Governance with Unity Catalog

- Centralize permissions, lineage, data masking, and audits in Unity Catalog.

- Adopt three-level naming:

catalog.schema.tablewith clear domains (FINANCE,SALES,MARKETING). - Use Row/Column-Level Security and data classification tags; restrict PII at the column.

Pitfalls: Mixing hive metastore and Unity Catalog causes chaos. Pick UC and migrate deliberately.

4) Ingestion Pattern: Auto Loader (cloudFiles) by default

- For files in object storage, Auto Loader handles schema inference, incremental discovery, and exactly-once semantics.

df = (spark.readStream.format("cloudFiles")

.option("cloudFiles.format", "json")

.load("s3://raw/transactions/"))

df.writeStream.format("delta").option("checkpointLocation","s3://chk/tx").table("bronze.transactions")

- For CDC or databases, choose partner connectors or native ingestion services and still land Bronze as Delta.

Pitfalls: DIY file listings or ad-hoc notebooks lead to missed files and duplicates.

5) Pipelines & Orchestration: DLT & Workflows

- Use Delta Live Tables (DLT) for declarative pipelines, expectations, and automatic retries/backfills.

- Use Workflows (jobs) to schedule notebooks, SQL, and DLT with robust dependencies.

DLT example (SQL):

CREATE LIVE TABLE silver_orders

TBLPROPERTIES ("quality" = "silver")

AS

SELECT * FROM LIVE.bronze_orders

WHERE _rescued_data IS NULL;

Pitfalls: Cron-style scripts without lineage or quality rules make debugging painful. Prefer DLT + expectations.

6) Streaming vs Batch: choose by semantics, not hype

- If the business needs sub-minute SLAs or event-time correctness, use structured streaming.

- If SLAs are hourly/daily and sources are batch, stay batch.

- You can unify with Delta: streaming in, batch out—or vice versa.

When to choose which

| Scenario | Choose | Why |

|---|---|---|

| Real-time fraud scoring | Streaming | Low latency + stateful aggregations |

| Hourly BI dashboards | Batch | Simple, cheaper |

| Clickstream sessionization | Streaming | Event time + watermarks |

| Daily finance close | Batch | Deterministic windows |

Pitfalls: Always-streaming is not a strategy; it’s an invoice.

7) Compute Strategy: Clusters vs SQL Warehouses

- All-purpose/Job clusters: flexible Spark with autoscaling; great for ETL/ML.

- SQL Warehouses: serverless or pro; optimized for BI concurrency, governed by UC, photon-accelerated.

Quick comparison

| Workload | Prefer |

|---|---|

| ELT with DLT, heavy transforms | Job cluster |

| Ad-hoc notebooks | All-purpose cluster |

| Dashboard/BI queries | SQL Warehouse |

| Mixed ETL + ad-hoc | Two endpoints: job + small all-purpose |

Pitfalls: One giant all-purpose cluster for everything → noisy neighbors, unpredictable costs.

8) Performance & Cost Controls

- Turn on Photon where possible (ETL and SQL), size clusters from small → up, and enable autoscaling with caps.

- Schedule OPTIMIZE + VACUUM (with retention policy) for your largest tables; compact small files.

- Track unit costs: $ per 1k rows, per job, per dashboard query; kill the worst offenders.

OPTIMIZE clicks_silver WHERE event_date >= DATE_SUB(CURRENT_DATE, 7);

VACUUM clicks_silver RETAIN 168 HOURS;

Pitfalls: Blind autoscaling, never compacting, and leaving default retention forever.

9) Security & Networking

- Prefer serverless where it fits; otherwise use VPC-to-VPC peering/PrivateLink, credential passthrough, and table ACLs in UC.

- Encrypt at rest (cloud default) and enforce workspace-per-environment (Dev, QA, Prod) with separate catalogs.

Pitfalls: Single shared workspace for all tiers; mixing dev experiments with prod data.

10) Observability & Reliability

- Use Lakehouse Monitoring/metrics and native observability (query history, job run logs, lineage).

- Instrument pipelines with event logs and capture SLAs (freshness, completeness, accuracy).

- Alert on late data, failed expectations, skew, small-file explosion.

Pitfalls: Treating the lakehouse like a black box—until an exec asks “why is the dashboard empty?”

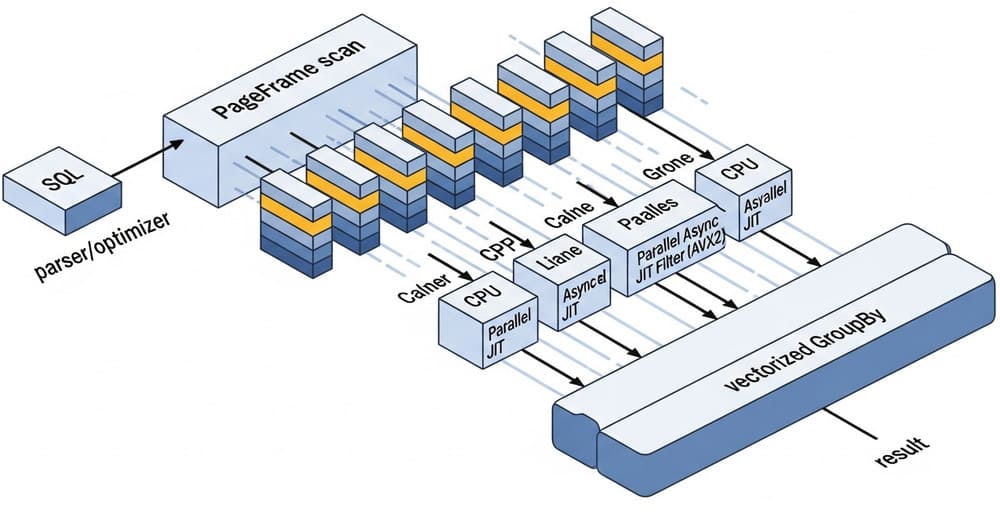

Reference Architecture at a Glance

- Storage: Object store + Delta tables.

- Governance: Unity Catalog (catalog→schema→table), data classification, RLS/CLS.

- Ingestion: Auto Loader to Bronze; connectors/CDC for DBs.

- Processing: DLT for pipeline DAGs; Workflows for orchestration.

- Serving: SQL Warehouses for BI; curated Gold tables per domain.

- Ops: Photon, autoscaling, OPTIMIZE/VACUUM, monitoring, cost budgets.

Example: Bronze→Silver with expectations (DLT, Python)

from dlt import *

@dlt.table(

comment="Validated transactions",

table_properties={"quality":"silver"}

)

@expect_or_drop("valid_amount", "amount >= 0")

def silver_transactions():

return dlt.read_stream("bronze_transactions").select(

"txn_id","customer_id","amount","currency","txn_ts"

)

This enforces data quality at the pipeline edge and keeps gold clean.

Common Pitfalls (and how to avoid them)

- Small-file hell: Consolidate with Auto Loader + OPTIMIZE, set sensible trigger intervals.

- Schema drift silently breaking jobs: Enable expectations and schema evolution with guardrails (fail on incompatible changes).

- One catalog to rule them all: Use multiple catalogs by environment and domain; keep blast radius small.

- Ad-hoc notebooks as production: Wrap in Workflows, version control, and code reviews.

- No ownership: Assign table and pipeline owners; publish SLAs in README tables.

Best Practices Checklist

- Delta everywhere; Z-ORDER on primary filters.

- Medallion layers with contracts at silver.

- Unity Catalog for all permissions & lineage.

- Auto Loader for files, DLT for pipelines.

- Clear split: clusters for ETL/ML, SQL Warehouses for BI.

- Photon + autoscaling + job caps.

- OPTIMIZE/VACUUM as scheduled maintenance.

- Private networking + workspace per env.

- Monitor freshness, volume, quality; alert on drift.

- Cost KPIs per pipeline and dashboard.

Conclusion & Takeaways

A Databricks Lakehouse scales when you decide—not when you hope. Commit to Delta, codify medallions and contracts, centralize governance in Unity Catalog, and use DLT/Workflows to make pipelines observable and repeatable. Split compute by workload, keep security tight, and be ruthless on performance and cost. Do these ten things, and you’ll get a lakehouse that’s fast, safe, and affordable.

Call to action:

Want a quick architecture review? Share your current layout (catalogs, clusters, and 3–5 critical tables). I’ll map gaps to this checklist and suggest a 30-day hardening plan.

Internal link ideas (topics you might interlink on your site)

- Delta Lake compaction strategies (OPTIMIZE, Z-ORDER, file sizing)

- Unity Catalog: RBAC, row/column security, and masking patterns

- DLT vs. Workflows: when to prefer each for pipelines

- Streaming design with watermarking and stateful aggregations

- FinOps for Databricks: unit economics and job budgeting

- Data contracts at the silver layer: schema governance in practice

Image prompt

“A clean, modern lakehouse architecture diagram for Databricks showing object storage with Delta tables (bronze/silver/gold), Unity Catalog governance, Auto Loader ingestion, DLT pipelines, SQL Warehouses for BI, and observability—minimalistic, high contrast, 3D isometric style.”

Tags

#Databricks #Lakehouse #DeltaLake #UnityCatalog #DataEngineering #DLT #Spark #DataGovernance #StreamingData #FinOps

Leave a Reply