Designated Timestamp & Partitions in QuestDB: How to Nail Schema Design for Fast Time-Series Analytics

Meta description (156 chars):

Learn how to choose the right designated timestamp and partition strategy in QuestDB. Pragmatic rules, examples, pitfalls, and SQL you can use today.

Why this matters (quick story)

You’ve got a firehose of metrics—devices, services, or trades—pouring into QuestDB. Queries are fine at first, then dashboards slow, retention chores hurt, and backfills get scary. Nine times out of ten, the root cause is schema: the designated timestamp and partitions. Get these right and most performance problems vanish.

What “designated timestamp” means (and why you care)

In QuestDB you elect one timestamp column as the time axis for the table. That choice unlocks the time-series engine: partition pruning, interval scans, ASOF/LATEST semantics, and more. Only timestamp or timestamp_ns columns can be designated, and there’s exactly one per table. (QuestDB)

You can also set a dynamic timestamp in a query with timestamp(column)—handy if you need to “pivot” to a different time column for analysis without changing the table. (QuestDB)

Create example:

CREATE TABLE sensor_readings (

ts TIMESTAMP, -- designated timestamp

device_id SYMBOL CAPACITY 1_000_000 NOCACHE,

temperature DOUBLE,

status SYMBOL,

location STRING

) TIMESTAMP(ts) PARTITION BY DAY WAL;

TIMESTAMP(ts)elects the designated timestamp.PARTITION BY DAYlays out files by day.WAL(write-ahead log) is the default for new tables and is what you want for reliability and ingestion concurrency. (See “Write-Ahead Log” concept.) (QuestDB)

Partitions: how QuestDB physically organizes your time

QuestDB splits data by time intervals: NONE, YEAR, MONTH, WEEK, DAY, HOUR. Partitioning is only available when you have a designated timestamp. ILP-created tables default to DAY (configurable via line.default.partition.by). (QuestDB)

Partitions are a performance superpower:

- The optimizer skips entire files for out-of-range queries (partition pruning).

- Retention is as simple as dropping old partitions.

- Out-of-order ingestion is handled efficiently at partition boundaries. (QuestDB)

Introspect partitions:

SHOW PARTITIONS FROM sensor_readings;

-- or

SELECT * FROM table_partitions('sensor_readings');

(QuestDB)

Choosing the right partition grain (practical rules)

Pick the coarsest partition that still prunes effectively for your queries. Too fine (e.g., HOUR) → lots of tiny files and higher overhead. Too coarse (e.g., YEAR) → poor pruning.

Decision table (rules of thumb):

| Typical query window | Ingestion rate | Cardinality (keys/tags) | Recommended PARTITION BY | Rationale |

|---|---|---|---|---|

| ≤ 24h (dashboards, alerts) | High (100k+ rows/sec) | High | DAY | Great pruning; manageable file counts. |

| 1–8 hours (low-latency ops) | Very high | Very high | HOUR | Enables tight pruning and parallelism; watch file counts. |

| 7–90 days (BI, finops) | Moderate | High | WEEK or MONTH | Coarser partitions = fewer files + easy retention. |

| ≥ 1 year (archives) | Low | Low | YEAR | Minimal overhead; queries usually pre-filter by year. |

Use SHOW PARTITIONS in staging to see how many files you create and adjust. (Concepts and “Design for performance” discuss interval choice and record counts per partition.) (QuestDB)

Symbols vs strings: model high-cardinality tags correctly

QuestDB’s SYMBOL stores repetitive strings via an internal dictionary (int↔string), boosting scan and filter speed and saving disk. You can set CAPACITY and CACHE/NOCACHE per column; index symbols when you filter on them often. (QuestDB)

Quick rules:

- Use

SYMBOLfor tags likedevice_id,region,status. - Use

STRINGfor free-text or large, unique values (location, error messages). - For very high cardinality (100k+ distinct), consider

NOCACHEto avoid heap pressure; still fast due to memory-mapped lookups. (QuestDB)

Query patterns that benefit from the designated timestamp

- Interval scans:

WHERE ts BETWEEN now()-24h AND now()prunes partitions before scanning. (QuestDB) - Time-series joins:

ASOF JOINuses the table’s designated timestamp by default to match “closest preceding” rows. (QuestDB) - Latest per key:

LATEST ON ts PARTITION BY device_idgets last observations cleanly. (QuestDB)

Real example: rolling up minute stats and querying

Load synthetic data

CREATE TABLE metrics (

ts TIMESTAMP,

host SYMBOL CAPACITY 10000 NOCACHE,

cpu DOUBLE,

mem DOUBLE

) TIMESTAMP(ts) PARTITION BY DAY WAL;

INSERT INTO metrics

SELECT

timestamp_sequence('2025-01-01T00', 1000L) as ts, -- 1 row/sec

rnd_symbol(100, 0, 9999, 0) as host,

rnd_double(0.0, 100.0, 2) as cpu,

rnd_double(0.0, 100.0, 2) as mem

FROM long_sequence(10_000_000);

Downsample with SAMPLE BY to 1-minute rollups (materialize if it’s a hot query):

-- Ad-hoc:

SELECT ts, host, avg(cpu) AS cpu_avg, avg(mem) AS mem_avg

FROM metrics

SAMPLE BY 1m ALIGN TO CALENDAR

FILL(LINEAR) BY host;

-- Materialized view (optional):

CREATE MATERIALIZED VIEW metrics_1m

AS SELECT ts, host, avg(cpu) cpu_avg, avg(mem) mem_avg

FROM metrics SAMPLE BY 1m ALIGN TO CALENDAR FILL(LINEAR) BY host;

Because ts is designated and the table is partitioned, the engine prunes day partitions and executes vectorized scans quickly. (See designated timestamp, partitions, and SQL reference.) (QuestDB)

Retention that won’t wake you at 2am

Retention is deleting time ranges. With partitions, it’s one command and non-blocking:

-- Drop everything before 2025-06-01:

ALTER TABLE metrics DROP PARTITION WHERE ts < '2025-06-01';

Dropping partitions is designed to be non-blocking; you get atomicity per partition, and it’s the recommended retention path. (QuestDB)

Important: You cannot change a table’s partitioning after creation. If you chose poorly (we’ve all done it), create a new table with the right

PARTITION BY, copy data, then swap. Plan the grain up front. (QuestDB)

Common pitfalls (and how to avoid them)

- Wrong grain (too fine):

HOURfor slow data → too many tiny partitions = overhead. PreferDAYunless you truly query small hour slices frequently. (QuestDB) - No designated timestamp: You lose partitioning, pruning, ASOF/LATEST defaults. Always set

TIMESTAMP(col)at creation. (QuestDB) - Storing tags as

STRING: Switch toSYMBOLwith proper capacity; considerNOCACHEfor huge cardinalities; index hot filters. (QuestDB) - Relying on PGWire for heavy ingest: Prefer ILP for raw throughput; ILP-created tables default to

PARTITION BY DAY(configurable). (QuestDB) - Retention with row deletes: Don’t. Use

ALTER TABLE … DROP PARTITIONinstead. (QuestDB)

Quick reference: patterns you’ll use weekly

- Create with day partitions

CREATE TABLE t (...) TIMESTAMP(ts) PARTITION BY DAY WAL; - Check partition layout

SHOW PARTITIONS FROM t; - Latest per symbol

SELECT LATEST ON ts PARTITION BY device_id * FROM t; - Time-proximity join

SELECT * FROM a ASOF JOIN b ON a.ts = b.ts AND a.device_id = b.device_id; - Dynamic timestamp at query time

SELECT * FROM t TIMESTAMP(other_ts);

References for these behaviors: designated timestamp, partitions, ASOF/LATEST docs. (QuestDB)

Internal link ideas (official)

- Designated timestamp — concept, properties, dynamic timestamp. (QuestDB)

- Partitions — available intervals, defaults, SHOW PARTITIONS. (QuestDB)

- CREATE TABLE — syntax for

TIMESTAMP(col)andPARTITION BY. (QuestDB) - Schema design essentials — best practices for symbols, partitions, TTL, dedup. (QuestDB)

- ALTER TABLE DROP PARTITION — non-blocking retention. (QuestDB)

- Symbol type — capacity, cache, indexing. (QuestDB)

Summary & call-to-action

If you remember just three things:

- Always elect a designated timestamp.

- Choose the coarsest partition that still prunes well for your queries (usually DAY).

- Model tags as SYMBOL, not

STRING, and plan retention with DROP PARTITION.

Want a hands-on follow-up for your stack (ILP vs PGWire, Kafka Connect, or Grafana dashboards)? Tell me your ingest rate, query windows, and tag cardinalities—I’ll propose a concrete schema and benchmark script.

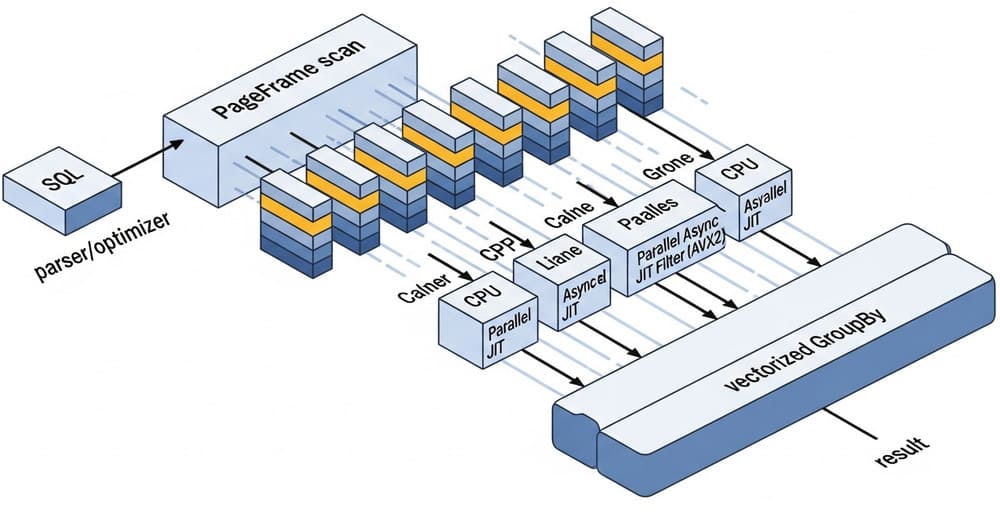

Image prompt (for AI tools)

“A clean, modern architecture diagram of a QuestDB time-series table showing a designated timestamp flowing into partitioned storage (YEAR/MONTH/WEEK/DAY/HOUR), with pruning and retention via DROP PARTITION — minimalistic, high contrast, 3D isometric style.”

Tags

#QuestDB #TimeSeries #DatabaseDesign #Partitions #DesignatedTimestamp #DataEngineering #Performance #SQL #Symbols #Retention

Leave a Reply