Auto Loader vs. DIY Ingestion: Cost, Latency, and Reliability Benchmarks

Why this matters

You’ve got a data lake growing like kudzu: thousands of files per hour, shifting schemas, and SLAs that don’t care it’s 2 a.m. The ingestion choice you make—Databricks Auto Loader or a DIY Spark pipeline—will set your cost curve, your latency floor, and your on-call life. This guide gives you a practical, reproducible way to benchmark both paths and decide like an engineer, not a gambler.

TL;DR (executive summary)

- Latency: Auto Loader in file notification mode ingests new files faster and more predictably than directory listing or hand-rolled scanners; it scales to millions of files/hour with file events. (Databricks documentation)

- Cost: DIY listing burns storage API calls as paths grow; Auto Loader’s file events drastically reduce listing I/O, shifting cost to a small event/queue footprint plus steady compute. (Microsoft Learn)

- Reliability: Auto Loader provides exactly-once semantics with checkpoints and first-class schema inference/evolution; DIY must rebuild these controls. (Databricks documentation)

What we’re comparing

- Auto Loader (cloudFiles)

  - Modes: directory listing (simple) vs. file notification (low-latency, high-scale). (Microsoft Learn)

  - Built-ins: incremental discovery, schema tracking, backfill, and state inspection via `cloud_files_state`. (Databricks documentation)

- DIY ingestion

  - Typical pattern: your own object store listings, dedup state, schema handling, and retries glued together in Spark Structured Streaming.

Benchmark design you can actually run

Goal: measure freshness latency, throughput, $ per ingested TB, and data correctness under production-like load.

Datasets

- Small JSON/CSV (1–10 KB), medium Parquet (5–100 MB), large Parquet (0.5–5 GB).

- Arrival patterns: steady (Poisson), bursts (spikes 10×), and backfills.
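To make the arrival patterns reproducible, a load generator can precompute when each file should land. The sketch below is one possible way to do that, assuming a piecewise-constant Poisson process; the function name `arrival_times`, its parameters, and the burst windows are illustrative choices, not part of any Databricks API.

```python
import random


def arrival_times(rate_per_min, minutes, burst_windows=(), burst_factor=10, seed=0):
    """Return sorted arrival offsets (seconds from start) for a Poisson process.

    burst_windows is a sequence of (start_min, end_min) pairs during which the
    arrival rate is multiplied by burst_factor (10x matches the spikes above).
    rate_per_min must be positive.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs see the same schedule
    times = []
    for minute in range(minutes):
        in_burst = any(start <= minute < end for start, end in burst_windows)
        rate_per_sec = rate_per_min * (burst_factor if in_burst else 1) / 60.0
        t = minute * 60.0
        end_of_minute = t + 60.0
        while True:
            # Exponential gaps between arrivals give Poisson counts per interval;
            # restarting at each minute boundary is valid because the
            # exponential distribution is memoryless.
            t += rng.expovariate(rate_per_sec)
            if t >= end_of_minute:
                break
            times.append(t)
    return times


steady = arrival_times(rate_per_min=60, minutes=10)
bursty = arrival_times(rate_per_min=60, minutes=10, burst_windows=[(2, 4)])
```

A writer process would then sleep until each offset and drop one file into the landing path. A backfill can be simulated separately by copying a large historical batch in at once.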

Scenarios

- Auto Loader – directory listing

- Auto Loader – file notification

- DIY – custom lister + Structured Streaming

Metrics

- Discovery latency: file landed → first offset seen

- End-to-end latency: landed → committed to Delta

- Throughput: files/hour & MB/s

- Cost: (a) storage API ops + notifications, (b) cluster compute, (c) storage of checkpoints/states

- Correctness: exactly-once (no dup/no miss) and schema drift handling
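The two latency metrics reduce to timestamp differences per file. As a minimal sketch, assuming you can collect a landed, first-seen, and committed timestamp for every file (how you capture them depends on your setup), percentiles can be computed as follows. The helper names and the nearest-rank percentile method are choices made for this example.

```python
import math
from datetime import datetime, timedelta


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def latency_summary(events):
    """events: iterable of (landed_at, first_seen_at, committed_at) datetimes."""
    events = list(events)
    discovery = sorted((seen - landed).total_seconds() for landed, seen, _ in events)
    end_to_end = sorted((done - landed).total_seconds() for landed, _, done in events)
    return {
        "discovery_p50_s": percentile(discovery, 50),
        "discovery_p95_s": percentile(discovery, 95),
        "end_to_end_p50_s": percentile(end_to_end, 50),
        "end_to_end_p95_s": percentile(end_to_end, 95),
    }


# Synthetic example: four files discovered after 1-4 s, committed after 2-8 s.
t0 = datetime(2024, 1, 1, 12, 0, 0)
sample = [
    (t0, t0 + timedelta(seconds=s), t0 + timedelta(seconds=2 * s))
    for s in (1, 2, 3, 4)
]
summary = latency_summary(sample)
```

Reporting p95 alongside p50 matters here because burst and backfill behavior tends to show up in the tail rather than the median.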

Controls

- Same cluster size/runtime, same Delta target, identical partitioning.

- Run each test for 60–120 minutes per scenario, repeat 3×, discard warm-up.
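Discarding warm-up and combining the three repeats can be done in a few lines. This is a sketch under assumptions: each run is a list of `(seconds_since_run_start, latency_s)` samples, and the 600-second warm-up window is a placeholder you should tune to your cluster's start-up behavior.

```python
from statistics import mean, median


def drop_warmup(samples, warmup_s):
    """Keep latencies recorded at or after warmup_s seconds into the run."""
    return [latency for elapsed, latency in samples if elapsed >= warmup_s]


def summarize_scenario(runs, warmup_s=600):
    """Combine repeated runs of one scenario into a single comparable figure."""
    per_run = [mean(drop_warmup(run, warmup_s)) for run in runs]
    return {
        "per_run_mean_s": per_run,
        "median_of_runs_s": median(per_run),
        # A wide spread suggests the runs were not measured under like conditions.
        "spread_s": max(per_run) - min(per_run),
    }


runs = [
    [(0, 100.0), (700, 2.0), (800, 4.0)],  # first sample falls in warm-up
    [(10, 50.0), (900, 5.0)],
    [(650, 1.0)],
]
report = summarize_scenario(runs)
```

Taking the median across repeats keeps one noisy run from deciding the comparison.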

How to stand up each option (minimal but real)

Auto Loader: directory listing (baseline)

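    # `spark` is the session a Databricks notebook provides.
    # Directory listing is Auto Loader's default discovery mode, so no extra option is needed.
    df = (spark.readStream.format("cloudFiles")
          .option("cloudFiles.format", "json")
          .option("cloudFiles.schemaLocation", "s3://…/chk/_schemas")  # inferred schemas are tracked here
          .load("s3://…/landing/json/"))

    # Append to the Delta target; the checkpoint records which files were already ingested.
    (df.writeStream
       .option("checkpointLocation", "s3://…/chk/ingest-json")
       .toTable("raw.json_events"))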

- Pros: fastest to start; no extra cloud permissions beyond data access.

- Cons: listing cost/latency grows with path size. (Microsoft Learn)

Auto Loader: file notification (recommended)

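    # File events: new files are discovered from storage events rather than repeated listings.
    # (The classic notification mode is enabled with cloudFiles.useNotifications instead.)
    df = (spark.readStream.format("cloudFiles")
          .option("cloudFiles.format", "json")
          .option("cloudFiles.schemaLocation", "s3://…/chk/_schemas")
          .option("cloudFiles.useManagedFileEvents", "true")  # enable file events
          .load("s3://…/landing/json/"))

    # When benchmarking, give each scenario its own checkpoint, schema location, and target table.
    (df.writeStream
       .option("checkpointLocation", "s3://…/chk/ingest-json")
       .toTable("raw.json_events"))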

- Why this wins: Databricks reports significant performance improvements at scale, and exactly-once guarantees hold as millions of files arrive per hour. (Databricks documentation)

DIY ingestion: custom listing + dedupe (illustrative)

    from pyspark.sql.functions import input_file_name, current_timestamp

    # Assume you've built a dedupe store and a schema loader (not shown); `spark` is the notebook session.
    # DataStreamReader.load() takes one path or glob, not a Python list, so the stream points at
    # the landing prefix and Spark's plain file source re-lists it on every micro-batch.
    # A custom list_new_objects() lister would instead feed batch reads:
    # spark.read.format("text").load(paths), with offsets tracked by your own code.
    df = (spark.readStream.format("text")  # plain file source; "cloudFiles" is off by choice, this is DIY
          .load("s3://…/landing/json/"))

    enriched = (df.withColumn("source_file", input_file_name())
                  .withColumn("ingested_at", current_timestamp()))

    (enriched.writeStream
             .option("checkpointLocation", "s3://…/chk/diy")
             .toTable("raw.json_events_diy"))

- Hidden work: race-safe dedupe, retries, idempotency, schema evolution, backfills.
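To give a sense of that hidden work, here is a sketch of just the "what is new since last time" step a custom lister needs. Object stores return keys in name order rather than arrival order, so a DIY lister typically scans the whole prefix and filters by modification time. The function `select_new`, the watermark scheme, and the tie-handling set are assumptions for illustration, not an established API.

```python
def select_new(listing, watermark, keys_at_watermark):
    """Pick objects not yet handed to the pipeline.

    listing: iterable of (key, last_modified) for the WHOLE prefix.
    watermark: highest last_modified already processed.
    keys_at_watermark: keys already processed whose timestamp equals the
        watermark (timestamps are coarse, so ties are common).
    Returns (new_keys, new_watermark, new_keys_at_watermark).
    """
    fresh = [
        (key, ts)
        for key, ts in listing
        if ts > watermark or (ts == watermark and key not in keys_at_watermark)
    ]
    if not fresh:
        return [], watermark, set(keys_at_watermark)
    new_watermark = max(ts for _, ts in fresh)
    at_new = {key for key, ts in fresh if ts == new_watermark}
    if new_watermark == watermark:
        # Watermark did not advance: keep remembering the earlier ties too.
        at_new |= set(keys_at_watermark)
    return sorted(key for key, _ in fresh), new_watermark, at_new


# Integers stand in for timestamps in this example.
listing = [("a", 1), ("b", 2), ("c", 2)]
new_keys, wm, ties = select_new(listing, watermark=0, keys_at_watermark=set())
```

Even this small piece has a known gap: an object that appears late with a timestamp older than the watermark is silently skipped. It also still pays for a full listing on every poll, which is the cost the benchmark is meant to expose.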

Results you should expect (guidance to check your numbers)

- Latency: file notification < directory listing < DIY (listing storm under burst/backfill). (Databricks documentation)

- Throughput: file notification should scale roughly linearly with arrivals; directory listing degrades as directory cardinality climbs; DIY depends on your lister and thread model. (Microsoft Learn)

- Cost:

  - Directory listing: rising storage API calls (LIST requests) as paths grow. (Databricks Community)

  - File notification: modest event/queue costs, fewer LIST ops, more stable compute. (Databricks documentation)

  - DIY: you pay for both your custom listing and the engineering time to keep it correct.
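To turn those cost drivers into the "$ per ingested TB" metric, a simple model adds up API, event, compute, and checkpoint storage costs for one run. The sketch below is a rough model only; the `RunCost` fields and every price in the example are placeholders, so substitute the rates from your own cloud bill.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # used to prorate monthly storage pricing to one run


@dataclass
class RunCost:
    list_requests: int        # storage LIST calls observed during the run
    notification_events: int  # file events / queue messages consumed
    compute_hours: float      # billed cluster hours
    checkpoint_gb: float      # size of checkpoint and state storage
    run_hours: float          # wall-clock duration of the run
    ingested_tb: float        # data committed to the target table


def cost_per_tb(run, price_per_1k_list, price_per_1m_events,
                price_per_compute_hour, price_per_gb_month):
    """Total run cost divided by terabytes ingested."""
    api = run.list_requests / 1000 * price_per_1k_list
    events = run.notification_events / 1_000_000 * price_per_1m_events
    compute = run.compute_hours * price_per_compute_hour
    storage = run.checkpoint_gb * price_per_gb_month * run.run_hours / HOURS_PER_MONTH
    return (api + events + compute + storage) / run.ingested_tb


# Placeholder numbers, not measured results or real prices.
run = RunCost(list_requests=2_000_000, notification_events=1_000_000,
              compute_hours=2.0, checkpoint_gb=73.0, run_hours=2.0,
              ingested_tb=2.0)
usd_per_tb = cost_per_tb(run, price_per_1k_list=0.005, price_per_1m_events=0.5,
                         price_per_compute_hour=3.0, price_per_gb_month=0.02)
```

Engineering time for the DIY path is deliberately left out of this model; if you want to include it, add it as a separate line item rather than folding it into compute.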

Note: Databricks officially recommends migrating off directory listing to file events for performance at scale. (Databricks documentation)

Reliability & governance

- Exactly-once and state: Auto Loader maintains discovery state; you can inspect it with `cloud_files_state(checkpoint)` for audits and troubleshooting. DIY must build similar lineage. (Databricks documentation)

- Schema inference & evolution: Auto Loader samples up to 50 GB or 1000 files initially and tracks schemas in `_schemas` under `cloudFiles.schemaLocation`. Ensure target Delta tables evolve in lockstep or writes fail. (Databricks documentation)

- Backfills & cleanup: Use `cloudFiles.backfillInterval` and `cloudFiles.cleanSource` to automate historical catch-up and lifecycle. (Databricks documentation)
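For the benchmark's correctness metric, an exactly-once check can compare what the load generator wrote against what each pipeline recorded. The following is a minimal sketch, assuming you keep a manifest of landed file paths and can export one entry per file load from the target (for example from a source-file column); `exactly_once_report` is a hypothetical helper name.

```python
from collections import Counter


def exactly_once_report(landed_files, ingested_source_files):
    """Compare landed files against file loads recorded by the pipeline.

    ingested_source_files should contain one entry per time a file was
    loaded, so a file loaded twice appears twice.
    """
    landed = set(landed_files)
    loads = Counter(ingested_source_files)
    return {
        "missing": sorted(landed - set(loads)),          # landed, never ingested
        "duplicated": sorted(f for f, n in loads.items() if n > 1),
        "unexpected": sorted(set(loads) - landed),       # ingested, not in manifest
    }


report = exactly_once_report(
    landed_files=["f1.json", "f2.json", "f3.json"],
    ingested_source_files=["f1.json", "f2.json", "f2.json"],
)
# report["missing"] == ["f3.json"], report["duplicated"] == ["f2.json"]
```

A clean run has all three lists empty. Run the check after bursts and backfills in particular, since that is where a hand-rolled lister is most likely to drop or repeat files.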

Comparison table

| Capability | Auto Loader (Directory) | Auto Loader (File Notification) | DIY Spark Ingestion |
|---|---|---|---|
| Discovery | Lists storage path each micro-batch | Event-driven; minimal listing | Your custom listings |
| Latency | Moderate; grows with path size | Low and stable under scale | Variable; bursty |
| Cost drivers | Storage LIST API, compute | Event/queue + steady compute | LIST API + your infra |
| Scale | Good → degrades on huge trees | Excellent; millions of files/hr | As good as you build it |
| Schema handling | Built-in infer/evolve | Built-in infer/evolve | You implement |
| Exactly-once | Yes (checkpoints/state) | Yes (checkpoints/state) | You implement |

(The “millions of files/hr” figure for file notifications comes from the Microsoft Learn / Databricks documentation.)

Best practices (production check-list)

- Prefer file notification mode for anything beyond toy volumes. (Databricks documentation)

- Pin a current Databricks Runtime; directory listing optimizations improve in 9.1+ but file events still win at scale. (Microsoft Learn)

- Always set `cloudFiles.schemaLocation` and checkpoint locations to stable, secure paths. (Databricks documentation)

- Monitor with Streaming Query Listener and query `cloud_files_state` for observability. (Databricks documentation)

- Use Trigger.AvailableNow for deterministic catch-up runs, and rate limiting to protect downstream systems (production guidance). (Microsoft Learn)

- Keep target Delta tables schema-evolution-ready, or your ingestion will error on drift. (Databricks documentation)
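Several of these practices come down to a handful of stream options. One way to keep them consistent across jobs is a small builder that returns the option map, sketched below. The helper `auto_loader_options` and the example paths are hypothetical; the option keys are Auto Loader settings, but check their defaults against the documentation for your runtime.

```python
def auto_loader_options(fmt, schema_location, max_files_per_trigger=None,
                        schema_evolution_mode=None):
    """Build the reader options for an Auto Loader stream as a plain dict."""
    opts = {
        "cloudFiles.format": fmt,
        "cloudFiles.schemaLocation": schema_location,
    }
    if max_files_per_trigger is not None:
        # Rate limit: caps how many files each micro-batch picks up.
        opts["cloudFiles.maxFilesPerTrigger"] = str(max_files_per_trigger)
    if schema_evolution_mode is not None:
        opts["cloudFiles.schemaEvolutionMode"] = schema_evolution_mode
    return opts


opts = auto_loader_options("json", "/hypothetical/chk/_schemas",
                           max_files_per_trigger=500,
                           schema_evolution_mode="addNewColumns")

# On Databricks the dict would be applied roughly like this (not executed here;
# landing_path, checkpoint_path, and target_table are placeholders):
#
# (spark.readStream.format("cloudFiles").options(**opts).load(landing_path)
#       .writeStream
#       .option("checkpointLocation", checkpoint_path)
#       .option("mergeSchema", "true")   # let the Delta target accept new columns
#       .trigger(availableNow=True)      # process the backlog, then stop
#       .toTable(target_table))
```

Keeping the options in one place also makes it easier to confirm that benchmark scenarios differ only in the discovery mode.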

Common pitfalls (and fixes)

- Path explosion (millions of small files in deep partitions) → switch to file notifications; compact small files upstream if possible. (Databricks documentation)

- Silent schema drift → enable evolution, version schemas in `_schemas`, and alert on new columns/types. (Databricks documentation)

- Duplicate loads in DIY → implement idempotent writes keyed by filename + checksum; prefer Auto Loader’s state model. (Databricks documentation)

- Unbounded listing costs → avoid naive recursive listings; move to events. (Databricks Community)
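The "filename + checksum" key for DIY pipelines can be sketched as follows. This is an illustration under assumptions: `load_key` and `InMemoryLedger` are hypothetical names, and the in-memory set stands in for a shared store that supports an atomic conditional write, which a real multi-worker pipeline would need.

```python
import hashlib


def load_key(path, content):
    """Idempotency key: same path and same bytes always give the same key."""
    digest = hashlib.sha256(content).hexdigest()
    return f"{path}:{digest}"


class InMemoryLedger:
    """Stand-in for a durable dedupe store; NOT safe across processes."""

    def __init__(self):
        self._seen = set()

    def claim(self, key):
        """Return True the first time a key is claimed, False afterwards."""
        if key in self._seen:
            return False
        self._seen.add(key)
        return True


ledger = InMemoryLedger()
key = load_key("landing/json/f1.json", b'{"id": 1}')
first_attempt = ledger.claim(key)   # True: safe to write
retry_attempt = ledger.claim(key)   # False: skip, already loaded
```

Including the checksum means a file rewritten in place under the same name is treated as a new load, while a plain retry of the same bytes is skipped.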

Internal link ideas (official docs only)

- “What is Auto Loader?” overview → official Databricks docs.

- “File detection modes” deep dive → official docs (directory listing vs file notifications).

- “Schema inference & evolution with Auto Loader” → official docs.

- “Production guidance & monitoring” → official docs.

Conclusion & takeaways

If your lake is growing or your SLA matters, Auto Loader with file notifications is the rational default: lower discovery latency, better scalability, and fewer edge-case bugs than any DIY scaffold. Use directory listing only for quick starts or low-volume paths. Save DIY for niche constraints where you must control discovery or run outside Databricks.

Call to action: Stand up the three scenarios above, run the benchmark for 60–120 minutes each, and pick the one that hits your SLA at the lowest predictable cost. Then lock it into a job with monitoring on day one.

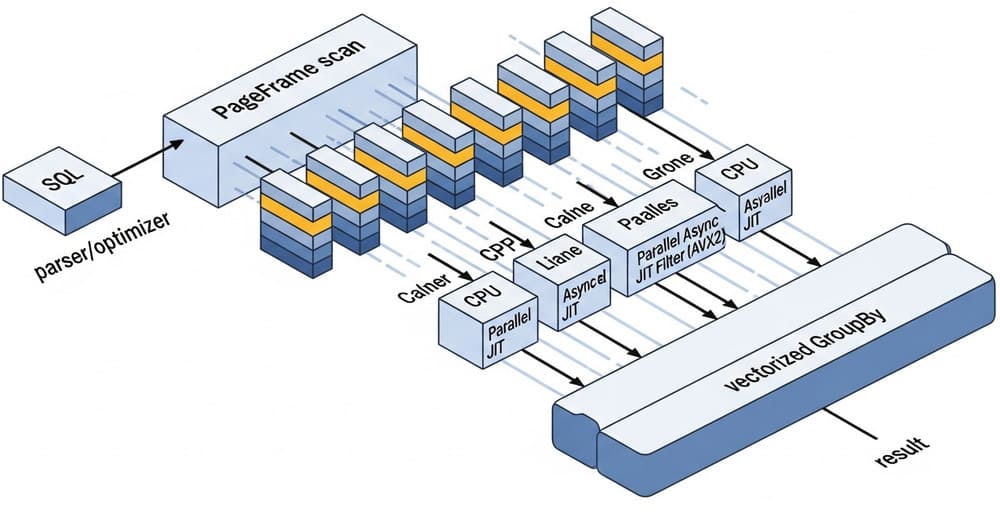
