K-Means Clustering: Unveiling Hidden Patterns in Your Data

In the vast landscape of data science and machine learning, K-means clustering stands as one of the most powerful and widely used unsupervised learning algorithms. From market segmentation to image compression, and from document clustering to anomaly detection, this elegant algorithm helps uncover hidden patterns and structures within data. In this article, we’ll dive deep into K-means clustering: how it works, where it excels, and how you can leverage its capabilities to extract meaningful insights from your data.

What Is K-Means Clustering?

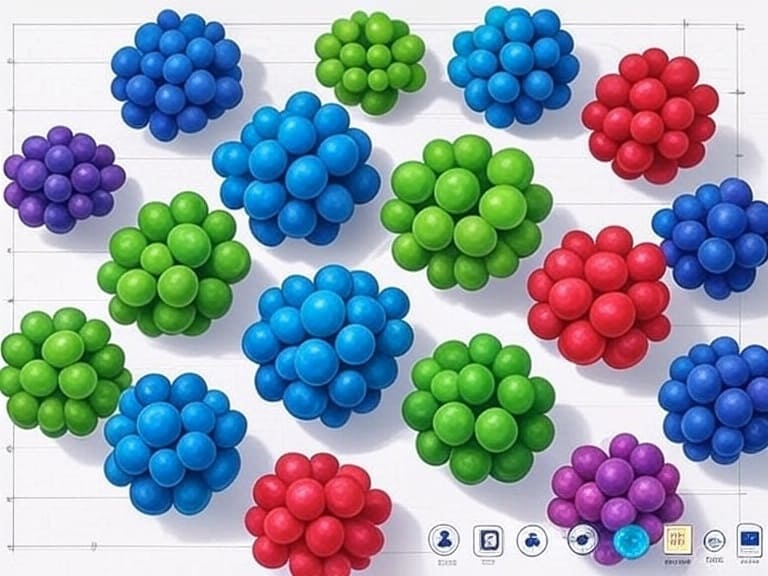

K-means clustering is an unsupervised machine learning algorithm that groups similar data points into a predefined number of clusters (K). The algorithm works by identifying cluster centers, called centroids, and assigning each data point to the nearest centroid, with "nearest" typically measured by Euclidean distance.

Unlike supervised learning algorithms that rely on labeled training data, K-means operates without prior knowledge of group labels. It discovers patterns and relationships independently, making it particularly valuable for exploratory data analysis and situations where labeled data isn’t available.

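The fundamental goal of K-means is simple yet powerful: minimize the total squared distance between each point and the centroid of its cluster, known as the within-cluster sum of squares (WCSS). Because the total variance of a dataset is fixed and splits exactly into within-cluster and between-cluster parts, lowering the within-cluster variation automatically raises the separation between clusters. This balance creates compact groupings that reveal natural structures in your data.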
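For readers who prefer the formal statement, here is the standard objective, written for clusters C_1, ..., C_K with centroids μ_1, ..., μ_K:

```latex
J = \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert_2^2,
\qquad
\mu_k = \frac{1}{|C_k|} \sum_{x_i \in C_k} x_i
```

For a fixed assignment of points to clusters, setting each μ_k to the cluster mean is exactly the choice that minimizes J, which is why K-means updates its centroids with the mean.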

How K-Means Clustering Works

The K-means algorithm follows a straightforward iterative process:

1. Initialization

The algorithm begins by randomly selecting K points from the dataset as initial centroids. These points serve as the starting centers for each cluster. The initialization step is critical, as different starting positions can lead to different final clusters.

Several initialization methods exist:

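- Random selection (Forgy method): Choosing K random data points as the initial centroids

- K-means++: Choosing the first centroid at random, then each subsequent centroid with probability proportional to its squared distance from the nearest centroid already chosen, which tends to spread the initial centroids far apart

- Random Partition method: Randomly assigning each data point to one of K clusters and computing the centroids of those clusters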
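To make the K-means++ rule concrete, here is a minimal NumPy sketch. It assumes the data is a float array with one row per point and at least K distinct points; the function name `kmeans_plus_plus_init` is hypothetical, and library implementations may add refinements such as evaluating several candidates per step.

```python
import numpy as np


def kmeans_plus_plus_init(X, k, rng):
    """Choose k initial centroids from the rows of X with K-means++ seeding."""
    n_samples = X.shape[0]
    # First centroid: a data point chosen uniformly at random.
    centroids = [X[rng.integers(n_samples)]]
    for _ in range(1, k):
        # Squared distance from every point to its nearest chosen centroid.
        chosen = np.array(centroids)
        d2 = np.min(np.sum((X[:, None, :] - chosen[None, :, :]) ** 2, axis=2), axis=1)
        # Draw the next centroid with probability proportional to d2,
        # so points far from the existing centroids are favored.
        centroids.append(X[rng.choice(n_samples, p=d2 / d2.sum())])
    return np.array(centroids)


# The six-point sample dataset used in the Python example later in this article.
X = np.array([[1.0, 2.0], [1.0, 4.0], [1.0, 0.0],
              [4.0, 2.0], [4.0, 4.0], [4.0, 0.0]])
init = kmeans_plus_plus_init(X, k=2, rng=np.random.default_rng(0))
print(init)
```

An already-chosen point has squared distance 0, so it can never be picked twice.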

2. Assignment Step

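Once the initial centroids are established, each data point in the dataset is assigned to the nearest centroid, which partitions the data into K clusters. Standard K-means uses (squared) Euclidean distance, because the mean computed in the update step is exactly the point that minimizes squared Euclidean distance to a cluster's members. Other measures appear mainly in variants of the algorithm:

- Euclidean distance: The straight-line distance between two points; the default in standard K-means

- Manhattan distance: The sum of absolute differences between coordinates; paired with the median rather than the mean in K-medians

- Cosine similarity: The cosine of the angle between two vectors, used (for example, by spherical K-means) for high-dimensional sparse data such as text; because it is a similarity, points are assigned to the centroid with the largest value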
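The three measures can be computed for a pair of points with a few lines of NumPy. This is an illustrative sketch; the function names are hypothetical.

```python
import numpy as np


def euclidean(a, b):
    # Straight-line distance: square root of the summed squared differences.
    return float(np.sqrt(np.sum((a - b) ** 2)))


def manhattan(a, b):
    # Sum of the absolute differences between coordinates.
    return float(np.sum(np.abs(a - b)))


def cosine_similarity(a, b):
    # Cosine of the angle between a and b: 1 = same direction, 0 = orthogonal.
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


a = np.array([0.0, 0.0])
b = np.array([3.0, 4.0])
print(euclidean(a, b), manhattan(a, b))  # 5.0 7.0

u = np.array([1.0, 0.0])
v = np.array([1.0, 1.0])
print(round(cosine_similarity(u, v), 4))  # 0.7071
```

Note that cosine similarity ignores vector length: scaling v by any positive factor leaves the result unchanged.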

3. Update Step

After all data points are assigned to clusters, the algorithm recalculates each centroid by taking the mean of all points assigned to that cluster. This shifts the centroids to the center of their respective clusters.

4. Iteration

The assignment and update steps repeat until a stopping criterion is met. Common criteria are:

- Centroids no longer move significantly

- Data points no longer switch between clusters

- A maximum number of iterations is reached

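Because neither step can increase the within-cluster sum of squares, this iterative refinement steadily improves the clusters until they stop changing or the iteration limit is reached. The result is a local optimum, which is not guaranteed to be the best possible clustering.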
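Putting initialization, assignment, update, and the stopping rule together gives the classic iteration, often called Lloyd's algorithm. This NumPy sketch assumes Euclidean distance and random-selection initialization; the function name `kmeans` is hypothetical, and an empty cluster simply keeps its old centroid (one of several possible conventions).

```python
import numpy as np


def kmeans(X, k, max_iter=100, seed=0):
    """Plain K-means (Lloyd's iteration). Returns centroids, labels, and WCSS."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialization: k distinct data points chosen at random.
    centroids = X[rng.choice(len(X), size=k, replace=False)].copy()
    labels = None
    for _ in range(max_iter):
        # Assignment step: nearest centroid by squared Euclidean distance.
        d2 = np.sum((X[:, None, :] - centroids[None, :, :]) ** 2, axis=2)
        new_labels = np.argmin(d2, axis=1)
        # Stopping criterion: no point switched clusters.
        if labels is not None and np.array_equal(new_labels, labels):
            break
        labels = new_labels
        # Update step: move each non-empty cluster's centroid to its mean
        # (an empty cluster simply keeps its previous centroid here).
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = X[labels == j].mean(axis=0)
    wcss = float(np.sum((X - centroids[labels]) ** 2))
    return centroids, labels, wcss


X = np.array([[1, 2], [1, 4], [1, 0], [4, 2], [4, 4], [4, 0]])
# Several random starts, keeping the run with the lowest WCSS.
runs = [kmeans(X, k=2, seed=s) for s in range(10)]
centroids, labels, wcss = min(runs, key=lambda run: run[2])
print(labels, wcss)
```

Because each run can settle in a different local optimum, the example keeps the lowest-WCSS result of ten seeds, the same idea as the multiple-initialization advice later in this article.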

A Simple Example of K-Means Clustering

To better understand how K-means works, let’s walk through a simple example with 2D data:

Imagine you have data about customers, with two features: annual income and spending score. You want to segment these customers into distinct groups to target marketing strategies more effectively.

- Initialize: You decide to create K=3 clusters and randomly select three customer data points as initial centroids.

- Assign: Each customer is assigned to the nearest centroid based on their income and spending score.

- Update: The centroids are recalculated by averaging the income and spending scores of all customers in each cluster.

- Iterate: The assignment and update steps repeat until the clusters stabilize.

The final result might reveal three distinct customer segments:

- High income, high spending (premium customers)

- Medium income, medium spending (standard customers)

- Low income, low spending (budget-conscious customers)

These insights could directly inform targeted marketing campaigns and product offerings for each segment.

Choosing the Optimal Number of Clusters (K)

One of the most challenging aspects of K-means clustering is determining the appropriate number of clusters. Since K-means requires you to specify K beforehand, finding the optimal value is crucial for meaningful results.

Several methods can help identify the best value for K:

The Elbow Method

The elbow method plots the relationship between the number of clusters and the Within-Cluster Sum of Squares (WCSS), which measures the compactness of clusters. As K increases, WCSS typically decreases because having more clusters means points are generally closer to their centroids.

The “elbow” in the resulting curve—where the rate of decrease sharply changes—often indicates the optimal K value. After this point, adding more clusters yields diminishing returns.
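A minimal elbow-method sketch with scikit-learn, whose fitted KMeans models expose the WCSS as the `inertia_` attribute. The three-group dataset is synthetic, generated only for illustration; plotting the printed values against K (for example with matplotlib) gives the elbow curve.

```python
import numpy as np
from sklearn.cluster import KMeans

# Synthetic 2D data with three loose groups, generated only for illustration.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(loc=center, scale=0.5, size=(50, 2))
               for center in ([0.0, 0.0], [5.0, 5.0], [0.0, 5.0])])

ks = range(1, 8)
wcss = []
for k in ks:
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    wcss.append(model.inertia_)  # scikit-learn's name for the WCSS

for k, value in zip(ks, wcss):
    print(k, round(value, 1))
```

On data like this, the drop from K=2 to K=3 should be large and later drops small, placing the elbow at K=3.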

Silhouette Analysis

The silhouette coefficient measures how similar a data point is to its own cluster compared to other clusters. Values range from -1 to 1, with higher values indicating better-defined clusters.

By calculating the average silhouette score for different values of K, you can identify the K that produces the highest score and thus the most distinct clusters.
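For each point, let a be its mean distance to the other members of its own cluster and b its smallest mean distance to the members of any other cluster; the silhouette value is (b - a) / max(a, b). The NumPy sketch below computes it per point (the function name is hypothetical); scikit-learn offers the averaged version as `sklearn.metrics.silhouette_score`.

```python
import numpy as np


def silhouette_values(X, labels):
    """Per-point silhouette values with Euclidean distance (illustrative sketch)."""
    # Pairwise Euclidean distances between all points.
    D = np.sqrt(np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2))
    values = np.zeros(len(X))
    for i in range(len(X)):
        own = labels == labels[i]
        if own.sum() == 1:
            continue  # convention: a point alone in its cluster scores 0
        # a: mean distance to the other members of i's own cluster
        # (D[i, i] is 0, so dividing by size - 1 excludes i itself).
        a = D[i, own].sum() / (own.sum() - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(D[i, labels == c].mean() for c in np.unique(labels) if c != labels[i])
        values[i] = (b - a) / max(a, b)
    return values


X = np.array([[0.0], [1.0], [10.0], [11.0]])
good = silhouette_values(X, np.array([0, 0, 1, 1]))
bad = silhouette_values(X, np.array([0, 1, 0, 1]))
print(good.mean().round(3), bad.mean().round(3))
```

The natural grouping scores close to 1, while the mixed-up labeling scores below 0.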

Gap Statistic

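The gap statistic compares the within-cluster dispersion of your data to that expected under a reference distribution with no cluster structure (typically points drawn uniformly over the range of each feature). A large gap indicates clusters that are considerably tighter than chance would produce. The simplest rule picks the K with the largest gap; the original formulation by Tibshirani, Walther, and Hastie instead picks the smallest K for which Gap(K) ≥ Gap(K+1) − s(K+1), where s(K+1) is the simulation standard error.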
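In symbols, following the original definition, with W_k the within-cluster dispersion of a k-cluster solution and E* the expectation under the reference distribution:

```latex
\mathrm{Gap}(k) = \mathbb{E}^{*}\left[\log W_k\right] - \log W_k,
\qquad
W_k = \sum_{r=1}^{k} \frac{1}{2\,|C_r|} \sum_{x_i,\, x_{i'} \in C_r} \lVert x_i - x_{i'} \rVert_2^2
```

With squared Euclidean distance, W_k equals the WCSS used by the elbow method, and E* is estimated in practice by clustering several reference datasets and averaging their log W_k values.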

Business Context

Beyond statistical methods, domain knowledge plays a crucial role. The number of clusters should make sense within your specific application—sometimes a solution with slightly worse statistical metrics might be more interpretable and useful in practice.

Strengths of K-Means Clustering

K-means offers several advantages that contribute to its popularity:

Simplicity and Efficiency

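K-means is straightforward to understand and implement. Each iteration costs O(n·K·d) for n points, K clusters, and d features, so with K, d, and the number of iterations held fixed, running time grows linearly with dataset size. This makes it efficient for large datasets, especially compared to standard hierarchical clustering, whose time and memory costs are at least quadratic in n.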

Scalability

The algorithm scales well to large datasets and can be parallelized across multiple processors or machines, making it suitable for big data applications.

Versatility

K-means applies to any numeric feature space, and its variants (described below) extend the idea to other distance measures and data types, allowing adaptation to different problem domains. It’s particularly effective for roughly spherical clusters of similar size.

Guaranteed Convergence

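Neither the assignment step nor the update step can increase the within-cluster sum of squares, and there are only finitely many ways to partition the data, so K-means is guaranteed to converge. The result may be a local optimum rather than the global best solution, and different initializations can converge to different answers.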

Limitations of K-Means Clustering

Despite its strengths, K-means has certain limitations to consider:

Sensitivity to Initialization

The final clusters can vary significantly depending on the initial centroids. Running the algorithm multiple times with different initializations can help mitigate this issue.

Fixed Number of Clusters

Having to specify K beforehand can be challenging when the underlying structure of the data is unknown. This requires additional analysis or domain expertise.

Assumption of Spherical Clusters

K-means works best when clusters are spherical, similarly sized, and have comparable densities. It struggles with irregular shapes, varying densities, or clusters with significantly different sizes.

Sensitivity to Outliers

Outliers can dramatically influence centroid positions, potentially distorting the resulting clusters. Preprocessing to remove outliers or using variants like K-medoids can address this issue.

Curse of Dimensionality

In high-dimensional spaces, distance metrics become less meaningful, potentially reducing the effectiveness of K-means. Dimensionality reduction techniques like PCA might be necessary before clustering.

Real-World Applications of K-Means Clustering

K-means clustering powers numerous applications across industries:

Customer Segmentation

Businesses use K-means to group customers based on purchasing behavior, demographics, and engagement metrics. These segments enable personalized marketing strategies and product recommendations.

For example, an e-commerce company might identify clusters representing:

- Frequent buyers with small purchases

- Occasional shoppers with large basket sizes

- Seasonal shoppers who only purchase during sales

- New customers still exploring the platform

Each segment would receive tailored communications and offers based on their characteristics.

Image Compression

K-means can reduce the number of colors in an image by clustering pixel colors and replacing each pixel with the centroid color of its cluster. This technique, called color quantization, shrinks the palette to K colors, which can substantially reduce file sizes (for example, in indexed-color formats) while preserving much of the visual quality.
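A minimal color-quantization sketch with scikit-learn. The random image is a placeholder assumption; with a real photo you would load the pixel array with an imaging library of your choice and, to save time, often fit on a random sample of pixels.

```python
import numpy as np
from sklearn.cluster import KMeans

# Stand-in for a real photo: a random 64x64 RGB image (values 0-255).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3)).astype(float)

# Treat every pixel as a point in 3D color space.
pixels = image.reshape(-1, 3)

# Learn a palette of 8 colors.
model = KMeans(n_clusters=8, n_init=3, random_state=0).fit(pixels)

# Replace each pixel with the centroid color of its cluster.
quantized = model.cluster_centers_[model.labels_].reshape(image.shape)
palette_size = len(np.unique(quantized.reshape(-1, 3), axis=0))
print(palette_size)  # at most 8 distinct colors remain
```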

Document Clustering

By representing documents as vectors of word frequencies, K-means can group similar documents together. This facilitates content organization, recommendation systems, and efficient information retrieval in large document collections.
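A minimal document-clustering sketch using scikit-learn's TfidfVectorizer, which turns each document into a TF-IDF-weighted word-frequency vector (a common weighting, assumed here rather than prescribed by the text). The four-sentence corpus is made up for illustration.

```python
from sklearn.cluster import KMeans
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical mini-corpus: two documents about pets, two about finance.
docs = [
    "the cat chased the dog around the garden",
    "my dog and my cat sleep in the garden",
    "the bank raised interest rates on loans",
    "interest rates on bank loans went up again",
]

# TF-IDF vectors with common English stop words removed;
# rows are L2-normalized by default.
vectors = TfidfVectorizer(stop_words="english").fit_transform(docs)

# KMeans accepts the sparse matrix directly.
model = KMeans(n_clusters=2, n_init=10, random_state=0).fit(vectors)
print(model.labels_)
```

The pet documents should share one label and the finance documents the other; which group gets 0 or 1 is arbitrary.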

Anomaly Detection

Points that fall far from all cluster centroids may represent anomalies or outliers. This property makes K-means useful for detecting unusual patterns, fraudulent transactions, or system failures.
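A minimal sketch of distance-based anomaly flagging. The centroids, the new points, and the threshold of 3.0 are hypothetical values chosen for illustration; in practice the centroids would come from a fitted model and the threshold might be set from a high percentile of distances seen during training.

```python
import numpy as np


def flag_anomalies(X, centroids, threshold):
    """Flag points whose distance to the nearest centroid exceeds threshold."""
    # Euclidean distance from each point to each centroid.
    dists = np.sqrt(np.sum((X[:, None, :] - centroids[None, :, :]) ** 2, axis=2))
    nearest = dists.min(axis=1)
    return nearest > threshold, nearest


# Hypothetical centroids (e.g., from a fitted K-means model) and new points.
centroids = np.array([[0.0, 0.0], [10.0, 10.0]])
X = np.array([[0.5, -0.5], [9.0, 10.5], [5.0, 5.0], [30.0, 0.0]])

is_anomaly, nearest = flag_anomalies(X, centroids, threshold=3.0)
print(is_anomaly)  # [False False  True  True]
```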

Recommendation Systems

K-means helps identify groups of users with similar preferences or products with similar characteristics, enabling more accurate recommendations by leveraging cluster information.

Geographic Clustering

From grouping delivery stops into service zones to planning retail locations, K-means helps identify natural groupings of geographic points, supporting logistics and business strategy decisions.

Variants and Extensions of K-Means

Several adaptations of K-means address its limitations and extend its capabilities:

K-Means++

This initialization method selects initial centroids that are far apart from each other, significantly improving the algorithm’s performance and consistency. K-means++ has become the default initialization strategy in many implementations.

Mini-Batch K-Means

For very large datasets, mini-batch K-means updates centroids using random subsets of the data in each iteration. This approach dramatically reduces computation time while maintaining reasonable accuracy.
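A minimal sketch using scikit-learn's MiniBatchKMeans on synthetic data; the batch size of 1,024 and the three-center dataset are illustrative assumptions.

```python
import numpy as np
from sklearn.cluster import MiniBatchKMeans

# Synthetic data: 9,000 points around three centers, for illustration only.
rng = np.random.default_rng(0)
true_centers = np.array([[0.0, 0.0], [8.0, 0.0], [0.0, 8.0]])
X = np.vstack([rng.normal(c, 1.0, size=(3000, 2)) for c in true_centers])

# Each update uses a random mini-batch of 1,024 points instead of all of X.
model = MiniBatchKMeans(n_clusters=3, batch_size=1024, n_init=3, random_state=0)
model.fit(X)
print(model.cluster_centers_.round(1))
```

For data that arrives in chunks, MiniBatchKMeans also offers `partial_fit`, which updates the centroids one batch at a time.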

K-Medoids

Unlike K-means, which uses the mean of points to define centroids, K-medoids represents each cluster by one of its members. This makes the algorithm more robust to outliers and applicable to datasets where means cannot be computed.
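The sketch below is a simple alternating variant of K-medoids (simpler than the classic PAM swap procedure), written in NumPy with a hypothetical function name. It assumes distinct rows so that no cluster can become empty, and it shows how an outlier barely moves a medoid while it drags the mean.

```python
import numpy as np


def k_medoids(X, k, max_iter=100, seed=0):
    """Alternating K-medoids (Euclidean); returns medoid indices and labels.

    Assumes the rows of X are distinct, so no cluster can become empty.
    """
    D = np.sqrt(np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2))
    rng = np.random.default_rng(seed)
    medoids = rng.choice(len(X), size=k, replace=False)
    for _ in range(max_iter):
        # Assignment: each point joins its closest medoid.
        labels = np.argmin(D[:, medoids], axis=1)
        new_medoids = medoids.copy()
        for j in range(k):
            members = np.flatnonzero(labels == j)
            # Update: the member with the smallest total distance to the
            # rest of its cluster becomes the new medoid.
            costs = D[np.ix_(members, members)].sum(axis=1)
            new_medoids[j] = members[np.argmin(costs)]
        if np.array_equal(new_medoids, medoids):
            break
        medoids = new_medoids
    labels = np.argmin(D[:, medoids], axis=1)
    return medoids, labels


# One tight group plus an extreme outlier.
X = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])
medoids, labels = k_medoids(X, k=1)
print(X[medoids[0], 0], X.mean())  # 3.0 22.0
```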

Fuzzy K-Means (C-Means)

Rather than assigning each point to exactly one cluster, fuzzy K-means allows points to belong to multiple clusters with different degrees of membership. This captures ambiguity in cluster boundaries more naturally.
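A minimal NumPy sketch of the standard fuzzy C-means updates, with a hypothetical function name. The exponent m > 1 (often set to 2) controls how soft the memberships are; the random initialization and tolerance are assumptions for illustration.

```python
import numpy as np


def fuzzy_c_means(X, c, m=2.0, max_iter=100, tol=1e-6, seed=0):
    """Fuzzy C-means sketch. Returns centroids and an (n_points, c) membership matrix."""
    rng = np.random.default_rng(seed)
    # Random initial memberships, normalized so each row sums to 1.
    U = rng.random((len(X), c))
    U /= U.sum(axis=1, keepdims=True)
    for _ in range(max_iter):
        # Centroids: means of the data weighted by membership ** m.
        W = U ** m
        centroids = (W.T @ X) / W.sum(axis=0)[:, None]
        # Distance from each point to each centroid (epsilon avoids division by zero).
        d = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2) + 1e-12
        # Membership update: u_ij = 1 / sum_k (d_ij / d_ik) ** (2 / (m - 1)).
        new_U = 1.0 / ((d[:, :, None] / d[:, None, :]) ** (2.0 / (m - 1.0))).sum(axis=2)
        converged = np.max(np.abs(new_U - U)) < tol
        U = new_U
        if converged:
            break
    return centroids, U


# Two tight groups plus one point exactly halfway between them.
X = np.array([[0.0], [0.2], [10.0], [10.2], [5.1]])
centroids, U = fuzzy_c_means(X, c=2)
print(U.round(2))
```

Points in the tight groups get memberships close to 1 for their own cluster, while the halfway point splits its membership roughly evenly between the two.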

Kernel K-Means

By mapping data into a higher-dimensional space using kernel functions, kernel K-means can find non-linear cluster boundaries, addressing one of the core limitations of standard K-means.
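The kernel trick means the distance from a point to a cluster's center can be computed from kernel values alone, without ever building the high-dimensional mapping. The sketch below shows those mechanics with an RBF kernel; the function names, the kernel width `gamma`, and the caller-supplied starting labels are assumptions for illustration. Whether it recovers a particular non-linear shape, such as concentric rings, depends heavily on the kernel width and the starting labels.

```python
import numpy as np


def rbf_kernel(X, gamma):
    # K[i, j] = exp(-gamma * ||x_i - x_j||^2)
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2)
    return np.exp(-gamma * sq_dists)


def kernel_kmeans(K, k, labels, max_iter=100):
    """Kernel K-means on a precomputed kernel matrix K, from initial labels."""
    n = K.shape[0]
    for _ in range(max_iter):
        dist = np.full((n, k), np.inf)  # empty clusters stay infinitely far
        for c in range(k):
            members = labels == c
            size = members.sum()
            if size == 0:
                continue
            # ||phi(x_i) - mu_c||^2 = K_ii - (2/|c|) sum_j K_ij + (1/|c|^2) sum_jl K_jl
            dist[:, c] = (np.diag(K)
                          - 2.0 * K[:, members].sum(axis=1) / size
                          + K[np.ix_(members, members)].sum() / size ** 2)
        new_labels = np.argmin(dist, axis=1)
        if np.array_equal(new_labels, labels):
            break
        labels = new_labels
    return labels


X = np.array([[0.0], [0.1], [0.2], [10.0], [10.1], [10.2]])
K = rbf_kernel(X, gamma=1.0)
labels = kernel_kmeans(K, k=2, labels=np.array([0, 1, 0, 1, 0, 1]))
print(labels)  # [0 0 0 1 1 1]
```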

Implementing K-Means Clustering

Several programming languages and libraries offer robust implementations of K-means:

Python

Python’s scikit-learn library provides a comprehensive implementation:

from sklearn.cluster import KMeans
import numpy as np

# Sample data
X = np.array([[1, 2], [1, 4], [1, 0],
              [4, 2], [4, 4], [4, 0]])

# Create and fit the model (n_init sets how many random restarts to try)
kmeans = KMeans(n_clusters=2, random_state=0, n_init=10).fit(X)

# Get cluster centers and labels
centers = kmeans.cluster_centers_
labels = kmeans.labels_

# Predict the nearest cluster for new data
new_data = np.array([[0, 0], [4, 4]])
predicted = kmeans.predict(new_data)

R

R’s built-in kmeans function provides a straightforward implementation:

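# Sample data
data <- data.frame(
  x = c(1, 1, 1, 4, 4, 4),
  y = c(2, 4, 0, 2, 4, 0)
)

# Run K-means (set.seed makes the random starts reproducible;
# nstart = 10 keeps the best of 10 random initializations)
set.seed(42)
result <- kmeans(data, centers = 2, nstart = 10)

# Access results
centers <- result$centers
clusters <- result$cluster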

MATLAB

MATLAB provides a kmeans function in the Statistics and Machine Learning Toolbox:

% Sample data
X = [1 2; 1 4; 1 0; 4 2; 4 4; 4 0];

% Run K-means
[idx, C] = kmeans(X, 2);

% Plot results
scatter(X(:,1), X(:,2), 36, idx, 'filled');
hold on;
plot(C(:,1), C(:,2), 'kx', 'MarkerSize', 15, 'LineWidth', 3);

Best Practices for K-Means Clustering

To get the most out of K-means clustering, consider these best practices:

Data Preprocessing

- Scaling: K-means is sensitive to the scale of features. Standardize or normalize your data so that features measured on large scales (such as income in dollars) do not dominate the distance calculations.

- Handling Missing Values: Impute missing values or remove incomplete records before clustering.

- Outlier Treatment: Consider removing extreme outliers that might distort centroid positions.
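A minimal sketch of scaling before clustering, assuming scikit-learn's StandardScaler (one of several reasonable choices) and hypothetical customer data in the spirit of the income and spending-score example earlier.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical customers: [annual income in dollars, spending score 1-100].
X = np.array([[15_000, 80], [16_000, 20], [90_000, 82],
              [88_000, 18], [52_000, 50], [50_000, 55]], dtype=float)

# Without scaling, income (tens of thousands) would dominate every distance.
# StandardScaler rescales each feature to zero mean and unit variance first.
pipeline = make_pipeline(StandardScaler(),
                         KMeans(n_clusters=3, n_init=10, random_state=0))
labels = pipeline.fit_predict(X)
print(labels)
```

Wrapping both steps in a pipeline ensures that new data passed to `predict` is scaled with the same means and standard deviations learned during fitting.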

Multiple Initializations

Run K-means multiple times with different random initializations and keep the solution with the lowest within-cluster sum of squares. Most libraries automate this: n_init in scikit-learn's KMeans, nstart in R's kmeans, and the 'Replicates' option in MATLAB's kmeans.

Feature Selection and Engineering

Not all features contribute equally to meaningful clusters. Use domain knowledge to select the most relevant features, and consider techniques like Principal Component Analysis (PCA) to combine correlated features into fewer components and reduce dimensionality.

Validation and Interpretation

- Visualize clusters using dimensionality reduction techniques like PCA or t-SNE for high-dimensional data.

- Calculate descriptive statistics for each cluster to understand their characteristics.

- Validate clusters against external data or business metrics when possible.

Combining with Other Techniques

Consider using K-means as part of a larger analysis pipeline:

- Apply hierarchical clustering first to estimate K

- Use K-means results as features for supervised learning models

- Combine with anomaly detection algorithms for comprehensive data exploration

Conclusion

K-means clustering stands as a fundamental algorithm in the data scientist’s toolkit, offering a balance of simplicity, efficiency, and effectiveness for discovering natural groupings in data. While it has limitations, particularly regarding cluster shapes and the need to specify K in advance, its strengths make it an excellent starting point for many clustering tasks.

By understanding how K-means works, addressing its limitations, and following best practices, you can harness this powerful algorithm to uncover valuable insights from your data. Whether you’re segmenting customers, compressing images, organizing documents, or detecting anomalies, K-means provides a solid foundation for exploring the hidden structure within your datasets.

As you apply K-means clustering to your own projects, remember that the most valuable insights often come from combining algorithmic results with domain expertise. The patterns that K-means reveals are just the beginning—interpreting what these patterns mean for your specific context is where the true value lies.

#KMeansClustering #UnsupervisedLearning #DataScience #MachineLearning #DataAnalytics #ClusterAnalysis #CustomerSegmentation #PatternRecognition #DataMining #BusinessIntelligence