Loki

In the ever-expanding landscape of observability tools, Grafana Loki has emerged as a game-changer for organizations seeking an efficient, cost-effective approach to log management. Named after the Norse god of mischief (and yes, that Marvel character), Loki brings a refreshingly streamlined approach to what has traditionally been a resource-intensive aspect of system monitoring.

Grafana Loki was introduced to the world in December 2018 at KubeCon in Seattle. Created by Grafana Labs, Loki was designed with a specific philosophy in mind: logs are incredibly valuable, but storing and analyzing them shouldn’t require mortgage-level investments in infrastructure.

This vision emerged from a practical challenge faced by many DevOps and SRE teams. Traditional logging systems like Elasticsearch can be resource-intensive and expensive at scale. While they offer powerful capabilities, the question arose: do we need full-text indexing for everything when most log queries are based on metadata and simple filtering?

Loki’s answer was a resounding “no,” leading to its design principle: index metadata, not content.

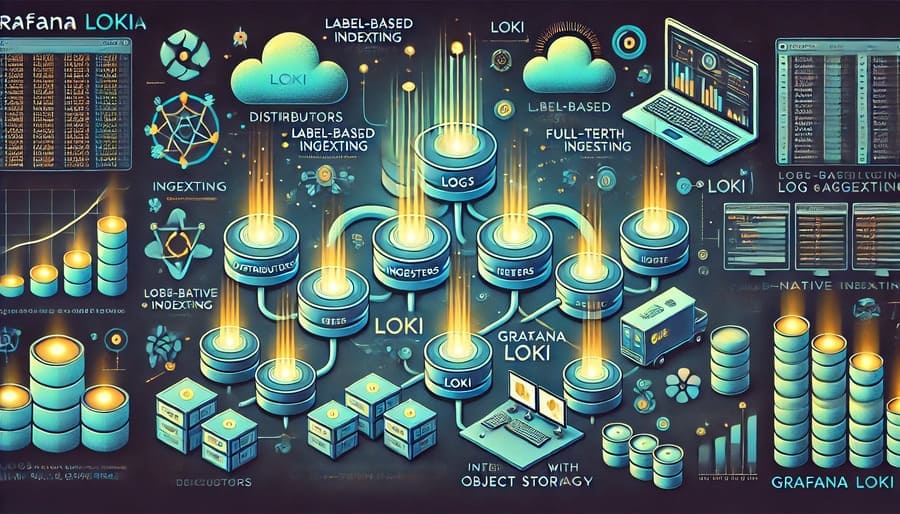

Loki’s architecture centers on a fundamentally different approach to log management:

Unlike traditional logging systems that index the full content of logs, Loki only indexes metadata in the form of labels (key-value pairs). The log lines themselves are stored as compressed chunks and are searched at query time, after the labels have narrowed down which chunks to read. This design choice dramatically reduces resource requirements while still enabling powerful querying.

```logql
{app="payment-service", environment="production", server="us-west-2"}
```

Each unique combination of labels defines a log stream, and these labels serve as the primary mechanism for filtering and finding logs, much as Prometheus uses labels to identify and select time series. This creates a consistent mental model for engineers working with both systems.
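The label model can be sketched in a few lines of Python. This is a simplified illustration rather than Loki's code: it assumes a stream is identified by its full set of labels (sorted by name, so label order does not matter) and supports only `=` matchers, whereas real LogQL selectors also accept `!=`, `=~`, and `!~`. The helper names `stream_key`, `matches`, and `select_streams` are hypothetical.

```python
from typing import Dict, List

Labels = Dict[str, str]


def stream_key(labels: Labels) -> str:
    """Canonical stream identity: labels sorted by name, rendered like a selector."""
    pairs = ", ".join(f'{name}="{value}"' for name, value in sorted(labels.items()))
    return "{" + pairs + "}"


def matches(selector: Labels, labels: Labels) -> bool:
    """Equality-only selector: every selector pair must be present in the stream's labels."""
    return all(labels.get(name) == value for name, value in selector.items())


def select_streams(selector: Labels, streams: List[Labels]) -> List[str]:
    """Return the canonical keys of all streams the selector matches."""
    return [stream_key(s) for s in streams if matches(selector, s)]


streams = [
    {"app": "payment-service", "environment": "production", "server": "us-west-2"},
    {"app": "payment-service", "environment": "staging", "server": "us-west-2"},
    {"app": "checkout", "environment": "production", "server": "us-east-1"},
]

print(select_streams({"app": "payment-service", "environment": "production"}, streams))
```

Note that the selector never looks at log content: only the label sets are consulted, which is what keeps this step cheap.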

Loki’s architecture consists of several key components:

- Distributor: Receives incoming logs and distributes them to ingesters

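- Ingester: Buffers incoming streams in memory, builds compressed chunks, and flushes them to the storage backend

- Querier: Executes LogQL queries, reading recent data from ingesters and older data from storage

- Storage: Split between index storage (for labels) and chunk storage (for log content)

- Compactor: Compacts the index files written by ingesters and applies retention and log deletion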
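To make the distributor's job concrete, here is a deliberately simplified hash ring in Python. Loki's distributor does hash each stream (tenant plus label set) onto a ring of ingesters and replicates it to several of them, but this sketch assumes its own details: SHA-256 instead of Loki's hash function, a fixed number of tokens per ingester, and no health checks. All class and function names are hypothetical.

```python
import bisect
import hashlib
from typing import Dict, List, Tuple


def _hash(text: str) -> int:
    # Stable 32-bit hash (Python's built-in hash() is randomized per process).
    return int.from_bytes(hashlib.sha256(text.encode()).digest()[:4], "big")


class HashRing:
    def __init__(self, ingesters: List[str], tokens_per_ingester: int = 16):
        # Each ingester owns several tokens spread around the ring.
        self._ring: List[Tuple[int, str]] = sorted(
            (_hash(f"{name}-{i}"), name)
            for name in ingesters
            for i in range(tokens_per_ingester)
        )
        self._tokens = [token for token, _ in self._ring]

    def owners(self, key: str, replication_factor: int = 3) -> List[str]:
        """Walk clockwise from the key's hash, collecting distinct ingesters."""
        start = bisect.bisect_right(self._tokens, _hash(key))
        owners: List[str] = []
        for offset in range(len(self._ring)):
            _, name = self._ring[(start + offset) % len(self._ring)]
            if name not in owners:
                owners.append(name)
                if len(owners) == replication_factor:
                    break
        return owners


def stream_key(tenant: str, labels: Dict[str, str]) -> str:
    # Same tenant + same label set -> same key -> same ingesters.
    return tenant + "|" + ",".join(f"{k}={v}" for k, v in sorted(labels.items()))


ring = HashRing(["ingester-0", "ingester-1", "ingester-2", "ingester-3"])
key = stream_key("team-a", {"app": "payment-service", "environment": "production"})
print(ring.owners(key))  # three distinct ingesters; which ones depends on the hashes
```

Because the key depends only on the tenant and the labels, every line of a stream lands on the same ingesters, which is what allows them to build per-stream chunks.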

Loki employs a multi-tiered storage approach:

- In-memory buffering: Ingesters keep recent logs in memory until their chunks are flushed, and optional caches speed up repeated reads

- Object storage compatibility: Long-term storage leverages inexpensive cloud object stores like S3, GCS, or Azure Blob Storage

- Compression: Logs are compressed to minimize storage requirements

- Time-based sharding: Data is organized by time for efficient querying
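A toy model shows how in-memory buffering, compression, and time-based organization fit together. The sketch below assumes a per-stream buffer that is cut into a gzip-compressed chunk once it reaches a byte limit, with each chunk recording its first and last timestamp so a query can skip chunks outside the requested window. Loki's actual chunk format, codecs, and flush rules differ; `StreamBuffer`, `Chunk`, and `max_bytes` are hypothetical names.

```python
import gzip
from dataclasses import dataclass, field
from typing import List, Tuple

Entry = Tuple[int, str]  # (timestamp in seconds, single-line log message)


@dataclass
class Chunk:
    start: int    # earliest timestamp in the chunk
    end: int      # latest timestamp in the chunk
    data: bytes   # gzip-compressed, newline-separated "ts<TAB>line" rows


@dataclass
class StreamBuffer:
    max_bytes: int = 4096
    head: List[Entry] = field(default_factory=list)    # recent, uncompressed entries
    chunks: List[Chunk] = field(default_factory=list)  # flushed, compressed chunks
    head_bytes: int = 0

    def append(self, ts: int, line: str) -> None:
        # Assumes entries arrive in timestamp order and contain no newlines.
        self.head.append((ts, line))
        self.head_bytes += len(line)
        if self.head_bytes >= self.max_bytes:
            self.cut()

    def cut(self) -> None:
        """Compress the in-memory head into an immutable chunk."""
        if not self.head:
            return
        raw = "\n".join(f"{ts}\t{line}" for ts, line in self.head).encode()
        self.chunks.append(Chunk(self.head[0][0], self.head[-1][0], gzip.compress(raw)))
        self.head, self.head_bytes = [], 0

    def query(self, start: int, end: int) -> List[Entry]:
        """Decompress only the chunks whose time range overlaps [start, end]."""
        out: List[Entry] = []
        for chunk in self.chunks:
            if chunk.end < start or chunk.start > end:
                continue  # time-based pruning: this chunk is never decompressed
            for row in gzip.decompress(chunk.data).decode().split("\n"):
                ts, line = row.split("\t", 1)
                if start <= int(ts) <= end:
                    out.append((int(ts), line))
        out.extend(e for e in self.head if start <= e[0] <= end)
        return out


buf = StreamBuffer(max_bytes=200)
for t in range(100):
    buf.append(t, f"level=info attempt={t}")
print(len(buf.chunks), buf.query(10, 12))
```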

Loki introduces LogQL, a query language inspired by PromQL (from Prometheus), which provides:

- Label-based filtering: Quickly narrow down to relevant log streams

- Content filtering: Search within log lines using substring matches or regular expressions

- Aggregation operators: Calculate metrics from logs (counts, rates, etc.)

- Pipeline operators: Parse and transform log data on the fly

Example query that counts error lines from a production payment service over the last hour:

```logql
count_over_time({app="payment-service", environment="production"} |= "error" [1h])
```
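Conceptually, an error-count query like this selects streams by their labels, scans only those streams' lines for the substring `error`, and counts matches per stream within the one-hour range. The Python below models those semantics over hypothetical in-memory data; it is not how Loki executes queries, which are distributed and read compressed chunks. The function and sample data are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Labels = Dict[str, str]
Stream = Tuple[Labels, List[Tuple[float, str]]]  # (labels, [(unix_seconds, line), ...])


def count_over_time(streams: List[Stream], selector: Labels, needle: str,
                    range_seconds: float, now: float) -> Dict[str, int]:
    """Model of count_over_time({selector} |= needle [range]) evaluated at `now`."""
    results: Dict[str, int] = {}
    for labels, entries in streams:
        # 1. Stream selector: consults only the indexed labels.
        if any(labels.get(k) != v for k, v in selector.items()):
            continue
        # 2. Range + line filter: brute-force scan of the selected streams only.
        count = sum(1 for ts, line in entries
                    if now - range_seconds < ts <= now and needle in line)
        if count:  # streams with no matches produce no series
            key = ",".join(f"{k}={v}" for k, v in sorted(labels.items()))
            results[key] = count
    return results


now = 10_000.0
streams: List[Stream] = [
    ({"app": "payment-service", "environment": "production"},
     [(now - 60, "error: card declined"), (now - 30, "ok"), (now - 7200, "error: old")]),
    ({"app": "payment-service", "environment": "staging"}, [(now - 10, "error: timeout")]),
]
print(count_over_time(streams, {"app": "payment-service", "environment": "production"},
                      "error", 3600, now))
```

Like LogQL's `|=`, the substring check here is case-sensitive.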

By indexing only metadata instead of full log content, Loki drastically reduces:

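- Storage costs: A small label index plus compressed chunks in object storage is often far cheaper than a full-text index

- Memory requirements: Lower RAM footprint for indexing

- CPU utilization: Less processing at ingest time, since log lines are not tokenized for a full-text index (the trade-off is that queries scan log content)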
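The cost argument comes down to how much the index must hold. This sketch counts, for a batch of made-up log lines, the entries a label-only index needs (one per distinct stream) versus a naive inverted index (one per distinct whitespace-separated token). The tokenizer and data are assumptions, and real full-text engines also store postings and positions, so it illustrates the direction of the difference rather than its real-world size.

```python
from typing import Dict, List, Set, Tuple

Record = Tuple[Dict[str, str], str]  # (stream labels, log line)


def label_index_entries(records: List[Record]) -> int:
    """Label-only index: one entry per distinct label set (stream)."""
    return len({tuple(sorted(labels.items())) for labels, _ in records})


def full_text_index_entries(records: List[Record]) -> int:
    """Naive inverted index: one entry per distinct token across all lines."""
    tokens: Set[str] = set()
    for _, line in records:
        tokens.update(line.split())
    return len(tokens)


records: List[Record] = [
    ({"app": "payment-service", "environment": "production"},
     f"request_id={i} user_id={i * 7} status=200 latency={i % 50}ms")
    for i in range(1000)
]
print(label_index_entries(records), full_text_index_entries(records))  # 1 2051
```

The unique request and user IDs dominate the token count, which is exactly the kind of data Loki leaves out of its index.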

As part of the Grafana ecosystem, Loki offers:

- Native visualization: Beautiful dashboards and visualizations in Grafana

- Unified observability: Logs, metrics, and traces in a single interface

- Explore interface: Ad-hoc log exploration alongside metrics

- Alerting integration: Create alerts based on log patterns or derived metrics

Loki was built for modern infrastructure:

- Kubernetes-friendly: Designed to run well in containerized environments

- Horizontally scalable: Each component can scale independently

- Microservices architecture: Resilient to failures of individual components

- Multi-tenancy support: Safely handle logs from multiple teams or customers

Compared to more complex logging stacks, Loki offers:

- Easier setup and maintenance: Fewer moving parts than ELK

- Consistent with Prometheus: Similar concepts and query patterns

- Lightweight agents: Promtail and other collectors are resource-efficient

- Simplified backup and disaster recovery: Leverages object storage capabilities

Loki offers flexible deployment models:

- Monolithic mode: Single binary for small deployments or testing

- Microservices mode: Distributed deployment for production use

- Grafana Cloud: Fully managed service, with no Loki infrastructure to operate yourself

- Kubernetes with Helm: Easy deployment on Kubernetes clusters

Multiple options exist for getting logs into Loki:

- Promtail: Loki’s original purpose-built log collector (now deprecated in favor of Grafana Alloy)

- Fluentd/Fluent Bit: Popular log collectors with Loki plugins

- Logstash: Output plugin available for ELK users transitioning to Loki

- Vector: Modern data pipeline with Loki support

- Grafana Agent: Unified collector for logs, metrics, and traces (succeeded by Grafana Alloy)

Example Promtail configuration to collect and label container logs:

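```yaml
scrape_configs:
  - job_name: kubernetes-pods
    kubernetes_sd_configs:
      - role: pod  # discover pods through the Kubernetes API
    relabel_configs:
      # Attach namespace and pod labels to every stream
      - source_labels: [__meta_kubernetes_namespace]
        target_label: namespace
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod
      # Tell Promtail which files on the node hold each container's logs
      - source_labels: [__meta_kubernetes_pod_uid, __meta_kubernetes_pod_container_name]
        separator: /
        target_label: __path__
        replacement: /var/log/pods/*$1/*.log
    pipeline_stages:
      - cri: {}  # unwrap the CRI log format (use `docker: {}` on Docker-based nodes)
      # Split the application's own line into timestamp, level, and message
      - regex:
          expression: '^(?P<timestamp>\S+) (?P<level>\S+) (?P<message>.*)$'
      - labels:
          level:  # promote the extracted level to a (low-cardinality) label
      - timestamp:  # use the application's timestamp instead of the read time
          source: timestamp
          format: RFC3339
```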
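The pipeline stages above can be approximated in Python to check what a given line turns into. This is a local sketch, not Promtail: it assumes the container runtime writes CRI-format lines (`<time> <stream> <flags> <content>`, handled by Promtail's `cri` stage; Docker's JSON format would need the `docker` stage instead), ignores partial-line reassembly, and reuses the same application regex, which Python's `re` accepts unchanged. The `process` function is hypothetical.

```python
import re
from datetime import datetime
from typing import Dict, Optional

# CRI layout: "<RFC3339Nano time> <stdout|stderr> <F|P flags> <content>"
CRI_LINE = re.compile(r"^(?P<time>\S+) (?P<stream>stdout|stderr) (?P<flags>\S+) (?P<content>.*)$")
# Same expression as the regex stage in the configuration
APP_LINE = re.compile(r"^(?P<timestamp>\S+) (?P<level>\S+) (?P<message>.*)$")


def process(raw: str) -> Optional[Dict[str, object]]:
    """Mimic the cri -> regex -> labels -> timestamp stages for one raw line."""
    cri = CRI_LINE.match(raw)
    if cri is None:
        return None
    content = cri["content"]  # the cri stage replaces the line with the container output
    fields = APP_LINE.match(content)
    if fields is None:
        # Regex stage extracted nothing: no level label; Promtail would keep the
        # CRI timestamp, which this sketch does not parse.
        return {"labels": {"stream": cri["stream"]}, "line": content, "timestamp": None}
    return {
        "labels": {"stream": cri["stream"], "level": fields["level"]},  # labels stage
        "line": content,
        # timestamp stage: parse the RFC3339 value into a timezone-aware datetime
        "timestamp": datetime.fromisoformat(fields["timestamp"].replace("Z", "+00:00")),
    }


print(process("2024-05-01T12:00:00.123456789Z stdout F 2024-05-01T12:00:00Z ERROR card declined"))
```

Running a few real lines through a check like this is a quick way to confirm the regex before rolling the configuration out.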

To get the most out of Loki, consider these practices:

- Thoughtful labeling strategy:

  - Use labels for low-cardinality identifiers (service, instance, environment)

  - Avoid putting high-cardinality data in labels (user IDs, request IDs)

  - Keep labels consistent between metrics and logs so the two can be correlated

- Structured logging:

  - Use JSON or other structured formats when possible

  - Extract important fields at query time using LogQL parsers such as `json` or `logfmt`

  - Consider standardizing log formats across services

- Query optimization:

  - Make the stream selector as specific as possible, and place line filters (`|=`, `|~`) before parsers

  - Use appropriate time ranges to limit data scanned

  - Use metric queries (such as `rate` or `count_over_time`) for dashboards rather than panels of raw log lines

- Resource planning:

  - Size ingesters based on ingest throughput and the number of active streams

  - Plan object storage for long-term retention

  - Consider read/write patterns when sizing queriers
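The reason to keep labels low-cardinality is multiplicative: every distinct combination of label values becomes a separate stream with its own index entries and chunks. This small Python helper, written for illustration with made-up records, counts how many streams a given labeling scheme would create.

```python
from typing import Dict, Iterable, List


def stream_count(records: Iterable[Dict[str, str]], label_names: List[str]) -> int:
    """Number of distinct streams if only `label_names` are used as labels."""
    return len({tuple(r.get(name, "") for name in label_names) for r in records})


records = [
    {"service": svc, "environment": env, "user_id": str(uid)}
    for svc in ("payment", "checkout", "search")
    for env in ("production", "staging")
    for uid in range(500)
]

print(stream_count(records, ["service", "environment"]))             # 6
print(stream_count(records, ["service", "environment", "user_id"]))  # 3000
```

Adding a single user ID label turns 6 streams into 3,000 in this example; keeping the ID in the log line and filtering on it at query time avoids that growth.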

Loki is particularly well-suited for Kubernetes environments:

- Pod and container logs: Automatic discovery and labeling

- Cluster troubleshooting: Correlate logs across namespaces and services

- Resource efficiency: Lightweight enough to run in-cluster

- Integration with Prometheus: Combined logs and metrics for complete observability

Example dashboard elements:

- Pod restart counts with direct links to relevant logs

- Error rate graphs with log samples

- Node resource utilization with corresponding system logs

For microservice architectures, Loki enables:

- Request tracing through logs: Follow a request across services by filtering on a shared request or trace ID in the log content

- Error correlation: Identify cascading failures

- Deployment monitoring: Track issues after new releases

- Performance analysis: Identify slow services or endpoints

Loki provides value for security teams:

- Authentication monitoring: Track login attempts and failures

- Access pattern analysis: Identify unusual behaviors

- Compliance requirements: Retain logs for audit purposes

- Suspicious activity alerting: Notify on potential security events

Comparing Loki to the popular Elasticsearch, Logstash, and Kibana (ELK) stack:

| Feature | Loki | ELK Stack |
|---|---|---|
| Resource requirements | Low | High |
| Full-text indexing | No (only labels) | Yes (all content) |
| Query performance | Fast for label queries | Fast for all queries |
| Storage efficiency | Very high | Moderate |
| Setup complexity | Low to moderate | Moderate to high |
| Learning curve | Low (if familiar with Prometheus) | Moderate |
| Advanced text search | Limited | Extensive |

Comparing with the enterprise leader Splunk:

| Feature | Loki | Splunk |
|---|---|---|
| Cost model | Open-source or subscription | Licensed by data volume |
| Enterprise features | Growing | Comprehensive |
| Integration ecosystem | Focused on cloud-native | Broad coverage |
| Analytics capabilities | Basic to intermediate | Advanced |
| Operational overhead | Lower | Higher |

Loki provides robust multi-tenant capabilities:

- Isolated log storage: Keep different teams’ logs separate

- Resource limits per tenant: Prevent noisy neighbors

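- Authentication integration: Loki reads the tenant from the `X-Scope-OrgID` header, which an authenticating gateway in front of it can set from your existing identity system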

- Cost allocation: Track usage by team or service
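In a self-hosted Loki with multi-tenancy enabled, the tenant travels in the `X-Scope-OrgID` HTTP header, and logs can be pushed as JSON to the `/loki/api/v1/push` endpoint. The sketch below only builds such a request with Python's standard library and does not send it; the base URL, tenant name, labels, and the `build_push_request` helper are placeholders, and in production an authenticating proxy would normally set the header rather than the client.

```python
import json
import urllib.request
from typing import Dict, List, Tuple


def build_push_request(base_url: str, tenant: str, labels: Dict[str, str],
                       entries: List[Tuple[int, str]]) -> urllib.request.Request:
    """Build (but do not send) a Loki push request for one stream."""
    payload = {
        "streams": [{
            "stream": labels,
            # Timestamps are Unix epoch nanoseconds, encoded as strings.
            "values": [[str(ts_ns), line] for ts_ns, line in entries],
        }]
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/loki/api/v1/push",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-Scope-OrgID": tenant},
        method="POST",
    )


req = build_push_request(
    "http://localhost:3100",  # placeholder address
    tenant="team-a",
    labels={"app": "payment-service", "environment": "production"},
    entries=[(1_700_000_000_000_000_000, "payment accepted")],
)
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```

Because the tenant is just a header, isolation depends on whatever sits in front of Loki setting it correctly for each caller.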

LogQL allows deriving metrics directly from logs:

```logql
sum by (status_code) (
  rate(
    {app="nginx"}
      | pattern `<_> - - <_> "<method> <url> HTTP/<_>" <status_code> <_>`
      | status_code != ""
    [5m]
  )
)
```

This produces one series per HTTP status code, each showing the per-second rate of matching requests over time, which works well for dashboards.
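To see what the pattern expression extracts, the sketch below translates a LogQL-style pattern into a Python regular expression (`<name>` becomes a named lazy capture, `<_>` an unnamed one, everything else is matched literally) and then computes a per-status rate over a 5-minute window. It approximates the documented behavior of the `pattern` parser rather than reproducing Loki's implementation, and the access-log lines are invented.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple

# Same pattern as in the LogQL query.
PATTERN = '<_> - - <_> "<method> <url> HTTP/<_>" <status_code> <_>'


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Approximate LogQL's pattern parser: each capture matches lazily up to the next literal."""
    regex = ""
    for part in re.split(r"(<\w+>)", pattern):
        if part == "<_>":
            regex += ".*?"                     # unnamed capture: matched, then discarded
        elif part.startswith("<") and part.endswith(">"):
            regex += f"(?P<{part[1:-1]}>.*?)"  # named capture becomes a label
        else:
            regex += re.escape(part)           # everything else is a literal
    return re.compile("^" + regex + "$")


def status_rates(lines: List[Tuple[float, str]], now: float,
                 window: float = 300.0) -> Dict[str, float]:
    """Model of sum by (status_code) (rate(... [5m])): matching lines per second, per status."""
    regex = pattern_to_regex(PATTERN)
    counts: Counter = Counter()
    for ts, line in lines:
        if not (now - window < ts <= now):
            continue                           # outside the 5-minute range
        match = regex.match(line)
        if match and match["status_code"]:     # mirrors | status_code != ""
            counts[match["status_code"]] += 1
    return {code: n / window for code, n in counts.items()}


now = 1_000.0
sample = [
    (now - 10, '10.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET /pay HTTP/1.1" 200 512'),
    (now - 20, '10.0.0.2 - - [10/Oct/2023:13:55:37 +0000] "GET /pay HTTP/1.1" 200 512'),
    (now - 30, '10.0.0.3 - - [10/Oct/2023:13:55:38 +0000] "POST /pay HTTP/1.1" 500 0'),
    (now - 40, "not an access log line"),
    (now - 900, '10.0.0.4 - - [10/Oct/2023:13:40:00 +0000] "GET /pay HTTP/1.1" 200 512'),
]
print(status_rates(sample, now))
```

Lines that do not fit the pattern yield no `status_code`, so the `!= ""` filter drops them, just as the unparseable line is skipped here.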

Loki 2.0 extended LogQL with a log processing pipeline that supports:

- Line filtering: Keep or discard logs based on content

- Parsing: Extract structured data from unstructured logs

- Formatting: Rewrite log lines for better readability

- Labeling: Add extracted values as temporary labels

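Example that computes the per-second rate of requests slower than 100 ms (assuming the service logs JSON with a `latency` field holding a duration such as `"250ms"`):

```logql
sum(
  rate(
    {app="payment-service"}
      | json
      | latency > 100ms
    [5m]
  )
)
```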
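The `latency > 100ms` filter compares durations, so the extracted value must parse as one (for example `250ms` or `1.5s`). The Python below is a hypothetical sketch that assumes JSON lines with a `latency` field in that format, parses Go-style durations for a subset of units, and computes the per-second rate of slow requests over 5 minutes; it models the query's meaning, not Loki's implementation.

```python
import json
import re
from typing import List, Optional, Tuple

# Subset of Go duration units, in seconds.
_UNIT_SECONDS = {"ns": 1e-9, "us": 1e-6, "ms": 1e-3, "s": 1.0, "m": 60.0, "h": 3600.0}
_PART = re.compile(r"(\d+(?:\.\d+)?)(ns|us|ms|s|m|h)")  # "ms" is tried before "m" and "s"


def parse_duration(text: str) -> Optional[float]:
    """Parse Go-style durations such as '250ms', '1.5s' or '1m30s' into seconds."""
    parts = _PART.findall(text)
    # Reject input with anything besides number+unit pairs (e.g. "100" or "5 ms").
    if not parts or "".join(num + unit for num, unit in parts) != text:
        return None
    return sum(float(num) * _UNIT_SECONDS[unit] for num, unit in parts)


def slow_request_rate(lines: List[Tuple[float, str]], now: float,
                      threshold: float = 0.1, window: float = 300.0) -> float:
    """Model of sum(rate({...} | json | latency > 100ms [5m])) at time `now`."""
    slow = 0
    for ts, line in lines:
        if not (now - window < ts <= now):
            continue
        try:
            fields = json.loads(line)
        except json.JSONDecodeError:
            continue  # skipped here; Loki would tag the line with an __error__ label
        if not isinstance(fields, dict):
            continue
        latency = parse_duration(str(fields.get("latency", "")))
        if latency is not None and latency > threshold:
            slow += 1
    return slow / window


now = 1_000.0
sample = [
    (now - 10, '{"latency": "250ms"}'),
    (now - 20, '{"latency": "50ms"}'),
    (now - 30, '{"latency": "1.5s"}'),
    (now - 40, "plain text, not JSON"),
    (now - 400, '{"latency": "900ms"}'),  # outside the 5-minute window
]
print(slow_request_rate(sample, now))
```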

Grafana Labs continues active development of Loki with exciting roadmap items:

- Enhanced query performance: Ongoing optimizations for faster searches

- Richer parsing capabilities: More powerful log transformation options

- Ecosystem integration: Deeper integration with Grafana Tempo for traces and Mimir for metrics

- Expanded alerting capabilities: More sophisticated log-based alerting

- Enterprise features: Enhanced security and compliance capabilities

Ready to try Loki? Here are steps to get started:

- Choose a deployment method:

  - Docker Compose for local testing

  - Kubernetes with Helm for production

  - Grafana Cloud for a managed service

- Set up log collection:

  - Deploy Promtail alongside your applications

  - Configure log sources and labels

  - Verify logs are flowing into Loki

- Create Grafana dashboards:

  - Add Loki as a data source in Grafana

  - Build log panels alongside metrics

  - Set up derived metrics from logs

- Learn LogQL:

  - Start with simple label queries

  - Add content filtering

  - Explore aggregations and transformations

Grafana Loki represents a paradigm shift in log management—proving that effective observability doesn’t have to come with an excessive resource or financial burden. Its label-based approach, seamless integration with Grafana, and cloud-native design make it an increasingly popular choice for modern engineering teams.

Whether you’re running a small Kubernetes cluster or managing a large-scale distributed system, Loki offers a compelling balance of functionality, performance, and efficiency. As observability practices continue to evolve, Loki’s approach may well become the new standard for how we think about log aggregation and analysis.

By focusing on what matters most—making logs accessible and useful without unnecessary complexity—Loki embodies the pragmatic approach that busy engineering teams need in today’s fast-paced environments.

#GrafanaLoki #LogAggregation #Observability #CloudNative #DevOps #Kubernetes #Logging #Grafana #SRE #Monitoring #LogQL #DataEngineering #Microservices #OpenSource #Prometheus #ContainerLogging #CostEfficiency #LogManagement #DataOps