In the ever-evolving world of data management, one debate has stood the test of time: ETL vs. ELT. While these approaches sound similar, the shift from ETL (Extract, Transform, Load) to ELT (Extract, Load, Transform) has significant implications for businesses navigating modern data platforms, especially as cloud computing becomes the new standard.

Why does this matter in 2025? Let’s explore the technical differences, the role of cloud infrastructure, and how to decide which approach works best for your organization.

—

ETL vs. ELT: What’s the Difference?

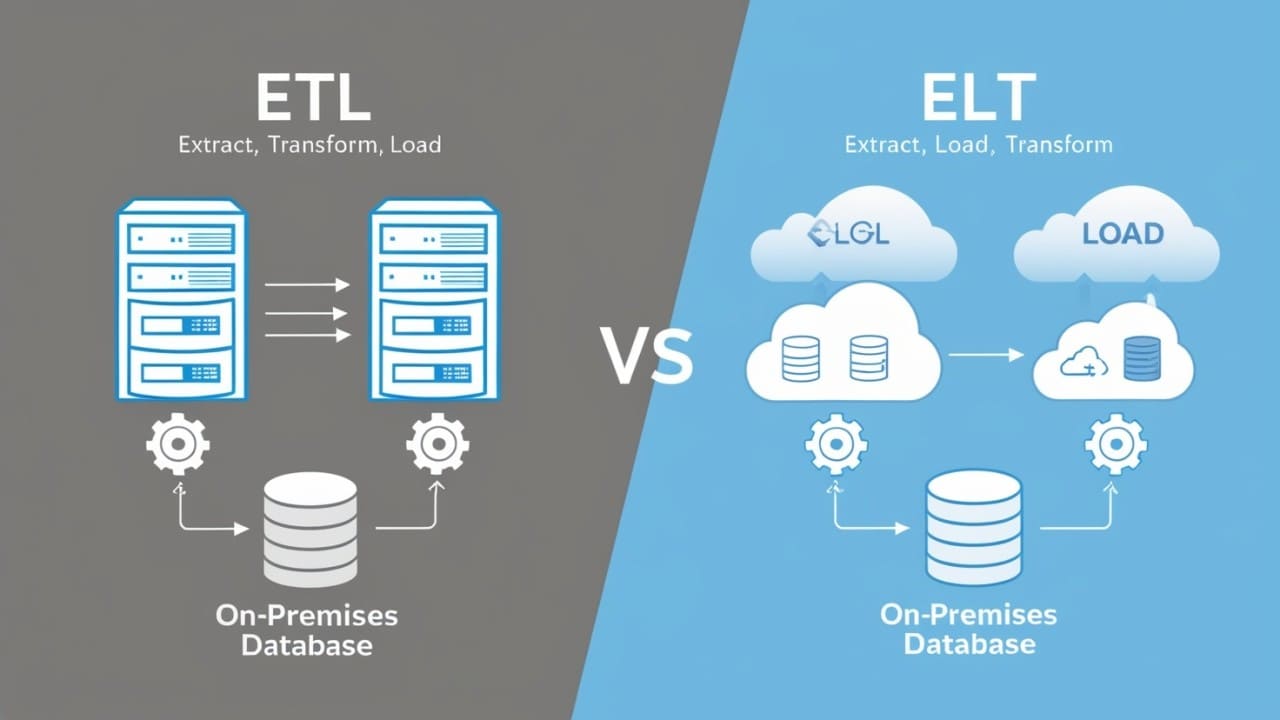

At their core, ETL and ELT refer to two ways of handling data pipelines, but the order of operations makes all the difference:

1. ETL (Extract, Transform, Load)

– How it works: Data is first extracted from source systems, transformed (cleaned, enriched, and reshaped) using an ETL tool, and then loaded into the target data warehouse or database.

– Where it shines: ETL is great for on-premises systems and situations where transformations must be applied before loading to optimize storage or meet specific business rules.

– Popular tools: Informatica, Talend, Apache NiFi, Microsoft SSIS.

2. ELT (Extract, Load, Transform)

– How it works: Data is extracted from source systems and loaded as-is into a data warehouse or lake. Transformations happen after loading, usually running on the compute of the cloud platform itself.

– Where it shines: ELT thrives in cloud-native environments where storage is cheap and compute power is scalable.

– Popular tools: Fivetran (extract and load), dbt (transform), and cloud platforms such as Snowflake, BigQuery, and Databricks.

Key Difference: ETL transforms data before loading, while ELT defers transformation until after loading and runs it on the compute of modern cloud platforms.

—

How Cloud Computing Has Influenced the Shift

The rise of cloud platforms like Snowflake, BigQuery, and Databricks has fundamentally changed the rules of the game. Here’s why:

1. Affordable, Scalable Storage

Cloud platforms offer cheap, effectively unlimited storage, which reduces the need to pre-filter or reshape data before loading. With ELT, organizations can load raw data directly into the warehouse and transform it later as needed.

2. Compute Power On Demand

Many cloud warehouses decouple storage from compute, so processing power can be scaled up for heavy transformations and scaled back down afterward. This removes the need for the dedicated transformation servers that traditional ETL pipelines typically require.

3. Agility and Speed

ELT allows teams to load raw data quickly and iterate transformations without waiting for lengthy ETL processes. This agility is essential for modern data workflows like real-time analytics and machine learning.

4. Support for Unstructured and Semi-Structured Data

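Modern cloud warehouses can store and query semi-structured formats such as JSON and XML natively (for example, through Snowflake's VARIANT type), and can ingest columnar file formats like Parquet directly. Handling these formats was often cumbersome with traditional ETL tools.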
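To make "load first, interpret later" concrete for semi-structured data, here is a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in for a cloud warehouse (an assumption for portability; Snowflake and BigQuery have their own JSON types and functions) and assumes the SQLite build includes the JSON functions, which is the default since SQLite 3.38. The `raw_orders` table and its fields are hypothetical. Raw JSON payloads are stored untouched, and nested fields are pulled out with `json_extract` only at query time.

```python
import json
import sqlite3

# In-memory SQLite database standing in for a cloud warehouse (assumption).
conn = sqlite3.connect(":memory:")

# Load step: store each raw JSON payload as-is, with no upfront schema.
conn.execute("CREATE TABLE raw_orders (payload TEXT)")
events = [
    {"order_id": 1, "customer": {"region": "EU"}, "amount": 120.0},
    {"order_id": 2, "customer": {"region": "US"}, "amount": 80.5},
    # Extra field: no schema change is needed to land it.
    {"order_id": 3, "customer": {"region": "EU"}, "amount": 42.0, "coupon": "SPRING"},
]
conn.executemany(
    "INSERT INTO raw_orders (payload) VALUES (?)",
    [(json.dumps(e),) for e in events],
)

# Transform step: extract nested fields at query time (schema-on-read).
rows = conn.execute(
    """
    SELECT json_extract(payload, '$.customer.region') AS region,
           SUM(json_extract(payload, '$.amount'))     AS revenue
    FROM raw_orders
    GROUP BY region
    ORDER BY region
    """
).fetchall()
print(rows)  # [('EU', 162.0), ('US', 80.5)]
```

The third event carries a `coupon` field the others lack; it lands without any table change and can be queried later if someone needs it.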

—

Technical Comparison with Examples

Let’s break down a simple example:

Imagine a company collecting sales data from multiple sources (CRM, e-commerce platforms, and spreadsheets).

ETL Workflow

1. Extract: Pull structured sales data.

2. Transform: Clean, aggregate, and validate the data using an ETL tool.

3. Load: Store the cleaned data in an on-premises warehouse (e.g., Oracle).

Pro: Data is pre-validated and ready for analysis.

Con: Time-consuming and inflexible for new use cases.
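The ETL steps above can be sketched in Python. This is a minimal illustration, not a production pipeline: the three source lists are hypothetical stand-ins for the CRM, e-commerce platform, and spreadsheet, the negative-amount rule is an example business rule, and an in-memory SQLite database stands in for the on-premises warehouse. The point is that validation and aggregation happen before the load, so rejected rows never reach the warehouse.

```python
import sqlite3
from collections import defaultdict

# Hypothetical extracts from the three sources (structured rows only).
crm_rows = [{"date": "2025-01-02", "amount": "100.00"}, {"date": "2025-01-02", "amount": ""}]
shop_rows = [{"date": "2025-01-02", "amount": "49.50"}, {"date": "2025-01-03", "amount": "-5"}]
sheet_rows = [{"date": "2025-01-03", "amount": "20"}]


def extract():
    """Extract: pull the structured sales rows from every source system."""
    return [*crm_rows, *shop_rows, *sheet_rows]


def transform(rows):
    """Transform: validate, clean, and aggregate BEFORE anything is loaded."""
    totals = defaultdict(float)
    rejected = 0
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:  # empty or non-numeric amount
            rejected += 1
            continue
        if amount < 0:  # example business rule: no negative sales
            rejected += 1
            continue
        totals[row["date"]] += amount
    return sorted(totals.items()), rejected


def load(conn, daily_totals):
    """Load: only the cleaned, aggregated result reaches the warehouse."""
    conn.execute("CREATE TABLE daily_sales (sale_date TEXT PRIMARY KEY, total REAL)")
    conn.executemany("INSERT INTO daily_sales VALUES (?, ?)", daily_totals)


conn = sqlite3.connect(":memory:")  # stand-in for the on-premises warehouse
daily_totals, rejected = transform(extract())
load(conn, daily_totals)
print(conn.execute("SELECT * FROM daily_sales ORDER BY sale_date").fetchall())
# [('2025-01-02', 149.5), ('2025-01-03', 20.0)]
print(rejected)  # 2
```

This also shows the trade-off behind the Con: if a new report later needs the rejected rows or per-source totals, the pipeline has to be changed and the data extracted again.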

ELT Workflow

1. Extract: Pull all raw data (structured and semi-structured) from the source systems.

2. Load: Land the raw data in Snowflake as-is.

3. Transform: Use dbt or SQL to clean and enrich data within Snowflake.

Pro: Faster ingestion, flexibility to adjust transformations later.

Con: Raw data storage can get expensive if not monitored.
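For comparison, here is the same hypothetical sales data handled ELT-style. It again uses in-memory SQLite as a stand-in for Snowflake (an assumption; in practice the SQL would run in the warehouse, often organized as dbt models). Raw rows land untouched, a staging view does the cleaning, and downstream views build on it. The table and view names are illustrative, loosely following the staging-then-model layering common in dbt projects.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for Snowflake (assumption)

# Extract + Load: land every raw row untouched, invalid values included.
conn.execute("CREATE TABLE raw_sales (source TEXT, sale_date TEXT, amount_raw TEXT)")
conn.executemany(
    "INSERT INTO raw_sales VALUES (?, ?, ?)",
    [
        ("crm", "2025-01-02", "100.00"),
        ("crm", "2025-01-02", ""),
        ("shop", "2025-01-02", "49.50"),
        ("shop", "2025-01-03", "-5"),
        ("sheet", "2025-01-03", "20"),
    ],
)

# Transform inside the warehouse: a staging view cleans and types the raw rows.
conn.execute(
    """
    CREATE VIEW stg_sales AS
    SELECT source, sale_date, CAST(amount_raw AS REAL) AS amount
    FROM raw_sales
    WHERE amount_raw <> '' AND CAST(amount_raw AS REAL) >= 0
    """
)

# First model: daily totals, equivalent to the ETL output.
conn.execute(
    """
    CREATE VIEW daily_sales AS
    SELECT sale_date, SUM(amount) AS total FROM stg_sales GROUP BY sale_date
    """
)
daily = conn.execute("SELECT * FROM daily_sales ORDER BY sale_date").fetchall()

# New requirement later: totals per source. The raw data is still in the
# warehouse, so this is just another SQL model; nothing is re-extracted.
conn.execute(
    """
    CREATE VIEW sales_by_source AS
    SELECT source, SUM(amount) AS total FROM stg_sales GROUP BY source
    """
)
by_source = conn.execute("SELECT * FROM sales_by_source ORDER BY source").fetchall()

print(daily)      # [('2025-01-02', 149.5), ('2025-01-03', 20.0)]
print(by_source)  # [('crm', 100.0), ('sheet', 20.0), ('shop', 49.5)]
```

Because `raw_sales` is preserved, the second model was added without touching ingestion, which is the flexibility the Pro refers to. The flip side is the Con: the raw table keeps growing whether or not anyone queries it.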

—

Decision Framework: ETL or ELT?

So, how do you decide between ETL and ELT? Here are key factors to consider:

1. Data Volume and Complexity

– ETL: Best for smaller, structured datasets where pre-transformation reduces storage costs.

– ELT: Ideal for large, complex, or semi-structured datasets where cloud scalability shines.

2. Infrastructure

– ETL: Suits on-premises environments or hybrid systems with limited compute power.

– ELT: Designed for cloud-native platforms like Snowflake, BigQuery, or Databricks.

3. Time Sensitivity

– ETL: Works well for batch processing with scheduled updates.

– ELT: Better for near-real-time analytics and agile data workflows.

4. Team Expertise

– ETL: Requires skilled ETL developers for pipeline design and maintenance.

– ELT: Empowers data analysts and engineers to write SQL-based transformations with tools like dbt.
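The four factors above can also be written down as a toy checklist. This sketch is purely illustrative: `PipelineContext` and `suggest_approach` are hypothetical names, and giving every factor one equal vote is an assumption, not an established methodology. Real decisions weigh these factors unequally, and a tie simply means neither approach is clearly favored.

```python
from dataclasses import dataclass


@dataclass
class PipelineContext:
    """Hypothetical inputs mirroring the four factors above."""
    large_or_semi_structured: bool  # 1. data volume and complexity
    cloud_native: bool              # 2. infrastructure
    needs_near_real_time: bool      # 3. time sensitivity
    sql_first_team: bool            # 4. team expertise


def suggest_approach(ctx: PipelineContext) -> str:
    """Toy heuristic: each factor casts one equal vote for ETL or ELT."""
    elt_votes = sum(
        [ctx.large_or_semi_structured, ctx.cloud_native,
         ctx.needs_near_real_time, ctx.sql_first_team]
    )
    etl_votes = 4 - elt_votes  # every factor not favoring ELT favors ETL
    if elt_votes > etl_votes:
        return "ELT"
    if etl_votes > elt_votes:
        return "ETL"
    return "no clear preference"


# A cloud-native team with large, semi-structured data and SQL skills:
print(suggest_approach(PipelineContext(True, True, False, True)))  # ELT
```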

—

Why the Shift Matters in 2025

In 2025, the momentum continues to move toward ELT, driven by cloud adoption and the need for flexibility:

– Faster Time-to-Insight: Organizations can load data quickly and iterate transformations as business needs evolve.

– Cost Efficiency: Pay-as-you-go pricing for cloud storage and compute keeps costs proportional to actual usage, provided consumption is monitored.

– Democratization of Data: Tools like dbt empower data analysts, not just engineers, to handle transformations.

– Support for AI and Machine Learning: ELT’s ability to store raw data allows ML engineers to experiment with features and models.

That said, ETL still holds value for legacy systems and specific scenarios where data must be cleaned before storage to meet compliance or cost constraints.

—

The Bottom Line

The ETL vs. ELT debate isn’t about choosing one winner. It’s about aligning the approach with your organization’s goals, infrastructure, and workflows. As cloud platforms dominate in 2025, ELT is emerging as the preferred choice for its flexibility, scalability, and speed. However, ETL remains a strong contender for structured, on-premises use cases where pre-transformation adds value.

What approach is your team using? Are you shifting towards ELT, or do you still see ETL as essential? Share your thoughts below!
