Data-Skipping Indexes That Actually Help: Bloom, Token, and N-Gram Explained (with Benchmarks)

Why you should care (a quick story)

You’ve got a log table with billions of rows. Dashboards filter on status, users search error messages with LIKE, and your queries are guzzling terabytes. ClickHouse can be lightning-fast, but only if it can skip work. That’s what data-skipping indexes do: they let ClickHouse avoid reading blocks of data that cannot match your predicate. Used well, they cut scanned bytes by orders of magnitude; used blindly, they add overhead. Let’s make them work for you. (ClickHouse)

What “data-skipping index” means in ClickHouse

- Works on MergeTree tables and stores lightweight metadata per granule (default ~8192 rows) so the engine can skip blocks during reads.

- You define: name, expression, TYPE, GRANULARITY.

- They don’t return row locations (not B-trees). They just answer: “Is it even worth reading this block?” (ClickHouse)

The three Bloom-based options (and when to use each)

| Index | Best for | Typical operators / functions | Notes |

|---|---|---|---|

bloom_filter([p_false]) | Set membership on non-key columns (=, IN, rare values) | = IN (on the expression) | Simple to add; optional false-positive rate (default 0.025). (ClickHouse) |

tokenbf_v1(bytes, k, seed) | Word-level search in strings/logs | LIKE (wordish), = IN, hasToken* | Splits by non-alphanumeric tokens; String/FixedString/Map. Size & hash count matter. (ClickHouse) |

ngrambf_v1(n, bytes, k, seed) | Substring search (%foo%, languages w/o word breaks) | LIKE '%…%', hasSubstr* | Indexes n-grams (character windows). Tune n; supports String/FixedString/Map. (ClickHouse) |

Rule of thumb: tokenbf_v1 for word tokens (logs/tags), ngrambf_v1 for free-form substrings, bloom_filter for non-text membership checks (IDs, codes). Always test on your data shape. (ClickHouse)

Minimal, copy-paste-ready examples

1) bloom_filter on a status code

CREATE TABLE logs_raw

(

ts DateTime,

status UInt16,

user_id UInt64,

msg String

)

ENGINE = MergeTree

PARTITION BY toYYYYMM(ts)

ORDER BY (ts, user_id);

ALTER TABLE logs_raw

ADD INDEX ix_status status TYPE bloom_filter(0.01) GRANULARITY 2;

-- If the table already has data:

ALTER TABLE logs_raw MATERIALIZE INDEX ix_status;

The index helps when you filter on rare statuses: WHERE status IN (500, 503). (ClickHouse)

2) tokenbf_v1 for word search (case-folded)

ALTER TABLE logs_raw ADD INDEX ix_token

lowerUTF8(msg) TYPE tokenbf_v1(65536, 3, 42) GRANULARITY 2;

-- Query examples that can use it:

SELECT * FROM logs_raw WHERE hasToken(lowerUTF8(msg), 'timeout');

SELECT * FROM logs_raw WHERE msg ILIKE '% connection %';

Token index splits by non-alphanumeric boundaries; works well for word searches in log text. Tune bytes/hash functions empirically. (ClickHouse)

3) ngrambf_v1 for substring (%…%)

ALTER TABLE logs_raw ADD INDEX ix_ngram

msg TYPE ngrambf_v1(3, 1048576, 3, 123) GRANULARITY 2;

-- Good for substring lookups:

SELECT count() FROM logs_raw WHERE msg LIKE '%conn err%';

Pick n based on queries: n=3 matches 3-char substrings; smaller n increases index size/FPs. (ClickHouse)

“Explained with Benchmarks”: a 10-minute, reproducible micro-benchmark

Goal: show how indexes reduce read_rows/read_bytes and how many granules they drop.

- Create sample data (1–10M rows is fine).

- Run the query without the index; capture metrics.

- Add the index +

MATERIALIZE INDEX(for existing parts). - Rerun; compare metrics.

Measure with EXPLAIN & query log

-- See which indexes would be used:

EXPLAIN indexes=1

SELECT * FROM logs_raw WHERE hasToken(lowerUTF8(msg), 'timeout');

-- Then run and inspect the log:

SYSTEM FLUSH LOGS;

SELECT

query_duration_ms, read_rows, read_bytes

FROM system.query_log

WHERE query ILIKE '%hasToken%' AND type = 'QueryFinished'

ORDER BY event_time DESC

LIMIT 1;

EXPLAIN indexes=1 shows parts/granules before/after and which skip index fired; system.query_log has canonical read_rows/read_bytes to verify impact. (ClickHouse)

What the official docs show: a simple skip-index example reduces work from 100M rows / 800 MB scanned to just 32,768 rows / 360 KB—because most granules were skipped. Expect different numbers on your data, but the pattern is what matters. (ClickHouse)

How to choose between bloom, token, and n-gram (with visuals in mind)

- Think in blocks, not rows. If a value occurs at least once in a block, ClickHouse must still read that block—even if only one row matches. Skipping helps when your predicate is sparse within blocks. (ClickHouse)

- Correlate with ORDER BY. Indexes work best when your non-key column loosely correlates with the primary key (e.g., certain error codes cluster in certain time ranges). Otherwise, almost every block contains matches and nothing is skipped. (ClickHouse)

- Pick the right flavor:

- Rare IDs/codes ⇒

bloom_filter. - Word search (logs/tags) ⇒

tokenbf_v1onlowerUTF8(msg). - Substring / languages without spaces ⇒

ngrambf_v1with smartn. (ClickHouse)

- Rare IDs/codes ⇒

Tuning checklist (fast to apply, easy to undo)

- Start simple:

bloom_filter(0.01)on the column you filter by most, then validate withEXPLAIN indexes=1. (ClickHouse) - Materialize after adding to existing tables:

ALTER TABLE … MATERIALIZE INDEX …. (ClickHouse) - Granularity: begin at

2(i.e., index block ≈2 * 8192rows); raise if lookup overhead dominates, lower if skipping is too coarse. (ClickHouse) - Token/N-gram sizing:

tokenbf_v1(bytes, k, seed)andngrambf_v1(n, bytes, k, seed)require sizing; bytes ↑ → fewer false positives, more disk. Use the docs’ guidance/calculator to estimate. (ClickHouse)

- Verify impact: track

read_rows,read_bytes, and granules dropped inEXPLAIN. If numbers don’t move, the index isn’t helping. (ClickHouse) - Know when to bail: if almost every block matches, the index adds overhead—fix data layout (ORDER BY) or use materialized views/projections instead. (ClickHouse)

Common pitfalls (and fixes)

| Pitfall | Why it hurts | Fix |

|---|---|---|

| Adding skip indexes before fixing ORDER BY | Poor clustering ⇒ few blocks skipped | Align ORDER BY with common predicates; then index what’s left |

Expecting LIKE '%x%' to be fast with tokenbf_v1 | Token index is word-based | Use ngrambf_v1 (substring) or change query to tokens |

| Granularity too high | Cheap lookup, but too coarse to skip | Lower GRANULARITY (1–4) and re-test |

| Wrong casing | Case mismatch ⇒ index not used | Create index on lowerUTF8(col) and query with lowerUTF8() |

| Forgetting to MATERIALIZE | Old parts lack index | ALTER TABLE … MATERIALIZE INDEX … |

| Measuring by latency only | OS cache, concurrency hide effects | Compare read_rows/read_bytes & granules dropped consistently (ClickHouse) |

Quick reference: when each wins

bloom_filter:WHERE error_code IN (502,503),WHERE country='IS'—rare values on non-key columns.tokenbf_v1: log/tags:hasToken(lowerUTF8(msg),'timeout'),ILIKE '% timeout %'on token boundaries.ngrambf_v1: free text / partial matches:LIKE '%conn err%', languages without spaces. (ClickHouse)

Internal link ideas (official docs)

- Understanding data-skipping indexes (concepts, types, best practices). (ClickHouse)

- Skip index examples (syntax for bloom/set/minmax/token/n-gram + verification). (ClickHouse)

EXPLAIN … indexes=1reference (see parts/granules before/after). (ClickHouse)system.query_logfields (read_rows,read_bytes, duration). (ClickHouse)- “Use data-skipping indices where appropriate” (pragmatic guidance). (ClickHouse)

Summary

Skip indexes are not magic; they’re pruning hints. Use them after you’ve picked a good ORDER BY. Start with a simple bloom_filter for rare values, then add tokenbf_v1 (tokens) or ngrambf_v1 (substrings) where text search is hot. Prove value with EXPLAIN and system.query_log. If scanned bytes don’t drop, rethink the approach. (ClickHouse)

Call to action: Pick one painful text filter today. Add a single bloom_filter or ngrambf_v1, materialize, and measure read_rows before/after. Keep what pays rent—drop what doesn’t.

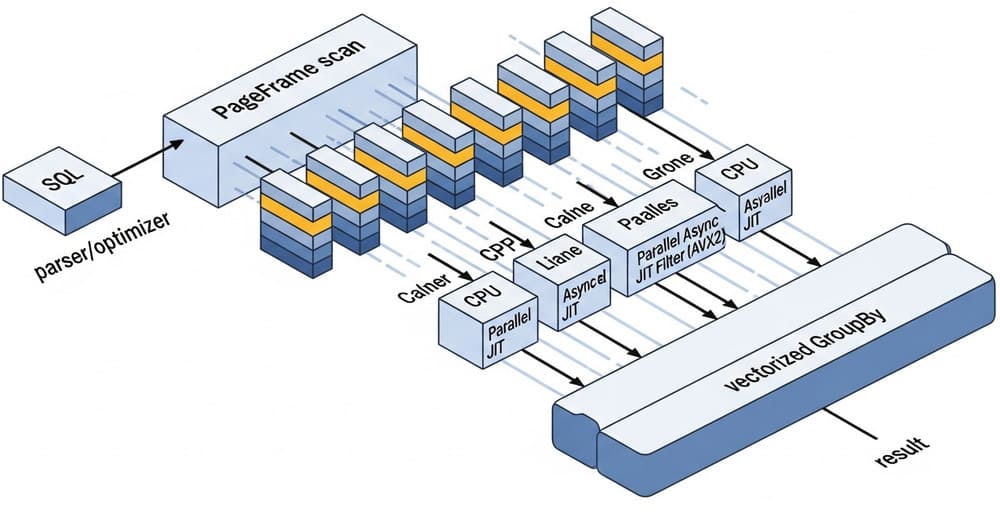

Image prompt

“A clean, modern diagram showing ClickHouse MergeTree blocks and skip indexes dropping granules: three panels for bloom_filter, tokenbf_v1, and ngrambf_v1; highlight pruned granules vs scanned; minimalistic, high contrast, isometric 3D.”

Tags

#ClickHouse #SkippingIndex #BloomFilter #tokenbf #ngrambf #Performance #DataEngineering #OLAP #Logs #Benchmarks

Leave a Reply