Arrow Flight SQL from Python with VeloDB: How to Get “10×-Style” Read Speedups

Meta description (158 chars):

Supercharge Python reads from VeloDB (Apache Doris) with Arrow Flight SQL + ADBC. Setup, code, tuning (timeouts, gRPC size), and pitfalls for real-time analytics.

Why this matters

Ever waited minutes for pandas.read_sql() to pull a wide result? With VeloDB (built on Apache Doris) you can switch the transport to Arrow Flight SQL and stream columnar batches directly into Python—eliminating row-by-row serialization overhead. In Doris/VeloDB, Arrow Flight SQL is an officially supported high-speed path and, in some scenarios, the docs report “up to 100×” better transfer performance than MySQL/JDBC/ODBC-based fetches. (docs.velodb.io)

What Arrow Flight SQL is (in plain terms)

Arrow Flight SQL is a standard RPC protocol (gRPC) for SQL engines to deliver data in Apache Arrow’s columnar format. Clients use ADBC Flight SQL drivers (DB-API) to run queries and fetch Arrow tables with near-zero copy. Less CPU wasted transforming rows to columns → more bandwidth for your actual analytics. (Apache Arrow)

How this fits VeloDB / Doris architecture

- Frontends (FE): plan/coordinate queries, expose Flight SQL endpoint.

- Backends (BE): execute queries, store columnar data, and often serve the result stream to clients. That’s why BE network access matters for Flight SQL. (doris.apache.org)

Enabling/connecting (self-hosted / BYOC)

Doris/VeloDB expose Flight SQL via gRPC ports. Server-side you typically set:

fe.conf: arrow_flight_sql_port(e.g., 8070)be.conf: arrow_flight_sql_port(e.g., 8050)

Then restart; logs confirm startup. (doris.apache.org)

Networking gotcha: Flight SQL clients often pull results from BE nodes. If BEs aren’t reachable, configure public_host/arrow_flight_sql_proxy_port or put a reverse proxy (e.g., Nginx) in front of BEs. (doris.apache.org)

BYOC note (VeloDB Cloud): the VeloDB agent runs in your VPC and uses PrivateLink/private endpoints for control. Ensure Flight SQL ports/paths you use are reachable per the BYOC guides. (docs.velodb.io)

Quick start from Python (ADBC Flight SQL)

1) Install drivers

pip install adbc-driver-manager adbc-driver-flightsql pyarrow pandas polars

(Driver packages are published on PyPI.) (docs.velodb.io)

2) Connect and fetch Arrow directly

import adbc_driver_flightsql.dbapi as flightsql

import adbc_driver_manager

import pyarrow as pa

import pandas as pd

import polars as pl

# VeloDB / Doris FE Flight endpoint

URI = "grpc://fe.example.company:8070"

conn = flightsql.connect(

URI,

db_kwargs={

adbc_driver_manager.DatabaseOptions.USERNAME.value: "user",

adbc_driver_manager.DatabaseOptions.PASSWORD.value: "pass",

# Optional: lift default gRPC message size cap for big batches

# adbc_driver_flightsql.DatabaseOptions.WITH_MAX_MSG_SIZE.value: "2147483647",

},

)

cur = conn.cursor()

cur.execute("""

SELECT ts, user_id, metric_a, metric_b

FROM prod.fact_events

WHERE ts >= now() - interval 1 day

""")

# Fetch as an Arrow table (columnar) then use Pandas/Polars zero-copy

tbl = cur.fetchallarrow() # -> pyarrow.Table

df = tbl.to_pandas() # Pandas

pl_df = pl.from_arrow(tbl) # Polars

cur.close(); conn.close()

The ADBC cookbook shows recipes for authenticated connections, timeouts, and raising the max gRPC message size when you hit “received message larger than max.” (Apache Arrow)

Performance checklist (what actually moves the needle)

- Use Arrow end-to-end

Fetch Arrow from the cursor (fetchallarrow()), then hand it to Pandas/Polars. This avoids row→column conversions on the client side. (Apache Arrow) - Tune Flight SQL driver options

Set connection/statement timeouts; increase WITH_MAX_MSG_SIZE for very wide batches; optionally enable cookie middleware if your gateway requires it. (Apache Arrow) - Plan for BE reachability

Flight SQL often streams from BEs. If they’re private, configurepublic_host/proxy or expose appropriate egress paths; otherwise the client stalls or times out. (doris.apache.org) - Parallel endpoints (advanced)

Doris 3.0.4+ can return multiple endpoints; clients then pull from several BEs in parallel for some query shapes (setenable_parallel_result_sink=truewhen applicable). Measure carefully; small results don’t benefit. (doris.apache.org) - Reduce bytes first

Project only needed columns, filter early, and consider async materialized views for hot aggregates—let the warehouse do the heavy lifting, then stream fewer bytes. (doris.apache.org)

Reality check: The official docs say Flight SQL can be “up to 100× faster” than MySQL/JDBC/ODBC in some scenarios. Your mileage depends on schema width, network, CPU, and whether you actually consume Arrow on the client. Treat “10×” as a goal, not a guarantee. (docs.velodb.io)

Comparison table: Flight SQL vs. classic drivers

| Aspect | Flight SQL (Arrow + gRPC) | MySQL/JDBC/ODBC path |

|---|---|---|

| Data format | Columnar Arrow batches | Row-oriented |

| Client cost | Low (no row→column reshaping) | Extra CPU to reshape |

| Transport | gRPC streaming, large frames | Text/binary row fetch |

| Python integration | ADBC DB-API; Arrow → Pandas/Polars | DB-API; usually rows→DataFrame |

| Network prereqs | FE + often BE reachability | Typically FE only |

| When it shines | Wide tables, big scans, data-science export | Small OLTP-style lookups |

Sources: protocol & driver docs; Doris/VeloDB Flight SQL guides. (Apache Arrow)

End-to-end example with tuning

Set timeouts and bigger message size

from adbc_driver_flightsql import ConnectionOptions, DatabaseOptions

conn = flightsql.connect(

URI,

db_kwargs={

adbc_driver_manager.DatabaseOptions.USERNAME.value: "user",

adbc_driver_manager.DatabaseOptions.PASSWORD.value: "pass",

DatabaseOptions.WITH_MAX_MSG_SIZE.value: "2147483647", # ~2 GiB

},

)

conn.adbc_connection.set_options(

**{

ConnectionOptions.TIMEOUT_FETCH.value: 60.0,

ConnectionOptions.TIMEOUT_QUERY.value: 60.0,

ConnectionOptions.TIMEOUT_UPDATE.value: 60.0,

}

)

These options are straight from the ADBC Flight SQL recipes. (Apache Arrow)

Common pitfalls (and fixes)

- BEs not reachable: Can’t fetch? It’s likely networking. Add a reverse proxy or configure

public_host/arrow_flight_sql_proxy_portso clients can reach the BE that holds the result. (doris.apache.org) - Hitting gRPC size limits: Increase

WITH_MAX_MSG_SIZEor fetch fewer columns per query. (Apache Arrow) - Expecting speedups but still slow: If your code converts Arrow → rows prematurely, you lose the benefit. Keep data in Arrow as long as possible. (Apache Arrow)

- Small result sets: For tiny queries, transport doesn’t dominate; don’t expect miracles—optimize the SQL or add an async MV instead. (doris.apache.org)

Summary & call-to-action

Switching Python reads to Arrow Flight SQL is the fastest way to cut “data copy tax” when exporting analytics data from VeloDB/Doris. Start with ADBC, fetch Arrow tables, tune timeouts and message size, and verify BE reachability. If your workload is wide and scan-heavy, the gains can be dramatic. Try one pipeline today: migrate a single read path (wide table → Pandas/Polars) and compare wall-clock times.

Internal link ideas (for your site)

- “VeloDB Flight SQL Setup: Ports, Proxies, and Security in BYOC”

- “Async Materialized Views in Doris: Designing Read-Friendly Aggregates”

- “Pandas vs. Polars with Arrow: Choosing Your Python Frame”

- “Designing Tables for Fast Export: Partitioning & Sort Keys in Doris”

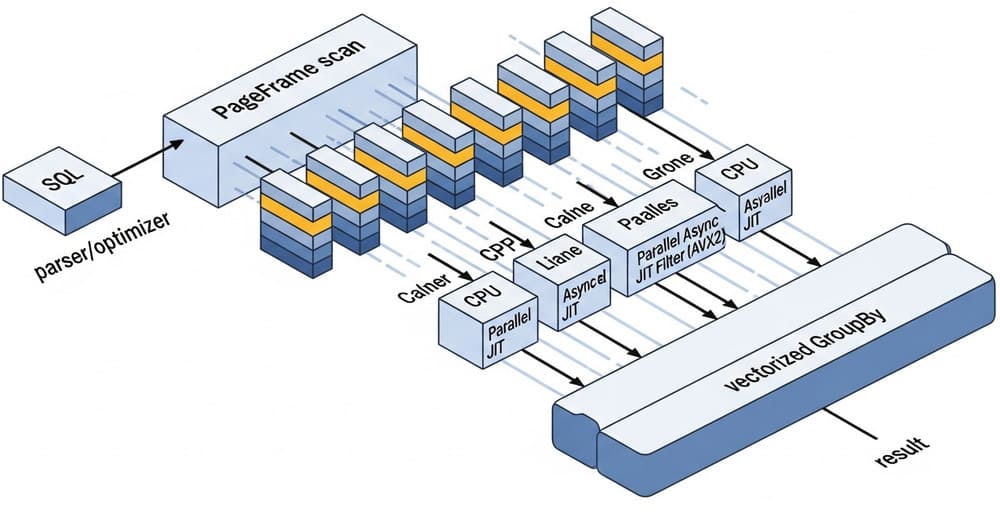

Image prompt (for DALL·E / Midjourney)

“A clean, modern diagram of a VeloDB (Apache Doris) cluster streaming query results via Arrow Flight SQL to Python clients (Pandas/Polars). Show FE/BE nodes, gRPC flows, and Arrow columnar batches. Minimalist, high-contrast, isometric vector style.”

Tags

#VeloDB #ApacheDoris #ArrowFlightSQL #ADBC #Python #Pandas #Polars #RealTimeAnalytics #DataEngineering #HighPerformanceIO

Official references

- VeloDB Flight SQL guide (setup, code, performance note) (docs.velodb.io)

- Apache Doris Flight SQL doc (ports, BE reachability, parallel endpoints) (doris.apache.org)

- Apache Arrow Flight SQL spec (protocol fundamentals) (Apache Arrow)

- ADBC Python docs & cookbook (DB-API usage, timeouts, max gRPC size, Pandas/Polars) (Apache Arrow)

If you want, I can add a tiny benchmarking harness (MySQL vs. Flight SQL) that you can run in your environment to quantify wins for your schemas.

Leave a Reply