Dagster Chapter 1 — Hello Assets: CSV → DuckDB

This is where we start.

You’ll see what Dagster means by an asset, how to run it locally, and why this approach feels cleaner than a pile of ad-hoc scripts.

We’ll use one small CSV file, turn it into a few tidy DataFrames, and save the result into a DuckDB file. Everything runs in Docker so your environment stays reproducible.

What You’ll Learn

- What Dagster assets are and how they describe data flow.

- How to run Dagster locally in Docker.

- How to materialize a simple graph and check the result in DuckDB.

- How to run a test end-to-end.

Why Start with Assets

In Dagster, an asset is a data product with a name, lineage, and history.

Think of it as a file, table, or dataset that Dagster knows how to build and track.

Instead of a script that just “runs,” an asset says, “Here’s what I produce, here’s what I depend on, and here’s where the result lives.”

When you build with assets, you automatically get:

- Lineage: Dagster knows which asset depends on which.

- Metadata: each materialization is timestamped and stores whatever you attach to it, such as row counts and column names.

- Observability: logs, previews, and history appear in the UI.

- Selective runs: you can rebuild only the assets you select instead of the whole graph.

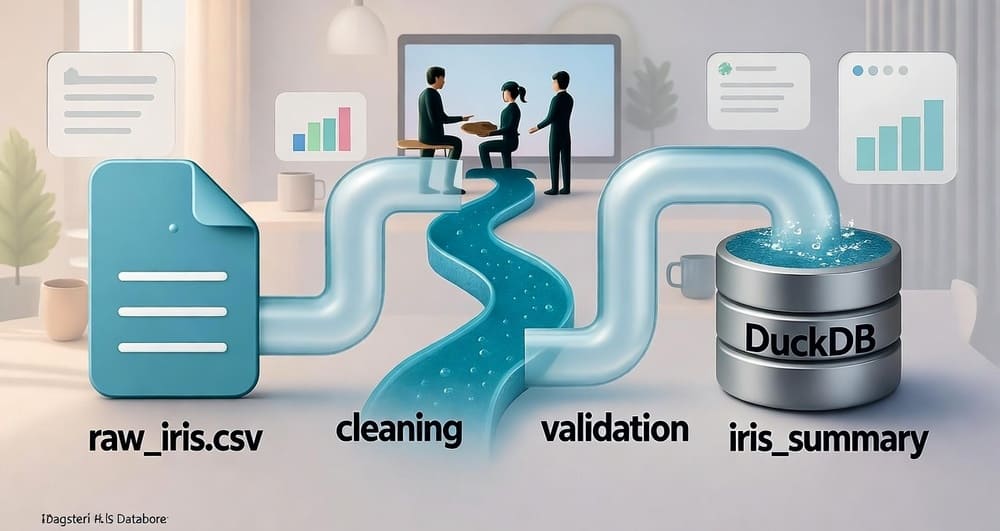

Our first graph looks like this:

graph TD

A[raw_iris] --> B[iris_clean] --> C[iris_summary]

Project Layout

chapter-01-hello-assets/
    data/
        raw/iris.csv
        warehouse/
    docker/
        Dockerfile
        docker-compose.yaml
        dagster_home/dagster.yaml
        scripts/start.sh
        scripts/stop.sh
    src/pipelines_the_right_way/ch01/
        assets.py
        defs.py
    tests/test_assets.py
    Makefile
    requirements.txt

Each folder serves a clear purpose:

- data/ holds the CSV and the DuckDB output.

- docker/ defines a light container image and turns off telemetry.

- src/ keeps the Dagster code.

- tests/ includes a small test that materializes everything once.

- Makefile wraps common commands.

Run It

From inside the project folder:

bash docker/scripts/start.sh

Then open http://localhost:3000.

Click Materialize all. Dagster will run three assets:

- raw_iris reads data/raw/iris.csv and emits metadata.

- iris_clean checks column types and drops nulls.

- iris_summary groups data by species and writes a DuckDB file in data/warehouse/iris.duckdb.

When you’re done:

bash docker/scripts/stop.sh

Check the result manually:

duckdb data/warehouse/iris.duckdb

D SELECT * FROM iris_summary;

How the Code Works

assets.py

Each asset is a plain Python function decorated with @asset.

To keep paths flexible, we compute them at runtime:

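import os
from pathlib import Path

# ROOT (the project root) is defined elsewhere in assets.py and is not shown here.

def _paths():
    # Read PTWR_DATA_DIR on every call so tests and containers can redirect I/O.
    data_dir = Path(os.getenv("PTWR_DATA_DIR", ROOT / "data"))
    raw_csv = data_dir / "raw" / "iris.csv"
    warehouse_dir = data_dir / "warehouse"
    duckdb_path = warehouse_dir / "iris.duckdb"
    return data_dir, raw_csv, warehouse_dir, duckdb_path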

This avoids the usual “path not found” issues when tests or containers run in different environments.
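The reason for resolving paths inside a function rather than at import time is easiest to see in a small experiment. The sketch below uses only the standard library. `ROOT` is a hypothetical stand-in (the current working directory), and `resolve_paths` and `demo` are illustrative names, not functions from the chapter's code.

```python
import os
import tempfile
from pathlib import Path

# Hypothetical stand-in for the project root used by the chapter's code.
ROOT = Path.cwd()


def resolve_paths():
    # The environment is read at call time, so later changes are picked up.
    data_dir = Path(os.getenv("PTWR_DATA_DIR", ROOT / "data"))
    return data_dir / "raw" / "iris.csv", data_dir / "warehouse" / "iris.duckdb"


def demo():
    # Remember any existing value so the demo leaves the environment unchanged.
    saved = os.environ.pop("PTWR_DATA_DIR", None)
    try:
        default_csv, _ = resolve_paths()
        with tempfile.TemporaryDirectory() as tmp:
            os.environ["PTWR_DATA_DIR"] = tmp
            override_csv, override_db = resolve_paths()
        return default_csv, override_csv, override_db, Path(tmp)
    finally:
        os.environ.pop("PTWR_DATA_DIR", None)
        if saved is not None:
            os.environ["PTWR_DATA_DIR"] = saved


default_csv, override_csv, override_db, tmp_dir = demo()
```

Had the paths been module-level constants, the second call would still point at the default location, which is the failure a test with a temporary folder would run into.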

- raw_iris loads the CSV and emits metadata (row count, column names, preview).

- iris_clean checks for missing columns, converts types, and removes nulls.

- iris_summary groups by species and writes the result to DuckDB:

con = duckdb.connect(str(duckdb_path))

con.register("iris_summary_df", summary)

con.execute("CREATE OR REPLACE TABLE iris_summary AS SELECT * FROM iris_summary_df")

defs.py

Dagster needs to know what assets exist:

from dagster import Definitions

from .assets import raw_iris, iris_clean, iris_summary

defs = Definitions(assets=[raw_iris, iris_clean, iris_summary])

When you run dagster dev -m pipelines_the_right_way.ch01.defs, Dagster loads this list and shows the graph in the UI.

Running in Docker

The Compose file mounts:

- ../data to /app/data

- ./dagster_home to /app/.dagster

That keeps your data and instance config outside the container image.

Telemetry is turned off in this setup (Dagster enables it by default), so no usage data leaves the container.

Testing

Run all tests with:

make test

The test creates a temporary data folder, sets environment variables before importing assets, copies the sample CSV, and materializes the graph.

It checks that the DuckDB file exists and that iris_summary has rows.

This pattern keeps test runs repeatable and never touches your real data/ directory.

Common Issues and Fixes

- ImportError: attempted relative import with no known parent package
  → Run with dagster dev -m pipelines_the_right_way.ch01.defs.

- No instance configuration file warning
  → We already mount docker/dagster_home/dagster.yaml.

- No output file created
  → Make sure you materialized all assets; check the data/ mount in Docker.

- Missing columns in CSV
  → iris_clean validates headers and fails clearly if they don’t match.
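The last item describes a check that is simple to sketch with pandas. The version below is an illustration, not the chapter's `iris_clean`: the column names in `EXPECTED_COLUMNS` are an assumption about the CSV header, `clean_iris` is a hypothetical name, and the sample values are invented.

```python
import pandas as pd

# Assumed header of iris.csv; adjust to match the actual file.
EXPECTED_COLUMNS = [
    "sepal_length",
    "sepal_width",
    "petal_length",
    "petal_width",
    "species",
]


def clean_iris(df: pd.DataFrame) -> pd.DataFrame:
    # Fail early with a message that names the missing headers.
    missing = [col for col in EXPECTED_COLUMNS if col not in df.columns]
    if missing:
        raise ValueError(f"Missing columns in CSV: {missing}")

    out = df[EXPECTED_COLUMNS].copy()
    # Convert measurements to numbers; unparseable values become NaN.
    for col in EXPECTED_COLUMNS[:-1]:
        out[col] = pd.to_numeric(out[col], errors="coerce")
    # Drop any row that still has a missing value.
    return out.dropna().reset_index(drop=True)


# Invented sample: the second row has an unparseable measurement.
sample = pd.DataFrame(
    {
        "sepal_length": [5.1, 4.9, 6.3],
        "sepal_width": [3.5, 3.0, 3.3],
        "petal_length": ["1.4", "n/a", "6.0"],
        "petal_width": [0.2, 0.2, 2.5],
        "species": ["setosa", "setosa", "virginica"],
    }
)
cleaned = clean_iris(sample)
```

Raising on a missing header turns a confusing downstream `KeyError` into an error that points straight at the input file.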

Observability in the UI

Click each asset to see:

- metadata you emitted,

- row counts and previews,

- compute kind (“pandas” or “duckdb”),

- the dependency graph connecting all assets.

This visibility is why assets are worth using even for small projects.

Production-Ready Touches

Even this simple setup follows good habits:

- Pinned dependencies for reproducibility.

- Dockerized environment — no local Python mess.

- Tests that cover the full pipeline.

- Idempotent DuckDB writes (CREATE OR REPLACE TABLE).

Looking Ahead

In the next chapter, we’ll replace a static CSV with a live API and see how assets handle real data and retries.

By the end of this book, you’ll have learned how to scale from this small example to real-world, testable, observable data pipelines.

For now, if these boxes check out, you’re ready:

- UI opens at http://localhost:3000.

- Materialization creates data/warehouse/iris.duckdb.

- SQL query shows three species.

- Tests pass.

If everything works, congratulations — you’ve just built your first Dagster pipeline the right way.

(End of Chapter 1)

Official Dagster: https://dagster.io/

Github for Chapter 1: https://github.com/alexnews/pipelines-the-right-way/tree/main/chapter-01-hello-assets