How Snowflake Cortex Changes MLOps & Architectures — an actionable, runnable guide (ingest → embeddings → RAG → model serving)

Snowflake Cortex folds vector search, embeddings, and retrieval-augmented generation (RAG) capabilities into the data warehouse. That changes how teams design MLOps and architectures: fewer moving parts, tighter governance, and an option to run RAG pipelines entirely inside Snowflake. Below is a practical, readable walkthrough of what changes, architecture patterns, governance points — and a copy-pasteable POC you can run (SQL + Python) to see Cortex in action.

Quick sourcing notes: the Cortex SQL objects and functions used below are documented in Snowflake’s docs:

- CREATE CORTEX SEARCH SERVICE.

- The vector/embedding functions and the VECTOR type are official features.

- SEARCH_PREVIEW and the AI completion functions (AI_COMPLETE/COMPLETE) are the supported query/completion primitives.

Why Cortex matters for MLOps (short)

- Consolidation of components: vectors + data + queries live in Snowflake, reducing infra sprawl (no separate vector DB required).

- Native governance: embeddings and search are subject to Snowflake role-based access controls and auditing, simplifying compliance.

- Simpler RAG orchestration: retrieve relevant context using SQL, then call an LLM function inside Snowflake. The entire RAG flow can remain within the warehouse (useful for auditability and data locality).

High-level architecture (classic vs Cortex-enabled)

Classic RAG:

- Ingest → store raw docs (S3) → pipeline to vector DB → serve vectors via API → app calls vector DB + external LLM → model serving + app.

Cortex-enabled:

- Ingest → store raw docs in Snowflake → compute embeddings inside Snowflake (VECTOR type) → create Cortex Search Service → query + AI_COMPLETE inside Snowflake → return result to app.

Benefits: fewer services to operate, single security policy, easier lineage.

End-to-end POC (runnable): support-tickets example

Requirements:

- Snowflake account with Cortex features enabled and an appropriate role/warehouse. (Follow Snowflake admin steps in your account.)

Step 0 — create a demo database & warehouse (SQL)

CREATE OR REPLACE WAREHOUSE cortex_wh WAREHOUSE_SIZE = 'XSMALL' AUTO_SUSPEND=60 AUTO_RESUME=TRUE;

CREATE OR REPLACE DATABASE demo_cortex;

USE DATABASE demo_cortex;

CREATE OR REPLACE SCHEMA public;

Step 1 — create a small table and insert demo rows

CREATE OR REPLACE TABLE public.support_tickets (
  ticket_id INT,
  ticket_text STRING,
  created_at TIMESTAMP_NTZ DEFAULT CURRENT_TIMESTAMP
);

INSERT INTO public.support_tickets(ticket_id, ticket_text) VALUES
  (1, 'Payment failed when using credit card ending 1234'),
  (2, 'App crashes when exporting CSV file'),
  (3, 'How can I change my billing address?'),
  (4, 'Refund requested for duplicate charge on order 9876'),
  (5, 'How to enable two-factor authentication for my account?');

Step 2 — add VECTOR column and compute embeddings in SQL

Snowflake exposes embedding functions (e.g., EMBED_TEXT_768 / AI_EMBED) and a native VECTOR type; use the function available in your account/region. The VECTOR element type is FLOAT or INT, and the dimension must match the model's output (768 here).

ALTER TABLE public.support_tickets
  ADD COLUMN embedding VECTOR(FLOAT, 768);

-- Example using EMBED_TEXT_768 (replace model name with one available to your account)
UPDATE public.support_tickets
  SET embedding = SNOWFLAKE.CORTEX.EMBED_TEXT_768('e5-base-v2', ticket_text);
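To make the role of the embedding column concrete, here is a minimal sketch of cosine similarity, a common measure for comparing embedding vectors. The three-dimensional vectors are made-up stand-ins for real 768-dimensional embeddings, and the function is plain Python for illustration, not Snowflake's implementation.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Toy vectors (assumed values) standing in for 768-dimensional embeddings.
billing_query = [0.9, 0.1, 0.0]
billing_ticket = [0.8, 0.2, 0.1]
crash_ticket = [0.0, 0.1, 0.9]

# The billing ticket should score closer to the billing query than the crash ticket does.
print(cosine_similarity(billing_query, billing_ticket))
print(cosine_similarity(billing_query, crash_ticket))
```

Semantic search ranks rows by a score like this one, which is why texts about the same topic surface together even when they share few exact words.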

Notes & tips

- If your texts are long, chunk them before embedding (chunk ~256–512 tokens) to improve retrieval quality.

- AI_EMBED is the newer generic function in some regions; confirm the exact name in your account docs.
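The chunking tip can be sketched as follows. This is a rough illustration that treats whitespace-separated words as a proxy for tokens; real tokenizers count differently, and the `max_tokens` and `overlap` defaults are assumptions to tune for your model.

```python
def chunk_text(text, max_tokens=256, overlap=32):
    """Split text into overlapping chunks, using words as a rough token proxy."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    words = text.split()
    step = max_tokens - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the last window already reached the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
print(chunk_text(sample, max_tokens=4, overlap=1))
```

Each chunk would then become its own row (with a reference back to the source document) before the embedding step runs.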

Step 3 — create a Cortex Search Service (managed index)

This call builds a managed service that Snowflake prepares for low-latency serving. Use a dedicated warehouse for indexing / serving.

CREATE OR REPLACE CORTEX SEARCH SERVICE demo_tickets_search
  ON ticket_text
  WAREHOUSE = cortex_wh
  TARGET_LAG = '5 minutes'
  EMBEDDING_MODEL = 'e5-base-v2' -- optional; the service embeds ticket_text itself, so use a model Cortex Search supports in your region
AS (
  SELECT ticket_id, ticket_text, embedding
  FROM public.support_tickets
);

What this does: Snowflake transforms the source rows into the internal format for Cortex and builds the index. Initialization behavior is documented (you can initialize immediately or on schedule).

Step 4 — preview searches via SQL

Use SEARCH_PREVIEW to test a natural language query from SQL/worksheet. List the columns you want back in the "columns" field:

SELECT SNOWFLAKE.CORTEX.SEARCH_PREVIEW(
  'demo_cortex.public.demo_tickets_search',
  '{ "query":"how do I change billing address", "columns":["ticket_id","ticket_text"], "limit": 3 }'
);

This returns a JSON string whose results array holds the nearest matches; parse it and extract ticket_text for RAG.
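A minimal sketch of pulling the matched text out of a preview response on the client side. The sample payload below is hand-written, not real service output; its shape (a top-level "results" array of objects keyed by column name) and the "request_id" value are assumptions to check against what your account returns.

```python
import json

def extract_matches(raw_response, column="ticket_text"):
    """Return the requested column from each match in a SEARCH_PREVIEW-style JSON string."""
    response = json.loads(raw_response)
    return [match[column] for match in response.get("results", []) if column in match]

# Hand-written sample (assumed shape), standing in for the string the query returns.
sample_response = json.dumps({
    "results": [
        {"ticket_id": 3, "ticket_text": "How can I change my billing address?"},
        {"ticket_id": 4, "ticket_text": "Refund requested for duplicate charge on order 9876"},
    ],
    "request_id": "hypothetical-request-id",
})

print(extract_matches(sample_response))
```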

Step 5 — simple RAG inside Snowflake (SQL-only)

Retrieve top matches and call an LLM with them using AI_COMPLETE:

WITH top_docs AS (
  -- SEARCH_PREVIEW is a scalar function that returns a JSON string,
  -- so parse it and flatten the results array into rows
  SELECT r.value:ticket_text::STRING AS ctx
  FROM TABLE(FLATTEN(INPUT => PARSE_JSON(
    SNOWFLAKE.CORTEX.SEARCH_PREVIEW(
      'demo_cortex.public.demo_tickets_search',
      '{"query":"how to change billing address","columns":["ticket_text"],"limit":3}'
    )
  )['results'])) r
)
SELECT AI_COMPLETE(
  MODEL => 'llama3.1-8b', -- example; use a model available in your account
  PROMPT => 'You are a helpful assistant. Use the context below to answer the question.' || '\n\n' ||
            LISTAGG(ctx, '\n\n') || '\n\nQuestion: How do I change my billing address?'
) AS rag_answer
FROM top_docs;

AI_COMPLETE (the newer name; COMPLETE is the older one) runs the model call inside Snowflake, keeping traffic and logs internal to the warehouse.
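If you assemble the prompt in application code instead of SQL, the same structure can be sketched in Python. The function name and the `max_context_chars` budget are illustrative assumptions; the instruction text mirrors the SQL prompt in this step.

```python
SYSTEM_INSTRUCTION = (
    "You are a helpful assistant. Use the context below to answer the question."
)

def build_rag_prompt(question, contexts, max_context_chars=4000):
    """Join retrieved passages into one prompt, stopping once the character budget is spent."""
    kept, used = [], 0
    for passage in contexts:
        passage = passage.strip()
        if not passage:
            continue  # skip empty retrieval results
        if used + len(passage) > max_context_chars:
            break  # passages are assumed ordered best-first, so stop at the first overflow
        kept.append(passage)
        used += len(passage)
    context_block = "\n\n".join(kept)
    return f"{SYSTEM_INSTRUCTION}\n\n{context_block}\n\nQuestion: {question}"

print(build_rag_prompt(
    "How do I change my billing address?",
    ["How can I change my billing address?", "Refund requested for duplicate charge on order 9876"],
))
```

Capping the context keeps prompt size, and therefore token cost, predictable as the number of retrieved passages grows.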

Step 6 — query Cortex from Python (optional): simple connector example

import json
import snowflake.connector

conn = snowflake.connector.connect(
    user='YOU', password='PW', account='ACCOUNT', warehouse='CORTEX_WH',
    database='DEMO_CORTEX', schema='PUBLIC', role='YOUR_ROLE'
)
cur = conn.cursor()
try:
    payload = json.dumps({
        "query": "refund duplicate charge",
        "columns": ["ticket_id", "ticket_text"],
        "limit": 3,
    })
    cur.execute(
        "SELECT SNOWFLAKE.CORTEX.SEARCH_PREVIEW('demo_cortex.public.demo_tickets_search', %s)",
        (payload,)
    )
    rows = cur.fetchall()
    for r in rows:
        print(r[0])  # a JSON string; use json.loads(r[0]) to work with the matches
finally:
    # Always release the cursor and connection, even if the query fails
    cur.close()
    conn.close()

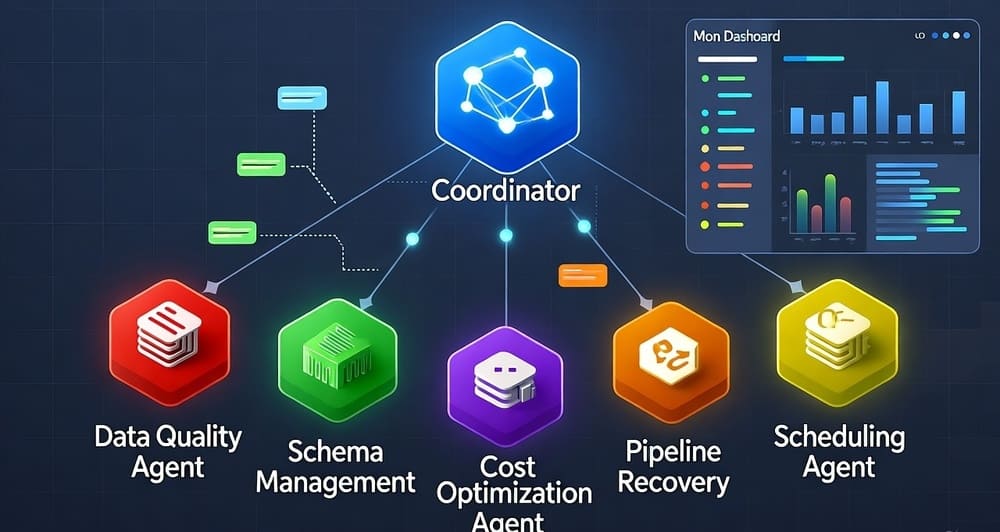

How Cortex changes MLOps workflows — practical implications

1) Pipeline simplification

Traditional pipeline: extractor → blob storage → ETL → vector DB → orchestration (Airflow) → model serving.

With Cortex, many steps compress to: ingest → enriched Snowflake tables (with VECTOR) → Cortex Search Service → RAG calls (AI_COMPLETE) — fewer moving services to monitor.

2) Governance and lineage

- Snowflake access controls mean embeddings + index access respects the same data-access policies you already manage. This simplifies audits, data classification, and compliance checks.

3) Observability & cost control

- Monitor embedding and LLM function calls via Snowflake’s usage views (METERING_DAILY_HISTORY etc.) and standard ACCOUNT_USAGE for cost attribution. Plan refresh intervals (TARGET_LAG) to control compute costs.
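As a rough sketch of the cost-attribution idea, the snippet below totals credits per service type from rows you have already fetched. The query text, the column names, and the 'AI_SERVICES' label are assumptions to confirm against your account's usage views, and the sample rows are invented for illustration.

```python
from collections import defaultdict

# Assumed query shape; confirm the view, columns, and service-type values in your account.
USAGE_QUERY = """
SELECT usage_date, service_type, credits_used
FROM SNOWFLAKE.ACCOUNT_USAGE.METERING_DAILY_HISTORY
WHERE usage_date >= DATEADD('day', -30, CURRENT_DATE())
"""

def credits_by_service(rows):
    """Sum credits per service type from (usage_date, service_type, credits_used) rows."""
    totals = defaultdict(float)
    for _usage_date, service_type, credits_used in rows:
        totals[service_type] += float(credits_used)
    return dict(totals)

def over_budget(totals, budgets):
    """Return the service types whose total exceeds its configured budget."""
    return sorted(s for s, used in totals.items() if s in budgets and used > budgets[s])

# Invented sample rows standing in for cursor.fetchall() output.
sample_rows = [
    ("2024-01-01", "AI_SERVICES", 1.5),
    ("2024-01-02", "AI_SERVICES", 2.0),
    ("2024-01-01", "WAREHOUSE_METERING", 4.0),
]
totals = credits_by_service(sample_rows)
print(totals)
print(over_budget(totals, {"AI_SERVICES": 3.0, "WAREHOUSE_METERING": 10.0}))
```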

4) Deployment & CI/CD

- Treat Cortex Search Service creation & SQL that populates embeddings as part of infra-as-code (e.g., Terraform + SQL scripts or CI jobs).

- Keep connector/config artifacts (model names, target lag, warehouse names) in Git and promote through environments.
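One way to keep these settings in Git is a small config object per environment that renders the DDL at deploy time. This is a sketch under stated assumptions: the class, function, and the `dev` values are hypothetical, and the rendered statement only covers the clauses used in Step 3.

```python
import re
from dataclasses import dataclass

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$.]*$")
_TARGET_LAG = re.compile(r"^\d+ (seconds|minutes|hours|days)$")

@dataclass(frozen=True)
class SearchServiceConfig:
    name: str
    search_column: str
    warehouse: str
    target_lag: str
    source_query: str

def render_create_statement(cfg):
    """Render a CREATE CORTEX SEARCH SERVICE statement from version-controlled settings."""
    for value in (cfg.name, cfg.search_column, cfg.warehouse):
        if not _IDENTIFIER.match(value):
            raise ValueError(f"not a plain identifier: {value!r}")
    if not _TARGET_LAG.match(cfg.target_lag):
        raise ValueError(f"unexpected target lag: {cfg.target_lag!r}")
    return (
        f"CREATE OR REPLACE CORTEX SEARCH SERVICE {cfg.name}\n"
        f"  ON {cfg.search_column}\n"
        f"  WAREHOUSE = {cfg.warehouse}\n"
        f"  TARGET_LAG = '{cfg.target_lag}'\n"
        f"AS (\n  {cfg.source_query}\n);"
    )

# Hypothetical dev-environment settings; a prod config would differ only in values.
dev = SearchServiceConfig(
    name="demo_tickets_search",
    search_column="ticket_text",
    warehouse="cortex_wh",
    target_lag="5 minutes",
    source_query="SELECT ticket_id, ticket_text FROM public.support_tickets",
)
print(render_create_statement(dev))
```

A CI job could run the rendered statement through the connector, so promoting a change between environments becomes a reviewed diff of config values.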

5) When Cortex is NOT ideal

- Ultra-low-latency global search at massive scale (specialized vector DBs with customized ANN indexes may still beat general-purpose warehouse serving).

- If you must avoid vendor lock-in, embedding vectors into Snowflake ties you to the platform; weigh that against governance benefits.

Governance checklist (practical)

- Use Snowflake roles to limit who can read embeddings and search services.

- Store audit logs and review LOGIN_HISTORY, QUERY_HISTORY, and AI usage metrics.

- Encrypt or tokenize PII before embedding if embeddings might leak sensitive info.

- Version embedding models in metadata (record model name & embedding parameters in a table).

- Cost guardrails: tag warehouses used for Cortex and set budgets/alerts.
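The model-versioning item can be sketched as a small record plus a parameterized insert. The `public.embedding_versions` table and every field name here are hypothetical; adapt them to whatever metadata table you create.

```python
from dataclasses import dataclass, astuple
from datetime import datetime, timezone

@dataclass(frozen=True)
class EmbeddingVersion:
    table_name: str
    column_name: str
    model_name: str
    dimension: int
    recorded_at: str

# Hypothetical metadata table; placeholders use the connector's %s binding style.
INSERT_SQL = (
    "INSERT INTO public.embedding_versions "
    "(table_name, column_name, model_name, dimension, recorded_at) "
    "VALUES (%s, %s, %s, %s, %s)"
)

def make_record(table_name, column_name, model_name, dimension, now=None):
    """Build a version record, stamping it with the current UTC time by default."""
    now = now or datetime.now(timezone.utc)
    return EmbeddingVersion(table_name, column_name, model_name, dimension, now.isoformat())

def needs_reembedding(record, current_model, current_dimension):
    """True when stored vectors were produced by a different model or dimension."""
    return record.model_name != current_model or record.dimension != current_dimension

record = make_record("support_tickets", "embedding", "e5-base-v2", 768)
print(INSERT_SQL, astuple(record))
```

With a cursor in hand, `cur.execute(INSERT_SQL, astuple(record))` would persist the row; comparing stored records against the configured model flags tables whose vectors are stale after a model change.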

Operational tips & gotchas

- Chunking: split long docs — chunk size affects retrieval quality. Aim for 200–512 tokens per chunk for many models.

- Re-indexing & target_lag: set TARGET_LAG to balance freshness vs cost; large initial builds are compute-heavy.

- Model choice & costs: AI_EMBED/EMBED_TEXT_* and AI_COMPLETE calls consume credits/tokens. Track usage carefully.

- Testing: validate SEARCH_PREVIEW results before wiring into production; it’s designed for quick checks.
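For the testing tip, a small hit-rate check makes "validate results" measurable. This is a sketch: `search_fn` is a hypothetical callable that would wrap your SEARCH_PREVIEW query and return ticket IDs in ranked order, and the fake implementation below exists only so the example runs without a Snowflake connection.

```python
def hit_rate_at_k(test_cases, search_fn, k=3):
    """Fraction of queries whose expected ticket ID appears in the top-k results."""
    if not test_cases:
        return 0.0
    hits = 0
    for query, expected_id in test_cases:
        returned_ids = search_fn(query, k)
        if expected_id in returned_ids[:k]:
            hits += 1
    return hits / len(test_cases)

# Stand-in for a real search call; maps queries to canned ranked ticket IDs.
def fake_search(query, k):
    canned = {
        "change billing address": [3, 1, 4],
        "refund duplicate charge": [4, 1, 3],
        "enable 2fa": [2, 1, 3],  # deliberately misses the expected ticket 5
    }
    return canned.get(query, [])[:k]

cases = [
    ("change billing address", 3),
    ("refund duplicate charge", 4),
    ("enable 2fa", 5),
]
print(hit_rate_at_k(cases, fake_search, k=3))
```

Tracking this number across embedding-model or chunk-size changes gives an early signal of retrieval regressions.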

Example project ideas where Cortex fits well

- Internal knowledge base + secure enterprise chat (RAG) where documents already live in Snowflake.

- Customer support summarization + ticket routing using similarity search across historical tickets.

- Semantic enrichment of product catalogs for recommendation and search inside an ecommerce analytics pipeline.

Conclusion

Snowflake Cortex changes MLOps by moving embedding generation, vector indexing, semantic search, and even LLM completions into the warehouse. That reduces operational overhead and tightens governance — but you should still evaluate latency, scale, and vendor-lock trade-offs for your use case. A small POC (like the demo above) that measures latency, cost, and quality is the fastest way to decide if Cortex fits your workload.
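To measure the latency side of such a POC, a simple timing harness is enough. This sketch uses nearest-rank percentiles and times any zero-argument callable; in practice the callable would run your search or completion query, while the stand-in workload here is an assumption so the example runs anywhere.

```python
import math
import time

def percentile(sorted_samples, pct):
    """Nearest-rank percentile of an ascending list (pct is an integer from 1 to 100)."""
    rank = max(1, math.ceil(pct * len(sorted_samples) / 100))
    return sorted_samples[rank - 1]

def measure_latency(fn, runs=20):
    """Call fn repeatedly and report p50/p95/max wall-clock latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {
        "p50_ms": percentile(samples, 50),
        "p95_ms": percentile(samples, 95),
        "max_ms": samples[-1],
    }

# Stand-in workload; replace with a function that executes your query.
stats = measure_latency(lambda: sum(range(1000)), runs=20)
print(stats)
```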

Quick resources & tutorials

- CREATE CORTEX SEARCH SERVICE reference (Snowflake Documentation).

- Vector embeddings & functions (EMBED_TEXT_*, AI_EMBED) (Snowflake Documentation).

- SEARCH_PREVIEW and querying Cortex (Snowflake Documentation).

- AI_COMPLETE / AISQL functions for RAG (Snowflake Documentation).

- Step-by-step Cortex Search tutorials (Snowflake Documentation).

Hashtags:

#Snowflake #Cortex #MLOps #RAG #Embeddings #VectorSearch #DataEngineering #AIinDW #DataOps #AIInfrastructure
