Apache Samza

Processing data in real time has become a core capability for many organizations. Apache Samza is a distributed stream processing framework designed for high-volume, stateful, mission-critical streaming applications. Originally developed at LinkedIn to handle its real-time data needs, Samza has since become an open-source Apache project.

Apache Samza emerged from LinkedIn’s need to process billions of events daily with high reliability. The framework was designed to overcome the limitations of existing solutions, particularly around state management, fault tolerance, and integration with messaging systems. After its initial internal success, LinkedIn contributed Samza to the Apache Software Foundation, where it became a top-level project in 2015.

The framework was built with a clear philosophy: stream processing should be simple, scalable, and stateful while providing strong guarantees around data consistency and fault tolerance. These principles continue to guide Samza’s development today.

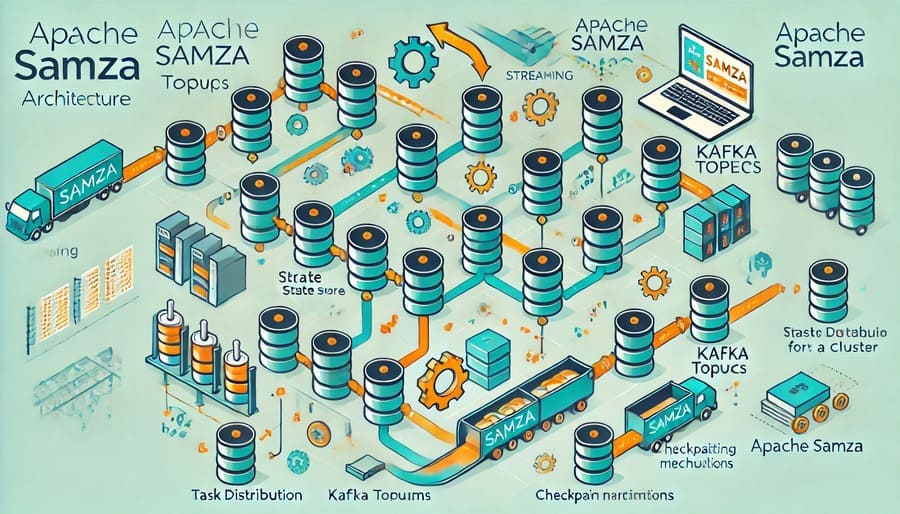

One of Samza’s defining characteristics is its deep integration with Apache Kafka. While Samza can work with other messaging systems, its architecture was optimized from the beginning to leverage Kafka’s unique capabilities:

- Samza tracks Kafka offsets per partition as the basis for its delivery guarantees (at-least-once by default)

- It leverages Kafka’s partitioning model for parallelism

- The framework uses Kafka for fault-tolerant checkpointing

- Samza’s processing model aligns perfectly with Kafka’s stream abstractions

This symbiotic relationship creates a particularly powerful combination for organizations already invested in Kafka-based architectures.
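The partitioning point above can be illustrated with a small sketch. It assumes that messages are mapped to partitions by hashing their key and that each input partition is handled by one task. The class and method names are hypothetical, the hash is a simple stand-in rather than Kafka's own partitioner, and the round-robin placement is an assumption for illustration, not necessarily how Samza groups tasks.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative only: hypothetical names, not Samza or Kafka classes. */
final class PartitionAssignmentSketch {

    /** Maps a key to a partition using a non-negative hash modulo. */
    static int partitionFor(String key, int partitionCount) {
        return Math.floorMod(key.hashCode(), partitionCount);
    }

    /** Spreads task ids (one per partition) across containers round-robin. */
    static List<List<Integer>> assignTasks(int partitionCount, int containerCount) {
        List<List<Integer>> containers = new ArrayList<>();
        for (int c = 0; c < containerCount; c++) {
            containers.add(new ArrayList<>());
        }
        for (int p = 0; p < partitionCount; p++) {
            containers.get(p % containerCount).add(p);
        }
        return containers;
    }
}
```

With 8 partitions and 3 containers, `assignTasks(8, 3)` yields `[[0, 3, 6], [1, 4, 7], [2, 5]]`. Because the same key always hashes to the same partition, all messages for one key reach the same task, which is what makes per-key local state workable.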

Unlike many early streaming systems that treated state as an afterthought, Samza was built from the ground up with sophisticated state management:

- Local State Stores: Each processor maintains its own persistent, high-performance state

- Fault-Tolerant State: Automatic checkpointing ensures state can be recovered after failures

- State Migration: When a task moves to a new processor, its state is rebuilt there automatically from its replicated changelog stream

- Incremental Checkpointing: Only changes are persisted, improving efficiency

This robust approach to state enables complex stream processing use cases that would be challenging to implement in stateless systems.
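To make the idea of a recoverable local store concrete, here is a minimal sketch of a key-value store that logs every write and can be rebuilt by replaying that log. It is a conceptual illustration under simplifying assumptions (an in-memory list stands in for a durable, replicated log), and `ChangelogStoreSketch` is a hypothetical name, not one of Samza's storage classes.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Illustrative only: hypothetical names, not Samza's storage classes. */
final class ChangelogStoreSketch {

    /** One logged write; a null value marks a delete. */
    static final class Change {
        final String key;
        final Integer value;

        Change(String key, Integer value) {
            this.key = key;
            this.value = value;
        }
    }

    private final Map<String, Integer> local = new HashMap<>();
    private final List<Change> changelog; // stands in for a durable, replicated log

    /** Opens a store over a changelog and replays existing entries into local state. */
    ChangelogStoreSketch(List<Change> changelog) {
        this.changelog = changelog;
        for (Change change : changelog) {
            apply(change);
        }
    }

    void put(String key, Integer value) {
        Change change = new Change(key, value);
        apply(change);         // fast local write
        changelog.add(change); // only the change is logged, not the whole store
    }

    void delete(String key) {
        put(key, null);
    }

    Integer get(String key) {
        return local.get(key);
    }

    private void apply(Change change) {
        if (change.value == null) {
            local.remove(change.key);
        } else {
            local.put(change.key, change.value);
        }
    }
}
```

Constructing a second `ChangelogStoreSketch` over the same log reproduces the first store's contents, which mirrors how a task restarted on another machine could recover its state.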

Samza offers multiple deployment options to fit various operational environments:

- YARN Integration: Native support for Apache YARN cluster management

- Standalone Mode: Simple deployment without external cluster managers

- SamzaContainer: Lightweight execution environment for flexibility

- Samza on Kubernetes: Modern containerized deployment (in newer versions)

This flexibility allows organizations to integrate Samza into their existing infrastructure with minimal disruption.

Samza’s processing architecture is designed for horizontal scalability:

- Partitioned Processing: Workloads are automatically distributed across available processors

- Independent Scaling: Input, processing, and state can be scaled independently

- Dynamic Task Assignment: Tasks are redistributed automatically as resources change

- Per-Job Coordination: Each job runs its own coordinator, so no cluster-wide master becomes a bottleneck in large-scale deployments

These characteristics enable Samza applications to scale from modest beginnings to processing millions of events per second.

For applications where data correctness is paramount, Samza provides strong processing guarantees:

- Coordinates with Kafka to track message offsets precisely

- Implements transactional state updates

- Ensures consistent processing despite failures

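- Prevents data loss through at-least-once delivery; keeping replayed messages from producing duplicate results relies on idempotent or offset-aware state updates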

This capability is essential for financial, compliance, and other sensitive data processing scenarios.
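One way to keep replayed messages from being counted twice is to store the last applied offset together with the state it produced. The sketch below assumes a single input partition with strictly increasing offsets. `ReplaySafeCounterSketch` and its methods are hypothetical names for illustration, not part of Samza's API.

```java
import java.util.HashMap;
import java.util.Map;

/** Illustrative only: offset-aware counting for one partition. */
final class ReplaySafeCounterSketch {

    private final Map<String, Integer> counts = new HashMap<>();
    private long lastAppliedOffset = -1; // kept alongside the counts it reflects

    /** Applies a message unless its offset was already applied; returns true if applied. */
    boolean process(long offset, String key) {
        if (offset <= lastAppliedOffset) {
            return false; // replayed after a restart: already reflected in the counts
        }
        counts.merge(key, 1, Integer::sum);
        lastAppliedOffset = offset;
        return true;
    }

    int count(String key) {
        return counts.getOrDefault(key, 0);
    }

    long lastAppliedOffset() {
        return lastAppliedOffset;
    }
}
```

If offsets 0 to 2 are processed, then 1 and 2 are redelivered after a restart, and then offset 3 arrives, the count for the key ends at 4 rather than 6. The approach only works if the offset and the counts are persisted atomically, which this in-memory sketch does not attempt to show.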

Samza applications are designed to continue operating through various failure conditions:

- Automatic task redistribution when containers fail

- Seamless local state recovery from checkpoints

- Graceful handling of network partitions

- Resilience to processor and node failures

These features minimize downtime and ensure continuous processing in production environments.

While RocksDB is the default state store, Samza supports multiple storage engines:

- Key-Value Stores: For simple lookup operations

- Databases: For more complex data structures

- Custom Implementations: For specialized requirements

- In-Memory Options: For maximum performance

This flexibility allows developers to choose the right storage technology for their specific use case.

Samza provides sophisticated windowing abstractions for time-based processing:

- Tumbling Windows: Fixed-size, non-overlapping time intervals

- Sliding Windows: Overlapping time periods that advance gradually

- Session Windows: Activity-based grouping that adapts to usage patterns

- Custom Windows: Flexible implementations for specialized requirements

These capabilities enable complex analytics on streaming data, including detection of patterns over time.
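The window types listed above differ mainly in how a timestamp is assigned to a window. The following sketch shows that assignment logic in plain Java, assuming millisecond timestamps and half-open windows `[start, start + size)`. The class and method names are hypothetical, and this is a conceptual illustration rather than Samza's operator implementation.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative only: window assignment arithmetic, not Samza's window operators. */
final class WindowAssignmentSketch {

    /** Start of the single tumbling window that contains the timestamp. */
    static long tumblingWindowStart(long timestampMs, long sizeMs) {
        return timestampMs - Math.floorMod(timestampMs, sizeMs);
    }

    /** Starts of every sliding window that contains the timestamp, in ascending order. */
    static List<Long> slidingWindowStarts(long timestampMs, long sizeMs, long slideMs) {
        List<Long> starts = new ArrayList<>();
        long latestStart = timestampMs - Math.floorMod(timestampMs, slideMs);
        for (long start = latestStart; start > timestampMs - sizeMs; start -= slideMs) {
            starts.add(0, start);
        }
        return starts;
    }

    /** Groups sorted timestamps into sessions separated by more than gapMs of inactivity. */
    static List<List<Long>> sessions(List<Long> sortedTimestampsMs, long gapMs) {
        List<List<Long>> result = new ArrayList<>();
        List<Long> current = new ArrayList<>();
        for (long ts : sortedTimestampsMs) {
            if (!current.isEmpty() && ts - current.get(current.size() - 1) > gapMs) {
                result.add(current);
                current = new ArrayList<>();
            }
            current.add(ts);
        }
        if (!current.isEmpty()) {
            result.add(current);
        }
        return result;
    }
}
```

For example, a timestamp of 12,500 ms falls into the tumbling window starting at 10,000 ms for a 10-second size, and into the sliding windows starting at 5,000 ms and 10,000 ms for a 10-second size with a 5-second slide.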

Samza offers multiple programming models to suit different developer preferences:

- Low-Level Task API: For maximum control and customization

- High-Level Streams API: For simpler, declarative stream processing

- SQL API: For accessibility to non-programmers via standard SQL

- Samza Beam API: For Apache Beam integration and portability

This range of options makes Samza accessible to developers with varying backgrounds and requirements.

At LinkedIn, Samza powers numerous critical streaming applications:

- Real-time analytics for site interactions

- News feed processing and personalization

- Metrics monitoring and anomaly detection

- Security and fraud detection systems

These applications process billions of events daily with high reliability, showcasing Samza’s enterprise-grade capabilities.

Retailers leverage Samza for real-time customer experiences:

- Dynamic pricing based on demand and inventory

- Personalized product recommendations

- Inventory management and stock alerts

- Fraud detection for transactions

These use cases demonstrate Samza’s ability to process high-volume streams while maintaining state for business logic.

Banks and financial institutions use Samza for time-sensitive processing:

- Transaction monitoring and risk assessment

- Real-time portfolio valuation

- Market data processing and alerting

- Compliance and regulatory reporting

Samza’s strong consistency guarantees make it particularly suitable for financial applications.

Organizations processing sensor and device data benefit from Samza’s scalability:

- Industrial equipment monitoring

- Connected vehicle telemetry analysis

- Smart city infrastructure management

- Energy grid optimization

These applications showcase Samza’s ability to handle high-throughput, bursty data streams from distributed sources.

Compared to Spark Streaming, Samza offers:

- Lower latency for real-time requirements

- More robust stateful processing

- Lighter resource footprint

- Better integration with Kafka

Spark Streaming may be preferred when integration with the broader Spark ecosystem is important or when combining batch and stream processing.

In comparison with Flink, Samza provides:

- Tighter Kafka integration

- Simpler deployment and operations

- More straightforward scaling model

- Potentially lower learning curve

Flink may be advantageous for complex event processing, sophisticated windowing, or when true event-time processing is critical.

When comparing with Kafka Streams, Samza offers:

- More deployment flexibility

- Better scalability for larger workloads

- More mature stateful processing

- Broader ecosystem integration

Kafka Streams might be simpler for lightweight applications already using Kafka or for microservices architectures.

Setting up a simple Samza application involves several steps:

- Define your streaming job configuration

- Create input and output stream definitions

- Implement your processing logic

- Package your application

- Deploy to your execution environment

Here’s a basic example of a Samza job configuration:

```properties
# Samza job configuration is a Java properties file with flat, dotted keys.

# Job configuration
job.factory.class=org.apache.samza.job.yarn.YarnJobFactory
job.name=my-first-samza-job

# Task configuration
task.class=com.example.samza.MyStreamProcessor
task.inputs=kafka.input-topic
task.window.ms=10000

# Serialization
serializers.registry.json.class=org.apache.samza.serializers.JsonSerdeFactory
serializers.registry.string.class=org.apache.samza.serializers.StringSerdeFactory

# Kafka system configuration
systems.kafka.samza.factory=org.apache.samza.system.kafka.KafkaSystemFactory
systems.kafka.consumer.zookeeper.connect=localhost:2181
systems.kafka.producer.bootstrap.servers=localhost:9092
```

A basic Samza application might look like this:

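```java
import org.apache.samza.config.Config;
import org.apache.samza.storage.kv.KeyValueStore;
import org.apache.samza.system.IncomingMessageEnvelope;
import org.apache.samza.system.OutgoingMessageEnvelope;
import org.apache.samza.system.SystemStream;
import org.apache.samza.task.InitableTask;
import org.apache.samza.task.MessageCollector;
import org.apache.samza.task.StreamTask;
import org.apache.samza.task.TaskContext;
import org.apache.samza.task.TaskCoordinator;

// PageView and PageViewCount are application-defined message classes (not shown).
public class PageViewCounter implements StreamTask, InitableTask {
  private static final SystemStream OUTPUT = new SystemStream("kafka", "page-view-counts");

  private KeyValueStore<String, Integer> store;

  // init() comes from InitableTask. This is the pre-1.0 signature;
  // Samza 1.0+ passes a single Context argument instead.
  @Override
  @SuppressWarnings("unchecked")
  public void init(Config config, TaskContext context) {
    this.store = (KeyValueStore<String, Integer>) context.getStore("page-views");
  }

  @Override
  public void process(IncomingMessageEnvelope envelope,
                      MessageCollector collector,
                      TaskCoordinator coordinator) {
    PageView pageView = (PageView) envelope.getMessage();
    String pageId = pageView.getPageId();

    // Update count in state store (a missing key means this is the first view)
    Integer previous = store.get(pageId);
    int count = (previous == null ? 0 : previous) + 1;
    store.put(pageId, count);

    // Emit updated count, keyed by page ID
    collector.send(new OutgoingMessageEnvelope(OUTPUT, pageId, new PageViewCount(pageId, count)));
  }
}
```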

The high-level API provides a more declarative approach:

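```java
import java.time.Duration;
import java.util.Collections;

// Package names below follow Samza 1.x; verify them against the version you use.
import org.apache.samza.application.StreamApplication;
import org.apache.samza.application.descriptors.StreamApplicationDescriptor;
import org.apache.samza.operators.windows.Windows;
import org.apache.samza.serializers.IntegerSerde;
import org.apache.samza.serializers.JsonSerdeV2;
import org.apache.samza.serializers.StringSerde;
import org.apache.samza.system.kafka.descriptors.KafkaInputDescriptor;
import org.apache.samza.system.kafka.descriptors.KafkaOutputDescriptor;
import org.apache.samza.system.kafka.descriptors.KafkaSystemDescriptor;

// PageView and PageViewCount are application-defined message classes (not shown).
public class PageViewCounterApp implements StreamApplication {
  @Override
  public void describe(StreamApplicationDescriptor appDescriptor) {
    // Define the Kafka system and its input and output streams
    KafkaSystemDescriptor kafkaSystem = new KafkaSystemDescriptor("kafka")
        .withConsumerZkConnect(Collections.singletonList("localhost:2181"))
        .withProducerBootstrapServers(Collections.singletonList("localhost:9092"));

    KafkaInputDescriptor<PageView> inputDescriptor =
        kafkaSystem.getInputDescriptor("page-views", new JsonSerdeV2<>(PageView.class));
    KafkaOutputDescriptor<PageViewCount> outputDescriptor =
        kafkaSystem.getOutputDescriptor("page-view-counts", new JsonSerdeV2<>(PageViewCount.class));

    // Define the stream processing logic: count views per page within 10-minute sessions.
    // The window operator manages its own state, so no explicit store descriptor is needed.
    appDescriptor.getInputStream(inputDescriptor)
        .window(Windows.keyedSessionWindow(
            PageView::getPageId, Duration.ofMinutes(10),
            () -> 0, (pageView, count) -> count + 1,
            new StringSerde(), new IntegerSerde()), "page-view-session-window")
        .map(pane -> new PageViewCount(pane.getKey().getKey(), pane.getMessage()))
        .sendTo(appDescriptor.getOutputStream(outputDescriptor));
  }
}
```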

Effective operation of Samza applications requires comprehensive monitoring:

- JMX Metrics: Samza exposes detailed performance metrics via JMX

- Logging Framework: Configurable logging for troubleshooting

- Health Checks: Integration with monitoring systems

- Alerting: Detection of processing delays or failures

These capabilities enable operations teams to maintain reliable stream processing pipelines.

Optimizing Samza applications involves several considerations:

- Container Sizing: Balancing memory and CPU allocation

- Partition Assignment: Ensuring even distribution of work

- Checkpoint Frequency: Trading off recovery time against overhead

- State Store Configuration: Tuning for access patterns

Careful tuning can significantly improve throughput, latency, and resource utilization.
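The checkpoint frequency trade-off can be put into rough numbers. The sketch below assumes a steady input rate and that, after a failure, everything since the last checkpoint is reprocessed. The class and method names are hypothetical and the figures are back-of-the-envelope bounds, not measurements. In Samza the interval itself is typically controlled by the `task.commit.ms` setting.

```java
/** Illustrative only: rough arithmetic for choosing a checkpoint interval. */
final class CheckpointTradeoffSketch {

    /** Upper bound on messages reprocessed after a failure (everything since the last checkpoint). */
    static long maxReplayedMessages(long messagesPerSecond, long checkpointIntervalMs) {
        return messagesPerSecond * checkpointIntervalMs / 1000;
    }

    /** Number of checkpoints written per hour at the given interval. */
    static long checkpointsPerHour(long checkpointIntervalMs) {
        return 3_600_000L / checkpointIntervalMs;
    }
}
```

At 50,000 messages per second, a 60-second interval means up to 3,000,000 messages replayed after a failure but only 60 checkpoints per hour. Shortening the interval to 5 seconds cuts the replay bound to 250,000 messages at the cost of 720 checkpoints per hour.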

As processing needs grow, Samza applications can be scaled in multiple ways:

- Horizontal Scaling: Adding more containers

- Vertical Scaling: Increasing container resources

- Partition Scaling: Adjusting input partition count

- State Distribution: Optimizing state placement

These approaches can be combined to address specific scalability challenges.
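How these options interact can be seen with a little arithmetic. The sketch below assumes one task per input partition and that tasks are spread as evenly as possible across containers. The names are hypothetical and both arguments are assumed to be at least 1.

```java
/** Illustrative only: how partition count bounds the benefit of adding containers. */
final class ScalingSketch {

    /** Tasks on the most heavily loaded container when tasks are spread evenly. */
    static int maxTasksPerContainer(int taskCount, int containerCount) {
        int active = Math.min(taskCount, containerCount);
        return (taskCount + active - 1) / active; // ceiling division
    }

    /** Containers left without any task because there are fewer tasks than containers. */
    static int idleContainers(int taskCount, int containerCount) {
        return Math.max(0, containerCount - taskCount);
    }
}
```

With 8 input partitions, going from 2 to 4 to 8 containers reduces the load on the busiest container from 4 tasks to 2 to 1. A further increase to 16 containers leaves 8 of them idle, which is why horizontal scaling beyond that point also requires adjusting the input partition count.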

The Samza community continues to evolve the framework with several focus areas:

Recent developments include better integration with cloud environments:

- Improved Kubernetes deployment

- Cloud storage integration

- Auto-scaling capabilities

- Containerization enhancements

These features make Samza more accessible for cloud-based streaming architectures.

Like other modern frameworks, Samza is evolving toward a unified processing model:

- Consistent APIs across batch and streaming

- Improved support for bounded datasets

- Better integration with data lake technologies

- Optimization for both scenarios

This convergence simplifies development and operation of complex data pipelines.

The community is actively enhancing Samza’s interoperability:

- Additional connectors for data sources and sinks

- Better integration with modern data formats

- Support for more serialization frameworks

- Expanded language support beyond JVM

These improvements make Samza more accessible and versatile for diverse use cases.

Apache Samza offers a compelling combination of reliability, performance, and flexibility for distributed stream processing. Its unique architecture, designed specifically for stateful processing and tight integration with messaging systems, makes it particularly well-suited for mission-critical applications that require strong consistency guarantees and fault tolerance.

While newer streaming frameworks have emerged since Samza’s inception, its foundational design principles (local state co-located with processing, reliable checkpointing, and horizontal scalability) have influenced the wider stream processing ecosystem. For organizations building streaming data pipelines, especially those already using Kafka, Samza remains a proven option worth serious consideration.

As real-time data continues to grow in importance across industries, frameworks like Samza provide the essential infrastructure that enables organizations to transform raw event streams into actionable insights and timely business decisions.
