Apache Beam

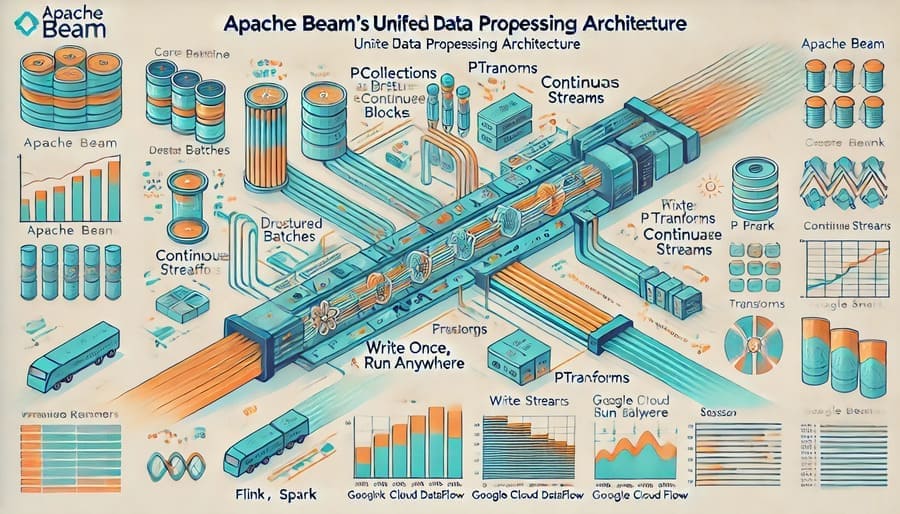

In data engineering, the divide between batch and streaming processing has traditionally forced organizations to maintain separate codebases, duplicate logic, and run separate infrastructure for each paradigm. Apache Beam is an open-source framework that addresses this with a unified programming model covering both batch and streaming workloads.

Apache Beam emerged from Google’s internal data processing systems, specifically the evolution of technologies like MapReduce, FlumeJava, and MillWheel. The name “Beam” is a blend of “Batch” and “strEAM,” reflecting its core purpose of unifying these previously distinct processing models.

Google donated its Cloud Dataflow SDKs to the Apache Software Foundation in 2016, where the project entered incubation as Apache Beam and graduated to a top-level project within about a year. Since then, Beam has evolved into a vendor-neutral framework used by organizations seeking simplicity and flexibility in their data pipelines.

The fundamental innovation of Apache Beam lies in its programming model, which separates what computation to perform from how and where it’s executed. This separation of concerns powers Beam’s transformative promise:

- Write your data processing logic once using a single, unified API

- Run it anywhere on various execution engines (called “runners”)

- Process both batch and streaming data with the same code

This approach dramatically reduces complexity, eliminates code duplication, and provides remarkable flexibility for organizations with diverse data processing needs.

Apache Beam introduces four key abstractions that form the foundation of its unified approach:

PCollection represents a potentially distributed, multi-element dataset that serves as both the input and output of Beam transformations. These collections can be:

- Bounded: Representing finite data (traditional batch processing)

- Unbounded: Representing infinite data (streaming)

The beauty of Beam’s model is that the same transformations work on both bounded and unbounded collections, allowing developers to write code once and apply it to either processing paradigm.
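
To make the bounded/unbounded idea concrete, here is a minimal plain-Python sketch (no Beam dependency; `extract_words` is a hypothetical helper, not part of any SDK). It shows one element-wise transform applied first to a finite list and then to an endless generator standing in for a stream:

```python
import itertools
from typing import Iterable, Iterator


def extract_words(lines: Iterable[str]) -> Iterator[str]:
    """Element-wise transform: emit lowercase words one at a time."""
    for line in lines:
        yield from line.lower().split()


# Bounded input: a finite list, as in batch processing.
bounded = ["To be or not", "to be"]
bounded_words = list(extract_words(bounded))

# Unbounded input: an endless generator, as in streaming.
unbounded = itertools.cycle(["tick tock"])
# The transform never waits for the end of its input, so results can be
# consumed incrementally; here we stop after the first four words.
first_four = list(itertools.islice(extract_words(unbounded), 4))
```

The transform itself does not know or care whether its input ever ends, which is the property Beam relies on for element-wise operations.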

PTransform represents the operations applied to data. Transforms take one or more PCollections as input and produce one or more PCollections as output. Beam provides a rich library of pre-built transforms while also allowing custom implementations:

- ParDo: Parallel processing similar to Map or FlatMap operations

- GroupByKey: Grouping the values of key/value data by key, usually as the step before an aggregation

- CoGroupByKey: Joining multiple collections

- Combine: Efficient aggregation of data

- Flatten: Merging multiple collections

- Partition: Splitting collections based on criteria

The composability of these transforms enables the creation of complex processing pipelines with clean, readable code.
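
The semantics of the core transforms can be sketched in a few lines of plain Python. This is only a model of what each transform does to in-memory lists, under the assumption that everything fits on one machine; the function names are illustrative and are not Beam's API:

```python
from collections import defaultdict


def par_do(elements, fn):
    """ParDo: apply fn to each element; fn may emit zero or more outputs."""
    return [out for element in elements for out in fn(element)]


def group_by_key(pairs):
    """GroupByKey: collect all values that share a key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def combine_per_key(pairs, combine_fn):
    """Combine: reduce the values of each key to a single result."""
    return {key: combine_fn(values) for key, values in group_by_key(pairs).items()}


def flatten(*collections):
    """Flatten: merge several collections into one."""
    return [element for collection in collections for element in collection]


def partition(elements, num_partitions, partition_fn):
    """Partition: route each element to exactly one output collection."""
    parts = [[] for _ in range(num_partitions)]
    for element in elements:
        parts[partition_fn(element)].append(element)
    return parts


# Composing the pieces into a small word count.
words = par_do(["a b", "b c b"], lambda line: line.split())
pairs = par_do(words, lambda word: [(word, 1)])
counts = combine_per_key(pairs, sum)

# Merge two collections, then split the result by word length.
short, long_ = partition(flatten(["hi"], ["hello", "yo"]), 2, lambda w: int(len(w) > 2))
```

In a real pipeline each of these steps runs in parallel across workers, but the input/output contract of each transform is the same as in this sketch.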

A Pipeline encapsulates the entire data processing workflow, connecting the data sources, transformations, and destinations. Pipelines represent the complete graph of all data and transformations in your program and serve as the container for executing the workflow.

The Runner translates the pipeline into the native API of the execution engine. This is where the magic of “write once, run anywhere” happens. The same pipeline can be executed on different runners:

- DirectRunner: Local execution for development and testing

- FlinkRunner: Execution on Apache Flink

- SparkRunner: Execution on Apache Spark

- DataflowRunner: Execution on Google Cloud Dataflow

- SamzaRunner: Execution on Apache Samza

- JetRunner: Execution on Hazelcast Jet

These abstractions together enable remarkable flexibility while maintaining a consistent developer experience.
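
The split between describing a pipeline and executing it can be illustrated with a toy model. The sketch below is plain Python, and the `Pipeline`, `EagerRunner`, and `ElementAtATimeRunner` classes are hypothetical stand-ins rather than Beam classes. The pipeline only records steps; each runner decides how to execute them:

```python
class Pipeline:
    """Records what to compute as an ordered list of named steps."""

    def __init__(self):
        self.steps = []

    def apply(self, name, fn):
        # fn maps one element to a list of output elements.
        self.steps.append((name, fn))
        return self  # allow chaining


class EagerRunner:
    """Runs each step over the whole dataset before starting the next."""

    def run(self, pipeline, source):
        data = list(source)
        for _name, fn in pipeline.steps:
            data = [out for element in data for out in fn(element)]
        return data


class ElementAtATimeRunner:
    """Pushes each element through all steps before reading the next one."""

    def run(self, pipeline, source):
        results = []
        for element in source:
            batch = [element]
            for _name, fn in pipeline.steps:
                batch = [out for item in batch for out in fn(item)]
            results.extend(batch)
        return results


pipeline = (
    Pipeline()
    .apply("Split", lambda line: line.split())
    .apply("Upper", lambda word: [word.upper()])
)
lines = ["write once", "run anywhere"]
eager = EagerRunner().run(pipeline, lines)
streaming = ElementAtATimeRunner().run(pipeline, lines)
```

Both runners produce the same result from the same pipeline description, even though their execution strategies differ. Real runners additionally handle distribution, fault tolerance, and state, which this model leaves out.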

One of Beam’s most sophisticated capabilities is its approach to handling time in data processing. This becomes especially important when dealing with unbounded data streams where operations like aggregations need well-defined boundaries.

Windowing divides a PCollection into finite groups of elements based on timestamps. Beam offers several windowing strategies:

- Fixed Time Windows: Processing data in consistent time intervals (e.g., hourly windows)

- Sliding Time Windows: Overlapping windows (e.g., 5-minute windows every minute)

- Session Windows: Dynamic windows based on activity periods, ideal for user session analysis

- Global Windows: A single window containing all elements in the collection

- Custom Windows: Specialized implementations for unique requirements
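
How an element ends up in a window is mostly timestamp arithmetic. The following plain-Python sketch models fixed, sliding, and session window assignment, assuming integer timestamps in seconds and half-open `[start, end)` windows; the function names are illustrative, not Beam's API:

```python
def fixed_window(timestamp, size):
    """Return the single [start, end) window of length `size` containing timestamp."""
    start = timestamp - timestamp % size
    return (start, start + size)


def sliding_windows(timestamp, size, period):
    """Return every window of length `size`, starting every `period`, containing timestamp."""
    start = timestamp - timestamp % period  # latest window start at or before timestamp
    windows = []
    while start > timestamp - size:
        windows.append((start, start + size))
        start -= period
    return sorted(windows)


def session_windows(timestamps, gap):
    """Merge timestamps into sessions that end after `gap` seconds of inactivity."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts < sessions[-1][1]:
            # Element falls inside the current session: extend it.
            sessions[-1] = (sessions[-1][0], ts + gap)
        else:
            sessions.append((ts, ts + gap))
    return sessions


hourly = fixed_window(125, 60)
five_minute_every_minute = sliding_windows(125, 300, 60)
sessions = session_windows([0, 5, 8, 30, 33], gap=10)
```

Note that with sliding windows one element belongs to `size / period` windows at once (five in this example), which is why sliding windows multiply the amount of data downstream aggregations have to handle.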

While windows define how data is grouped, triggers determine when the results of window computations are emitted:

- Event-time Triggers: Based on the progress of event time in the data

- Processing-time Triggers: Based on the passage of time in the processing system

- Data-driven Triggers: Based on the observed data itself

- Composite Triggers: Combinations of other triggers for complex scenarios
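
As a rough illustration of the difference between trigger types, the plain-Python sketch below models one data-driven trigger and one event-time trigger for a single window. The event format (`("element", value)` and `("watermark", time)` tuples) and both function names are assumptions made for this example, not Beam's API:

```python
def fire_after_count(elements, count):
    """Data-driven trigger: emit a pane each time `count` new elements arrive.

    Returns the emitted panes and the elements still buffered.
    """
    panes, buffer = [], []
    for element in elements:
        buffer.append(element)
        if len(buffer) == count:
            panes.append(buffer)
            buffer = []  # discard fired elements; keep only new ones
    return panes, buffer


def fire_at_watermark(events, window_end):
    """Event-time trigger: emit one pane once the watermark reaches window_end.

    `events` lists ("element", value) and ("watermark", time) tuples in
    arrival order. Returns None if the window has not fired yet.
    """
    buffer = []
    for kind, value in events:
        if kind == "element":
            buffer.append(value)
        elif kind == "watermark" and value >= window_end:
            return buffer
    return None


panes, pending = fire_after_count([1, 2, 3, 4, 5], count=2)
fired = fire_at_watermark(
    [("element", "a"), ("watermark", 50), ("element", "b"),
     ("watermark", 60), ("element", "c")],
    window_end=60,
)
not_fired = fire_at_watermark([("element", "a"), ("watermark", 10)], window_end=60)
```

The count trigger produces early, partial results without waiting for the window to close, while the watermark trigger waits until the input for the window is believed to be complete.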

Beam provides sophisticated mechanisms for handling late-arriving data:

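- Watermarks: The system’s estimate of how far event time has progressed in an unbounded source, used to decide when a window’s input is likely complete

- Allowed Lateness: Specifying how long after the watermark passes the end of a window its data can still arrive and be processed

- Late Data Handling: Directing what happens to data that arrives beyond the allowed lateness (by default, it is dropped)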

These capabilities enable accurate results even in the face of data that arrives out of order or is delayed—a common challenge in distributed systems.
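
The interplay of watermark and allowed lateness can be reduced to a small decision rule. The sketch below is a simplified plain-Python model (the `classify` function and its labels are hypothetical, and real runners track this per key and window):

```python
def classify(event_time, window_end, watermark, allowed_lateness):
    """Decide what happens to an element of the window ending at window_end.

    All arguments are in the same time unit (for example seconds).
    """
    if event_time >= window_end:
        raise ValueError("element does not belong to this window")
    if watermark < window_end:
        return "on_time"  # the window is still open
    if watermark < window_end + allowed_lateness:
        return "late_accepted"  # window closed, but lateness budget remains
    return "dropped"  # window state has been discarded


on_time = classify(event_time=55, window_end=60, watermark=58, allowed_lateness=30)
late = classify(event_time=55, window_end=60, watermark=75, allowed_lateness=30)
dropped = classify(event_time=55, window_end=60, watermark=95, allowed_lateness=30)
```

A larger allowed lateness keeps results more accurate at the cost of holding window state longer, so the value is a trade-off between correctness and resource use.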

The unified model of Apache Beam unlocks numerous applications across industries:

Online retailers leverage Beam for:

- Real-time inventory management

- Customer behavior analysis

- Dynamic pricing optimization

- Personalized recommendation engines

- Fraud detection systems

The ability to combine historical analysis with real-time signals creates powerful hybrid use cases.

Banks and financial institutions use Beam for:

- Transaction monitoring and analysis

- Risk assessment and compliance reporting

- Real-time fraud detection

- Market data processing

- Customer sentiment analysis

Beam’s processing guarantees, including exactly-once processing on runners and connectors that support it, make it particularly valuable for financial applications.

Telecom providers implement Beam for:

- Network traffic analysis

- Predictive maintenance

- Customer experience monitoring

- Service quality optimization

- Usage pattern analysis

The ability to process high-volume streaming data while incorporating historical context is especially valuable in telecom scenarios.

Industrial applications leverage Beam for:

- Sensor data processing and analysis

- Equipment monitoring and maintenance

- Quality control systems

- Supply chain optimization

- Energy consumption analysis

The combination of real-time alerting with long-term analytics provides comprehensive operational intelligence.

Creating a simple Beam pipeline involves a few basic steps:

- Create a Pipeline: Establish the execution context

- Read Data: Connect to input sources

- Apply Transforms: Process the data

- Write Results: Output to destination systems

- Run the Pipeline: Execute on your chosen runner

Here’s a simple word count example in Java:

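```java
import org.apache.beam.sdk.Pipeline;
import org.apache.beam.sdk.io.TextIO;
import org.apache.beam.sdk.options.PipelineOptionsFactory;
import org.apache.beam.sdk.transforms.Count;
import org.apache.beam.sdk.transforms.DoFn;
import org.apache.beam.sdk.transforms.MapElements;
import org.apache.beam.sdk.transforms.ParDo;
import org.apache.beam.sdk.values.KV;
import org.apache.beam.sdk.values.TypeDescriptors;

public class WordCount {
  public static void main(String[] args) {
    // Create the pipeline; the runner is selected through command-line options
    Pipeline pipeline = Pipeline.create(
        PipelineOptionsFactory.fromArgs(args).withValidation().create());

    // Read input, process it, and write output
    pipeline
        .apply("ReadLines", TextIO.read().from("gs://my-bucket/input.txt"))
        .apply("SplitWords", ParDo.of(new DoFn<String, String>() {
          @ProcessElement
          public void processElement(@Element String line, OutputReceiver<String> out) {
            // Split on runs of non-word characters and skip empty tokens
            for (String word : line.split("\\W+")) {
              if (!word.isEmpty()) {
                out.output(word.toLowerCase());
              }
            }
          }
        }))
        .apply("CountWords", Count.perElement())
        .apply("FormatResults", MapElements.into(TypeDescriptors.strings())
            .via((KV<String, Long> kv) -> kv.getKey() + ": " + kv.getValue()))
        .apply("WriteResults", TextIO.write().to("gs://my-bucket/output"));

    // Run the pipeline and block until it completes
    pipeline.run().waitUntilFinish();
  }
}
```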

And here’s the equivalent in Python:

```python
import apache_beam as beam
from apache_beam.options.pipeline_options import PipelineOptions

with beam.Pipeline(options=PipelineOptions()) as pipeline:
    (pipeline
     | 'ReadLines' >> beam.io.ReadFromText('gs://my-bucket/input.txt')
     | 'SplitWords' >> beam.FlatMap(lambda line: line.lower().split())
     | 'CountWords' >> beam.combiners.Count.PerElement()
     | 'FormatResults' >> beam.Map(lambda word_count: f'{word_count[0]}: {word_count[1]}')
     | 'WriteResults' >> beam.io.WriteToText('gs://my-bucket/output')
    )
```

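Adapting the above example for streaming takes only a few changes. For a streaming word count from Kafka, you replace the input source and add a windowing step, because an aggregation such as a count needs finite groups of elements to work on when the input is unbounded:

```java
pipeline
    .apply("ReadFromKafka", KafkaIO.<Long, String>read()
        .withBootstrapServers("kafka-server:9092")
        .withTopic("input-topic")
        .withKeyDeserializer(LongDeserializer.class)
        .withValueDeserializer(StringDeserializer.class)
        .withoutMetadata())
    .apply("ExtractValues", Values.<String>create())
    // Unbounded input must be windowed before Count can aggregate it
    .apply("WindowIntoMinutes",
        Window.<String>into(FixedWindows.of(Duration.standardMinutes(1))))
    // SplitWords, CountWords, and FormatResults remain identical.
    // A file sink on unbounded input also needs windowed writes, e.g.
    // TextIO.write().to(...).withWindowedWrites().withNumShards(1)
```

The core processing logic (splitting, counting, and formatting) remains unchanged between the batch and streaming scenarios; only the source, the windowing, and the sink configuration differ.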
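
One detail worth keeping in mind with unbounded input is that a count only has a final value once the data is divided into windows. The plain-Python sketch below (a model, not Beam code; `windowed_word_count` and the sample events are made up for illustration) counts words per fixed one-minute window over timestamped lines:

```python
from collections import Counter, defaultdict


def windowed_word_count(events, window_size):
    """Count words per fixed window.

    `events` is an iterable of (timestamp_seconds, line) pairs; the result
    maps each window start to the word counts observed in that window.
    """
    counts = defaultdict(Counter)
    for timestamp, line in events:
        window_start = timestamp - timestamp % window_size
        counts[window_start].update(line.lower().split())
    return {start: dict(counter) for start, counter in counts.items()}


events = [(3, "beam beam"), (42, "flink"), (61, "Beam spark"), (75, "spark")]
result = windowed_word_count(events, window_size=60)
```

Each window yields its own independent set of counts, which is what lets a streaming pipeline emit results continuously instead of waiting for an end of input that never comes.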

Beam’s value extends beyond its programming model through rich integrations with the broader data ecosystem:

Beam provides numerous built-in connectors for data sources and sinks:

- File-based: TextIO, AvroIO, ParquetIO, TFRecordIO

- Databases: JdbcIO, MongoDbIO, RedisIO, CassandraIO

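- Messaging Systems: KafkaIO, PubsubIO, JmsIO, AmqpIO

- Cloud Storage: Amazon S3, Google Cloud Storage, and Azure Blob Storage, accessed through filesystem implementations that the file-based connectors use

- Big Data Systems: HadoopFormatIO, SpannerIO, BigQueryIO, HBaseIO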

While initially focused on Java, Beam now supports multiple programming languages:

- Java: The original SDK with the most comprehensive feature support

- Python: Nearly feature-complete with growing adoption

- Go: Relatively newer but rapidly maturing

- SQL: For expressing pipelines through SQL queries

Beam offers growing support for machine learning workflows:

- TensorFlow: TFX (TensorFlow Extended) runs its data processing components on Beam

- PyTorch: Model inference through the Python SDK’s RunInference transform

- Scikit-learn: Model inference through RunInference in the Python SDK

- MLIR: Integration with Multi-Level Intermediate Representation

Optimizing Beam pipelines involves several considerations:

- Fusion: Combining multiple operations to reduce communication overhead

- Parallelism: Tuning the level of concurrency in pipeline execution

- Data Locality: Minimizing data movement across the network

- Resource Allocation: Balancing CPU, memory, and network resources

- Combiner Lifting: Using partial aggregation to reduce shuffled data volume
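
Combiner lifting is the easiest of these to show in miniature. In the plain-Python sketch below, each inner list stands for the data held by one worker (a "bundle"), and the second element of each return value counts how many records would cross the network. The function names and sample data are illustrative assumptions:

```python
from collections import Counter


def shuffle_without_lifting(bundles):
    """Every (word, 1) pair is shuffled before being summed."""
    shuffled = [(word, 1) for bundle in bundles for word in bundle]
    totals = Counter()
    for word, one in shuffled:
        totals[word] += one
    return dict(totals), len(shuffled)


def shuffle_with_lifting(bundles):
    """Each bundle pre-aggregates locally; only partial sums are shuffled."""
    partials = [Counter(bundle) for bundle in bundles]
    shuffled = [(word, n) for partial in partials for word, n in partial.items()]
    totals = Counter()
    for word, n in shuffled:
        totals[word] += n
    return dict(totals), len(shuffled)


bundles = [["a", "a", "b", "a"], ["b", "b", "a"]]
plain_totals, plain_records = shuffle_without_lifting(bundles)
lifted_totals, lifted_records = shuffle_with_lifting(bundles)
```

Both versions compute the same totals, but the lifted version shuffles four records instead of seven. This only works when the aggregation is associative and commutative, which is why expressing aggregations as Combine operations, rather than as GroupByKey followed by custom logic, gives the runner room to optimize.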

Effective operation of Beam pipelines requires comprehensive monitoring:

- Metrics Collection: Tracking custom and system metrics

- Visualization: Using runner-specific UIs for pipeline execution

- Logging: Capturing detailed execution information

- Testing: Validating pipeline behavior with PAssert and the DirectRunner
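
Because the elements of a PCollection have no guaranteed order, pipeline tests compare outputs as unordered collections, which is the idea behind assertions such as PAssert’s `containsInAnyOrder`. A minimal plain-Python sketch of that comparison, assuming hashable and sortable elements (the helper name is hypothetical), looks like this:

```python
from collections import Counter


def assert_contains_in_any_order(actual, expected):
    """Fail unless both collections hold the same elements, ignoring order.

    Duplicates matter: ["a"] and ["a", "a"] are not considered equal.
    """
    if Counter(actual) != Counter(expected):
        raise AssertionError(f"expected {sorted(expected)}, got {sorted(actual)}")


# Passes: same elements, different order.
assert_contains_in_any_order([("b", 2), ("a", 1)], [("a", 1), ("b", 2)])
```

Comparing as a multiset rather than as a set keeps the test sensitive to accidentally duplicated or dropped elements.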

Apache Beam continues to evolve with several exciting developments on the horizon:

- Cross-language pipelines: Enabling pipelines that combine components written in different languages, allowing teams to leverage the best of each language ecosystem

- Machine learning: Deeper integration with ML frameworks for end-to-end machine learning pipelines, from data preparation to model training and serving

- Deployment: More streamlined deployment options, including portable runners that reduce the operational complexity of managing different execution environments

- Governance: Enhanced features for data quality, lineage tracking, and compliance to address growing regulatory requirements around data processing

While Beam offers compelling advantages, potential adopters should consider:

- Learning Curve: The abstraction model requires some initial investment to fully understand

- Runner Maturity: Some runners are more mature than others in terms of feature support

- Performance Tuning: Optimizing across different runners can require runner-specific knowledge

- Community Support: While growing rapidly, the community is still smaller than some older frameworks

Organizations should evaluate these factors against their specific requirements when considering Beam adoption.

Apache Beam represents a significant advancement in data processing technology by successfully unifying batch and streaming paradigms under a single programming model. This unification dramatically reduces the complexity of building and maintaining data pipelines that can smoothly adapt to changing business requirements.

The framework’s “write once, run anywhere” philosophy not only enhances developer productivity but also provides remarkable flexibility in deployment options. Organizations can develop their data processing logic once and then deploy it on the execution engine that best fits their specific needs—whether that’s Apache Flink, Apache Spark, Google Cloud Dataflow, or another supported runner.

As data processing continues to evolve toward more real-time, event-driven architectures while still requiring historical analysis capabilities, the value proposition of Apache Beam’s unified approach becomes increasingly compelling. For organizations seeking to streamline their data engineering practices and build more adaptable data processing systems, Apache Beam offers a powerful solution that can grow and evolve with changing requirements.

The framework’s active community and ongoing development suggest that it will keep advancing, making it a technology worth serious consideration for modern data engineering teams.
