Databricks

Organizations face growing challenges in building, scaling, and maintaining data pipelines and machine learning workflows. Databricks addresses this complexity with a unified analytics platform that integrates data engineering, data science, and business intelligence in one collaborative environment. Built on the foundation of Apache Spark but extending well beyond it, the platform has changed how many enterprises derive value from their data assets.

The Databricks story begins in the AMPLab at UC Berkeley, where Matei Zaharia started Apache Spark in 2009 as a faster and more flexible alternative to Hadoop MapReduce, working alongside researchers including Ali Ghodsi and Ion Stoica. After Spark was open-sourced in 2010, its adoption accelerated rapidly due to its performance advantages and developer-friendly APIs.

Recognizing the potential for Spark to transform enterprise data processing, the team founded Databricks in 2013 with a vision that extended beyond just providing commercial support for Spark. Their ambition was to build a comprehensive platform that would democratize data and AI, making advanced analytics accessible to organizations of all sizes while solving the fundamental challenges of data management at scale.

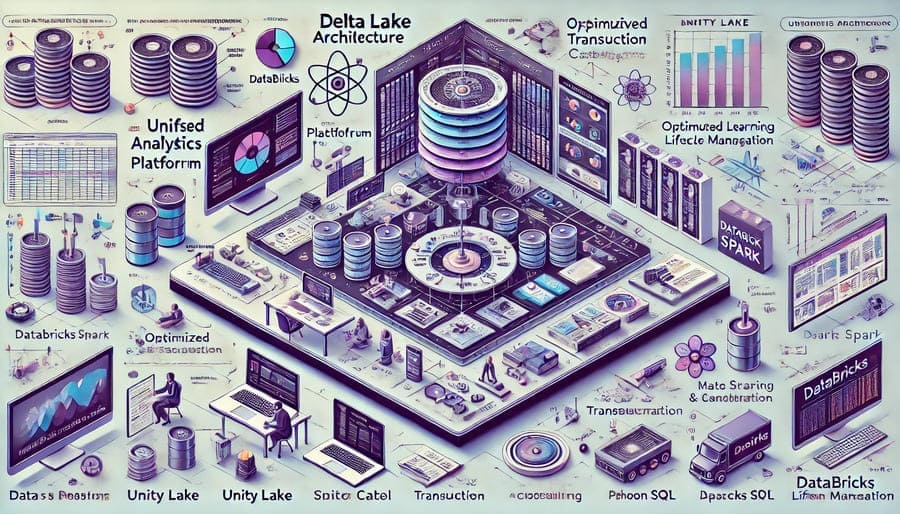

At the heart of Databricks lies the “lakehouse” architecture, a paradigm that combines the best elements of data lakes and data warehouses:

- The flexibility and scalability of data lakes: Store massive amounts of raw structured, semi-structured, and unstructured data in open formats

- The reliability and performance of data warehouses: Implement ACID transactions, schema enforcement, and optimized query performance

This hybrid approach eliminates the traditional divide between data lakes for storage and data warehouses for analytics, creating a unified platform where data engineers, data scientists, and business analysts can collaborate seamlessly.

Delta Lake, an open-source storage layer initially developed by Databricks, serves as the foundation of the lakehouse architecture:

- ACID Transactions: Ensures data consistency even with concurrent reads and writes

- Schema Enforcement and Evolution: Prevents data corruption while allowing controlled schema changes

- Time Travel: Access previous versions of data for auditing, rollbacks, or reproducibility

- Data Versioning: Track changes to datasets over time

- Optimized Layout: Improve query performance through file compaction, data skipping, and Z-ordering

These capabilities transform data lakes from “swamps” of disorganized data into reliable, high-performance systems suitable for both operational and analytical workloads.
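To make time travel, versioning, and compaction a little more concrete, here is a minimal sketch of the SQL statements involved, built as plain strings. The helper function names are hypothetical and the table name is only a placeholder; the statement forms (`VERSION AS OF`, `DESCRIBE HISTORY`, `RESTORE TABLE`, `OPTIMIZE ... ZORDER BY`) follow Delta Lake SQL as commonly documented, so check them against the version you run.

```python
from typing import Sequence

# Hypothetical helpers that only build SQL text. In a Databricks notebook
# each string could be passed to spark.sql(...).


def time_travel_query(table: str, version: int) -> str:
    """Read the table as it existed at a given commit version."""
    if version < 0:
        raise ValueError("version must be non-negative")
    return f"SELECT * FROM {table} VERSION AS OF {version}"


def history_query(table: str) -> str:
    """List the commits recorded in the table's transaction log."""
    return f"DESCRIBE HISTORY {table}"


def restore_statement(table: str, version: int) -> str:
    """Roll the table back to an earlier version."""
    return f"RESTORE TABLE {table} TO VERSION AS OF {version}"


def compaction_statement(table: str, zorder_cols: Sequence[str] = ()) -> str:
    """Compact small files, optionally co-locating rows by the given columns."""
    stmt = f"OPTIMIZE {table}"
    if zorder_cols:
        stmt += f" ZORDER BY ({', '.join(zorder_cols)})"
    return stmt


# "sales_transformed" is a placeholder table name.
for statement in (
    history_query("sales_transformed"),
    time_travel_query("sales_transformed", 0),
    compaction_statement("sales_transformed", ["date"]),
):
    print(statement)
```

Keeping the statements as strings means the sketch runs anywhere; on a cluster you would execute them one by one and inspect the history output before restoring anything.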

The Photon engine represents Databricks’ next-generation execution engine:

- Vectorized Processing: Optimizes CPU usage by processing data in batches

- Advanced Query Optimization: Automatically rewrites queries for maximum efficiency

- Native C++ Implementation: Delivers performance improvements over the JVM-based Spark execution engine

- Seamless Integration: Works with existing Spark APIs without code changes

Databricks has reported performance improvements of up to 12x over standard Spark with Photon on some workloads; actual gains depend on the query mix. This makes interactive analytics on massive datasets more practical.
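The idea behind vectorized processing can be illustrated without Spark at all. The sketch below is a conceptual toy in plain Python, not Photon's implementation: it contrasts evaluating an expression once per row with applying each operator to a whole batch of column values. The data and function names are made up for illustration.

```python
from array import array

# Row-oriented layout: one dict per record (illustrative data).
rows = [
    {"quantity": 2, "unit_price": 5.0},
    {"quantity": 1, "unit_price": 12.5},
    {"quantity": 4, "unit_price": 3.0},
    {"quantity": 3, "unit_price": 0.0},
]


def revenue_row_at_a_time(records):
    """Evaluate multiply, filter, and sum once per row."""
    total = 0.0
    for record in records:
        revenue = record["quantity"] * record["unit_price"]
        if revenue > 0:
            total += revenue
    return total


# Column-oriented layout: one contiguous typed array per column.
quantity = array("q", [2, 1, 4, 3])
unit_price = array("d", [5.0, 12.5, 3.0, 0.0])


def revenue_batched(qty, price, batch_size=2):
    """Apply each operator to a whole batch of values before moving on."""
    total = 0.0
    for start in range(0, len(qty), batch_size):
        q_batch = qty[start:start + batch_size]
        p_batch = price[start:start + batch_size]
        revenue = [q * p for q, p in zip(q_batch, p_batch)]  # multiply over the batch
        total += sum(v for v in revenue if v > 0)            # filter and aggregate
    return total


print(revenue_row_at_a_time(rows), revenue_batched(quantity, unit_price))
```

Both functions return the same total. In a native engine the batched form is what allows tight loops over contiguous memory, which is where the CPU-level savings come from.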

The Databricks workspace provides an integrated environment for collaboration:

- Notebooks: Interactive documents combining code, visualizations, and narrative text

- Dashboards: Real-time visualizations for monitoring and reporting

- Version Control Integration: Native Git support for code management

- Commenting and Sharing: Collaborative features for team communication

- Role-Based Access Control: Fine-grained security for enterprise requirements

This collaborative approach breaks down silos between technical teams, enabling faster iteration and knowledge sharing.

The Databricks Runtime extends Apache Spark with numerous enhancements:

- Performance Optimizations: Advanced query planning and execution

- Pre-configured Libraries: Curated packages for common data science tasks

- GPU Acceleration: Support for hardware acceleration with NVIDIA GPUs

- Auto-scaling: Dynamic resource allocation based on workload demands

- Serverless Computing: Instant compute without cluster management overhead

These enhancements deliver significantly better performance and reliability compared to standard Apache Spark deployments.

MLflow addresses the challenges of managing the machine learning lifecycle:

- Experiment Tracking: Record parameters, metrics, and artifacts

- Model Registry: Centralized repository for model versions

- Model Serving: Deployment mechanisms for real-time and batch inference

- Model Lineage: Track the full provenance of models from data to deployment

- Reproducibility: Recreate environments and results consistently

This comprehensive approach to MLOps accelerates model development while improving governance and compliance.
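Experiment tracking is the easiest of these to sketch. The function below assumes its `tracker` argument is the `mlflow` module and uses only `start_run`, `log_params`, and `log_metrics`. So that the example runs without a tracking server, it is exercised here against `InMemoryTracker`, a hypothetical stand-in written for this illustration; the parameter and metric values are placeholders, not results.

```python
from contextlib import contextmanager


def log_training_run(tracker, params, metrics, run_name="baseline"):
    """Record one training run; `tracker` is expected to be the mlflow module."""
    with tracker.start_run(run_name=run_name):
        tracker.log_params(params)
        tracker.log_metrics(metrics)


class InMemoryTracker:
    """Hypothetical stand-in exposing the same three calls, for offline use."""

    def __init__(self):
        self.runs = []
        self._active = None

    @contextmanager
    def start_run(self, run_name=None):
        self._active = {"name": run_name, "params": {}, "metrics": {}}
        try:
            yield self._active
        finally:
            # Close the run even if logging raised an exception.
            self.runs.append(self._active)
            self._active = None

    def log_params(self, params):
        self._active["params"].update(params)

    def log_metrics(self, metrics):
        self._active["metrics"].update(metrics)


tracker = InMemoryTracker()
# Placeholder values, chosen only to show the shape of the call.
log_training_run(tracker, {"num_trees": 20, "max_depth": 5}, {"rmse": 12.3})
print(tracker.runs)
```

In a notebook with MLflow available, the call would presumably become `log_training_run(mlflow, params, metrics)`, and the run would show up in the experiment UI.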

Databricks SQL democratizes data access across organizations:

- SQL Editor: Familiar interface for SQL users

- Dashboard Creation: Visual reporting capabilities

- Query Federation: Access data across multiple sources

- Scheduled Queries: Automated reporting workflows

- BI Tool Integration: Connects with popular tools like Tableau and Power BI

This component extends the value of the lakehouse to business analysts and decision-makers, not just technical specialists.

Banks and financial institutions leverage Databricks for:

- Fraud Detection: Real-time transaction monitoring with machine learning

- Risk Management: Comprehensive analysis of market and credit risk

- Customer 360: Unified view of customer interactions across channels

- Regulatory Compliance: Automated reporting with complete data lineage

- Algorithmic Trading: Development and backtesting of trading strategies

The platform’s ability to handle both batch and real-time workloads makes it particularly valuable in finance, where timeliness and accuracy are paramount.

Healthcare organizations implement Databricks for:

- Patient Analytics: Longitudinal analysis of patient outcomes

- Clinical Research: Processing and analyzing clinical trial data

- Genomics Research: Scalable processing of genomic sequences

- Drug Discovery: Accelerating the identification of therapeutic compounds

- Operational Efficiency: Optimizing hospital operations and resource allocation

The platform’s security features and compliance capabilities address the strict regulatory requirements in healthcare.

Retailers use Databricks to transform customer experiences:

- Personalized Recommendations: Real-time product suggestions

- Demand Forecasting: Predictive models for inventory optimization

- Supply Chain Analytics: End-to-end visibility and optimization

- Customer Segmentation: Advanced clustering for targeted marketing

- Price Optimization: Dynamic pricing based on market conditions

These applications enable retailers to compete effectively in an increasingly digital marketplace.

Industrial organizations deploy Databricks for operational technology integration:

- Predictive Maintenance: Anticipating equipment failures before they occur

- Quality Control: Statistical process control with machine learning

- Supply Chain Optimization: End-to-end visibility and forecasting

- Energy Optimization: Reducing consumption through advanced analytics

- Digital Twin Analysis: Simulating physical assets for optimization

The platform’s ability to process streaming IoT data alongside historical records enables comprehensive operational analytics.

Getting started with Databricks involves a few key steps:

- Choose a Cloud Provider: Databricks is available on AWS, Azure, and Google Cloud

- Configure Workspace: Set up authentication and security settings

- Create Clusters: Define compute resources for your workloads

- Import Data: Connect to your data sources

- Create Notebooks: Begin developing your data pipelines and analyses

The platform’s user-friendly interface makes this process accessible even for teams new to big data technologies.
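The "Create Clusters" step usually comes down to a small JSON document. As a rough sketch, the helper below builds one as a Python dict. The field names follow the Databricks Clusters REST API as I understand it; the helper name, runtime version, and node type are placeholders to replace with values valid in your workspace and cloud.

```python
import json


def build_cluster_spec(name, min_workers, max_workers, idle_minutes=30):
    """Return a cluster definition with autoscaling and auto-termination."""
    if not 1 <= min_workers <= max_workers:
        raise ValueError("expected 1 <= min_workers <= max_workers")
    return {
        "cluster_name": name,
        "spark_version": "13.3.x-scala2.12",  # placeholder runtime version
        "node_type_id": "i3.xlarge",          # placeholder; node types are cloud-specific
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        "autotermination_minutes": idle_minutes,
    }


spec = build_cluster_spec("etl-dev", min_workers=2, max_workers=8)
print(json.dumps(spec, indent=2))
```

The same dict could be pasted into the cluster UI's JSON view or sent to the API by whatever client your team already uses.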

```python
from pyspark.sql.functions import col, date_format

# `spark` is the SparkSession that Databricks notebooks provide automatically.
# Read data from a source; inferSchema keeps quantity and unit_price numeric
raw_data = (
    spark.read.format("csv")
    .option("header", "true")
    .option("inferSchema", "true")
    .load("/data/sales.csv")
)

# Transform the data
transformed_data = (
    raw_data
    .withColumn("date", date_format(col("transaction_date"), "yyyy-MM-dd"))
    .withColumn("revenue", col("quantity") * col("unit_price"))
    .dropDuplicates()
    .filter(col("revenue") > 0)
)

# Write to Delta Lake
transformed_data.write.format("delta").mode("overwrite").saveAsTable("sales_transformed")
```

This simple example demonstrates the core workflow of reading, transforming, and writing data—a foundation that can be extended to more complex pipelines.

```python
import mlflow
import mlflow.spark
from pyspark.ml.evaluation import RegressionEvaluator
from pyspark.ml.feature import VectorAssembler
from pyspark.ml.regression import RandomForestRegressor

# Prepare features (assumes these columns exist, are numeric, and contain no nulls)
feature_cols = ["quantity", "unit_price", "customer_age", "product_category_id"]
assembler = VectorAssembler(inputCols=feature_cols, outputCol="features")
training_data = assembler.transform(transformed_data)

# Split into training and testing sets
train, test = training_data.randomSplit([0.8, 0.2], seed=42)

# Train a model
rf = RandomForestRegressor(featuresCol="features", labelCol="revenue")
model = rf.fit(train)

# Evaluate the model
predictions = model.transform(test)
evaluator = RegressionEvaluator(
    labelCol="revenue", predictionCol="prediction", metricName="rmse"
)
rmse = evaluator.evaluate(predictions)
print(f"Root Mean Squared Error: {rmse}")

# Log the metric and the model with MLflow in a single run
with mlflow.start_run():
    mlflow.log_metric("rmse", rmse)
    mlflow.spark.log_model(model, "random_forest_model")
```

This example illustrates how machine learning workflows integrate seamlessly within the Databricks environment.

Unity Catalog provides a comprehensive governance layer:

- Unified Permissions: Single access control model across data assets

- Fine-grained Access Control: Column and row-level security

- Auditing: Detailed logs of all data access

- Lineage Tracking: Visual representation of data flows

- Discovery: Search and metadata management for all data assets

This governance layer is critical for organizations with compliance requirements or sensitive data.
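As a small illustration of the unified permission model, the sketch below generates the grants a group would typically need to read one table under Unity Catalog's three-level `catalog.schema.table` namespace. The helper name and the catalog, schema, table, and group names are hypothetical; the privilege names (`USE CATALOG`, `USE SCHEMA`, `SELECT`) are the ones I believe Unity Catalog uses, so verify them against your workspace.

```python
def read_access_grants(catalog, schema, table, principal):
    """Build the GRANT statements that give a principal read access to one table."""
    quoted = f"`{principal}`"  # backticks protect names containing spaces or dashes
    return [
        f"GRANT USE CATALOG ON CATALOG {catalog} TO {quoted}",
        f"GRANT USE SCHEMA ON SCHEMA {catalog}.{schema} TO {quoted}",
        f"GRANT SELECT ON TABLE {catalog}.{schema}.{table} TO {quoted}",
    ]


# All four names are placeholders.
grants = read_access_grants("main", "sales", "orders", "data-analysts")
for grant in grants:
    print(grant)
```

Access is checked at each level, which is why the table-level `SELECT` alone would not be enough in this model.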

The Databricks Feature Store streamlines feature engineering:

- Feature Registry: Centralized repository of feature definitions

- Feature Sharing: Reuse features across multiple models

- Online Serving: Low-latency access for real-time inference

- Point-in-time Correctness: Prevent data leakage in training

- Monitoring: Track feature drift and quality over time

This capability significantly accelerates machine learning development while improving model quality.
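Point-in-time correctness is easier to see with a toy example. The sketch below is plain Python, not the Feature Store API: for each labeled event it looks up the latest feature value recorded at or before the event's timestamp, so values from the future never leak into training rows. The data, timestamps, and names are all made up for illustration.

```python
from bisect import bisect_right

# customer_id -> list of (timestamp, feature_value), sorted by timestamp
feature_history = {
    "c1": [(1, 10.0), (5, 20.0), (9, 30.0)],
}


def feature_as_of(history, ts):
    """Return the latest value whose timestamp is <= ts, or None if none exists."""
    timestamps = [t for t, _ in history]
    idx = bisect_right(timestamps, ts)
    return history[idx - 1][1] if idx else None


# (customer_id, event_timestamp, label)
labels = [("c1", 4, 0), ("c1", 6, 1), ("c1", 0, 0)]

training_rows = [
    (cid, ts, feature_as_of(feature_history[cid], ts), label)
    for cid, ts, label in labels
]
print(training_rows)
```

A naive join on `customer_id` alone would attach the newest value (30.0) to every row, including events that happened before that value existed.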

Databricks AutoML makes model development accessible to non-specialists:

- Automated Feature Engineering: Intelligent preprocessing of raw data

- Hyperparameter Optimization: Finding optimal model configurations

- Model Selection: Evaluating multiple algorithms automatically

- Explainability: Understanding model decisions with interpretability tools

- Code Generation: Creating editable notebooks from automated processes

This approach bridges the gap between data analysts and advanced machine learning capabilities.

Effective cost control is built into the platform:

- Cluster Policies: Define constraints on cluster configurations

- Auto-termination: Automatically shut down idle resources

- Observability Tools: Monitor usage and identify optimization opportunities

- Photon Engine: Reduce compute needs through improved performance

- Delta Engine Optimization: Minimize I/O with intelligent caching and pruning

These capabilities help organizations maximize their return on investment in data infrastructure.
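Cluster policies are the most mechanical of these controls, so here is a simplified sketch of how one constrains a cluster definition. The policy uses the `fixed`, `allowlist`, and `range` rule types from the Databricks policy definition format as I understand it; the checker function is a hypothetical local illustration, since real enforcement happens inside the platform, and all values are placeholders.

```python
policy = {
    "autotermination_minutes": {"type": "range", "minValue": 10, "maxValue": 60},
    "node_type_id": {"type": "allowlist", "values": ["i3.xlarge", "i3.2xlarge"]},
    "spark_version": {"type": "fixed", "value": "13.3.x-scala2.12"},
}


def policy_violations(spec, rules):
    """Return human-readable problems; an empty list means the spec passes."""
    problems = []
    for key, rule in rules.items():
        value = spec.get(key)
        kind = rule["type"]
        if kind == "fixed":
            if value != rule["value"]:
                problems.append(f"{key} must be {rule['value']!r}")
        elif kind == "allowlist":
            if value not in rule["values"]:
                problems.append(f"{key} must be one of {rule['values']}")
        elif kind == "range":
            low = rule.get("minValue", float("-inf"))
            high = rule.get("maxValue", float("inf"))
            if value is None or not low <= value <= high:
                problems.append(f"{key} must be between {low} and {high}")
    return problems


requested = {
    "spark_version": "13.3.x-scala2.12",
    "node_type_id": "i3.8xlarge",    # not on the allowlist
    "autotermination_minutes": 120,  # above the allowed range
}
for problem in policy_violations(requested, policy):
    print(problem)
```

Capping `autotermination_minutes` and restricting node types are the two rules that tend to matter most for cost, which is why they appear here.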

Optimizing costs on Databricks involves several key strategies:

- Right-sizing Clusters: Match compute resources to workload requirements

- Implementing Autoscaling: Scale resources based on actual demand

- Using Spot Instances: Leverage lower-cost compute for fault-tolerant workloads

- Data Skipping: Structure data to minimize unnecessary processing

- Workload Scheduling: Run jobs during off-peak hours when appropriate

A thoughtful approach to resource management can significantly reduce platform costs.
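Of these strategies, data skipping benefits most from a worked example. The sketch below is a plain-Python illustration of the idea rather than Delta Lake's actual code: each file carries min/max statistics for a column, and a range filter only needs to open files whose range overlaps the query. File names and dates are made up.

```python
# Per-file statistics, as a storage layer might record them (illustrative values).
files = [
    {"path": "part-000", "min_date": "2024-01-01", "max_date": "2024-01-31"},
    {"path": "part-001", "min_date": "2024-02-01", "max_date": "2024-02-29"},
    {"path": "part-002", "min_date": "2024-03-01", "max_date": "2024-03-31"},
]


def files_to_scan(file_stats, start, end):
    """Keep only files whose [min_date, max_date] range overlaps [start, end].

    ISO-8601 date strings sort lexicographically, so string comparison is enough.
    """
    return [
        f["path"]
        for f in file_stats
        if f["max_date"] >= start and f["min_date"] <= end
    ]


print(files_to_scan(files, "2024-02-10", "2024-02-20"))
```

The technique only pays off when files have narrow, mostly non-overlapping ranges, which is why sorting or clustering data on commonly filtered columns matters.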

Comparing Databricks with traditional cloud data warehouses:

- Data Flexibility: Better support for unstructured and semi-structured data

- Machine Learning Integration: Native support for advanced analytics

- Open Formats: Storage in open formats versus proprietary formats

- Hybrid Workloads: Support for both SQL analytics and data science

- Computing Model: Separation of storage and compute with flexible scaling

While data warehouses excel at structured SQL analytics, Databricks offers greater versatility for diverse data workloads.

Comparing with DIY open-source stacks:

- Integration: Pre-built integration versus manual component assembly

- Maintenance: Managed service versus self-maintenance

- Optimization: Performance tuning built-in versus manual optimization

- Security: Enterprise-grade security features out of the box

- Support: Professional support versus community assistance

The trade-off between control and convenience often favors Databricks for enterprise workloads.

Comparing with native cloud analytics services:

- Cloud Agnostic: Works across multiple clouds versus cloud lock-in

- Unified Experience: Consistent interface versus disparate services

- Performance: Optimized specifically for data workloads

- Innovation Pace: Specialized focus on analytics and machine learning

- Community: Leverages the broader Spark ecosystem

The choice often depends on existing cloud commitments and specific workload requirements.

Ongoing enhancements to query performance include:

- Extended Photon Coverage: Supporting more operations and data types

- Adaptive Query Execution: Dynamic optimization based on data characteristics

- Materialized Views: Transparent acceleration through pre-computation

- Data Skipping and Indexing: Reducing I/O through intelligent data access

- Machine Learning Acceleration: Specialized optimizations for ML workloads

These improvements continue to push the performance boundaries for big data analytics.

Democratizing data access through simplified interfaces:

- Visual ETL: Drag-and-drop pipeline creation

- Automated ML: Simplified model development for non-specialists

- Natural Language Queries: SQL generation from conversational language

- Automated Dashboarding: Intelligent visualization recommendations

- Guided Analytics: Step-by-step workflows for common analysis patterns

These capabilities extend the platform’s value to a broader audience within organizations.

Enhancing enterprise readiness with advanced controls:

- Enhanced Privacy Features: Supporting data anonymization and masking

- Cross-cloud Governance: Unified policies across multi-cloud deployments

- Regulatory Compliance Frameworks: Pre-built configurations for GDPR, CCPA, etc.

- Zero Trust Security Models: Comprehensive verification for all access

- Real-time Monitoring: Advanced threat detection and prevention

These features address the growing regulatory and security challenges facing data-driven organizations.

Databricks has fundamentally transformed the data analytics landscape by unifying the previously separate worlds of data engineering, data science, and business intelligence. The platform’s lakehouse architecture resolves the traditional trade-offs between data lakes and data warehouses, enabling organizations to build comprehensive data platforms that serve diverse analytical needs.

As data volumes continue to grow and analytics requirements become more sophisticated, Databricks’ unified approach offers a compelling solution to the complexity challenges that have traditionally hampered data initiatives. By providing a collaborative environment where different technical specialists can work together effectively, the platform accelerates innovation while improving governance and cost efficiency.

For organizations embarking on data transformation initiatives, Databricks represents more than just a technology platform—it embodies a modern approach to data architecture that can fundamentally change how enterprises derive value from their data assets. As the platform continues to evolve with advancements in performance, accessibility, and governance, it remains at the forefront of the rapidly changing data and AI landscape.
