How AI Copilots Are Replacing Manual Data Pipeline Development: The 40% Revolution Transforming Data Engineering

Data engineering is undergoing its most significant transformation since the advent of cloud computing. In 2025, 40% of new data pipeline development efforts involve some form of AI assistance. This is not just another incremental improvement; it is a fundamental shift that is redefining how data engineers approach their craft.

Gone are the days when data engineers spent countless hours crafting SQL queries from scratch, manually mapping API endpoints, or debugging transformation logic line by line. AI copilots have stepped in as intelligent partners, automating the tedious work that once consumed 60-70% of a data engineer’s time. But what does this mean for the profession, and how can teams harness this technology effectively?

This article explores the revolutionary impact of AI-assisted data pipeline development, examining real-world implementations, measurable benefits, and the strategic considerations every data team must address to stay competitive in this new era.

The Evolution From Manual to AI-Assisted Pipeline Development

Traditional Pipeline Development: The Pain Points

Data pipeline development has historically been a labor-intensive process fraught with repetitive tasks. Consider a typical scenario: connecting to a new SaaS platform’s API, transforming the data, and loading it into a data warehouse. This process traditionally involved:

- Manual API exploration: Hours spent reading documentation, testing endpoints, and understanding data schemas

- Custom connector development: Writing boilerplate code for authentication, pagination, and error handling

- Schema mapping and transformation logic: Manually defining how source data maps to target schemas

- Testing and validation: Creating test cases and validation rules from scratch

A senior data engineer at a Fortune 500 company recently shared that their team spent an average of 3-4 weeks developing a single new data pipeline from a complex SaaS platform. Today, with AI assistance, that same pipeline can be operational in 3-4 days.

The AI Copilot Revolution

AI copilots in data engineering function as intelligent assistants that understand context, generate code, and automate decision-making processes. Unlike simple code generators, these tools leverage:

- Large Language Models (LLMs) trained on vast codebases and documentation

- Domain-specific knowledge about data engineering patterns and best practices

- Real-time context awareness of your existing infrastructure and schemas

- Iterative learning from your team’s coding patterns and preferences

Key Areas Where AI Copilots Excel

1. Automated SQL Query Generation and Optimization

One of the most immediate impacts of AI copilots is in SQL development. Modern tools can:

Generate Complex Queries from Natural Language:

    -- Generated from: "Show me monthly revenue trends by product category,
    -- including year-over-year growth rates"
    WITH monthly_revenue AS (
        SELECT
            DATE_TRUNC('month', order_date) AS month,
            product_category,
            SUM(revenue) AS monthly_revenue
        FROM sales_data
        WHERE order_date >= '2023-01-01'
        GROUP BY 1, 2
    ),
    yoy_comparison AS (
        SELECT
            month,
            product_category,
            monthly_revenue,
            -- Assumes every category has a row for every month;
            -- a missing month would shift the 12-row offset
            LAG(monthly_revenue, 12) OVER (
                PARTITION BY product_category
                ORDER BY month
            ) AS prev_year_revenue
        FROM monthly_revenue
    )
    SELECT
        month,
        product_category,
        monthly_revenue,
        ROUND(
            -- NULLIF avoids division by zero when the prior-year month had no revenue
            ((monthly_revenue - prev_year_revenue) / NULLIF(prev_year_revenue, 0)) * 100, 2
        ) AS yoy_growth_rate
    FROM yoy_comparison
    WHERE prev_year_revenue IS NOT NULL
    ORDER BY month DESC, product_category;

Optimize Existing Queries: AI copilots analyze query execution plans and suggest performance improvements, often reducing query execution time by 30-50%.
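To make the idea of reading an execution plan concrete, here is a minimal sketch using SQLite from the Python standard library. It is not how any particular copilot works internally; it only shows the kind of before-and-after plan comparison such a tool might reason about. The index name `idx_sales_category` and the simplified `sales_data` columns are assumptions for illustration.

```python
import sqlite3
from typing import List


def query_plan(conn: sqlite3.Connection, sql: str) -> List[str]:
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute(f"EXPLAIN QUERY PLAN {sql}").fetchall()
    return [row[3] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales_data (order_date TEXT, product_category TEXT, revenue REAL)"
)

sql = "SELECT SUM(revenue) FROM sales_data WHERE product_category = 'books'"

# Without an index, the planner has to scan the whole table.
plan_before = query_plan(conn, sql)

# A typical suggestion: index the column used in the filter.
conn.execute("CREATE INDEX idx_sales_category ON sales_data (product_category)")
plan_after = query_plan(conn, sql)

print("before:", plan_before)
print("after: ", plan_after)
```

The exact plan wording varies between SQLite versions, but the first plan reports a table scan and the second reports a search using the new index. Warehouse engines expose comparable plan output through their own `EXPLAIN` variants.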

2. REST API Integration Automation

API integration, once a time-consuming manual process, has been revolutionized by AI assistance:

Intelligent Connector Generation:

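    # AI-generated connector for the Salesforce REST API (illustrative)
    import logging
    from typing import Dict, List

    import requests

    # Assumed API version; use whichever version your org supports
    API_VERSION = "v59.0"


    class SalesforceConnector:
        def __init__(self, instance_url: str, access_token: str):
            self.instance_url = instance_url.rstrip("/")
            self.access_token = access_token
            self.session = requests.Session()
            self.session.headers.update({
                "Authorization": f"Bearer {access_token}",
                "Content-Type": "application/json",
            })

        def _execute_soql(self, query: str, batch_size: int) -> Dict:
            """Run a SOQL query and return the first page of results."""
            response = self.session.get(
                f"{self.instance_url}/services/data/{API_VERSION}/query",
                params={"q": query},
                headers={"Sforce-Query-Options": f"batchSize={batch_size}"},
                timeout=30,
            )
            response.raise_for_status()
            return response.json()

        def _get_next_batch(self, next_url: str) -> Dict:
            """Fetch the next page; nextRecordsUrl is a path relative to the instance."""
            response = self.session.get(f"{self.instance_url}{next_url}", timeout=30)
            response.raise_for_status()
            return response.json()

        def get_records(self, sobject: str, fields: List[str],
                        batch_size: int = 2000) -> List[Dict]:
            """Fetch records with automatic pagination handling."""
            records = []
            query = f"SELECT {','.join(fields)} FROM {sobject}"
            try:
                response = self._execute_soql(query, batch_size)
                records.extend(response.get("records", []))
                # Handle pagination automatically
                while not response.get("done", True):
                    next_url = response.get("nextRecordsUrl")
                    response = self._get_next_batch(next_url)
                    records.extend(response.get("records", []))
            except Exception as e:
                logging.error(f"Error fetching {sobject} records: {e}")
                raise
            return records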

3. Schema Evolution and Data Transformation

AI copilots excel at understanding schema changes and automatically generating transformation logic:

Automated dbt Model Generation:

    -- Generated transformation model for customer lifecycle analysis
    {{ config(materialized='table') }}

    WITH customer_events AS (
        SELECT
            customer_id,
            event_type,
            event_timestamp,
            -- Number events per customer and event type, so that
            -- sequence 1 is the first event of each type
            ROW_NUMBER() OVER (
                PARTITION BY customer_id, event_type
                ORDER BY event_timestamp
            ) AS event_sequence
        FROM {{ ref('raw_customer_events') }}
    ),
    first_purchase AS (
        SELECT
            customer_id,
            event_timestamp AS first_purchase_date
        FROM customer_events
        WHERE event_type = 'purchase' AND event_sequence = 1
    ),
    customer_metrics AS (
        SELECT
            c.customer_id,
            c.created_at AS registration_date,
            fp.first_purchase_date,
            DATEDIFF('day', c.created_at, fp.first_purchase_date) AS days_to_first_purchase,
            COUNT(ce.event_type) AS total_events
        FROM {{ ref('customers') }} c
        LEFT JOIN first_purchase fp ON c.customer_id = fp.customer_id
        LEFT JOIN customer_events ce ON c.customer_id = ce.customer_id
        GROUP BY 1, 2, 3, 4
    )
    SELECT * FROM customer_metrics
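Before any transformation logic can be regenerated, a schema change has to be detected. The following is a minimal sketch of that detection step, assuming schemas are available as plain column-to-type mappings. The function name `diff_schemas` and the sample columns are hypothetical and not part of any specific tool.

```python
from typing import Dict, List


def diff_schemas(old: Dict[str, str], new: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare two {column: type} mappings and report what changed."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    retyped = sorted(c for c in set(old) & set(new) if old[c] != new[c])
    return {"added": added, "removed": removed, "retyped": retyped}


# Hypothetical snapshots of the same source table taken at two points in time
old_schema = {"customer_id": "INTEGER", "created_at": "TIMESTAMP", "email": "VARCHAR"}
new_schema = {"customer_id": "BIGINT", "created_at": "TIMESTAMP", "signup_channel": "VARCHAR"}

changes = diff_schemas(old_schema, new_schema)
print(changes)
```

A report like this can be passed to a copilot as context, so that a regenerated model accounts for the dropped `email` column and the widened `customer_id` type rather than failing at run time.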

Real-World Impact: Case Studies and Metrics

Case Study 1: E-commerce Data Platform Modernization

A mid-sized e-commerce company implemented AI-assisted pipeline development with remarkable results:

Before AI Implementation:

- Pipeline development time: 2-3 weeks per new source

- Error rate: 15-20% of initial deployments required fixes

- Team productivity: 3-4 new pipelines per quarter

After AI Implementation:

- Pipeline development time: 3-5 days per new source

- Error rate: <5% of deployments required fixes

- Team productivity: 12-15 new pipelines per quarter
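The ranges above can be turned into improvement factors with a little arithmetic. The sketch below uses only the figures reported in this case study, plus one assumption: a working week of five days, so that "2-3 weeks" becomes 10-15 working days.

```python
from typing import Tuple

WORKING_DAYS_PER_WEEK = 5  # assumption, not stated in the case study

Range = Tuple[float, float]


def ratio_range(numerator: Range, denominator: Range) -> Range:
    """Smallest and largest possible ratio of two (low, high) ranges."""
    low = numerator[0] / denominator[1]
    high = numerator[1] / denominator[0]
    return low, high


before_days = (2 * WORKING_DAYS_PER_WEEK, 3 * WORKING_DAYS_PER_WEEK)  # 2-3 weeks
after_days = (3, 5)                                                   # 3-5 days
before_pipelines = (3, 4)                                             # per quarter
after_pipelines = (12, 15)                                            # per quarter

speedup = ratio_range(before_days, after_days)
throughput_gain = ratio_range(after_pipelines, before_pipelines)

print(f"Development time improved {speedup[0]:.1f}x to {speedup[1]:.1f}x")
print(f"Quarterly throughput improved {throughput_gain[0]:.1f}x to {throughput_gain[1]:.1f}x")
```

Under that assumption, development time improves by a factor of 2 to 5, and quarterly throughput by a factor of 3 to 5.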

Key Success Factors:

- Gradual adoption starting with SQL generation

- Team training on prompt engineering for data tasks

- Integration with existing CI/CD workflows

Case Study 2: Financial Services Data Mesh Implementation

A large financial institution leveraged AI copilots to accelerate their data mesh initiative:

Challenges Addressed:

- Standardization: Ensuring consistent data product development across 20+ domains

- Compliance: Maintaining regulatory requirements while increasing development velocity

- Skill gaps: Enabling domain experts to create data products without extensive technical knowledge

Results:

- 50% reduction in time-to-market for new data products

- 80% improvement in code consistency across domains

- 90% of domain experts could independently create basic data transformations

The Technology Stack Behind AI-Assisted Development

Leading AI Copilot Platforms

GitHub Copilot for Data Engineering:

- Excellent for Python, SQL, and YAML generation

- Strong integration with popular IDEs

- Continuously improving context awareness

Databricks Assistant:

- Native integration with Databricks environment

- Specialized for Spark and Delta Lake operations

- Advanced notebook-based development support

Snowflake Copilot:

- Optimized for Snowflake-specific SQL dialects

- Warehouse performance optimization suggestions

- Integration with Snowpark for advanced analytics

Custom LLM Solutions:

    # Example of custom AI assistant integration
    from openai import OpenAI


    class DataPipelineAssistant:
        def __init__(self, api_key: str):
            self.client = OpenAI(api_key=api_key)

        def generate_transformation(self, source_schema: dict,
                                    target_schema: dict,
                                    business_rules: str) -> str:
            prompt = f"""
            Generate a dbt transformation model that:
            - Transforms data from source schema: {source_schema}
            - To target schema: {target_schema}
            - Following business rules: {business_rules}

            Include appropriate data quality checks and documentation.
            """
            response = self.client.chat.completions.create(
                model="gpt-4",
                messages=[{"role": "user", "content": prompt}],
                temperature=0.2
            )
            return response.choices[0].message.content

Integration Patterns and Best Practices

Workflow Integration:

- IDE-based assistance for real-time code generation

- CI/CD pipeline integration for automated testing and validation

- Documentation generation for maintaining pipeline lineage

- Code review automation using AI-powered analysis

Quality Assurance Framework:

    # AI-assisted pipeline validation
    validation_rules:
      code_quality:
        - ai_generated_code_review: true
        - automated_testing: true
        - performance_analysis: true
      data_quality:
        - schema_validation: true
        - data_profiling: true
        - anomaly_detection: true
      security:
        - pii_detection: true
        - access_control_validation: true
        - encryption_compliance: true
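As one illustration of how a flag such as `pii_detection` might be enforced, the sketch below flags column names that look like personal data. It is deliberately simple and rests on several assumptions: the rules are passed as a flat dictionary rather than parsed from the YAML above, the name patterns are hypothetical, and a real check would also sample column values.

```python
import re
from typing import Dict, List

# Hypothetical name patterns; tune these for your own data model
PII_NAME_PATTERN = re.compile(
    r"(email|phone|ssn|birth|passport|address)", re.IGNORECASE
)


def flag_pii_columns(columns: List[str]) -> List[str]:
    """Return the columns whose names suggest personal data."""
    return [column for column in columns if PII_NAME_PATTERN.search(column)]


def run_security_checks(rules: Dict[str, bool], columns: List[str]) -> Dict[str, List[str]]:
    """Run only the checks that are switched on in the rules."""
    findings: Dict[str, List[str]] = {}
    if rules.get("pii_detection"):
        findings["pii_detection"] = flag_pii_columns(columns)
    return findings


rules = {"pii_detection": True, "access_control_validation": True}
columns = ["customer_id", "Email_Address", "order_total", "phone_number"]

findings = run_security_checks(rules, columns)
print(findings)
```

Here `Email_Address` and `phone_number` are flagged, which could block a merge until someone confirms the columns are masked or excluded.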

Challenges and Limitations

Technical Limitations

Context Window Constraints: Current LLMs have limited context windows, making it challenging to understand large, complex codebases in their entirety.
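A common workaround is to split context into batches that each fit within a budget. The sketch below packs schema snippets greedily. It assumes a rough heuristic of four characters per token, which is only an approximation and no substitute for the model's actual tokenizer; `pack_into_batches` is a hypothetical helper name.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Rough estimate: about four characters per token (an assumption)."""
    return max(1, len(text) // 4)


def pack_into_batches(snippets: List[str], token_budget: int) -> List[List[str]]:
    """Greedily group snippets so each batch stays within the token budget.

    A single snippet larger than the budget still gets its own batch.
    """
    batches: List[List[str]] = []
    current: List[str] = []
    used = 0
    for snippet in snippets:
        cost = estimate_tokens(snippet)
        if current and used + cost > token_budget:
            batches.append(current)
            current, used = [], 0
        current.append(snippet)
        used += cost
    if current:
        batches.append(current)
    return batches


# Three stand-in snippets of 40 characters each, roughly 10 tokens apiece
snippets = ["a" * 40, "b" * 40, "c" * 40]
batches = pack_into_batches(snippets, token_budget=25)
print([len(batch) for batch in batches])
```

With a budget of 25 estimated tokens, the first two snippets share a batch and the third starts a new one. Each batch can then be sent as a separate request, at the cost of the model never seeing the whole codebase at once.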

Domain-Specific Knowledge Gaps: While AI copilots excel at general programming tasks, they may struggle with highly specialized industry requirements or proprietary systems.

Code Quality Variability: Generated code quality can vary significantly based on prompt quality and context clarity.

Organizational Challenges

Skill Evolution Requirements: Teams must develop new skills in prompt engineering and AI tool management while maintaining traditional data engineering expertise.

Quality Control Processes: Organizations need robust review processes to ensure AI-generated code meets production standards.
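Part of such a review can be automated as a gate that runs before a human looks at the code. The following sketch checks generated SQL against a hypothetical deny-list. The keyword matching is naive (it also matches inside comments and string literals), so treat it as a first filter under those assumptions, not a replacement for review.

```python
import re
from typing import List

# Hypothetical deny-list for generated transformation SQL
FORBIDDEN_KEYWORDS = ("DROP", "TRUNCATE", "DELETE", "ALTER", "GRANT")


def review_generated_sql(sql: str) -> List[str]:
    """Return a list of issues; an empty list means the gate passed."""
    issues = []
    for keyword in FORBIDDEN_KEYWORDS:
        # Word boundaries keep column names such as deleted_at from matching
        if re.search(rf"\b{keyword}\b", sql, re.IGNORECASE):
            issues.append(f"forbidden statement: {keyword}")
    if not re.search(r"\bSELECT\b", sql, re.IGNORECASE):
        issues.append("no SELECT statement found")
    return issues


passed = review_generated_sql("SELECT customer_id, deleted_at FROM customers")
blocked = review_generated_sql("DROP TABLE customers")
print(passed)
print(blocked)
```

The first statement passes with no issues, while the second is reported for both the `DROP` keyword and the missing `SELECT`.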

Dependency Management: Over-reliance on AI tools can create vulnerabilities if services become unavailable or change significantly.
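One way to limit this risk is to keep a non-AI fallback for every generation step. The sketch below wraps a generator callable so that a service failure falls back to a hand-maintained template. The function `generate_with_fallback` and the stand-in generator are hypothetical, and catching every exception is a simplification that a production version would narrow.

```python
import logging
from typing import Callable


def generate_with_fallback(
    generate: Callable[[str], str], prompt: str, fallback_sql: str
) -> str:
    """Try the AI generator first; use a vetted template if it fails."""
    try:
        return generate(prompt)
    except Exception as exc:  # broad on purpose for this sketch
        logging.warning("AI generation failed (%s); using fallback template", exc)
        return fallback_sql


def unavailable_service(prompt: str) -> str:
    """Stand-in for a copilot API that is currently down."""
    raise ConnectionError("service unavailable")


template = "SELECT customer_id, created_at FROM customers"
result = generate_with_fallback(unavailable_service, "build customer model", template)
print(result)
```

Because the generator is passed in as a callable, the same wrapper works with any provider and is easy to exercise in tests without network access.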

Strategic Implementation Roadmap

Phase 1: Foundation Building (Months 1-2)

Tool Evaluation and Selection:

- Assess current development workflows and pain points

- Pilot 2-3 AI copilot solutions with small projects

- Establish baseline productivity metrics

Team Preparation:

- Conduct prompt engineering training for data engineers

- Develop code review guidelines for AI-generated code

- Create testing frameworks for automated validation

Phase 2: Gradual Integration (Months 3-6)

Selective Automation:

- Start with SQL query generation and API integration

- Implement AI assistance for documentation generation

- Gradually expand to transformation logic development

Process Optimization:

- Integrate AI tools with existing CI/CD pipelines

- Establish quality gates and validation checkpoints

- Develop internal best practices and guidelines

Phase 3: Advanced Implementation (Months 7-12)

Comprehensive Coverage:

- Extend AI assistance to complex pipeline architectures

- Implement automated testing and monitoring generation

- Develop custom AI models for organization-specific patterns

Continuous Improvement:

- Collect and analyze productivity metrics

- Refine prompt templates and code generation patterns

- Establish feedback loops for continuous optimization

Measuring Success: Key Metrics and KPIs

Productivity Metrics

Development Velocity:

- Time from requirement to production deployment

- Number of pipelines delivered per sprint/quarter

- Code lines generated vs. manually written

Quality Indicators:

- Bug rate in AI-generated vs. manually written code

- Code review feedback frequency and severity

- Production incident rates

Resource Utilization:

- Developer time allocation across different activities

- Infrastructure cost optimization through better code

- Team capacity for strategic vs. tactical work
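Several of these metrics can be computed from a simple delivery log. The sketch below shows one possible shape for that log and derives average lead time and fix rate, split by whether the pipeline was AI-assisted. The `PipelineDelivery` record and the three sample rows are invented purely for illustration and are not measurements.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class PipelineDelivery:
    name: str
    requested: date
    deployed: date
    ai_assisted: bool
    needed_fix: bool


def average_lead_time_days(deliveries: List[PipelineDelivery]) -> float:
    """Mean number of days from requirement to production deployment."""
    if not deliveries:
        raise ValueError("no deliveries to measure")
    total = sum((d.deployed - d.requested).days for d in deliveries)
    return total / len(deliveries)


def fix_rate(deliveries: List[PipelineDelivery]) -> float:
    """Share of deployments that needed a fix after release."""
    if not deliveries:
        raise ValueError("no deliveries to measure")
    return sum(d.needed_fix for d in deliveries) / len(deliveries)


# Made-up sample log
log = [
    PipelineDelivery("orders", date(2025, 1, 6), date(2025, 1, 24), False, True),
    PipelineDelivery("billing", date(2025, 2, 3), date(2025, 2, 7), True, False),
    PipelineDelivery("crm", date(2025, 2, 10), date(2025, 2, 16), True, False),
]

assisted = [d for d in log if d.ai_assisted]
manual = [d for d in log if not d.ai_assisted]

print("AI-assisted lead time:", average_lead_time_days(assisted))
print("Manual lead time:", average_lead_time_days(manual))
print("AI-assisted fix rate:", fix_rate(assisted))
```

Capturing the same fields before adoption gives the baseline against which later numbers can be compared.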

Business Impact Metrics

Time-to-Value:

- Reduced time to deliver new data products

- Faster response to changing business requirements

- Accelerated insights generation

Cost Efficiency:

- Development cost per pipeline

- Maintenance overhead reduction

- Infrastructure optimization savings

Future Outlook: What’s Next for AI-Assisted Data Engineering

Emerging Trends

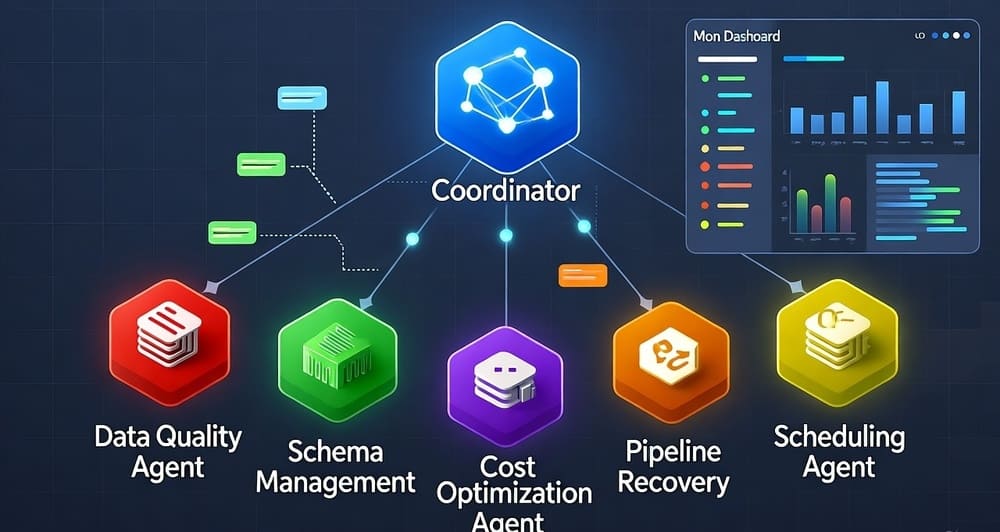

Autonomous Pipeline Management: Future AI systems will not just generate code but actively monitor, optimize, and maintain data pipelines with minimal human intervention.

Natural Language Data Exploration: Data scientists and analysts will interact with data warehouses using natural language, with AI translating intent into optimized queries and transformations.

Predictive Pipeline Optimization: AI will anticipate data volume changes, schema evolution, and performance bottlenecks, proactively adjusting pipeline configurations.

Technology Evolution

Specialized Data Engineering LLMs: Purpose-built models trained specifically on data engineering patterns, documentation, and best practices will provide more accurate and contextually appropriate assistance.

Real-time Collaboration: AI copilots will become more sophisticated in understanding team dynamics, coding standards, and organizational patterns, providing increasingly personalized assistance.

Integrated Development Environments: Future IDEs will seamlessly blend AI assistance with traditional development tools, making AI-human collaboration more natural and efficient.

Key Takeaways and Action Items

The transformation of data pipeline development through AI assistance represents more than a technological upgrade. It is a fundamental shift in how data teams operate and deliver value. As we've seen, the 40% share of new pipeline development that involves AI assistance in 2025 reflects not just a trend but a new operational standard.

Critical Success Factors:

- Start incrementally: Begin with low-risk, high-impact areas like SQL generation and API integration

- Invest in team skills: Prompt engineering and AI tool proficiency are becoming essential data engineering skills

- Maintain quality standards: Implement robust review and testing processes for AI-generated code

- Measure and optimize: Track productivity gains while monitoring code quality and maintainability

Immediate Action Items:

- Evaluate current workflows to identify the highest-impact areas for AI assistance

- Pilot one AI copilot tool with a small team on a non-critical project

- Develop prompt engineering capabilities within your data engineering team

- Establish quality gates for AI-generated code integration

- Create baseline metrics to measure the impact of AI assistance implementation

The data engineering profession is evolving rapidly, and those who embrace AI assistance while maintaining high standards for quality and reliability will find themselves at a significant competitive advantage. The future belongs to data engineers who can effectively collaborate with AI tools to deliver faster, more reliable, and more innovative data solutions.

The revolution is not about replacing human expertise; it is about amplifying it. As AI copilots handle the routine and repetitive tasks, data engineers are freed to focus on architectural decisions, complex problem-solving, and strategic initiatives that drive real business value.
