The Evolution of Data Architecture: From Traditional Warehouses to Modern Lakehouse Patterns – A Complete Design Guide for 2025

Introduction

The data architecture landscape is undergoing its most significant transformation since the advent of data warehousing in the 1980s. Organizations today face an unprecedented challenge: managing exponentially growing data volumes while supporting both traditional business intelligence and modern AI workloads on a single, cost-effective platform.

The Challenge: Traditional two-tier architectures with separate data lakes and warehouses result in duplicate data, extra infrastructure costs, security challenges, and significant operational overhead. Meanwhile, the demand for real-time insights and AI-driven decision-making is propelling organizations toward unified analytics platforms.

The Solution: Modern data architecture patterns, particularly the emerging data lakehouse paradigm, promise to bridge this gap by combining the best of both worlds—the flexibility and cost-efficiency of data lakes with the performance and reliability of data warehouses.

Who should read this? Data Engineers, Data Architects, Analytics Engineers, and Technology Leaders responsible for designing scalable data platforms that support both traditional BI and modern AI workloads.

What you’ll learn: How to evaluate and implement modern data architecture patterns, understand the trade-offs between different approaches, and design future-proof data platforms that can evolve with your organization’s needs.

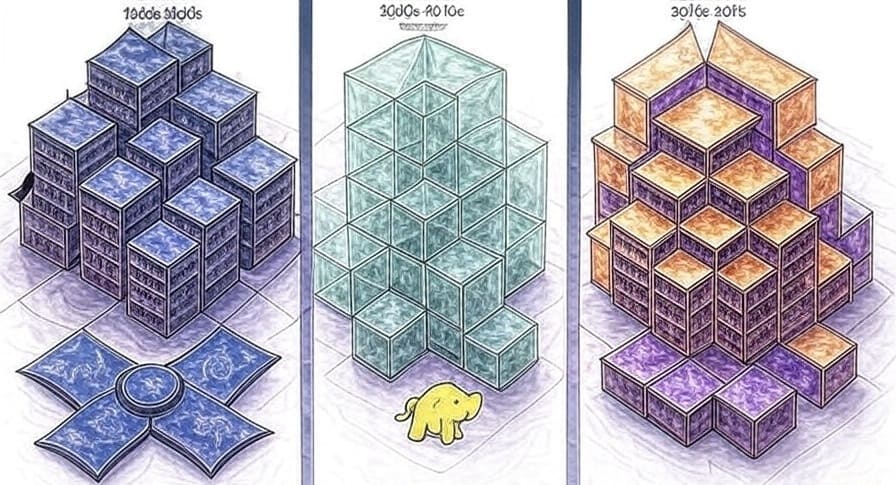

The Historical Context: Understanding Architecture Evolution

The Traditional Data Warehouse Era (1980s-2000s)

The data warehouse concept, pioneered by Bill Inmon and Ralph Kimball, established the foundation for enterprise analytics. Traditional warehouses featured:

- Structured Data Focus: Designed primarily for relational, structured data

- ETL-Heavy Processing: Extract, Transform, Load processes that cleaned and standardized data before storage

- Dimensional Modeling: Star and snowflake schemas optimized for analytical queries

- High Performance: Excellent query performance for structured reporting and BI

Architecture Characteristics:

-- Traditional data warehouse pattern

-- Fact table surrounded by dimension tables

CREATE TABLE fact_sales (

date_key INT,

product_key INT,

customer_key INT,

revenue DECIMAL(10,2),

quantity INT

);

-- Optimized for structured reporting

SELECT

d.year,

p.category,

SUM(f.revenue) as total_revenue

FROM fact_sales f

JOIN dim_date d ON f.date_key = d.date_key

JOIN dim_product p ON f.product_key = p.product_key

GROUP BY d.year, p.category;
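
To see the star-schema rollup run end to end, the following sketch loads the same fact-and-dimension layout into an in-memory SQLite database using only Python's standard library. The `dim_date` and `dim_product` definitions and all sample rows are illustrative assumptions; the original shows only `fact_sales` and the query.

```python
import sqlite3


def build_sample_warehouse():
    """Create a tiny star schema in memory (sample data is made up)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER);
        CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);
        CREATE TABLE fact_sales (
            date_key INTEGER, product_key INTEGER, customer_key INTEGER,
            revenue REAL, quantity INTEGER
        );
        """
    )
    conn.executemany("INSERT INTO dim_date VALUES (?, ?)",
                     [(20240101, 2024), (20250101, 2025)])
    conn.executemany("INSERT INTO dim_product VALUES (?, ?)",
                     [(1, "books"), (2, "games")])
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?)",
        [
            (20240101, 1, 10, 20.0, 2),
            (20240101, 1, 11, 30.0, 3),
            (20240101, 2, 10, 15.0, 1),
            (20250101, 2, 12, 40.0, 4),
        ],
    )
    return conn


def revenue_by_year_and_category(conn):
    """Join the fact table to two dimensions and aggregate revenue."""
    return conn.execute(
        """
        SELECT d.year, p.category, SUM(f.revenue) AS total_revenue
        FROM fact_sales f
        JOIN dim_date d ON f.date_key = d.date_key
        JOIN dim_product p ON f.product_key = p.product_key
        GROUP BY d.year, p.category
        ORDER BY d.year, p.category
        """
    ).fetchall()


conn = build_sample_warehouse()
rows = revenue_by_year_and_category(conn)
```

The fact table holds only keys and measures; every descriptive attribute used for grouping comes from a dimension table, which is what keeps the reporting query short.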

The Big Data Revolution: Enter Data Lakes (2000s-2010s)

As organizations grappled with the “3 Vs” of big data—Volume, Velocity, and Variety—traditional warehouses showed limitations:

Challenges with Traditional Warehouses:

- Expensive scaling for massive data volumes

- Inability to handle unstructured data (logs, images, IoT streams)

- Rigid schemas that couldn’t adapt to changing data formats

- Time-consuming ETL processes that created data staleness

The Data Lake Response: Data lakes emerged as a solution, offering:

- Schema-on-Read: Store raw data first, apply structure later

- Multi-Format Support: Handle structured, semi-structured, and unstructured data

- Cost-Effective Storage: Leverage low-cost object storage (S3, ADLS)

- Processing Flexibility: Support batch and stream processing workloads

The Current Challenge: Managing Two-Tier Complexity

Most organizations today operate a two-tier architecture where data is ETL’d from operational databases into a data lake, then a subset is ETL’d again into a data warehouse for business intelligence. This creates:

Operational Challenges:

- Data Duplication: Same data stored in multiple systems

- Pipeline Complexity: Multiple ETL processes to maintain

- Data Freshness Issues: Delays between data generation and availability

- Governance Overhead: Managing security and compliance across systems

- Cost Escalation: Infrastructure and operational expenses for multiple platforms

Modern Data Architecture Patterns: A Comprehensive Analysis

Pattern 1: Modern Data Warehouse (MDW)

A Modern Data Warehouse architecture combines a data lake for storing raw data with a relational data warehouse for serving structured and curated data to business users.

Architecture Components:

# Modern Data Warehouse Pattern

components:

data_lake:

storage: "Cloud object storage (S3, ADLS, GCS)"

formats: ["Parquet", "JSON", "Avro", "Delta"]

purpose: "Raw data storage and preparation"

data_warehouse:

engine: "Cloud MPP warehouse (Snowflake, Redshift, BigQuery)"

modeling: "Dimensional models (star/snowflake schemas)"

purpose: "Curated data for BI and reporting"

processing:

batch: "Spark, Databricks, Synapse"

streaming: "Kafka, Event Hubs, Pub/Sub"

orchestration: "Airflow, Data Factory, Prefect"

Implementation Example:

# Modern ETL pipeline using cloud-native tools

from pyspark.sql import SparkSession

from pyspark.sql.functions import col, current_timestamp

from delta.tables import DeltaTable

class ModernETLPipeline:

def __init__(self, spark_session):

self.spark = spark_session

def ingest_raw_data(self, source_path, target_path):

"""Ingest raw data into data lake"""

df = self.spark.read.json(source_path)

df.write.format("delta").mode("append").save(target_path)

def curate_for_warehouse(self, lake_path, warehouse_table):

"""Transform and load curated data to warehouse"""

raw_df = self.spark.read.format("delta").load(lake_path)

# Apply business transformations

curated_df = raw_df.select(

col("customer_id"),

col("order_date").cast("date"),

col("revenue").cast("decimal(10,2)"),

current_timestamp().alias("processed_at")

).filter(col("revenue") > 0)

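# Write to warehouse (Snowflake shown; other warehouses use their own connector and options)

# The connector also needs connection options (sfURL, sfUser, ...), assumed to be configured elsewhere

curated_df.write.format("snowflake").option("dbtable", warehouse_table).mode("append").save()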

When to Use MDW:

- Ideal for organizations handling relatively small volumes of data (typically <1TB) and those already familiar with relational data warehouses

- Strong BI and reporting requirements

- Structured data predominates

- Established ETL processes and dimensional modeling expertise

Advantages:

- Proven, well-understood patterns

- Excellent performance for structured analytics

- Strong ecosystem of BI tools

- Clear separation of concerns

Limitations:

- Limited scalability for AI and ML workloads

- Higher costs for massive data volumes

- Complexity of managing two separate systems

Pattern 2: Data Fabric Architecture

Data Fabric is an evolved form of the Modern Data Warehouse, enriched with technologies to support real-time processing, metadata catalogs, data virtualization, APIs, and governance tools.

Key Capabilities:

# Data Fabric Architecture Pattern

fabric_layers:

data_integration:

- real_time_streaming

- batch_processing

- change_data_capture

- api_connectivity

metadata_management:

- data_catalog

- lineage_tracking

- schema_registry

- business_glossary

data_virtualization:

- federated_queries

- data_abstraction

- semantic_layer

- unified_access

governance_security:

- policy_enforcement

- access_control

- data_classification

- compliance_monitoring

Implementation Approach:

# Data Fabric implementation with unified data access

class DataFabricLayer:

def __init__(self, catalog_service, governance_engine):

self.catalog = catalog_service

self.governance = governance_engine

def create_unified_view(self, data_sources):

"""Create federated view across multiple data sources"""

unified_schema = self.catalog.get_unified_schema(data_sources)

# Apply governance policies

filtered_schema = self.governance.apply_access_policies(

unified_schema,

user_context=self.get_current_user_context()

)

return self.create_virtual_dataset(filtered_schema)

def query_across_sources(self, sql_query):

"""Execute queries across distributed data sources"""

execution_plan = self.catalog.optimize_query_plan(sql_query)

return self.execute_federated_query(execution_plan)
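
The `DataFabricLayer` class above relies on catalog and governance services that are not shown. As a minimal, self-contained sketch of the same idea (a unified view with access policies applied before data is returned), the example below uses in-memory sources. `Source`, `ACCESS_POLICIES`, the role names, and the sample rows are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    """A registered data source; `rows` stands in for a remote system."""
    name: str
    rows: tuple


# Hypothetical policy table: role -> columns that role may read.
ACCESS_POLICIES = {
    "analyst": {"customer_id", "country"},
    "steward": {"customer_id", "country", "email"},
}


def create_unified_view(sources, role, policies=ACCESS_POLICIES):
    """Union rows across sources, keeping only columns the role may see."""
    allowed = policies.get(role, set())  # unknown roles see no columns
    view = []
    for source in sources:
        for row in source.rows:
            visible = {k: v for k, v in row.items() if k in allowed}
            if visible:
                visible["_source"] = source.name  # keep provenance for lineage
                view.append(visible)
    return view


crm = Source("crm", ({"customer_id": 1, "email": "a@example.com", "country": "DE"},))
web = Source("web_shop", ({"customer_id": 2, "email": "b@example.com", "country": "FR"},))

analyst_view = create_unified_view([crm, web], role="analyst")
```

A real data fabric would push the column filter down to each source instead of fetching rows first; the sketch only shows where the policy check sits in the flow.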

When to Use Data Fabric:

- Suited for companies that must integrate and analyze a wide variety of data sources differing in size, speed, and format

- Complex, distributed data environments

- Strong governance and compliance requirements

- Need for real-time data access and processing

Pattern 3: Data Lakehouse Architecture

A data lakehouse is a new, open data management architecture that combines the flexibility, cost-efficiency, and scale of data lakes with the data management and ACID transactions of data warehouses.

Core Architecture Layers:

Data lakehouse architecture consists of five key layers: data ingestion, data storage, metadata, API, and data consumption:

- Ingestion Layer: Extracts data from various sources into the lake

- Storage Layer: Low-cost object storage with open file formats

- Metadata Layer: Manages schemas and provides ACID transactions

- API Layer: Enables tool connectivity and real-time processing

- Consumption Layer: Supports BI, ML, and analytics workloads

Technical Implementation:

# Data Lakehouse implementation with Delta Lake

from delta import DeltaTable

from pyspark.sql import SparkSession

from pyspark.sql.functions import *

class LakehouseArchitecture:

def __init__(self, spark_session, storage_path):

self.spark = spark_session

self.storage_path = storage_path

def create_bronze_layer(self, source_data):

"""Raw data ingestion layer"""

source_data.write.format("delta").mode("append").save(

f"{self.storage_path}/bronze/raw_events"

)

def create_silver_layer(self):

"""Cleaned and validated data layer"""

bronze_df = self.spark.read.format("delta").load(

f"{self.storage_path}/bronze/raw_events"

)

# Data quality transformations

silver_df = bronze_df.filter(

col("event_time").isNotNull() &

col("user_id").isNotNull()

).withColumn(

"processed_timestamp", current_timestamp()

).dropDuplicates(["event_id"])

silver_df.write.format("delta").mode("append").save(

f"{self.storage_path}/silver/validated_events"

)

def create_gold_layer(self):

"""Business-ready aggregated data layer"""

silver_df = self.spark.read.format("delta").load(

f"{self.storage_path}/silver/validated_events"

)

# Business aggregations

gold_df = silver_df.groupBy(

window(col("event_time"), "1 hour"),

col("user_segment")

).agg(

count("*").alias("event_count"),

countDistinct("user_id").alias("unique_users"),

avg("session_duration").alias("avg_session_duration")

)

gold_df.write.format("delta").mode("append").save(

f"{self.storage_path}/gold/user_engagement_metrics"

)

def enable_time_travel(self, table_path, version):

"""Leverage ACID capabilities for data versioning"""

return self.spark.read.format("delta").option(

"versionAsOf", version

).load(table_path)
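
Time travel follows from keeping an ordered log of commits: reading "as of" a version means replaying the log only up to that commit. The toy class below sketches that idea in plain Python. It is a simplification, not Delta Lake's actual implementation (whose log records file-level add and remove actions), and `VersionedTable` is a hypothetical name.

```python
class VersionedTable:
    """Append-only table where every append creates a new version."""

    def __init__(self):
        self._commits = []  # commit i holds the rows added in version i

    def append(self, rows):
        """Record a new commit and return its version number."""
        self._commits.append(tuple(rows))
        return len(self._commits) - 1

    def read(self, version_as_of=None):
        """Return all rows visible at `version_as_of` (latest if omitted)."""
        if version_as_of is None:
            version_as_of = len(self._commits) - 1  # -1 for an empty table
        elif not 0 <= version_as_of < len(self._commits):
            raise ValueError(f"unknown version {version_as_of}")
        return [row for commit in self._commits[: version_as_of + 1] for row in commit]


events = VersionedTable()
v0 = events.append([{"event_id": 1}])
v1 = events.append([{"event_id": 2}, {"event_id": 3}])

snapshot_v0 = events.read(version_as_of=v0)
latest = events.read()
```

Because earlier commits are never modified, reading an old version stays reproducible until the underlying data is physically cleaned up.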

Medallion Architecture Pattern: The medallion architecture uses a bronze layer for raw data, a silver layer for validated and deduplicated data, and a gold layer for highly refined, business-ready data.

-- Example of medallion architecture queries

-- Bronze to Silver transformation

CREATE OR REPLACE VIEW silver_customer_events AS

SELECT

customer_id,

event_type,

event_timestamp,

purchase_amount

FROM (

SELECT

customer_id,

event_type,

event_timestamp,

CASE

WHEN event_type = 'purchase' THEN event_value

ELSE 0

END as purchase_amount,

ROW_NUMBER() OVER (

PARTITION BY customer_id, event_timestamp

ORDER BY ingestion_time DESC

) as rn

FROM bronze_raw_events

WHERE customer_id IS NOT NULL

AND event_timestamp IS NOT NULL

) ranked

-- A window result cannot be referenced in the WHERE clause of the SELECT that computes it

WHERE rn = 1;

-- Silver to Gold aggregation

CREATE OR REPLACE VIEW gold_customer_metrics AS

SELECT

customer_id,

DATE_TRUNC('month', event_timestamp) as month,

COUNT(*) as total_events,

SUM(purchase_amount) as monthly_revenue,

AVG(purchase_amount) as avg_purchase_value

FROM silver_customer_events

WHERE event_type = 'purchase'

GROUP BY customer_id, DATE_TRUNC('month', event_timestamp);
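
The same bronze-to-silver-to-gold flow can be traced on a handful of rows in plain Python, which makes the deduplication rule (keep the most recently ingested row per customer and event timestamp) easy to check by hand. The sample rows are invented, and timestamps are assumed to be ISO-8601 strings.

```python
from collections import defaultdict


def bronze_to_silver(bronze_rows):
    """Drop rows with missing keys and keep the latest-ingested duplicate."""
    latest = {}
    for row in bronze_rows:
        if row.get("customer_id") is None or row.get("event_timestamp") is None:
            continue
        key = (row["customer_id"], row["event_timestamp"])
        if key not in latest or row["ingestion_time"] > latest[key]["ingestion_time"]:
            latest[key] = row
    return [
        {
            "customer_id": r["customer_id"],
            "event_type": r["event_type"],
            "event_timestamp": r["event_timestamp"],
            "purchase_amount": r["event_value"] if r["event_type"] == "purchase" else 0,
        }
        for r in latest.values()
    ]


def silver_to_gold(silver_rows):
    """Aggregate purchases per customer and calendar month."""
    buckets = defaultdict(list)
    for r in silver_rows:
        if r["event_type"] == "purchase":
            month = r["event_timestamp"][:7]  # 'YYYY-MM' prefix of an ISO timestamp
            buckets[(r["customer_id"], month)].append(r["purchase_amount"])
    return {
        key: {
            "total_events": len(amounts),
            "monthly_revenue": sum(amounts),
            "avg_purchase_value": sum(amounts) / len(amounts),
        }
        for key, amounts in buckets.items()
    }


bronze = [
    {"customer_id": "c1", "event_type": "purchase", "event_timestamp": "2025-01-05T10:00:00", "event_value": 100, "ingestion_time": 1},
    {"customer_id": "c1", "event_type": "purchase", "event_timestamp": "2025-01-05T10:00:00", "event_value": 120, "ingestion_time": 2},
    {"customer_id": "c1", "event_type": "purchase", "event_timestamp": "2025-01-20T09:00:00", "event_value": 80, "ingestion_time": 3},
    {"customer_id": "c1", "event_type": "page_view", "event_timestamp": "2025-01-21T09:00:00", "event_value": 5, "ingestion_time": 4},
    {"customer_id": None, "event_type": "purchase", "event_timestamp": "2025-01-22T09:00:00", "event_value": 50, "ingestion_time": 5},
]

silver = bronze_to_silver(bronze)
gold = silver_to_gold(silver)
```

The re-ingested purchase (120) replaces the earlier copy (100), so January revenue for `c1` is 200 across two purchases; without the silver-layer deduplication it would have been overstated.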

Advanced Lakehouse Features:

# Advanced lakehouse capabilities

class AdvancedLakehouseOperations:

def __init__(self, spark_session, event_schema):

self.spark = spark_session

self.event_schema = event_schema # StructType describing the Kafka message payload

def setup_streaming_ingestion(self):

"""Real-time data ingestion with structured streaming"""

streaming_df = self.spark.readStream.format("kafka").option(

"kafka.bootstrap.servers", "localhost:9092"

).option("subscribe", "user_events").load()

parsed_df = streaming_df.select(

from_json(col("value").cast("string"), self.event_schema).alias("data")

).select("data.*")

query = parsed_df.writeStream.format("delta").outputMode(

"append"

).option("checkpointLocation", "/tmp/checkpoint").trigger(

processingTime="10 seconds"

).start("/lakehouse/bronze/streaming_events")

return query

def implement_data_versioning(self, table_path):

"""Implement data versioning and rollback capabilities"""

delta_table = DeltaTable.forPath(self.spark, table_path)

# Get table history

history_df = delta_table.history()

# Rollback to previous version if needed

delta_table.restoreToVersion(history_df.collect()[1]['version'])

def optimize_storage(self, table_path):

"""Optimize storage with compaction and Z-ordering"""

delta_table = DeltaTable.forPath(self.spark, table_path)

# Compact small files

delta_table.optimize().executeCompaction()

# Z-order for query performance

delta_table.optimize().executeZOrderBy("customer_id", "event_date")

When to Use Data Lakehouse:

- Best for unified analytics platforms, scalable AI workloads, and mixed data types

- Organizations need both BI and ML capabilities

- Cost optimization is important

- Requirement for real-time and batch processing

- Good rule of thumb: “Use it until you can’t.” When performance or governance needs outgrow the lakehouse, offload specific datasets to a relational data warehouse (RDW)

Technology Stack Considerations

Cloud Platform Capabilities

AWS Lakehouse Stack:

aws_lakehouse:

storage: "Amazon S3"

catalog: "AWS Glue Data Catalog"

processing: "Amazon EMR, AWS Glue"

warehouse: "Amazon Redshift"

analytics: "Amazon Athena"

ml_platform: "Amazon SageMaker"

governance: "AWS Lake Formation"

Azure Synapse Analytics: When integrated with Azure Data Lake, Azure Synapse Analytics delivers many features of a data lakehouse architecture as a fully managed, petabyte-scale cloud data warehouse designed for large-scale data storage and analysis.

Databricks Platform: Databricks is at the forefront of the data lakehouse movement with Delta Lake, an open-format storage layer designed to bring reliability, security, and performance to data lakes.

Open Source Technologies

Delta Lake Implementation:

# Setting up Delta Lake for ACID transactions

from delta.tables import DeltaTable

from pyspark.sql.functions import *

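# Create Delta table with schema enforcement

# Partition on a generated DATE column: partitioning on the raw TIMESTAMP would create one partition per distinct timestamp

delta_table = DeltaTable.create(spark)\

.tableName("customer_transactions")\

.addColumn("transaction_id", "STRING")\

.addColumn("customer_id", "STRING")\

.addColumn("amount", "DECIMAL(10,2)")\

.addColumn("transaction_date", "TIMESTAMP")\

.addColumn("transaction_day", "DATE", generatedAlwaysAs="CAST(transaction_date AS DATE)")\

.partitionedBy("transaction_day")\

.execute()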

# Perform upsert (merge) operations

delta_table.alias("target").merge(

new_transactions.alias("source"),

"target.transaction_id = source.transaction_id"

).whenMatchedUpdate(set={

"amount": "source.amount",

"transaction_date": "source.transaction_date"

}).whenNotMatchedInsert(values={

"transaction_id": "source.transaction_id",

"customer_id": "source.customer_id",

"amount": "source.amount",

"transaction_date": "source.transaction_date"

}).execute()
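
The semantics of the merge above (update selected columns when the key matches, insert the whole row otherwise) can be checked on plain dictionaries. This is a sketch of the behaviour only, not of how Delta Lake executes a merge; `merge_upsert` and the sample rows are hypothetical.

```python
def merge_upsert(target_rows, source_rows, key, update_columns):
    """Return target rows after applying an upsert from source rows."""
    merged = {row[key]: dict(row) for row in target_rows}
    for row in source_rows:
        if row[key] in merged:
            # Matched: overwrite only the listed columns, leave the rest alone.
            for column in update_columns:
                merged[row[key]][column] = row[column]
        else:
            # Not matched: insert the full source row.
            merged[row[key]] = dict(row)
    return list(merged.values())


target = [
    {"transaction_id": "t1", "customer_id": "c1", "amount": 10, "transaction_date": "2025-01-01"},
]
source = [
    {"transaction_id": "t1", "customer_id": "c9", "amount": 12, "transaction_date": "2025-01-02"},
    {"transaction_id": "t2", "customer_id": "c2", "amount": 7, "transaction_date": "2025-01-02"},
]

result = merge_upsert(
    target, source, key="transaction_id",
    update_columns=("amount", "transaction_date"),
)
```

Note that `customer_id` on the matched row stays `c1`: as in the merge above, only `amount` and `transaction_date` are listed in the update clause.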

Apache Iceberg for Large-Scale Analytics:

-- Creating Iceberg tables for petabyte-scale analytics

CREATE TABLE customer_events (

event_id BIGINT,

customer_id STRING,

event_type STRING,

event_timestamp TIMESTAMP,

event_data MAP<STRING, STRING>

) USING ICEBERG

PARTITIONED BY (days(event_timestamp))

TBLPROPERTIES (

'write.format.default' = 'parquet',

'write.parquet.compression-codec' = 'snappy'

);

-- Time travel queries with Iceberg

SELECT * FROM customer_events

FOR SYSTEM_TIME AS OF '2025-01-01 00:00:00';

Architecture Decision Framework

Evaluation Criteria Matrix

| Criteria | Modern DW | Data Fabric | Data Lakehouse | Weight |

|---|---|---|---|---|

| Cost Efficiency | ⭐⭐⭐ | ⭐⭐ | ⭐⭐⭐⭐⭐ | High |

| BI Performance | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐ | High |

| ML/AI Support | ⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ | High |

| Real-time Capability | ⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ | Medium |

| Data Governance | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ | High |

| Ease of Implementation | ⭐⭐⭐⭐⭐ | ⭐⭐ | ⭐⭐⭐ | Medium |

| Freedom from Vendor Lock-in | ⭐⭐ | ⭐⭐ | ⭐⭐⭐⭐ | Medium |
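
One way to turn the matrix into a single number per architecture is a weighted sum of the star ratings. The sketch below assumes that more stars is better in every row and maps the weight labels to numbers (High = 3, Medium = 2); that mapping is an illustrative assumption, not part of the matrix.

```python
# Assumed numeric weights for the "Weight" column.
WEIGHTS = {"High": 3, "Medium": 2}

ARCHITECTURES = ("modern_dw", "data_fabric", "data_lakehouse")

# criterion: (weight label, stars for each architecture in ARCHITECTURES order)
MATRIX = {
    "cost_efficiency": ("High", 3, 2, 5),
    "bi_performance": ("High", 5, 4, 4),
    "ml_ai_support": ("High", 2, 4, 5),
    "real_time_capability": ("Medium", 2, 5, 4),
    "data_governance": ("High", 4, 5, 3),
    "implementation": ("Medium", 5, 2, 3),
    "vendor_lock_in": ("Medium", 2, 2, 4),
}


def weighted_totals(matrix, weights):
    """Sum weight * stars per architecture across all criteria."""
    totals = dict.fromkeys(ARCHITECTURES, 0)
    for weight_label, *stars in matrix.values():
        for architecture, star_count in zip(ARCHITECTURES, stars):
            totals[architecture] += weights[weight_label] * star_count
    return totals


totals = weighted_totals(MATRIX, WEIGHTS)
```

With these assumed weights the totals are 60, 63, and 73 out of a possible 90. The ranking is sensitive to the weights, so an organization that weights governance above cost may well reach a different ordering.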

Decision Tree Framework

# Architecture decision framework

class ArchitectureDecisionEngine:

def __init__(self, requirements):

self.requirements = requirements

def recommend_architecture(self):

score_mdw = self.score_modern_warehouse()

score_fabric = self.score_data_fabric()

score_lakehouse = self.score_lakehouse()

recommendations = {

'modern_warehouse': score_mdw,

'data_fabric': score_fabric,

'data_lakehouse': score_lakehouse

}

return max(recommendations, key=recommendations.get)

def score_modern_warehouse(self):

score = 0

if self.requirements['data_volume'] < 1000: # GB

score += 30

if self.requirements['structured_data_pct'] > 80:

score += 25

if self.requirements['bi_focus'] > self.requirements['ml_focus']:

score += 25

if self.requirements['team_sql_expertise'] > 7:

score += 20

return score

def score_data_fabric(self):

score = 0

if self.requirements['data_sources'] > 10:

score += 30

if self.requirements['governance_requirements'] > 8:

score += 25

if self.requirements['real_time_needs'] > 7:

score += 25

if self.requirements['distributed_teams']:

score += 20

return score

def score_lakehouse(self):

score = 0

if self.requirements['data_variety'] > 7:

score += 30

if self.requirements['ml_focus'] >= self.requirements['bi_focus']:

score += 25

if self.requirements['cost_optimization'] > 8:

score += 25

if self.requirements['cloud_native_preference']:

score += 20

return score
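
To show how the scoring plays out for one concrete profile, the sketch below restates the same thresholds and point values as a rule table and runs them against a sample requirements dictionary. The sample values are invented; the rule-table layout is just a compact alternative to the three scoring methods.

```python
# Each rule is (predicate over the requirements dict, points awarded).
RULES = {
    "modern_warehouse": [
        (lambda r: r["data_volume"] < 1000, 30),  # GB
        (lambda r: r["structured_data_pct"] > 80, 25),
        (lambda r: r["bi_focus"] > r["ml_focus"], 25),
        (lambda r: r["team_sql_expertise"] > 7, 20),
    ],
    "data_fabric": [
        (lambda r: r["data_sources"] > 10, 30),
        (lambda r: r["governance_requirements"] > 8, 25),
        (lambda r: r["real_time_needs"] > 7, 25),
        (lambda r: r["distributed_teams"], 20),
    ],
    "data_lakehouse": [
        (lambda r: r["data_variety"] > 7, 30),
        (lambda r: r["ml_focus"] >= r["bi_focus"], 25),
        (lambda r: r["cost_optimization"] > 8, 25),
        (lambda r: r["cloud_native_preference"], 20),
    ],
}


def score_all(requirements):
    """Return the score of every architecture for the given requirements."""
    return {
        architecture: sum(points for check, points in rules if check(requirements))
        for architecture, rules in RULES.items()
    }


def recommend(requirements):
    """Return the highest-scoring architecture (first one wins on ties)."""
    scores = score_all(requirements)
    return max(scores, key=scores.get)


# Invented profile: large, varied data with an ML-leaning roadmap.
sample_requirements = {
    "data_volume": 5000, "structured_data_pct": 60,
    "bi_focus": 5, "ml_focus": 8, "team_sql_expertise": 8,
    "data_sources": 6, "governance_requirements": 7,
    "real_time_needs": 6, "distributed_teams": False,
    "data_variety": 8, "cost_optimization": 9,
    "cloud_native_preference": True,
}

scores = score_all(sample_requirements)
recommendation = recommend(sample_requirements)
```

Because every rule is a hard threshold, a profile just below several cut-offs (here, 6 data sources and governance at 7) scores zero for that pattern; treat the output as a conversation starter, not a verdict.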

Implementation Strategies and Best Practices

Migration Approach: Modernizing Existing Architectures

Phase 1: Assessment and Planning

# Data architecture assessment framework

class ArchitectureAssessment:

def analyze_current_state(self, existing_systems):

assessment = {

'data_volumes': self.measure_data_growth(),

'query_patterns': self.analyze_workloads(),

'cost_analysis': self.calculate_tco(),

'performance_metrics': self.benchmark_queries(),

'governance_gaps': self.audit_compliance()

}

return assessment

def create_migration_roadmap(self, assessment, target_architecture):

phases = []

# Phase 1: Foundational changes

phases.append({

'name': 'Foundation',

'duration': '3-6 months',

'activities': [

'Cloud storage setup',

'Data lake implementation',

'Basic ETL migration'

]

})

# Phase 2: Advanced capabilities

phases.append({

'name': 'Enhancement',

'duration': '6-12 months',

'activities': [

'Streaming ingestion',

'ML pipeline deployment',

'Advanced analytics enablement'

]

})

return phases

Phase 2: Parallel Implementation

-- Implementing parallel data flows during migration

-- Traditional warehouse load

INSERT INTO traditional_dw.fact_sales

SELECT

date_id,

product_id,

customer_id,

SUM(revenue) as total_revenue

FROM staging.raw_transactions

GROUP BY date_id, product_id, customer_id;

-- Lakehouse parallel load

INSERT INTO lakehouse.bronze_transactions

SELECT * FROM staging.raw_transactions;

-- Data validation between systems

WITH traditional_summary AS (

SELECT SUM(total_revenue) as total FROM traditional_dw.fact_sales

),

lakehouse_summary AS (

SELECT SUM(revenue) as total FROM lakehouse.gold_sales_summary

)

SELECT

t.total as traditional_total,

l.total as lakehouse_total,

ABS(t.total - l.total) as variance

FROM traditional_summary t, lakehouse_summary l;
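
The validation query returns a raw variance, but a parallel run usually also needs a pass/fail decision. A minimal sketch of such a check follows; the relative tolerance of 0.01% is an arbitrary assumption, and `reconcile_totals` is a hypothetical helper.

```python
from decimal import Decimal


def reconcile_totals(traditional_total, lakehouse_total,
                     relative_tolerance=Decimal("0.0001")):
    """Compare two revenue totals and flag whether they agree within tolerance."""
    variance = abs(traditional_total - lakehouse_total)
    baseline = max(abs(traditional_total), abs(lakehouse_total))
    if baseline == 0:
        within = variance == 0  # both totals are zero
    else:
        within = (variance / baseline) <= relative_tolerance
    return {"variance": variance, "within_tolerance": within}


# Totals as they might come back from the two summary queries (made-up values).
close_match = reconcile_totals(Decimal("1000000.00"), Decimal("1000050.00"))
mismatch = reconcile_totals(Decimal("100.00"), Decimal("110.00"))
```

`Decimal` is used so that the comparison is not affected by binary floating-point rounding, which matters when the totals are monetary.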

Performance Optimization Strategies

Query Optimization in Lakehouse Architecture:

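# Advanced query optimization for lakehouse

from delta.tables import DeltaTable

from pyspark import StorageLevel

from pyspark.sql.functions import col, current_date, date_sub

class LakehouseOptimizer:

def __init__(self, spark_session):

self.spark = spark_session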

def optimize_delta_table(self, table_path, partition_cols, z_order_cols):

"""Optimize Delta tables for query performance"""

delta_table = DeltaTable.forPath(self.spark, table_path)

# Optimize file sizes and Z-order

delta_table.optimize().where(

f"date >= '{self.get_optimization_date()}'"

).executeZOrderBy(*z_order_cols)

# Vacuum old files (be careful with time travel requirements)

delta_table.vacuum(retentionHours=168) # 7 days

def implement_caching_strategy(self, frequently_accessed_tables):

"""Implement intelligent caching for hot data"""

for table in frequently_accessed_tables:

df = self.spark.read.format("delta").load(table['path'])

# Cache frequently accessed partitions

hot_partitions = df.filter(

col("date") >= date_sub(current_date(), 30)

)

hot_partitions.cache()

# Persist dimension tables in memory

if table['type'] == 'dimension':

df.persist(StorageLevel.MEMORY_AND_DISK)

Data Governance in Modern Architectures

Implementing Unified Governance:

# Comprehensive data governance framework

class ModernDataGovernance:

def __init__(self, catalog_service, policy_engine):

self.catalog = catalog_service

self.policy_engine = policy_engine

def implement_data_classification(self, table_metadata):

"""Automatically classify data based on content and metadata"""

classification_rules = {

'PII': ['email', 'ssn', 'phone', 'address'],

'FINANCIAL': ['salary', 'account', 'balance', 'payment'],

'SENSITIVE': ['medical', 'health', 'diagnosis']

}

for table in table_metadata:

table['classification'] = self.classify_columns(

table['columns'], classification_rules

)

self.catalog.update_table_metadata(table)

def enforce_access_policies(self, user_context, data_request):

"""Dynamic policy enforcement based on user context"""

policies = self.policy_engine.get_applicable_policies(

user_context['role'],

user_context['department'],

data_request['tables']

)

filtered_query = self.apply_row_level_security(

data_request['query'], policies

)

return self.apply_column_masking(filtered_query, policies)

def implement_lineage_tracking(self, pipeline_metadata):

"""Track data lineage across lakehouse layers"""

lineage_graph = {

'bronze_to_silver': self.extract_transformations(

pipeline_metadata['bronze_silver_jobs']

),

'silver_to_gold': self.extract_transformations(

pipeline_metadata['silver_gold_jobs']

),

'consumption': self.track_downstream_usage(

pipeline_metadata['analytics_jobs']

)

}

self.catalog.update_lineage_metadata(lineage_graph)
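
The framework calls `classify_columns` without showing it. One plausible, deliberately simple reading is keyword matching on column names, sketched below with the same rule dictionary. The `UNCLASSIFIED` label and the sample column names are assumptions added for illustration.

```python
CLASSIFICATION_RULES = {
    "PII": ["email", "ssn", "phone", "address"],
    "FINANCIAL": ["salary", "account", "balance", "payment"],
    "SENSITIVE": ["medical", "health", "diagnosis"],
}


def classify_columns(columns, rules):
    """Label each column with every class whose keyword appears in its name."""
    result = {}
    for column in columns:
        name = column.lower()
        labels = sorted(
            label
            for label, keywords in rules.items()
            if any(keyword in name for keyword in keywords)
        )
        # "UNCLASSIFIED" is a placeholder label assumed for this sketch.
        result[column] = labels or ["UNCLASSIFIED"]
    return result


classified = classify_columns(
    ["customer_email", "account_balance", "order_id", "home_address", "classname"],
    CLASSIFICATION_RULES,
)
```

Substring matching is cheap but imprecise: `classname` is tagged as PII here only because it happens to contain the letters "ssn". Name-based rules are therefore best treated as a first pass that content sampling or manual review then confirms.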

Real-World Implementation Case Studies

Case Study 1: Global Retail Chain Migration

Challenge: A global retail organization operating in 50+ countries needed to consolidate data from 200+ stores, e-commerce platforms, and supply chain systems to enable real-time inventory optimization and personalized customer experiences.

Previous Architecture Pain Points:

- 15+ separate data warehouses across regions

- 3-day latency for global inventory visibility

- $2M+ annual infrastructure costs

- Limited ML capabilities for demand forecasting

Solution: Lakehouse Implementation

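# Retail lakehouse architecture implementation

from pyspark.sql import Window

# Note: sum and max below are Spark column functions and shadow the Python builtins

from pyspark.sql.functions import avg, col, count, current_timestamp, dayofweek, expr, from_json, lag, max, month, sum

class RetailLakehouseImplementation: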

def __init__(self, spark_session):

self.spark = spark_session

self.bronze_path = "/lakehouse/bronze"

self.silver_path = "/lakehouse/silver"

self.gold_path = "/lakehouse/gold"

def ingest_store_data(self):

"""Real-time ingestion from 200+ stores"""

store_stream = self.spark.readStream.format("kafka").option(

"kafka.bootstrap.servers", "global-kafka-cluster:9092"

).option("subscribe", "store-transactions,inventory-updates").load()

# Process store transactions

transactions = store_stream.filter(

col("topic") == "store-transactions"

).select(

from_json(col("value").cast("string"), self.transaction_schema).alias("data")

).select("data.*")

# Write to bronze layer with partitioning

transactions.writeStream.format("delta").outputMode("append").option(

"checkpointLocation", "/checkpoints/store-transactions"

).partitionBy("country", "date").start(f"{self.bronze_path}/transactions")

def create_global_inventory_view(self):

"""Unified global inventory visibility"""

bronze_inventory = self.spark.read.format("delta").load(

f"{self.bronze_path}/inventory"

)

# Silver layer: Clean and standardize across regions

silver_inventory = bronze_inventory.select(

col("store_id"),

col("product_sku").alias("sku"),

col("quantity_on_hand").cast("integer"),

col("reserved_quantity").cast("integer"),

col("available_quantity").cast("integer"),

col("last_updated").cast("timestamp"),

col("country"),

col("region")

).filter(

(col("quantity_on_hand") >= 0) &

(col("last_updated") > current_timestamp() - expr("INTERVAL 1 DAY"))

)

silver_inventory.write.format("delta").mode("overwrite").save(

f"{self.silver_path}/standardized_inventory"

)

# Gold layer: Business-ready aggregations

global_availability = silver_inventory.groupBy(

"sku", "country", "region"

).agg(

sum("available_quantity").alias("total_available"),

count("store_id").alias("stores_with_stock"),

avg("available_quantity").alias("avg_stock_per_store"),

max("last_updated").alias("latest_update")

)

global_availability.write.format("delta").mode("overwrite").save(

f"{self.gold_path}/global_inventory_summary"

)

def enable_ml_demand_forecasting(self):

"""Enable ML workflows on unified data"""

historical_sales = self.spark.read.format("delta").load(

f"{self.gold_path}/sales_history"

)

# Feature engineering for ML

ml_features = historical_sales.select(

col("sku"),

col("store_id"),

col("sales_date"),

col("quantity_sold"),

col("revenue"),

dayofweek("sales_date").alias("day_of_week"),

month("sales_date").alias("month"),

lag("quantity_sold", 7).over(

Window.partitionBy("sku", "store_id").orderBy("sales_date")

).alias("sales_7_days_ago"),

avg("quantity_sold").over(

Window.partitionBy("sku", "store_id")

.orderBy("sales_date")

.rowsBetween(-30, -1)

).alias("avg_sales_30_days")

)

ml_features.write.format("delta").mode("overwrite").save(

f"{self.gold_path}/ml_demand_features"

)

Results Achieved:

- Real-time Inventory: Global inventory visibility latency reduced from 3 days to 15 minutes

- Cost Reduction: 60% infrastructure cost savings ($1.2M annually)

- ML Capabilities: Demand forecasting accuracy improved by 35%

- Operational Efficiency: Inventory turnover increased by 18%

Case Study 2: Financial Services Risk Analytics Platform

Challenge: A regional bank needed to implement real-time fraud detection, regulatory reporting, and customer risk scoring while maintaining strict compliance with financial regulations.

Architecture Solution:

# Financial services lakehouse with enhanced governance

class FinancialServicesLakehouse:

def __init__(self, spark_session, encryption_service):

self.spark = spark_session

self.encryption = encryption_service

self.audit_logger = AuditLogger()

def ingest_transaction_streams(self):

"""Secure real-time transaction processing"""

# Encrypted streaming ingestion

transaction_stream = self.spark.readStream.format("kafka").option(

"kafka.bootstrap.servers", "secure-kafka:9093"

).option("kafka.security.protocol", "SSL").option(

"subscribe", "customer-transactions"

).load()

# Decrypt and validate transactions

decrypted_transactions = transaction_stream.select(

self.encryption.decrypt_column(col("value")).alias("transaction_data")

).select(

from_json("transaction_data", self.secure_transaction_schema).alias("txn")

).select("txn.*")

# Real-time fraud scoring

fraud_scored = decrypted_transactions.withColumn(

"fraud_score",

self.calculate_fraud_score(

col("amount"), col("merchant_category"),

col("customer_id"), col("transaction_time")

)

).withColumn(

"risk_level",

when(col("fraud_score") > 0.8, "HIGH")

.when(col("fraud_score") > 0.5, "MEDIUM")

.otherwise("LOW")

)

# Write with encryption and audit

fraud_scored.writeStream.foreachBatch(

self.secure_write_with_audit

).start()

def implement_regulatory_reporting(self):

"""Automated regulatory compliance reporting"""

# BSA/AML reporting requirements

suspicious_patterns = self.spark.read.format("delta").load(

"/lakehouse/gold/transaction_analytics"

).filter(

(col("daily_cash_amount") > 10000) | # CTR threshold

(col("structuring_pattern_score") > 0.7) | # Potential structuring

(col("velocity_anomaly_score") > 0.8) # Unusual velocity

)

# Generate CTR (Currency Transaction Report) data from rows over the cash threshold only

ctr_data = suspicious_patterns.filter(col("daily_cash_amount") > 10000).select(

col("customer_id"),

col("transaction_date"),

col("total_cash_amount"),

col("transaction_count"),

col("filing_institution"),

current_timestamp().alias("report_generated_at")

).withColumn(

"report_id",

concat(lit("CTR_"), date_format(current_date(), "yyyyMMdd"), lit("_"),

monotonically_increasing_id().cast("string"))

)

# Encrypted storage for regulatory data

self.secure_write_regulatory_data(ctr_data, "/regulatory/ctr_reports")

def create_customer_risk_profiles(self):

"""ML-powered customer risk scoring"""

customer_features = self.spark.sql("""

WITH customer_behavior AS (

SELECT

customer_id,

COUNT(*) as transaction_count_90d,

SUM(amount) as total_amount_90d,

AVG(amount) as avg_transaction_amount,

STDDEV(amount) as amount_volatility,

COUNT(DISTINCT merchant_category) as merchant_diversity,

COUNT(DISTINCT DATE(transaction_time)) as active_days,

MAX(amount) as max_transaction_amount

FROM lakehouse_gold.transactions

WHERE transaction_time >= current_date() - INTERVAL 90 DAYS

GROUP BY customer_id

),

risk_indicators AS (

SELECT

customer_id,

CASE WHEN avg_transaction_amount > 5000 THEN 1 ELSE 0 END as high_avg_amount,

CASE WHEN amount_volatility > avg_transaction_amount * 2 THEN 1 ELSE 0 END as high_volatility,

CASE WHEN merchant_diversity < 3 THEN 1 ELSE 0 END as low_merchant_diversity,

CASE WHEN max_transaction_amount > 25000 THEN 1 ELSE 0 END as large_transaction_flag

FROM customer_behavior

)

SELECT

cb.*,

ri.high_avg_amount + ri.high_volatility +

ri.low_merchant_diversity + ri.large_transaction_flag as risk_score

FROM customer_behavior cb

JOIN risk_indicators ri ON cb.customer_id = ri.customer_id

""")

customer_features.write.format("delta").mode("overwrite").save(

"/lakehouse/gold/customer_risk_profiles"

)

Compliance and Security Features:

class FinancialDataGovernance:

def implement_field_level_encryption(self, sensitive_columns):

"""Implement field-level encryption for PII/PCI data"""

encryption_mapping = {

'account_number': 'AES256_DETERMINISTIC',

'ssn': 'AES256_RANDOMIZED',

'customer_name': 'AES256_DETERMINISTIC',

'address': 'AES256_RANDOMIZED'

}

for column, encryption_type in encryption_mapping.items():

if column in sensitive_columns:

self.apply_column_encryption(column, encryption_type)

def implement_data_retention_policies(self):

"""Automated data retention for regulatory compliance"""

retention_policies = {

'transaction_data': 2555, # 7 years in days

'customer_communications': 1095, # 3 years

'risk_assessments': 1825, # 5 years

'audit_logs': 2920 # 8 years

}

for data_type, retention_days in retention_policies.items():

cutoff_date = current_date() - expr(f"INTERVAL {retention_days} DAYS")

self.archive_old_data(data_type, cutoff_date)

def generate_audit_trails(self, data_access_event):

"""Comprehensive audit logging for regulatory compliance"""

audit_record = {

'timestamp': current_timestamp(),

'user_id': data_access_event['user_id'],

'data_classification': data_access_event['data_classification'],

'access_type': data_access_event['access_type'], # READ, WRITE, DELETE

'data_volume': data_access_event['record_count'],

'purpose': data_access_event['business_purpose'],

'approval_reference': data_access_event['approval_id']

}

self.write_audit_record(audit_record)
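
The retention periods above are expressed in days, so the cutoff for each data type is a plain date subtraction. The sketch below computes those cutoffs with the standard library; the reference date is arbitrary and `retention_cutoffs` is a hypothetical helper.

```python
from datetime import date, timedelta

# Same day counts as the retention policy above.
RETENTION_DAYS = {
    "transaction_data": 2555,         # 7 x 365
    "customer_communications": 1095,  # 3 x 365
    "risk_assessments": 1825,         # 5 x 365
    "audit_logs": 2920,               # 8 x 365
}


def retention_cutoffs(today, policies):
    """Return, per data type, the date before which data may be archived."""
    return {
        data_type: today - timedelta(days=days)
        for data_type, days in policies.items()
    }


cutoffs = retention_cutoffs(date(2025, 1, 1), RETENTION_DAYS)
```

Fixed day counts ignore leap days: for a reference date of 2025-01-01, the 2555-day cutoff is 2018-01-03, two days later than seven calendar years back. If a regulation is worded in calendar years, that gap means data could be archived slightly early, so the day counts may need a safety margin or a calendar-based calculation.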

Results Achieved:

- Real-time Fraud Detection: Reduced false positives by 45% while maintaining a 99.2% fraud detection rate

- Regulatory Compliance: Automated 90% of regulatory reporting workflows

- Cost Efficiency: 40% reduction in compliance-related manual processes

- Risk Management: Improved customer risk scoring accuracy by 30%

Performance Benchmarking and Optimization

Query Performance Comparison

Traditional Warehouse vs. Lakehouse Performance:

-- Complex analytical query performance comparison
-- Traditional warehouse approach
WITH sales_summary AS (
    SELECT
        d.year,
        d.quarter,
        p.category,
        c.segment,
        SUM(f.revenue) as total_revenue,
        COUNT(f.transaction_id) as transaction_count
    FROM fact_sales f
    JOIN dim_date d ON f.date_key = d.date_key
    JOIN dim_product p ON f.product_key = p.product_key
    JOIN dim_customer c ON f.customer_key = c.customer_key
    WHERE d.year BETWEEN 2023 AND 2025
    GROUP BY d.year, d.quarter, p.category, c.segment
),
growth_analysis AS (
    SELECT
        *,
        LAG(total_revenue) OVER (
            PARTITION BY category, segment
            ORDER BY year, quarter
        ) as prev_quarter_revenue,
        total_revenue / LAG(total_revenue) OVER (
            PARTITION BY category, segment
            ORDER BY year, quarter
        ) - 1 as growth_rate
    FROM sales_summary
)
SELECT * FROM growth_analysis
WHERE growth_rate > 0.1
ORDER BY growth_rate DESC;

-- Lakehouse approach with optimizations
WITH quarterly_sales AS (
    SELECT
        year(transaction_date) as year,
        quarter(transaction_date) as quarter,
        product_category as category,
        customer_segment as segment,
        SUM(revenue) as total_revenue,
        COUNT(*) as transaction_count
    FROM delta.`/lakehouse/gold/enriched_transactions`
    -- Range predicate on the raw column so file skipping and partition pruning can apply
    WHERE transaction_date >= '2023-01-01' AND transaction_date < '2026-01-01'
    GROUP BY year(transaction_date), quarter(transaction_date),
             product_category, customer_segment
),
growth_analysis AS (
    SELECT
        *,
        total_revenue / LAG(total_revenue) OVER (
            PARTITION BY category, segment
            ORDER BY year, quarter
        ) - 1 as growth_rate
    FROM quarterly_sales
)
-- Window functions are evaluated after HAVING, so the filter goes in an outer query
SELECT * FROM growth_analysis
WHERE growth_rate > 0.1
ORDER BY growth_rate DESC;
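Both queries compute quarter-over-quarter growth with LAG over a (category, segment) partition. As a rough illustration of what that window does, here is a small pure-Python sketch; the function name, the tuple layout, and the sample figures are assumptions made for the example and are not taken from any real dataset.

```python
from collections import defaultdict


def quarter_over_quarter_growth(rows, min_growth=0.1):
    """rows: iterable of (category, segment, year, quarter, total_revenue)."""
    # PARTITION BY category, segment
    partitions = defaultdict(list)
    for category, segment, year, quarter, revenue in rows:
        partitions[(category, segment)].append((year, quarter, revenue))

    results = []
    for (category, segment), series in partitions.items():
        series.sort()    # ORDER BY year, quarter
        previous = None  # LAG() is NULL on the first row of each partition
        for year, quarter, revenue in series:
            if previous:  # skip NULL and zero denominators
                growth = revenue / previous - 1
                if growth > min_growth:
                    results.append((category, segment, year, quarter, round(growth, 4)))
            previous = revenue
    # ORDER BY growth_rate DESC
    return sorted(results, key=lambda r: r[-1], reverse=True)


sample = [
    ("books", "retail", 2024, 1, 100.0),
    ("books", "retail", 2024, 2, 125.0),
    ("books", "retail", 2024, 3, 130.0),
    ("toys", "retail", 2024, 1, 200.0),
    ("toys", "retail", 2024, 2, 300.0),
]
growth = quarter_over_quarter_growth(sample)
print(growth)
```

The first quarter of each partition has no predecessor, so it never appears in the result; the SQL versions behave the same way because a NULL growth rate fails the `> 0.1` filter.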

Performance Optimization Techniques:

import time


class PerformanceOptimizer:
    # implement_time_partitioning, create_bloom_filters,
    # create_materialized_aggregations and classify_performance are
    # helpers that are not shown here.

    def __init__(self, spark_session):
        self.spark = spark_session

    def optimize_lakehouse_queries(self, table_configs):
        """Advanced performance tuning for lakehouse"""
        for table in table_configs:
            if table['platform'] == 'databricks':
                # Implement liquid clustering for Databricks
                self.spark.sql(f"""
                    ALTER TABLE {table['name']}
                    CLUSTER BY (customer_segment, product_category, transaction_date)
                """)
            elif table['type'] == 'time_series':
                # Optimize for time-series queries; liquid clustering is not
                # combined with partitioning, so only unclustered tables get it
                self.implement_time_partitioning(table)
            # Create bloom filters for high-cardinality columns
            if table['has_high_cardinality']:
                self.create_bloom_filters(table['high_cardinality_columns'])

    def implement_caching_strategy(self, workload_patterns):
        """Intelligent caching based on query patterns"""
        hot_data_threshold = 0.8  # 80% of queries access this data
        for pattern in workload_patterns:
            if pattern['access_frequency'] > hot_data_threshold:
                # Cache frequently accessed data in memory, spilling to local disk
                df = self.spark.read.format("delta").load(pattern['table_path'])
                df.cache()
                df.count()  # cache() is lazy; an action materializes it
            # Pre-aggregate common query patterns
            if pattern['aggregation_heavy']:
                self.create_materialized_aggregations(pattern)

    def benchmark_query_performance(self, test_queries):
        """Comprehensive performance benchmarking"""
        results = {}
        for query_name, query_sql in test_queries.items():
            df = self.spark.sql(query_sql)
            df.explain(mode="cost")  # Print the query plan before timing starts
            start_time = time.perf_counter()
            # Force execution; count() can skip unused columns, so treat the
            # timing as a lower bound for the full query
            result_count = df.count()
            execution_time = time.perf_counter() - start_time
            results[query_name] = {
                'execution_time_seconds': execution_time,
                'rows_processed': result_count,
                'performance_tier': self.classify_performance(execution_time)
            }
        return results
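A single timed run is noisy, so benchmark harnesses usually repeat each query and report a typical value. The sketch below shows that idea in plain Python with no Spark dependency; `classify_performance` and its thresholds are illustrative assumptions, since the guide does not define the performance tiers.

```python
import time
from statistics import median


def classify_performance(seconds, fast=1.0, acceptable=10.0):
    """Hypothetical tiers; the thresholds are illustrative, not from the guide."""
    if seconds < fast:
        return "fast"
    if seconds < acceptable:
        return "acceptable"
    return "slow"


def benchmark(workloads, repeats=3):
    """Time each zero-argument callable several times and report the median."""
    results = {}
    for name, run in workloads.items():
        timings = []
        rows = None
        for _ in range(repeats):
            start = time.perf_counter()
            rows = run()  # the callable returns the number of rows it produced
            timings.append(time.perf_counter() - start)
        typical = median(timings)
        results[name] = {
            "execution_time_seconds": typical,
            "rows_processed": rows,
            "performance_tier": classify_performance(typical),
        }
    return results


report = benchmark({"squares": lambda: len([i * i for i in range(1000)])})
print(report["squares"]["performance_tier"])
```

In a Spark setting each callable would wrap a query plus an action; the first repetition often includes warm-up costs, which is one reason to prefer the median over the mean.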

Emerging Trends and Future Architecture Patterns

AI-Native Data Architecture

Modern data architectures are evolving to be AI-first, with built-in capabilities for machine learning and generative AI workloads:

# AI-native lakehouse implementation
from pyspark.sql.functions import col, current_timestamp, expr


class AIOptimizedLakehouse:
    # generate_embeddings and tokenize_for_training are assumed to be
    # column-level helpers (for example UDFs) defined elsewhere.

    def __init__(self, spark_session, vector_store):
        self.spark = spark_session
        self.vector_store = vector_store  # For embeddings and similarity search

    def implement_vector_search_layer(self):
        """Native vector storage for AI workloads"""
        # Process unstructured data for embeddings
        document_df = self.spark.read.format("delta").load("/lakehouse/bronze/documents")
        # Generate embeddings using distributed processing
        embedded_docs = document_df.select(
            col("document_id"),
            col("content"),
            self.generate_embeddings(col("content")).alias("content_embedding"),
            col("metadata")
        )
        # Store embeddings alongside the source text in a Delta table
        embedded_docs.write.format("delta").mode("append").save(
            "/lakehouse/gold/vector_embeddings"
        )

    def enable_llm_training_pipelines(self):
        """Prepare data for LLM training and fine-tuning"""
        training_corpus = self.spark.sql("""
            SELECT
                document_id,
                CONCAT('Instruction: ', instruction, ' Response: ', response) as training_text,
                content_type,
                quality_score
            FROM lakehouse_gold.instruction_response_pairs
            WHERE quality_score > 0.8
              AND content_type IN ('technical_documentation', 'customer_support', 'product_info')
        """)
        # Tokenize and prepare for distributed training
        tokenized_data = training_corpus.select(
            col("document_id"),
            self.tokenize_for_training(col("training_text")).alias("tokens"),
            col("content_type").alias("domain_label")
        )
        # Save in format optimized for ML frameworks
        tokenized_data.write.format("parquet").mode("overwrite").save(
            "/lakehouse/ml/llm_training_data"
        )

    def implement_rag_architecture(self):
        """Retrieval-Augmented Generation support"""
        # Create searchable knowledge base
        knowledge_base = self.spark.read.format("delta").load(
            "/lakehouse/gold/structured_knowledge"
        )

        # Implement similarity search for RAG
        def retrieve_context(query_embedding, top_k=5):
            similar_docs = self.vector_store.similarity_search(
                query_embedding, k=top_k
            )
            return similar_docs

        # Expose the retriever so callers can use it at query time
        self.retrieve_context = retrieve_context

        # Restrict the knowledge base to recently updated content
        rag_pipeline = knowledge_base.select(
            col("content_id"),
            col("content_text"),
            col("content_embedding"),
            col("metadata"),
            col("last_updated")
        ).filter(
            col("last_updated") > current_timestamp() - expr("INTERVAL 30 DAYS")
        )
        return rag_pipeline
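The `similarity_search` call above is left to the vector store. To make the retrieval step concrete, here is a minimal brute-force sketch using cosine similarity over an in-memory index; it is an assumption about what such a store does conceptually, not the API of any particular product, and production systems typically use approximate nearest-neighbour indexes instead.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similarity_search(index, query_embedding, k=5):
    """index: dict of doc_id -> embedding. Returns the top-k (doc_id, score) pairs."""
    scored = [
        (doc_id, cosine_similarity(embedding, query_embedding))
        for doc_id, embedding in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy two-dimensional embeddings, invented for the example.
index = {"doc_a": [1.0, 0.0], "doc_b": [0.0, 1.0], "doc_c": [1.0, 1.0]}
top = similarity_search(index, [1.0, 0.1], k=2)
print([doc_id for doc_id, _ in top])
```

The retrieved documents would then be passed to the language model as context, which is the "retrieval" half of retrieval-augmented generation.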

Real-Time Streaming Architecture Patterns

The demand for real-time insights is driving architectures that blur the line between batch and streaming:

# Unified batch and streaming architecture
from pyspark.sql.functions import col, current_timestamp, from_json


class UnifiedStreamingLakehouse:
    # kafka_bootstrap_servers, events_topic, event_schema and cdc_schema are
    # assumed to be supplied by the deployment; calculate_user_score,
    # detect_anomalies and merge_cdc_changes are helpers not shown here.

    def __init__(self, spark_session):
        self.spark = spark_session
        self.checkpoint_path = "/lakehouse/checkpoints"

    def implement_kappa_architecture(self):
        """Single pipeline for both batch and streaming"""

        # Unified processing logic for batch and stream
        def process_events(df, batch_id=None):
            processed = df.select(
                col("event_id"),
                col("user_id"),
                col("event_type"),
                col("timestamp"),
                col("properties"),
                # Unified business logic
                self.calculate_user_score(col("properties")).alias("user_score"),
                self.detect_anomalies(col("event_type"), col("timestamp")).alias("is_anomaly")
            ).withColumn(
                "processing_time", current_timestamp()
            )
            # Write to same Delta table (handles both batch and streaming)
            processed.write.format("delta").mode("append").save(
                "/lakehouse/silver/processed_events"
            )

        # Streaming ingestion: the Kafka source needs brokers and a topic, and its
        # payload arrives as bytes in `value`, so parse it into columns first
        streaming_events = (
            self.spark.readStream.format("kafka")
            .option("kafka.bootstrap.servers", self.kafka_bootstrap_servers)
            .option("subscribe", self.events_topic)
            .load()
            .select(from_json(col("value").cast("string"), self.event_schema).alias("event"))
            .select("event.*")
        )
        streaming_query = (
            streaming_events.writeStream.foreachBatch(process_events)
            .option("checkpointLocation", f"{self.checkpoint_path}/processed_events")
            .trigger(processingTime="30 seconds")
            .start()
        )
        # Batch backfill using same logic
        historical_events = self.spark.read.format("parquet").load("/historical_data")
        process_events(historical_events)
        return streaming_query

    def implement_change_data_capture(self):
        """Real-time CDC for operational databases"""
        # Debezium-style CDC processing
        cdc_stream = (
            self.spark.readStream.format("kafka")
            .option("kafka.bootstrap.servers", self.kafka_bootstrap_servers)
            .option("subscribe", "mysql.retail_db.orders,mysql.retail_db.customers")
            .load()
        )
        # Parse CDC events
        parsed_cdc = cdc_stream.select(
            from_json(col("value").cast("string"), self.cdc_schema).alias("cdc")
        ).select(
            col("cdc.before").alias("before_image"),
            col("cdc.after").alias("after_image"),
            col("cdc.op").alias("operation"),  # c=create, u=update, d=delete
            col("cdc.ts_ms").alias("transaction_timestamp"),
            col("cdc.source.table").alias("source_table")
        )

        # Apply changes to lakehouse tables, one source table at a time
        def apply_cdc_changes(df, batch_id):
            for row in df.select("source_table").distinct().collect():
                table_changes = df.filter(col("source_table") == row['source_table'])
                self.merge_cdc_changes(table_changes, row['source_table'])

        cdc_query = (
            parsed_cdc.writeStream.foreachBatch(apply_cdc_changes)
            .option("checkpointLocation", f"{self.checkpoint_path}/cdc")
            .start()
        )
        return cdc_query
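The `merge_cdc_changes` step is not shown in this guide. As a rough sketch of the logic it needs, the example below applies Debezium-style change events to an in-memory table keyed by primary key; the function name, the `id` key column, and the sample events are assumptions for illustration. A lakehouse implementation would typically express the same upsert and delete rules as a MERGE against the target table.

```python
def apply_cdc_events(table, events, key="id"):
    """Apply change events to `table`, a dict of primary key -> row dict.

    Each event is a dict with `op`, `before`, `after` and `ts_ms`, mirroring
    the fields selected from the Debezium envelope.
    """
    # Apply in commit order; events may arrive out of order within a batch.
    for event in sorted(events, key=lambda e: e["ts_ms"]):
        op = event["op"]
        if op in ("c", "r", "u"):  # create, snapshot read, update: upsert the after-image
            row = event["after"]
            table[row[key]] = row
        elif op == "d":            # delete: remove by the before-image key
            table.pop(event["before"][key], None)
        else:
            raise ValueError(f"unknown CDC operation: {op!r}")
    return table


events = [
    {"op": "u", "ts_ms": 20, "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "shipped"}},
    {"op": "c", "ts_ms": 10, "before": None, "after": {"id": 1, "status": "new"}},
    {"op": "c", "ts_ms": 30, "before": None, "after": {"id": 2, "status": "new"}},
    {"op": "d", "ts_ms": 40, "before": {"id": 2, "status": "new"}, "after": None},
]
orders = apply_cdc_events({}, events)
print(orders)
```

Sorting by `ts_ms` matters: applying the update before the create would leave the row in its older state.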

Data Mesh Integration with Lakehouse

Data mesh principles can be layered on a lakehouse foundation, pairing domain ownership of data products with shared storage and governance:

# Data mesh implementation on lakehouse foundation
class DataMeshLakehouse:
    # GlobalDataCatalog and the policy helpers (classify_data_sensitivity,
    # merge_policies, apply_domain_governance and so on) are not shown here.

    def __init__(self, spark_session, domain_configs):
        self.spark = spark_session  # used by enable_cross_domain_analytics
        self.domains = domain_configs
        self.global_catalog = GlobalDataCatalog()

    def create_domain_data_products(self, domain_name):
        """Implement domain-driven data products"""
        domain_config = self.domains[domain_name]
        # Each domain manages its own data products
        domain_products = {
            'customer_360': {
                'path': f'/lakehouse/domains/{domain_name}/gold/customer_360',
                'schema': domain_config['customer_schema'],
                'sla': {'freshness': '15_minutes', 'quality': '99.5%'},
                'access_patterns': ['analytical', 'operational', 'ml_training']
            },
            'product_catalog': {
                'path': f'/lakehouse/domains/{domain_name}/gold/product_catalog',
                'schema': domain_config['product_schema'],
                'sla': {'freshness': '1_hour', 'quality': '99.9%'},
                'access_patterns': ['analytical', 'real_time_lookup']
            }
        }
        # Register data products in global catalog
        for product_name, product_config in domain_products.items():
            self.global_catalog.register_data_product(
                domain=domain_name,
                product=product_name,
                config=product_config
            )
        return domain_products

    def implement_federated_governance(self):
        """Federated governance with global policies"""
        global_policies = {
            'data_classification': self.classify_data_sensitivity(),
            'retention_policies': self.define_retention_requirements(),
            'access_controls': self.setup_rbac_policies(),
            'quality_standards': self.define_quality_metrics()
        }
        # Apply to all domains while allowing domain-specific extensions
        for domain_name, domain_config in self.domains.items():
            domain_policies = self.merge_policies(
                global_policies,
                domain_config['local_policies']
            )
            self.apply_domain_governance(domain_name, domain_policies)

    def enable_cross_domain_analytics(self):
        """Enable analytics across domain boundaries"""
        # Discover available data products
        available_products = self.global_catalog.discover_products(
            domains=['sales', 'marketing', 'finance'],
            data_types=['customer', 'transaction', 'product']
        )
        # Create cross-domain analytical views
        self.spark.sql("""
            CREATE OR REPLACE VIEW cross_domain_customer_journey AS
            SELECT
                s.customer_id,
                s.purchase_history,
                m.campaign_interactions,
                f.payment_preferences,
                s.clv_score,
                m.engagement_score,
                f.credit_risk_score
            FROM sales_domain.customer_360 s
            JOIN marketing_domain.customer_profiles m
                ON s.customer_id = m.customer_id
            JOIN finance_domain.customer_financials f
                ON s.customer_id = f.customer_id
            WHERE s.data_quality_score > 0.95
              AND m.profile_completeness > 0.8
              AND f.risk_assessment_date > current_date() - INTERVAL 90 DAYS
        """)
        # CREATE VIEW returns no rows, so read the view back for the caller
        return self.spark.table("cross_domain_customer_journey")
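The guide does not define `GlobalDataCatalog`. To show the register and discover calls end to end, here is a minimal in-memory stand-in; its internals are assumptions, and in particular matching `data_types` against product names by substring is a simplification chosen for this sketch. A real catalog would more likely match on explicit tags or schema metadata.

```python
class GlobalDataCatalog:
    """Hypothetical in-memory catalog, for illustration only."""

    def __init__(self):
        self._products = {}  # (domain, product) -> config

    def register_data_product(self, domain, product, config):
        # Copy the config so later changes by the domain do not leak into the catalog.
        self._products[(domain, product)] = dict(config)

    def discover_products(self, domains, data_types):
        """Return products in the given domains whose name mentions any data type."""
        matches = []
        for domain, product in sorted(self._products):
            if domain in domains and any(t in product for t in data_types):
                entry = {"domain": domain, "product": product}
                entry.update(self._products[(domain, product)])
                matches.append(entry)
        return matches


catalog = GlobalDataCatalog()
catalog.register_data_product("sales", "customer_360", {"sla": {"freshness": "15_minutes"}})
catalog.register_data_product("sales", "product_catalog", {"sla": {"freshness": "1_hour"}})
catalog.register_data_product("hr", "employee_directory", {"sla": {"freshness": "1_day"}})

found = catalog.discover_products(domains=["sales", "marketing"], data_types=["customer"])
print([entry["product"] for entry in found])
```

Keeping registration in the domains and discovery in a shared catalog is what lets consumers find data products without knowing where each domain stores them.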

Architecture Selection Decision Guide

Comprehensive Decision Framework

# Complete architecture decision engine
class ArchitectureDecisionFramework:
    # The assess_*, score_*, create_roadmap, assess_implementation_risks,
    # define_success_criteria and explain_recommendation helpers are not shown here.

    def __init__(self):
        self.evaluation_criteria = {
            'technical': ['data_volume', 'data_variety', 'query_complexity', 'real_time_needs'],
            'organizational': ['team_skills', 'change_tolerance', 'budget_constraints'],
            'strategic': ['ai_ml_priority', 'vendor_preference', 'compliance_requirements']
        }

    def comprehensive_assessment(self, organization_profile):
        """Complete organizational data architecture assessment"""
        technical_score = self.assess_technical_requirements(organization_profile)
        organizational_readiness = self.assess_organizational_factors(organization_profile)
        strategic_alignment = self.assess_strategic_priorities(organization_profile)
        recommendation = self.generate_recommendation(
            technical_score, organizational_readiness, strategic_alignment
        )
        return {
            'recommended_architecture': recommendation['primary'],
            'alternative_options': recommendation['alternatives'],
            'implementation_roadmap': self.create_roadmap(recommendation),
            'risk_assessment': self.assess_implementation_risks(recommendation),
            'success_metrics': self.define_success_criteria(recommendation)
        }

    def generate_recommendation(self, technical, organizational, strategic):
        """Score-based architecture recommendation"""
        scoring_matrix = {
            'modern_warehouse': self.score_modern_warehouse(technical, organizational, strategic),
            'data_fabric': self.score_data_fabric(technical, organizational, strategic),
            'data_lakehouse': self.score_data_lakehouse(technical, organizational, strategic),
            'hybrid_approach': self.score_hybrid_approach(technical, organizational, strategic)
        }
        # Sort by score, breaking ties on confidence; each entry is a
        # (architecture_name, {'score': ..., 'confidence': ...}) pair
        ranked_options = sorted(
            scoring_matrix.items(),
            key=lambda x: (x[1]['score'], x[1]['confidence']),
            reverse=True
        )
        return {
            'primary': ranked_options[0],
            'alternatives': ranked_options[1:3],
            'reasoning': self.explain_recommendation(ranked_options[0])
        }
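The `score_*` methods are left undefined above. One simple way to fill them in is a weighted average of per-criterion fit ratings, sketched below; the weights, the ratings, and the definition of confidence as the share of criteria actually rated are all assumptions made for this example rather than values from the guide.

```python
def score_option(fit, weights):
    """Weighted average of 0-1 fit ratings; unrated criteria count as 0."""
    total_weight = sum(weights.values())
    score = sum(fit.get(criterion, 0.0) * w for criterion, w in weights.items()) / total_weight
    # Confidence here is simply the share of criteria that were rated.
    confidence = sum(1 for criterion in weights if criterion in fit) / len(weights)
    return {"score": round(score, 3), "confidence": round(confidence, 3)}


def rank_architectures(fit_by_architecture, weights):
    """Rank options by score, breaking ties on confidence."""
    scoring_matrix = {
        name: score_option(fit, weights) for name, fit in fit_by_architecture.items()
    }
    ranked = sorted(
        scoring_matrix.items(),
        key=lambda item: (item[1]["score"], item[1]["confidence"]),
        reverse=True,
    )
    return {"primary": ranked[0], "alternatives": ranked[1:3]}


# Illustrative inputs only.
weights = {"data_variety": 0.4, "real_time_needs": 0.3, "team_skills": 0.3}
fit_by_architecture = {
    "modern_warehouse": {"data_variety": 0.4, "real_time_needs": 0.5, "team_skills": 0.9},
    "data_lakehouse": {"data_variety": 0.9, "real_time_needs": 0.8, "team_skills": 0.6},
    "data_fabric": {"data_variety": 0.7, "team_skills": 0.5},
}
recommendation = rank_architectures(fit_by_architecture, weights)
print(recommendation["primary"][0])
```

A weighted average is easy to explain to stakeholders, but it hides hard constraints; a compliance requirement that rules an option out entirely is better handled as a filter before scoring.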

Implementation Roadmap Template

Phase-Based Implementation Strategy:

# 18-month implementation roadmap
implementation_phases:
  phase_1_foundation:
    duration: "3-6 months"
    objectives:
      - "Establish cloud infrastructure"
      - "Implement basic data lake storage"
      - "Set up initial ETL pipelines"
    key_deliverables:
      - "Cloud storage configuration"
      - "Basic data ingestion framework"
      - "Data governance foundation"
    success_metrics:
      - "Data ingestion latency < 30 minutes"
      - "Storage cost reduction of 40%"
      - "Basic data quality monitoring"
  phase_2_enhancement:
    duration: "6-9 months"
    objectives:
      - "Implement advanced analytics capabilities"
      - "Deploy ML pipeline infrastructure"
      - "Enable real-time data processing"
    key_deliverables:
      - "Streaming data pipelines"
      - "ML model training infrastructure"
      - "Advanced data transformations"
    success_metrics:
      - "Real-time processing latency < 1 minute"
      - "ML model deployment time < 2 weeks"
      - "Data freshness improvement of 80%"
  phase_3_optimization:
    duration: "9-18 months"
    objectives:
      - "Optimize performance and costs"
      - "Implement advanced governance"
      - "Enable self-service analytics"
    key_deliverables:
      - "Automated optimization systems"
      - "Comprehensive data catalog"
      - "Self-service analytics platform"
    success_metrics:
      - "Query performance improvement of 50%"
      - "Self-service adoption rate > 70%"
      - "Governance compliance score > 95%"

Key Takeaways and Strategic Recommendations

Essential Insights for Data Leaders

1. Architecture Evolution is Inevitable: Organizations will need to evolve their data architectures to support both traditional BI and modern AI workloads. The question is not whether to modernize, but how quickly and effectively to do so.

2. Lakehouse is the Current Winner: For most organizations, data lakehouse architecture provides the best balance of cost, performance, and flexibility. It enables unified analytics while reducing the complexity of managing separate systems.

3. Open Standards Matter: Choose solutions built on open standards (Delta Lake, Apache Iceberg, Parquet) to avoid vendor lock-in and ensure long-term flexibility.

4. Governance Cannot be an Afterthought: Modern data architectures require governance by design, not as an add-on. Implement data classification, lineage tracking, and access controls from the beginning.

Actionable Recommendations

For Data Engineers:

- Master Multi-Engine Architectures: Develop expertise in both traditional data warehousing and modern lakehouse patterns

- Embrace Infrastructure as Code: Implement all data infrastructure using version-controlled, automated deployment processes

- Focus on Data Quality: Build data quality monitoring and validation into every pipeline

- Learn Vector Databases: Prepare for AI workloads by understanding vector storage and similarity search

For Data Architects:

- Design for Evolution: Create architectures that can adapt to changing requirements without complete rewrites

- Implement Federated Governance: Balance central control with domain autonomy using modern data mesh principles

- Plan for Real-Time: Design with a streaming-first mindset, even if current requirements are batch-oriented

- Optimize Costs: Implement automated cost monitoring and optimization from day one

For Technology Leaders:

- Invest in Team Skills: Modern architectures require new skills – invest in training and hiring

- Start Small, Scale Fast: Begin with pilot projects to prove value before enterprise-wide rollouts

- Partner Strategically: Choose technology partners that align with open standards and your long-term vision

- Measure Business Impact: Define clear metrics linking data architecture improvements to business outcomes

Future-Proofing Your Architecture

1. Prepare for AI-First Workloads: Modern architectures must natively support vector storage, embedding generation, and real-time inference.

2. Design for Real-Time: Critical business processes increasingly expect near-real-time data, in some cases with sub-second freshness.

3. Embrace Hybrid Patterns: Most organizations will use multiple architecture patterns for different use cases – design for this reality.

4. Invest in Data Observability: As data systems become more complex, observability becomes critical for maintaining reliability and performance.

Conclusion

The evolution from traditional data warehouses to modern lakehouse architectures represents more than a technology shift—it’s a fundamental reimagining of how organizations can unlock value from their data assets. As we’ve explored throughout this guide, the convergence of traditional data warehousing reliability with data lake flexibility creates unprecedented opportunities for unified analytics, AI-driven insights, and cost-effective scale.

The data architecture landscape will continue to evolve rapidly, driven by advances in cloud computing, artificial intelligence, and real-time processing capabilities. Organizations that embrace these modern patterns while maintaining strong governance and operational discipline will position themselves to thrive in an increasingly data-driven world.

The choice between modern data warehouse, data fabric, data lakehouse, or hybrid approaches ultimately depends on your organization’s specific requirements, constraints, and strategic objectives. However, the trend is clear: unified platforms that can support both traditional BI and modern AI workloads on a single, cost-effective infrastructure will become the new standard.

The Bottom Line: Start your modernization journey today, but do so thoughtfully. Assess your current state, define your target vision, and implement incrementally while building the organizational capabilities needed for long-term success. The future of data architecture is here—and it’s more accessible and powerful than ever before.

Remember: the best architecture is the one that evolves with your business needs while maintaining the reliability, performance, and governance standards your organization requires. Whether you’re managing terabytes or petabytes, supporting dozens or thousands of users, the principles and patterns outlined in this guide will help you build a data platform that drives real business value.

